- SAP Community

- Products and Technology

- CRM and Customer Experience

- CRM and CX Blogs by Members

- Run SAP Commerce (Hybris) on Google cloud using Ku...


ravi_avulapati


10-31-2019

8:53 PM

Introduction

This is the third and final part of the multi-part blog post series on the containerization and orchestration technologies Docker and Kubernetes with SAP Commerce (Hybris). The series aims to leverage Docker and Kubernetes to deploy and run SAP Commerce (Hybris) and Solr in master-slave mode on the cloud.

If you are interested in an introduction to aspects and the details of the custom recipe that makes creating Docker images easier, see the first part of this series.

If you are interested in creating the Hybris and HSQLDB Docker images, see the second part of this blog post series.

In this post, we look in detail at deploying SAP Commerce (Hybris) to the cloud.

This post assumes that you have knowledge of Docker, Kubernetes and SAP Commerce (Hybris). The platform version used in this blog post is SAP Commerce 1905, Solr version is 7.7. SAP Commerce 1905 requires Java 11 or above.

If you are new to Kubernetes, running the provided scripts as described should be enough; knowledge of Kubernetes will help you understand the technical nuances.

In this post, the terms SAP Commerce and Hybris are used interchangeably; they both mean the same thing.

What is Google Kubernetes Engine (GKE)?

From GKE documentation - Google Kubernetes Engine provides a managed environment for deploying, managing, and scaling your containerized applications using Google infrastructure. The GKE environment consists of multiple machines (specifically, Google Compute Engine instances) grouped together to form a cluster.

Simply put, GKE provides Kubernetes capabilities as part of the Google Cloud Platform (GCP) that can be used to orchestrate Docker containers.

Before we dive into GKE, let's see how to push images to a Docker repository, a.k.a. registry, from which the images are pulled into Google Kubernetes Engine (GKE).

Upload Docker images to an image repository

To deploy Hybris and HSQLDB on GKE, the Docker images created earlier must be accessible from GKE. For that, we need to upload (push) the images to a Docker registry such as Docker Hub, Google Container Registry, Amazon Elastic Container Registry, Azure Container Registry, or your company's Docker repository.

In this section, let's look at how to upload images to Docker Hub. The process is similar for other registries; please refer to the corresponding documentation.

As the first step, log in to Docker from the command line. (If you do not have an account, you can sign up at https://hub.docker.com or https://cloud.docker.com; Docker Hub allows one private repo with its free plan.)

$ docker login --username=dockerhubusername --password=password

$ docker tag local-image:tagname reponame:tagname

$ docker push reponame:tagname

Example:

Assume that the local image is 'valtech_b2c_spartacus_dockerized_platform', the registry repository is 'valtechus/sap-commerce-1905', and the tag identifying the feature/version is 'feature-xyz':

$ docker login --username=testuser --password=password

$ docker tag valtech_b2c_spartacus_dockerized_platform:feature-xyz valtechus/sap-commerce-1905:feature-xyz

$ docker push valtechus/sap-commerce-1905:feature-xyz

Now that we have uploaded the Docker images to a registry, let's move on to deploying the Hybris, DB, and Solr images to GKE.
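As a minimal sketch, the login, tag, and push steps above can be wrapped in one shell function that reads the password from an environment variable and passes it to `docker login --password-stdin`, so the password does not end up on the command line or in shell history. The function name `push_image` and the `DOCKER_PASSWORD` variable are illustrative assumptions, not part of the repository used in this post.

```shell
# Hypothetical helper (not part of the sap-commerce-gke repository):
# log in, tag a local image, and push it to a registry repository.
# The password comes from $DOCKER_PASSWORD and is piped to
# `docker login --password-stdin`, so it never appears in argv or history.
push_image() {
  local local_image="$1"   # e.g. valtech_b2c_spartacus_dockerized_platform
  local repo="$2"          # e.g. valtechus/sap-commerce-1905
  local tag="$3"           # e.g. feature-xyz
  local user="$4"          # Docker Hub user name

  [ -n "${DOCKER_PASSWORD:-}" ] || { echo "DOCKER_PASSWORD is not set" >&2; return 1; }

  printf '%s' "$DOCKER_PASSWORD" |
    docker login --username "$user" --password-stdin || return 1
  docker tag "$local_image:$tag" "$repo:$tag" || return 1
  docker push "$repo:$tag"
}
```

For example, `export DOCKER_PASSWORD='<password>'` followed by `push_image valtech_b2c_spartacus_dockerized_platform valtechus/sap-commerce-1905 feature-xyz testuser` performs the same login, tag, and push as the example above.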

Deploy to GKE

If you do not have a Google Cloud account, you need to sign up at https://console.cloud.google.com. You will be asked for a credit card for billing purposes.

To simplify the demonstration of each step, Kubernetes configuration and the corresponding shell scripts are made available here. We run the scripts as we walk through each step.

Overview of the deployment

Let's have a quick overview of the deployment steps before we go into each step -

- Create a project - A GCP project is the organizational unit that encapsulates the necessary services and access to them. All the tasks we perform are within the project scope.

- Create a cluster - This is where GKE runs its Kubernetes orchestration, by setting up a master node and worker nodes.

- Set up a secret that is needed to pull the Docker image from a private Docker registry. This is how GKE is able to access images in a private registry.

- Deploy HSQLDB on GKE

- Deploy Solr on GKE

- Deploy Hybris on GKE

- Expose the Hybris application through a Kubernetes LoadBalancer service.

- Walkthrough of configuration - By this point, the details may feel overwhelming; this section walks through the high-level details encountered so far.

- Deploy Hybris aspects on GKE.

- Manual and Automatic scaling

- Monitoring and Logging in GKE - A brief look at GKE's monitoring and logging capabilities.

- Cleanup - Finally, as an optional step, the script deletes the cluster to avoid accruing charges.

Looks like quite a number of steps? Two things: first, yes, that is expected when deploying a platform the size of SAP Commerce together with a DB and Solr. Second, don't worry too much; the scripts provided make it a lot easier. Most of the tasks above are done by executing a one-line script, and the rest is explanation. Let's dive right in!

Use Google Cloud Shell

Go to the Google Cloud Platform Console and activate Cloud Shell.

Upon activation of Cloud Shell, you should see something similar to the following.

Clone Kubernetes configuration, scripts

As mentioned above, Kubernetes configuration and scripts are included that make deployment of Hybris, DB, and Solr on GKE easier. Time to employ the scripts!

The git command line is available by default in Cloud Shell. Let's clone the repo and navigate to the right directory.

$ git clone https://bitbucket.org/valtechny/sap-commerce-gke.git

$ cd sap-commerce-gke

Now, update the YAML files in the 'sap-commerce' directory with your SAP Commerce image. Repeat the same for the YAML files under the 'hsqldb' directory, replacing the placeholder with your DB image.

This is not needed for Solr, because the configuration already references a custom image. For details about the Solr image, refer to this blog post. However, if you want to use a different Solr image, feel free to update the Solr configuration.

Before:

After (example):

Be sure to use the image that you created in the previous post and pushed to a registry as explained above. If you pushed the image to a public registry, GKE should be able to pull it without any further configuration. If you pushed it to a private registry, see below for how to set up secrets so that GKE can pull images from a private registry.
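If there are several manifests to edit, a sed substitution wrapped in a small function can rewrite the `image:` lines instead of editing each file by hand. This is a sketch under assumptions: the helper name `set_image` is hypothetical, and it assumes every `image:` line in the files you pass should point at the same image, so review the result (for example with `git diff`) before deploying.

```shell
# Hypothetical helper: replace the value of every `image:` line in the given
# YAML files, keeping the indentation (including "- image:" list items).
# Lines such as `imagePullPolicy:` are not touched.
set_image() {
  local new_image="$1"
  shift
  local file
  for file in "$@"; do
    # -i.bak works with both GNU and BSD sed; the backup is removed afterwards.
    sed -i.bak "s|^\([[:space:]-]*\)image:.*|\1image: ${new_image}|" "$file" || return 1
    rm -f "$file.bak"
  done
}
```

For example, `set_image valtechus/sap-commerce-1905:feature-xyz sap-commerce/*.yaml`, then the same with your DB image for `hsqldb/*.yaml`.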

Get billing account id

Before we begin setting up the project, let's retrieve the billing account ID, which needs to be associated with the project we are about to create. To get the billing account ID, run the command below in Cloud Shell.

$ gcloud alpha billing accounts list

The above command lists one or more billing accounts, whose IDs follow the pattern XXXXXX-XXXXXX-XXXXXX. Copy the ID of the billing account that you want to use for the sap-commerce project, which we create in the following section.

Setup project

Run the script below to set up the project. You can choose the zone and the project name prefix. The zone used in the script is 'us-east4-a'.

$ sh cluster/setup-project.sh "project name" "billing account id"

##example:

$ sh cluster/setup-project.sh valtech-sapcommerce XXXXXX-XXXXXX-XXXXXX

If the script runs without errors, a project named "valtech-sapcommerce" is created. You can ignore warnings during the project setup, but if you see errors, something went wrong; read the error message and fix the cause.
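For readers who want to see what such a setup involves, the following is a rough, hypothetical approximation using standard gcloud commands: create the project, link the billing account, set the default project and zone, and enable the Kubernetes Engine API. The function name and the exact sequence are assumptions; the actual cluster/setup-project.sh may differ.

```shell
# Hypothetical approximation of a GCP project setup for GKE;
# cluster/setup-project.sh in the repository may use different steps or flags.
setup_project() {
  local project_id="$1"          # e.g. valtech-sapcommerce (must be globally unique)
  local billing_account="$2"     # ID copied from the billing accounts list
  local zone="${3:-us-east4-a}"  # zone used throughout this post

  gcloud projects create "$project_id" || return 1
  # On older Cloud SDK releases this command lives under `gcloud beta billing`.
  gcloud billing projects link "$project_id" \
    --billing-account="$billing_account" || return 1
  gcloud config set project "$project_id" || return 1
  gcloud config set compute/zone "$zone" || return 1
  # GKE needs the Kubernetes Engine API enabled in the project.
  gcloud services enable container.googleapis.com
}
```

Usage would look like `setup_project valtech-sapcommerce <billing-account-id>`.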

Create cluster

Now that the project setup is complete, let's create a cluster within the project scope by running the script below. You can update the machine type and the number of nodes if you want a different configuration. The machine type used is n1-standard-8 (8 vCPUs, 30 GB memory), and the number of nodes is 3, which is the default.
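As a reference point, a cluster with the configuration described above could be created manually roughly as follows. This is a hypothetical sketch: the cluster name `sap-commerce` is a placeholder, and the repository's create-cluster.sh may pass additional flags.

```shell
# Rough, hypothetical manual equivalent of cluster/create-cluster.sh.
create_cluster() {
  local cluster_name="${1:-sap-commerce}"   # placeholder name
  local zone="${2:-us-east4-a}"

  # 3 nodes of type n1-standard-8 (8 vCPUs, 30 GB memory each).
  # The post's script also passes --enable-stackdriver-kubernetes; newer
  # Cloud SDK releases deprecate it in favour of --logging/--monitoring.
  gcloud container clusters create "$cluster_name" \
    --zone "$zone" \
    --machine-type n1-standard-8 \
    --num-nodes 3 || return 1

  # Fetch credentials so that kubectl talks to the new cluster.
  gcloud container clusters get-credentials "$cluster_name" --zone "$zone"
}
```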

$ sh cluster/create-cluster.sh

Set up a secret to pull images from a private Docker registry

To pull a Docker image from a private registry, GKE needs to be given access to the registry. The 'imagePullSecrets' field in the configuration file specifies the secret that GKE uses to gain access to the image in the registry.

Details on how to create a secret can be found here. Once the YAML configuration is exported, commit it to your source code repository, so that it is generated once and can be reused again and again.
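One way to produce such a YAML file is `kubectl create secret docker-registry` with a client-side dry run, sketched below. The secret name `regcred`, the Docker Hub server URL, and the helper names are assumptions for illustration. Keep in mind that the generated file contains the registry credentials only base64-encoded, not encrypted, so the repository it is committed to should be private.

```shell
# Hypothetical sketch: write a docker-registry Secret manifest without applying
# it. "regcred" is a placeholder and must match the name listed under
# imagePullSecrets in the manifests.
make_registry_secret() {
  local user="$1" email="$2"
  # --dry-run=client needs kubectl 1.18+; older releases use plain --dry-run.
  kubectl create secret docker-registry regcred \
    --docker-server=https://index.docker.io/v1/ \
    --docker-username="$user" \
    --docker-password="$DOCKER_PASSWORD" \
    --docker-email="$email" \
    --dry-run=client -o yaml > docker-registry-secret.yaml
}

# Optional: attach the secret to the namespace's default service account, so
# pods can pull from the registry without listing imagePullSecrets themselves.
use_secret_by_default() {
  kubectl patch serviceaccount default \
    -p '{"imagePullSecrets": [{"name": "regcred"}]}'
}
```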

For example, if the generated secret file is named docker-registry-secret.yaml, run the command below in Cloud Shell so that GKE can access images in the private registry.

$ kubectl apply -f docker-registry-secret.yaml

Now that the GKE cluster setup is complete, let's look at the deployment of the DB, Solr, and SAP Commerce, which is our final goal.

Deploy HSQLDB

Let's first deploy HSQLDB by running the script below.

At a high level, the deployment script does a few things: Kubernetes pulls the image from the registry, creates and runs the container inside a pod, and brings up the configured number of pods. Kubernetes also dynamically creates and allocates storage for each pod replica, and creates and attaches a service that exposes the pods for internal/external communication. We go through the configuration in the walkthrough section below. The scripts for the remaining deployments (Solr, Hybris) work the same way.

$ sh hsqldb/hsql.sh

If you run the command kubectl get all -l app=hsql in Cloud Shell, you should see something like the following.

You can use a different DB of your choice, such as MySQL.
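Instead of re-running `kubectl get all` until everything looks healthy, a small helper can block until the pods behind a label are Ready. This is a sketch; the function name is hypothetical, while the `app=hsql`, `app=solr`, and `app=hybris` labels are the ones used in this post.

```shell
# Show the objects for an app label, then block until its pods report Ready.
wait_for_app() {
  local app="$1"               # e.g. hsql, solr, hybris
  local timeout="${2:-600s}"
  kubectl get all -l "app=$app" || return 1
  kubectl wait --for=condition=Ready pod -l "app=$app" --timeout="$timeout"
}
```

For example, `wait_for_app hsql`, and later `wait_for_app solr 900s`. Because `kubectl wait` only considers pods that already exist when it starts, it may return before a StatefulSet has created its later replicas.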

Deploy Solr

Here we deploy a custom Solr image that allows the configuration of master-slave mode.

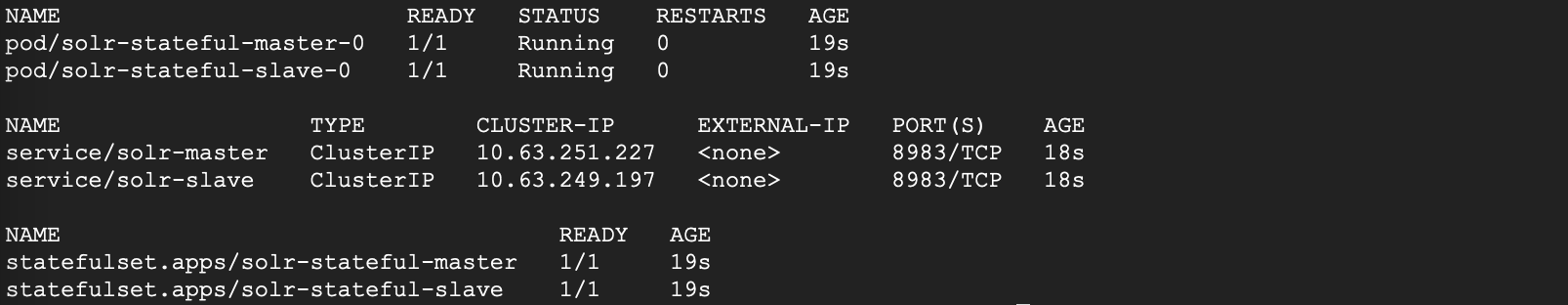

$ sh solr/solr.sh

If you run the command kubectl get all -l app=solr in Cloud Shell, you should see something like the following.

For more details on Solr docker image, deployment, and scaling, refer to the blog post on Solr.

Deploy Hybris

Before we deploy Hybris, let's do the setup by running the setup script. The script covers -

- Exporting the SSL certificate files as an HTTPS secret so the application runs over HTTPS

- ConfigMap to externalize configuration

Both of them deserve a brief explanation.

- The SSL certificates are base64-encoded, and a secret file is generated

- As discussed in the previous blog posts, this is where the custom recipe pays off directly: all configuration, such as the DB URL and Solr URLs, can be externalized, and Kubernetes provides ConfigMaps for exactly that. In the deployment (YAML) files, you can see that configuration is externalized in multiple ways (see hybris-stateful-hac.yaml and hybris-stateful-occ.yaml for variations).

The key difference between ConfigMaps and Secrets is that sensitive data goes into Secrets, and non-sensitive data goes into ConfigMaps. For ConfigMaps documentation, refer to this link, and for Secrets documentation, refer to this link.
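To make the Secret/ConfigMap split concrete, here is a hypothetical sketch of the kind of objects a setup script like this creates. The object names (`hybris-https`, `hybris-config`), the key names, and the keystore path are placeholders rather than the repository's actual values. Piping `kubectl create ... --dry-run=client -o yaml` into `kubectl apply -f -` makes the function safe to re-run (`--dry-run=client` requires kubectl 1.18 or later).

```shell
# Hypothetical sketch of HTTPS Secret + ConfigMap creation; names, keys and
# paths are placeholders, not those used by sap-commerce/hybris-setup.sh.
create_hybris_config() {
  local db_url="$1" solr_url="$2"

  # Secret: sensitive data. kubectl base64-encodes the file contents itself.
  kubectl create secret generic hybris-https \
    --from-file=keystore=./certs/keystore \
    --dry-run=client -o yaml | kubectl apply -f - || return 1

  # ConfigMap: non-sensitive, externalized settings such as DB and Solr URLs.
  kubectl create configmap hybris-config \
    --from-literal=db.url="$db_url" \
    --from-literal=solr.url="$solr_url" \
    --dry-run=client -o yaml | kubectl apply -f -
}
```

Usage would look like `create_hybris_config 'jdbc:hsqldb:hsql://<db-service>:<port>/<db>' 'http://<solr-service>:8983/solr'`. The StatefulSet's pod template can then reference these objects, for example as mounted files or environment variables.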

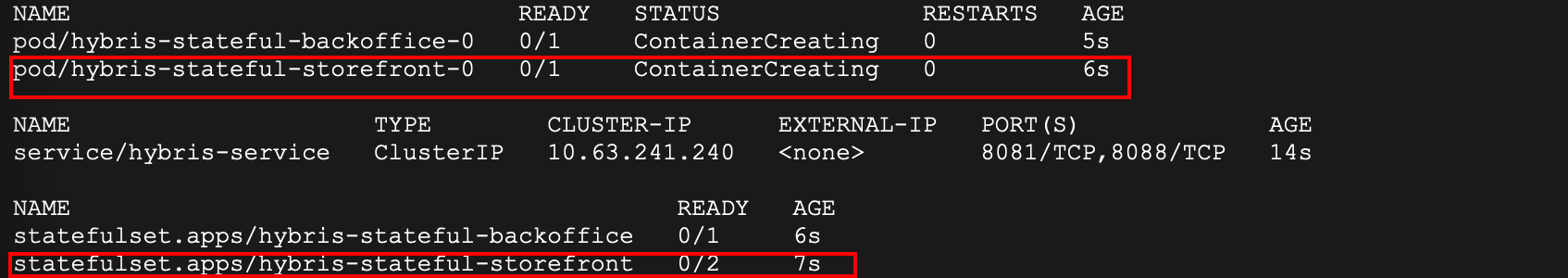

$ sh sap-commerce/hybris-setup.sh

Now that the basic setup is done, let's create a cluster with 2 storefront nodes and 1 Backoffice node. Just run the script below.

$ sh sap-commerce/hybris-cluster.sh

Note that when you first query the Hybris services, you will see only one storefront node; if you run the same query again after a few minutes, you will see 2 storefront nodes. This is because we are running StatefulSets, whose benefits include sticky identity and ordered deployment and scaling. Sticky identity means we can always reach the same storefront node by the same name, 'hybris-stateful-storefront-0'. For more details on StatefulSets, refer to this link.
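The ordered startup can be followed from the command line rather than by re-running the query. A sketch, assuming the storefront StatefulSet is named `hybris-stateful-storefront` as the pod name above suggests; the helper name is hypothetical.

```shell
# Follow the ordered rollout of a StatefulSet. With the default OrderedReady
# pod management policy, pod -1 is created only after pod -0 is Running and Ready.
watch_rollout() {
  local statefulset="${1:-hybris-stateful-storefront}"
  kubectl rollout status "statefulset/$statefulset" --timeout="${2:-30m}" || return 1
  kubectl get pods -l app=hybris -o wide
}
```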

Below is a screenshot of the output of kubectl get all -l app=hybris

Expose Hybris application using load balancer

Now that the Hybris cluster is up and running, we want to expose Hybris to the outside world so that the storefront and Backoffice can be reached from the internet; this is done through a load balancer. Run the script below to create a load balancer for the Hybris cluster. All the script does is change the Hybris service from the default ClusterIP type to LoadBalancer, which creates an external IP address.

$ sh sap-commerce/hybris-loadbalancer.sh

Right after you run the script above, if you run kubectl get all -l app=hybris, you should see something like the following.

As you can see, the external IP is shown as pending, because provisioning takes a few minutes. If you check again after a few minutes, you should see an IP address that you can use to access the Hybris application:
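The wait for the external IP can also be scripted. The sketch below shows, under assumptions, what switching a Service to the LoadBalancer type amounts to (`kubectl patch`) and then polls until GCP assigns an address. The Service name `hybris` is a placeholder; port 8088 and the storefront path come from the URLs in this post.

```shell
# Hypothetical sketch: switch a Service to type LoadBalancer, then poll every
# 10 seconds (up to 10 minutes) until an external IP is assigned.
expose_and_wait() {
  local svc="${1:-hybris}" ip="" tries=0
  kubectl patch service "$svc" -p '{"spec": {"type": "LoadBalancer"}}' || return 1

  while [ -z "$ip" ] && [ "$tries" -lt 60 ]; do
    ip=$(kubectl get service "$svc" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
    if [ -z "$ip" ]; then
      tries=$((tries + 1))
      sleep 10
    fi
  done

  [ -n "$ip" ] || return 1
  echo "https://$ip:8088/yacceleratorstorefront/?site=electronics"
}
```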

https://<external ip>:8088/yacceleratorstorefront/?site=electronics

https://<external ip>:8088/backoffice

https://<external ip>:8088/hac

Walkthrough of configuration

Let's walk through the configuration at a high level. We look at the Hybris configuration; the same applies to the DB and Solr.

Every time a deployment shell script is run, you will notice that three kinds of objects are created -

- Pod - A basic execution unit in which the application containers are run

- StatefulSet - Responsible for keeping the pods running and ensuring that the required number of replicas is always up. A StatefulSet also gives pods a sticky identity, ordered deployment and scaling, and stable network identity and storage

- Service - Responsible for exposing the application running in pods to the network

Apart from the above, you need storage to persist DB data, Hybris media, temp files, and Solr index data. The challenge is how to allocate storage dynamically for every pod and keep it in sync with the scaling of the application: whenever you scale the application, how do you make sure that dedicated storage is created for each newly created pod? This is achieved with persistent volume claims. In this case, we are using GCP's standard persistent disk.
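To make these pieces concrete, the following hypothetical manifest, emitted by a shell function so it can be piped to `kubectl apply -f -`, shows a headless Service, a StatefulSet that pulls from the private registry through `imagePullSecrets`, and a `volumeClaimTemplates` entry from which Kubernetes creates one PersistentVolumeClaim (and therefore one persistent disk) per replica. It is a minimal sketch, not the repository's manifest: names, labels, the secret name `regcred`, the mount path, and the 20Gi size are placeholders.

```shell
# Hypothetical, minimal manifest illustrating the pieces described above.
render_storefront_statefulset() {
  local image="$1"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: hybris-storefront
  labels:
    app: hybris
spec:
  clusterIP: None            # headless: stable DNS name per pod
  selector:
    app: hybris
    aspect: storefront
  ports:
    - name: https
      port: 8088
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: hybris-stateful-storefront
  labels:
    app: hybris
spec:
  serviceName: hybris-storefront
  replicas: 2
  selector:
    matchLabels:
      app: hybris
      aspect: storefront
  template:
    metadata:
      labels:
        app: hybris
        aspect: storefront
    spec:
      imagePullSecrets:
        - name: regcred
      containers:
        - name: hybris
          image: ${image}
          ports:
            - containerPort: 8088
          volumeMounts:
            - name: hybris-data
              mountPath: /opt/hybris/data
  volumeClaimTemplates:
    - metadata:
        name: hybris-data
      spec:
        accessModes: ["ReadWriteOnce"]
        # No storageClassName: the cluster's default class is used
        # (a Compute Engine persistent disk on GKE).
        resources:
          requests:
            storage: 20Gi
EOF
}
```

Usage: `render_storefront_statefulset valtechus/sap-commerce-1905:feature-xyz | kubectl apply -f -`. After scaling, `kubectl get pvc` should list one claim per pod, named after the template and the pod (for example `hybris-data-hybris-stateful-storefront-0`).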

StatefulSet configuration

LoadBalancer service to expose the application

Below is the visualization of how Pods, Containers, StatefulSets, and Volumes are linked

Deploy Hybris Aspects

You might recall aspects from the first part of this blog post series. Now that we have successfully brought up the application, let's quickly see how to deploy different Hybris aspects on GKE. All you have to do is run a script to spin up a Hybris instance for a particular aspect.

####aspect options####

#1-Default (everything - storefronts, hac, backoffice, etc)

#2-Backoffice only

#3-HAC only

#4-OCC only

#5-Platform only (for initialize, update or running cronjobs)

####usage####

sh hybris-aspects <aspect option>

####examples####

sh hybris-aspects 1 # 'creates a storefront node'

sh hybris-aspects 2 # 'creates a backoffice node'

sh hybris-aspects 3 # 'creates a HAC node'

sh hybris-aspects 4 # 'creates an OCC node'

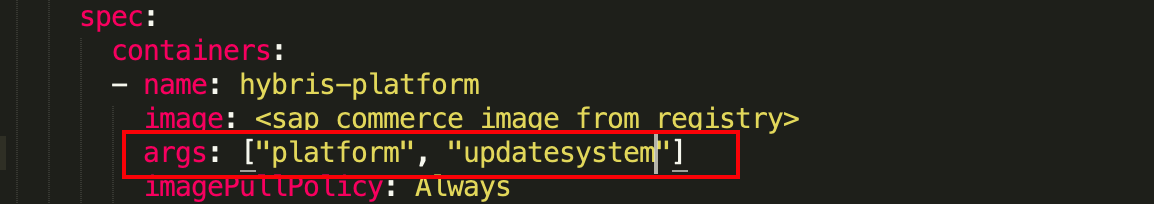

sh hybris-aspects 5 # 'creates a node to run a process like update, run cronjobs, etc'

If you want to perform a system update, update the args in hybris-stateful-platform.yaml as shown below and run 'sh hybris-aspects 5'.

And if you need to run a long-running cronjob, you can spin up a new Hybris instance by updating the hybris-stateful-platform.yaml file with the args below and running 'sh hybris-aspects 5'.

With this platform aspect, the Hybris instance will be terminated after the processing (of system update or cronjob) is completed successfully.

Manual Scaling

One of the key strengths of Kubernetes is built-in pod scaling. Whenever more load needs to be handled, you can scale horizontally by increasing the replica count. A typical use case is the holiday season, when e-commerce applications commonly see a surge in traffic: you can increase the storefront replica count from 2 to a higher number for the season and scale it back down right afterwards.

####aspect options####

#1-default (everything - storefronts, hac, backoffice, etc.)

#2-backoffice only

#3-hac only

#4-occ only

####usage####

sh hybris-scale <desired num of replicas> <aspect option>

####examples####

sh hybris-scale 3 or sh hybris-scale 3 1 # 'scales storefronts statefulsets to 3'

sh hybris-scale 2 2 # 'scales backoffice statefulsets to 2'

sh hybris-scale 2 3 # 'scales hac statefulsets to 2'

sh hybris-scale 3 4 # 'scales occ statefulsets to 3'

Scaling is one of the areas where StatefulSets have an advantage over Deployments. During scaling, StatefulSets bring up one pod at a time, in order: if storefront nodes are scaled from 2 to 4, 'pod/hybris-stateful-storefront-2' is created first, followed by 'pod/hybris-stateful-storefront-3'. When storefront nodes are scaled down, removal happens in reverse order: Kubernetes removes 'pod/hybris-stateful-storefront-3' first, followed by 'pod/hybris-stateful-storefront-2'.
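Under the hood, scaling a StatefulSet comes down to `kubectl scale`. The sketch below shows one way a script like hybris-scale could map its aspect option to a StatefulSet; only `hybris-stateful-storefront` is named in this post, so the backoffice, HAC, and OCC StatefulSet names are assumptions modelled on the manifest file names.

```shell
# Hypothetical mapping from the hybris-scale aspect option to a StatefulSet.
# An empty or missing option defaults to 1 (storefront), as in the examples.
statefulset_for_aspect() {
  case "${1:-1}" in
    1) echo hybris-stateful-storefront ;;
    2) echo hybris-stateful-backoffice ;;   # assumed name
    3) echo hybris-stateful-hac ;;          # assumed name
    4) echo hybris-stateful-occ ;;          # assumed name
    *) echo "unknown aspect option: $1" >&2; return 1 ;;
  esac
}

# Scale the selected StatefulSet; pods are added in ordinal order and
# removed highest ordinal first.
scale_aspect() {
  local replicas="$1" name
  name=$(statefulset_for_aspect "$2") || return 1
  kubectl scale statefulset "$name" --replicas="$replicas"
}
```

For example, `scale_aspect 4` scales the storefront to 4 replicas, and `scale_aspect 2 2` scales the (assumed) backoffice StatefulSet to 2.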

You would see the following output right after running the scale script 'sh sap-commerce/hybris-scale 4' -

Final status -

Horizontal Autoscaling

Kubernetes can also scale horizontally and automatically based on metrics. For example, to scale up the Hybris storefront when the average CPU utilization across its pods exceeds 60% of the CPU they request, run the command below. You can also configure the minimum and maximum number of replicas. For more on this, refer to this link.
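`kubectl autoscale` creates a HorizontalPodAutoscaler object. As a sketch, a declarative equivalent of the command below, which could live in source control next to the other manifests, looks like this (the function name is hypothetical). CPU utilization is measured against the containers' CPU requests, so the storefront pod template must set `resources.requests.cpu` for this to work.

```shell
# Declarative form of the storefront autoscaler (autoscaling/v1).
# Arguments: min replicas, max replicas, target CPU percent.
render_storefront_hpa() {
  cat <<EOF
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: hybris-stateful-storefront
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: StatefulSet
    name: hybris-stateful-storefront
  minReplicas: ${1:-2}
  maxReplicas: ${2:-6}
  targetCPUUtilizationPercentage: ${3:-60}
EOF
}
```

Apply it with `render_storefront_hpa | kubectl apply -f -`, then use `kubectl get hpa` to compare current and target utilization.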

$ kubectl autoscale statefulsets hybris-stateful-storefront --max 6 --min 2 --cpu-percent 60

Monitoring and Logging

Finally, any production system needs monitoring, profiling, and alerting. Google Kubernetes Engine (GKE) includes managed support for Stackdriver. Stackdriver monitors GKE clusters, manages system and debug logs, and analyzes the system's performance using advanced profiling and tracing capabilities.

During cluster creation, we enabled Stackdriver logging by including the '--enable-stackdriver-kubernetes' option.

In the GCP console, go to Stackdriver -> Monitoring to open the Stackdriver Monitoring portal.

In the Stackdriver portal, go to Logging to view logs from different Kubernetes resources such as pods, nodes, containers, and applications. For example, to see HSQLDB, Solr, or Hybris logs, select the options shown below.
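The same logs can also be read from the command line. A sketch with hypothetical helper names: `kubectl logs` reads recent output directly from the pods, while `gcloud logging read` queries Stackdriver Logging, which keeps logs after pods are deleted. The container names passed to `cloud_logs` (for example `hybris` or `solr`) are assumptions; use the names from your manifests.

```shell
# Recent log lines straight from the pods behind an app label.
recent_logs() {
  kubectl logs -l "app=$1" --all-containers=true --tail="${2:-100}"
}

# The same container logs from Stackdriver Logging, filtered by container name.
cloud_logs() {
  gcloud logging read \
    "resource.type=\"k8s_container\" AND resource.labels.container_name=\"$1\"" \
    --limit="${2:-50}"
}
```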

Clean up

To delete the cluster, run the script below. If the purpose of this deployment is learning or training, make sure to delete the cluster to avoid incurring costs for the services.
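It can help to know what a thorough cleanup involves. This hypothetical sketch assumes the cluster name `sap-commerce`, the zone `us-east4-a`, and that the Hybris Service carries the `app=hybris` label. It deletes the PersistentVolumeClaims explicitly, because deleting a StatefulSet does not delete the claims created from its volumeClaimTemplates, and it lists the remaining disks at the end so leftovers can be spotted.

```shell
# Hypothetical cleanup sketch (not cluster/delete-cluster.sh itself).
cleanup_gke() {
  local cluster_name="${1:-sap-commerce}"
  local zone="${2:-us-east4-a}"

  kubectl delete statefulset --all || return 1
  # Claims outlive their StatefulSet; deleting them releases the disks.
  kubectl delete pvc --all || return 1
  # Deleting the LoadBalancer Service releases its external IP.
  kubectl delete service -l app=hybris || return 1
  gcloud container clusters delete "$cluster_name" --zone "$zone" --quiet || return 1
  # Check for persistent disks that were left behind.
  gcloud compute disks list
}
```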

$ sh cluster/delete-cluster.sh

Next steps

Having successfully deployed Hybris on the cloud, where can we go from here? We have completed the most essential and critical piece of a cloud deployment. A few more things are needed to make the cloud instance production-ready: creating a private network, setting up firewall rules and access rights, adding readiness and liveness probes, setting up an Ingress in place of the load balancer, configuring sticky sessions for load balancing, and finally configuring monitoring and alerts.

Conclusion

In this three-part blog post series, the first part introduced the concept of recipes and walked through a custom recipe that avoids the need to create a separate image for every environment by externalizing configuration such as the DB connection and Solr. The second part gave a detailed walkthrough of building an SAP Commerce Docker image and examined how recipes are structured. Finally, this post looked at deploying SAP Commerce, the DB, and Solr on Google Kubernetes Engine.

About the Author

Ravi Avulapati - Specializes in Java, J2EE, and frameworks, SAP Commerce (Hybris), Search with Solr, Solution & Enterprise Architecture, Microservices, DevOps, Cloud solutions. Machine learning and deep learning enthusiast.

About Valtech

Valtech is a global full-service digital agency focussed on business transformation with offerings in strategy consulting, experience design & technology services. Valtech is an SAP partner and is an SAP recognized expert in SAP Commerce.

