- SAP Community

- Products and Technology

- Technology

- Technology Q&A

- Custom planning function to append only changed re...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Custom planning function to append only changed records

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

on 07-30-2019 8:43 AM

Hi all,

I am using BPC 10.1 Optimised for S/4 Hana. I created a custom planning function type and I use ABAP Classes for all BPC calculations.I filter the data based on planning filter and see the data available on aggregation level by using table c_th_data.And I calculate stg and append the data to c_th_data.Everything works fine.

However, if I do not append a record which was originally available in c_th_data, then it is deleted automatically.I see the log after I run it from rsplan.(xx number of records deleted) This affects the code performance, I have to add the source data to c_th_data each time.It takes too much time to append the source data of c_th_data to adso after I run the calculation.If I appended less records, then it would take less time.

This is not the case in BPC classic version.If we do not append a record in ct_data in BPC classic, then it will not be cleared by system automatically although it was available in the scope of the code.Only appended data pattern was affected for ct_data ( bpc classic).

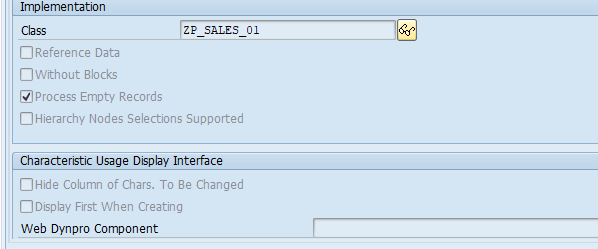

What I want to know is if this is standard behaviour of bw ip(bpc embedded) or is there any parameter like "process changed records" or stg like this?You can see the interface I use and my custom planning function type below:

Planning function type(properties tab) . There is nothing in parameters tab:

My class uses the interface :

IF_RSPLFA_SRVTYPE_IMP_EXEC

All I do is writing the code to EXECUTE method of that class and append records to c_th_data.

- SAP Managed Tags:

- SAP Business Planning and Consolidation, version for SAP NetWeaver,

- BW Planning

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Hi Gregor,

so why do you say you have to append 300K records?

Yes I append 100K to c_th_data, remaining 200K was already there.I didn't mean that.What I mean by append 300K records is that my c_th_data has to include 300K records. This 300K records are appended to adso back.And it takes too much time for standard SAP class to append 300K records back to adso instead of 100K records.Although remaining 200K was already there, the system itself still checks "if I append the same data pattern or not" for those 200K as well .Finally yes it appends 100K new records but the system checks the other 200K as well and it takes time.

In BPC standard , standard sap classes to append records were only checking 100K records.

Hope I am clear.If this is standard behaviour, it's clear.Thanks

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Hi Gunes,

in fact the system 'checks' the 300K records but only to find out the changed/deleted/new records; this is just a hash lookup (some microseconds per records); the real check is done only for changed/new records where data slices/characteristic relationships and may be master data (new records) are checked. The latter part might be time consuming depending on the complexity of the modeled planning constraints.

The planning buffers are updated only via 'delta records', i.e. here also only new/changed/deleted records are considered. When data is saved the planning buffer has the delta records and saves the delta records for cube-like aDSOs and computes the after-images with new/changed/deleted records to insert/update/delete records from direct-update aDSOs.

Regards,

Gregor

- LMS ODATA API Pagination Issue with /catalog-service Endpoint in Technology Q&A

- Consuming SAP with SAP Build Apps - adding S/4HANA Cloud in Technology Blogs by SAP

- Create a Multi Column List Item and Filter & Sort it via Dropdown Fields in SAP Build Apps in Technology Blogs by Members

- Best practices to follow during DTV implementation in Technology Blogs by SAP

- how to Implement complicated logic in planning function? in Technology Q&A

| User | Count |

|---|---|

| 75 | |

| 10 | |

| 8 | |

| 7 | |

| 6 | |

| 6 | |

| 5 | |

| 5 | |

| 4 | |

| 4 |

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.