- Top 5 Reasons to Migrate SAP IQ Systems to SAP HAN...

It’s pretty well known that SAP HANA Cloud, data lake is built on the foundation of on-premise SAP IQ, which makes it the perfect choice if you’re thinking about migrating your existing SAP IQ systems to the cloud. You don’t have to move: on-premise SAP IQ continues to be fully supported, with SAP having committed to keeping version 16.1 in mainstream maintenance through at least 2027-12-31. However, it takes a lot of effort to maintain an on-premise database system, and migrating to a cloud deployment lets you offload a lot of critical but time-consuming administration and maintenance tasks to the cloud service. That frees up your database team to spend more time on higher-value database development activities.

If you are thinking about migrating your on-premise SAP IQ system to the cloud, here are my picks for the top 5 benefits of moving to SAP HANA Cloud, data lake.

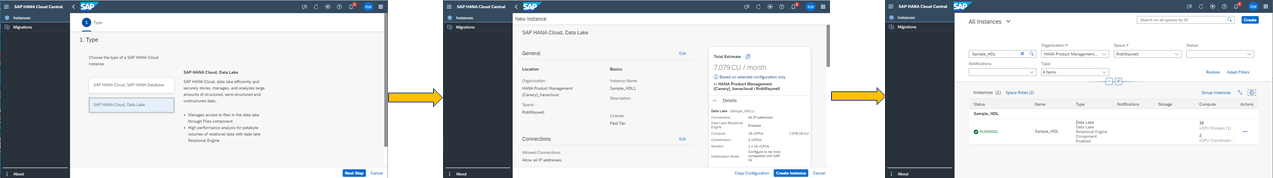

Ease of provisioning

Provisioning a new database in an on-premise environment is never a simple task. Even if you already have software licenses available, you still have to go through the hardware procurement process: getting multiple quotes from vendors, waiting for delivery once a purchase order is submitted, and waiting for the hardware to be set up. The overall process usually takes a few months and, depending on your hardware refresh cycle, has to be repeated every 3-5 years.

With SAP HANA Cloud, data lake it takes just a few minutes from clicking Create Instance to having a running database, and perhaps best of all – you NEVER have to upgrade the hardware.

Near Zero Downtime Upgrades (NZDU)

Speaking of upgrades, hardware isn’t the only thing that needs to be upgraded when running your on-premise system. The database software has to be upgraded too, and that certainly happens more frequently than the hardware upgrades.

One of the bigger pain points in upgrading the software of mission-critical systems is scheduling and managing the system downtime, often within very tight maintenance windows.

This is an area where SAP HANA Cloud, data lake provides real innovation. Every SAP HANA Cloud, data lake instance is a multi-node scale-out (multiplex) system, and the service controls the underlying cloud infrastructure. Together, these allow it to execute a rolling NZDU that maintains read access to the system throughout the process.

Flexible scaling of compute

Coming back to the topic of provisioning, one of the challenging aspects of any on-premise system is sizing. Hardware purchases, for both the server hardware and the storage hardware, are pretty inflexible. Sure, you can use virtualization software to deploy multiple smaller systems on a single server, but when you are deploying a database system that could scale to tens or hundreds of TB, or even PBs, sharing servers really isn’t an option.

If you are working with a mature on-premise database, then you likely have a good idea of how the data volume will grow over the next 3-5 year hardware cycle. However, if you’re standing up a new system, your data volume and workload estimates might be solid for the first year but get less and less reliable the further out you look.

With an on-premise system you usually end up buying and provisioning a system that is oversized for the first year and that you hope the business will grow into over the hardware lifecycle, but which could still end up undersized before the end of the cycle.
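To make the sizing problem concrete, here is a small back-of-the-envelope sketch in Python. All of the figures are hypothetical assumptions chosen for illustration (20 TB today, hardware sized at 65 TB, 25% planned versus 40% actual annual growth); none of them come from a real system.

```python
from typing import List, Optional


def projected_volume_tb(initial_tb: float, annual_growth: float, years: int) -> List[float]:
    """Projected data volume at the end of each year, compounding annually."""
    return [initial_tb * (1 + annual_growth) ** year for year in range(1, years + 1)]


def first_undersized_year(volumes_tb: List[float], provisioned_tb: float) -> Optional[int]:
    """First year (1-based) in which the volume exceeds the provisioned capacity, if any."""
    for year, volume in enumerate(volumes_tb, start=1):
        if volume > provisioned_tb:
            return year
    return None


# Hypothetical figures, for illustration only.
PROVISIONED_TB = 65.0
planned = projected_volume_tb(20.0, 0.25, 5)  # growth estimate used when sizing
actual = projected_volume_tb(20.0, 0.40, 5)   # growth that actually materialises

for year, (p, a) in enumerate(zip(planned, actual), start=1):
    print(f"year {year}: planned {p:6.1f} TB, actual {a:6.1f} TB, "
          f"actual utilisation {a / PROVISIONED_TB:6.1%}")

print("planned growth outgrows the hardware in year:",
      first_undersized_year(planned, PROVISIONED_TB))
print("actual growth outgrows the hardware in year:",
      first_undersized_year(actual, PROVISIONED_TB))
```

With these assumed numbers the hardware is less than half used in the first year, yet the faster-than-planned growth exceeds it before the five-year cycle ends.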

Migrating your SAP IQ system to an SAP HANA Cloud, data lake system provides the ability to scale your compute resources – vCPU and memory – up or down as needed. Scaling can be done vertically or horizontally, either increasing the size of worker nodes or adding more worker nodes to the system.

Automatic storage resizing

One of the characteristics of SAP IQ that has felt outdated to me for a long time is the need to pre-allocate storage space in the form of dbspaces made up of multiple dbfiles. Monitoring the available space, and adding dbfiles as needed to grow the storage volume isn’t a difficult task but it is “one more thing” you have to do when administering an on-premise SAP IQ system.

That task goes away when you migrate to SAP HANA Cloud, data lake, because the required storage volume is automatically allocated as you add data to the database. This also improves your cost of ownership compared to an on-premise SAP IQ system, because with SAP HANA Cloud, data lake you are only charged for the storage volume that you are currently using.

<Sorry, no picture for this one because you don’t have to manage your storage volume. 😊>

Another cost benefit of migrating your on-premise SAP IQ system to SAP HANA Cloud, data lake Relational Engine is a lower per-unit cost for the storage volume. The performance of any disk-based database is directly impacted by the throughput of the disk I/O, and on-premise SAP IQ systems are normally provisioned on SSD storage to optimize throughput. In contrast, SAP HANA Cloud, data lake Relational Engine uses cloud native object storage, storing each database page as an object. This takes advantage of the high read bandwidth of cloud native object storage, which greatly reduces the cost of storing TBs or PBs of data in SAP HANA Cloud, data lake Relational Engine while maintaining, or in some cases even improving, query performance.

If eliminating the task of managing your dbspaces and reducing your storage costs isn’t enough, SAP HANA Cloud, data lake has another big storage enhancement over on-premise SAP IQ. With SAP HANA Cloud, data lake the storage volume automatically shrinks when you delete or truncate data from the system. This is done in 1 TB increments, so you have to be removing a meaningful volume of data, but it means that your storage volume, and therefore your storage cost, will adapt over time as your data volume grows and shrinks.
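As a rough illustration of what shrinking "in 1 TB increments" implies, the Python sketch below models a volume that only releases free space in whole 1 TB steps. The rule itself (release as many whole steps of free space as possible) and the function name `shrink_allocation` are assumptions made for this example. This is not a description of how the service actually decides when to shrink.

```python
import math

SHRINK_STEP_TB = 1.0  # from the text: shrinking happens in 1 TB increments


def shrink_allocation(allocated_tb: float, used_tb: float) -> float:
    """Return the allocation after releasing free space in whole 1 TB steps.

    Assumed rule (hypothetical): free space smaller than one step is kept.
    """
    free_tb = allocated_tb - used_tb
    if free_tb < SHRINK_STEP_TB:
        return allocated_tb  # not enough free space to release a full step
    steps = math.floor(free_tb / SHRINK_STEP_TB)
    return allocated_tb - steps * SHRINK_STEP_TB


# Deleting 0.6 TB from a 10 TB volume frees less than one step: no change.
print(shrink_allocation(10.0, 9.4))
# Deleting 2.7 TB frees two whole steps, so two are released.
print(shrink_allocation(10.0, 7.3))
```

Under this assumed rule, small deletes leave the allocation untouched, which is why only a meaningful volume of removed data shows up as a smaller storage volume.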

Cost effective backups at scale

Managing database backups for an on-premise database system like SAP IQ can be a complicated and time-consuming administrative task. The storage cost of maintaining a rotating set of full and incremental backups for an SAP IQ database of tens or hundreds of TB, or even PBs, can also be overwhelming, especially if you have to keep duplicate copies of the backups at another physical data center for disaster recovery.

The use of cloud native object storage by SAP HANA Cloud, data lake Relational Engine revolutionizes the way that backups are made. With cloud native object storage, SAP HANA Cloud, data lake takes advantage of a ‘copy on write’ feature that makes a snapshot of each object whenever it is updated or overwritten. Since each database page is written to storage as a unique object, each database page is individually backed up whenever a change is written out. SAP HANA Cloud, data lake still uses a combination of full and incremental backups for the catalogue database, but there is no longer any need to make a full backup of the “user_main” dbspace. Your total backup storage volume will consist of just the changed database pages plus the catalogue database backups.
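The idea of page-level ‘copy on write’ can be sketched with a toy in-memory model in Python. This is only a conceptual illustration under simplifying assumptions: the class `VersionedPageStore`, its methods, and the integer clock are all hypothetical and bear no relation to the actual storage implementation or to any cloud provider's API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class VersionedPageStore:
    """Toy object store: each page is an object, and overwrites keep older versions."""
    _versions: Dict[int, List[Tuple[int, bytes]]] = field(default_factory=dict)
    _clock: int = 0  # logical timestamp, incremented on every write

    def write_page(self, page_id: int, data: bytes) -> int:
        """Store a new version of a page and return its logical timestamp."""
        self._clock += 1
        self._versions.setdefault(page_id, []).append((self._clock, data))
        return self._clock

    def read_page(self, page_id: int, as_of: Optional[int] = None) -> Optional[bytes]:
        """Return the newest version of a page, or the newest one written at or before as_of."""
        latest = None
        for timestamp, data in self._versions.get(page_id, []):
            if as_of is None or timestamp <= as_of:
                latest = data
        return latest

    def retained_versions(self) -> int:
        """Total number of page versions kept, i.e. the 'backup' footprint in pages."""
        return sum(len(v) for v in self._versions.values())


store = VersionedPageStore()
store.write_page(1, b"page 1, version 1")
restore_point = store.write_page(2, b"page 2, version 1")
store.write_page(1, b"page 1, version 2")  # only page 1 changes after the restore point

print(store.read_page(1))                       # current contents of page 1
print(store.read_page(1, as_of=restore_point))  # contents as of the restore point
print(store.retained_versions())                # versions kept across all pages
```

In this model, restoring to an earlier point only needs the older versions of pages that changed afterwards. The unchanged page 2 is never copied again, which mirrors why the backup volume consists of just the changed pages.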

Cloud native object storage also provides disaster recovery support, since the storage volume is redundant across multiple availability zones, and therefore multiple data centers, within a hyperscaler region.

