Secure your LLM: Consuming SAP Generative AI deplo...


NOTE: The views and opinions expressed in this blog are my own.

In several earlier blogs I demonstrated how a simple demo/PoC chatbot consuming OpenAI APIs and Hugging Face APIs could be built in Python and deployed on SAP BTP with fewer than 100 lines of code:

Simplify your LLM Chatbot Demos with SAP BTP and Gradio

LLAMA2: Testing a Tiny LLM with Hugging Face & SAP BTP

While interesting, those demos left the question of enterprise security open.

In this blog I will extend this simple app to consume LLMs provided by SAP AI Core and SAP's new generative AI functionality, which provides a more secure, enterprise-grade offering.

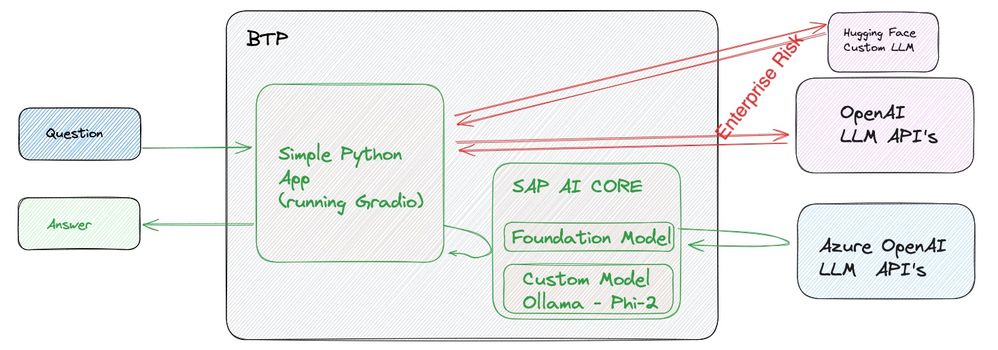

If you follow the earlier blogs and add the additional logic provided here, the simple app will have access to four different LLMs, as illustrated below:

The new LLMs covered in this blog require entitlements for, and access to, SAP AI Core with the extended plan.

The two LLMs that must be deployed as prerequisites for this blog are:

- Foundation model - Azure OpenAI [I used the gpt-35-turbo model]

- Custom LLM - Ollama running the Phi-2 model [tiny, but provides surprisingly good results for its size]

For a great overview of how to install Ollama and Phi-2 on SAP AI Core, see:

It's Christmas! Ollama+Phi-2 on SAP AI Core

Once you have deployed these, you should see two running deployments in SAP AI Launchpad:

Assuming the Python app runs in the same BTP account as SAP AI Core, we can simply bind AI Core to the app with a small amendment to manifest.yaml:

```yaml
services:
  - openai_service
  - hf_llama2_inf_service
  # NEW
  - ai-core-extended
```
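Binding a service makes its credentials available to the app through the `VCAP_SERVICES` environment variable, which `cfenv` parses for us. As a rough illustration of what a lookup such as `env.get_service(name=...)` does, here is a minimal standard-library sketch. The helper name `find_service_credentials` and the sample binding (including the `aicore` label and credential values) are hypothetical; real bindings contain many more fields.

```python
import json
import os
from typing import Optional


def find_service_credentials(name: str) -> Optional[dict]:
    """Return the credentials of the bound service instance called `name`, or None."""
    # VCAP_SERVICES maps each service label to a list of bound instances
    services = json.loads(os.environ.get("VCAP_SERVICES", "{}"))
    for instances in services.values():
        for instance in instances:
            if instance.get("name") == name:
                return instance.get("credentials", {})
    return None


if __name__ == "__main__":
    # Hypothetical, heavily trimmed binding for local experimentation
    os.environ["VCAP_SERVICES"] = json.dumps({
        "aicore": [{
            "name": "ai-core-extended",
            "credentials": {"clientid": "my-client", "url": "https://example.authentication.local"},
        }]
    })
    print(find_service_credentials("ai-core-extended")["clientid"])  # my-client
    print(find_service_credentials("missing-service"))  # None
```

Returning `None` for an unknown name mirrors the way the app later has to cope with a binding that is missing from the manifest.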

To simplify calls from the Python app to SAP AI Core, we need to add the SAP AI API client SDK (`ai-api-client-sdk`) to the requirements:

```text
gradio==4.14.0
cfenv
openai
huggingface_hub
# NEW
ai-api-client-sdk
```
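Among other things, the SDK client takes care of authentication: the `clientid`, `clientsecret` and `url` from the AI Core service key are used to obtain an OAuth access token from `<url>/oauth/token`, which is then sent as a bearer token with the AI API calls. The sketch below only *builds* a standard OAuth 2.0 client-credentials token request with the standard library (nothing is sent), to show the kind of work the SDK saves us. The function name and example values are hypothetical, and the SDK's exact mechanics may differ.

```python
import base64
import urllib.parse
import urllib.request


def build_token_request(auth_url: str, client_id: str, client_secret: str) -> urllib.request.Request:
    """Build (but do not send) an OAuth 2.0 client-credentials token request."""
    # Client ID and secret are passed with HTTP Basic authentication
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode()
    # The grant type goes into a form-encoded request body
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode()
    return urllib.request.Request(
        auth_url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


if __name__ == "__main__":
    req = build_token_request("https://example.authentication.local/oauth/token", "my-client", "s3cret")
    print(req.get_method(), req.full_url)
    # Sending it with urllib.request.urlopen(req) would return JSON containing an access token
```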

In server.py, new logic is required to access SAP AI Core, determine the deployment path of each model, and provide custom chat handling for the two new LLM APIs.
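The deployment URL returned by AI Core contains the API version followed by the deployment-specific inference path. Because the SDK client's `base_url` already ends in `/v2`, the app only keeps what comes after it. The minimal sketch below isolates that step using the same regular expression as server.py; the helper name `inference_path` and the example URL are illustrative assumptions.

```python
import re
from typing import Optional

# Same pattern as in server.py: everything after "/v2" is the inference path
pattern = re.compile(r'/v2(.*)')


def inference_path(deployment_url: str) -> Optional[str]:
    """Return the part of a deployment URL after '/v2', or None if it is absent."""
    matches = pattern.findall(deployment_url)
    return matches[0] if matches else None


if __name__ == "__main__":
    # Hypothetical deployment URL; the host and deployment ID are made up
    url = "https://api.ai.example.com/v2/inference/deployments/d123abc"
    path = inference_path(url)
    print(path)  # /inference/deployments/d123abc
    # The model-specific route is then appended, e.g. for Ollama's chat endpoint
    print(path + "/v1/api/chat")  # /inference/deployments/d123abc/v1/api/chat
```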

The updated server.py is:

```python
import os
import re
from typing import Iterator  # Stream results

import cfenv
import gradio as gr
from huggingface_hub import InferenceClient
from openai import OpenAI

# SAP AI Core
from ai_api_client_sdk.ai_api_v2_client import AIAPIV2Client

port = int(os.environ.get('PORT', 7860))
print("PORT:", port)

# Regular expression that extracts the inference path (everything after "/v2")
pattern = re.compile(r'/v2(.*)')

# Create a Cloud Foundry environment object
env = cfenv.AppEnv()

llm_models = []

# Check if the app is running in Cloud Foundry
if env.app:
    try:
        # Get the OpenAI credentials
        service_name = "openai_service"
        openai_api_key = env.get_service(name=service_name).credentials['api_key']
        os.environ['OPENAI_API_KEY'] = openai_api_key
        OpenAIclient = OpenAI(
            api_key=os.environ['OPENAI_API_KEY'],  # this is also the default, it can be omitted
        )
        print("OpenAI API Key assigned")
        llm_models.append("OpenAI")

        # Get the Hugging Face Inference Endpoint details
        # NOTE: placeholder values; set your endpoint URL and API key here
        service_name = "hf_llama2_inf_service"
        hf_api_key = 'incomplete'
        hf_llama2_inf_url = 'incomplete'
        print("Hugging Face API Key assigned")
        llm_models.append("Llama-2-7b-chat-hf")

        # Get the AI Core service binding and create an AI API client
        service_name = "ai-core-extended"
        ai_core_extended_service = env.get_service(name=service_name)
        ai_api_client = AIAPIV2Client(
            base_url=ai_core_extended_service.credentials['serviceurls']['AI_API_URL'] + "/v2",  # The present AI API version is 2
            auth_url=ai_core_extended_service.credentials['url'] + "/oauth/token",
            client_id=ai_core_extended_service.credentials['clientid'],
            client_secret=ai_core_extended_service.credentials['clientsecret'],
            resource_group='default'
        )

        # Look up the deployment of each scenario and remember its inference path
        aic_scenarios = [{"id": 'ollama-server'}, {"id": 'foundation-models'}]
        for scenario in aic_scenarios:
            deployments = ai_api_client.rest_client.get(
                path="/lm/deployments",
                headers={"AI-Resource-Group": "default"},
                params={"scenarioId": scenario['id']}
            )
            for deployment in deployments['resources']:
                # The extracted path is the first (and only) element of the matches list
                matches = pattern.findall(deployment['deployment_url'])
                if matches:
                    scenario['path'] = matches[0]
                    llm_models.append("sap-ai-core-" + scenario['id'])
                    break  # use the first deployment with a URL; avoids duplicate dropdown entries
                else:
                    print("Path not found.")

    except (AttributeError, KeyError):
        # get_service() returns None for a missing binding (-> AttributeError);
        # a missing credential field raises KeyError
        print(f"The service '{service_name}' is not found or is incomplete.")
else:
    print("The app is not running in Cloud Foundry.")


def generate_response(llm, prompt) -> Iterator[str]:
    if llm == 'OpenAI':
        response = OpenAIclient.chat.completions.create(
            model="gpt-3.5-turbo",
            messages=[
                {"role": "system", "content": "You are a helpful assistant."},
                {"role": "user", "content": prompt}
            ]
        )
        yield response.choices[0].message.content

    elif llm == 'Llama-2-7b-chat-hf':
        # Streaming client
        client = InferenceClient(hf_llama2_inf_url, token=hf_api_key)
        # Generation parameters
        gen_kwargs = dict(
            max_new_tokens=1024,
            top_k=50,
            top_p=0.95,
            temperature=0.8,
            stop_sequences=["\nUser:", "<|endoftext|>", "</s>"],
        )
        stream = client.text_generation(prompt, stream=True, details=True, **gen_kwargs)
        # Yield each generated token
        for r in stream:
            # Skip special tokens
            if r.token.special:
                continue
            # Stop if we encounter a stop sequence
            if r.token.text in gen_kwargs["stop_sequences"]:
                break
            print(r.token.text, end="")
            yield r.token.text

    # Ollama server expected to be running Phi-2
    elif llm == 'sap-ai-core-ollama-server':
        for scenario in aic_scenarios:
            if scenario["id"] == 'ollama-server':
                relevant_path = scenario["path"]
                break
        streaming = False
        response = ai_api_client.rest_client.post(
            path=relevant_path + "/v1/api/chat",
            headers={"AI-Resource-Group": "default"},
            body={
                "model": "phi",
                "messages": [{"role": "user", "content": prompt}],
                "stream": streaming
            }
        )
        if streaming:
            print('Streaming response logic not implemented')
        else:
            print(response)
            yield response['message']['content']

    # Azure OpenAI - gpt-35-turbo - api-version=2023-05-15
    elif llm == 'sap-ai-core-foundation-models':
        for scenario in aic_scenarios:
            if scenario["id"] == 'foundation-models':
                relevant_path = scenario["path"]
                break
        streaming = False
        response = ai_api_client.rest_client.post(
            path=relevant_path + "/chat/completions?api-version=2023-05-15",
            headers={"AI-Resource-Group": "default"},
            body={
                "messages": [{"role": "user", "content": prompt}],
                # "max_tokens": 100,  # gives an error with the API (accepted in REST)
                "temperature": 0.0,
                # "frequency_penalty": 0,  # gives an error with the API (accepted in REST)
                # "presence_penalty": 0,  # gives an error with the API (accepted in REST)
                "stop": None,  # JSON null; the string "null" would act as a literal stop sequence
                "stream": streaming
            }
        )
        if streaming:
            print('Streaming response logic not implemented')
        else:
            print(response)
            yield response["choices"][0]["message"]["content"]

    else:
        yield "Unknown LLM, please choose another and retry."


def llm_chatbot_function(llm, input, history) -> Iterator[tuple[list, list]]:
    history = history or []
    # Flatten the (question, answer) pairs and append the new question to form the prompt
    my_history = [entry for sublist in history for entry in sublist]
    my_history.append(input)
    my_input = ' '.join(my_history)

    generator = generate_response(llm, my_input)
    try:
        first_response = next(generator)
        history.append((input, first_response))  # Ensure that the history entry is a tuple
        yield history, history
    except StopIteration:
        history.append((input, ''))  # Ensure that the history entry is a tuple
        yield history, history

    # Append any further streamed chunks to the last answer
    for chunk in generator:
        history[-1] = (history[-1][0], history[-1][1] + chunk)  # Ensure that the history entry is a tuple
        yield history, history


def create_llm_chatbot():
    with gr.Blocks(analytics_enabled=False) as interface:
        with gr.Column():
            top = gr.Markdown("""<h1><center>LLM Chatbot</center></h1>""")
            llm = gr.Dropdown(
                llm_models, label="LLM Choice", info="Which LLM should be used?", value="OpenAI"
            )
            chatbot = gr.Chatbot()
            state = gr.State()
            question = gr.Textbox(show_label=False, placeholder="Ask me a question and press enter.")
            with gr.Row():
                submit_btn = gr.Button("Submit")
                clear = gr.ClearButton([question, chatbot, state])

        question.submit(llm_chatbot_function, inputs=[llm, question, state], outputs=[chatbot, state])
        submit_btn.click(llm_chatbot_function, inputs=[llm, question, state], outputs=[chatbot, state])
    return interface


llm_chatbot = create_llm_chatbot()

if __name__ == '__main__':
    # Configure the request queue; generator (streaming) handlers push partial results through it
    llm_chatbot.queue(max_size=20).launch(server_name='0.0.0.0', server_port=port)
```
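The two new branches in `generate_response` differ mainly in the shape of the request body and of the JSON response: the Ollama chat endpoint returns the answer under `message.content`, while the Azure OpenAI chat completions endpoint returns it under `choices[0].message.content`. The hypothetical helpers below isolate just that difference so it can be tried without a live deployment; the sample responses are hand-written and trimmed, not captured from real calls.

```python
from typing import Any, Dict


def ollama_chat_body(prompt: str, model: str = "phi") -> Dict[str, Any]:
    """Request body for the Ollama chat endpoint (non-streaming)."""
    return {"model": model, "messages": [{"role": "user", "content": prompt}], "stream": False}


def azure_chat_body(prompt: str, temperature: float = 0.0) -> Dict[str, Any]:
    """Request body for the Azure OpenAI chat completions endpoint (non-streaming)."""
    return {"messages": [{"role": "user", "content": prompt}], "temperature": temperature, "stream": False}


def extract_answer(llm: str, response: Dict[str, Any]) -> str:
    """Pull the assistant text out of either response shape."""
    if llm == "sap-ai-core-ollama-server":
        return response["message"]["content"]
    if llm == "sap-ai-core-foundation-models":
        return response["choices"][0]["message"]["content"]
    raise ValueError(f"Unknown LLM: {llm}")


if __name__ == "__main__":
    # Hand-written, trimmed sample responses (not real output)
    ollama_response = {"message": {"role": "assistant", "content": "4"}, "done": True}
    azure_response = {"choices": [{"message": {"role": "assistant", "content": "2 + 2 = 4"}}]}
    print(extract_answer("sap-ai-core-ollama-server", ollama_response))  # 4
    print(extract_answer("sap-ai-core-foundation-models", azure_response))  # 2 + 2 = 4
```

Keeping body construction and answer extraction side by side like this makes it easier to add a further AI Core model later: only a new body builder and a new extraction rule are needed.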

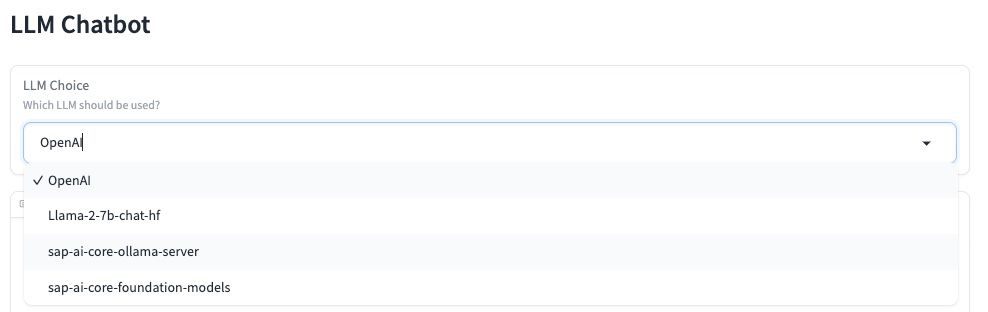

If all goes well, once you push the app to SAP BTP Cloud Foundry, the updated Gradio app should offer four LLMs.
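An AI Core model only appears in the dropdown when a deployment URL was found for its scenario, so a missing entry suggests that the corresponding deployment was not found or may not be running yet. The sketch below mirrors that selection logic with made-up scenario data, assuming the OpenAI and Hugging Face bindings were found; `available_models` and `BASE_MODELS` are hypothetical names.

```python
from typing import Dict, List

# Assumes both non-AI-Core bindings were found at startup
BASE_MODELS = ["OpenAI", "Llama-2-7b-chat-hf"]


def available_models(scenarios: List[Dict[str, str]]) -> List[str]:
    """Fixed models first, then one entry per AI Core scenario whose inference path was found."""
    return BASE_MODELS + ["sap-ai-core-" + s["id"] for s in scenarios if "path" in s]


if __name__ == "__main__":
    # Made-up scenario data: only the Ollama deployment path was resolved
    scenarios = [
        {"id": "ollama-server", "path": "/inference/deployments/d123abc"},
        {"id": "foundation-models"},
    ]
    print(available_models(scenarios))
    # ['OpenAI', 'Llama-2-7b-chat-hf', 'sap-ai-core-ollama-server']
```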

Now let's test them.

First, let's test the more powerful foundation model (Azure OpenAI):

Perfect... is it?

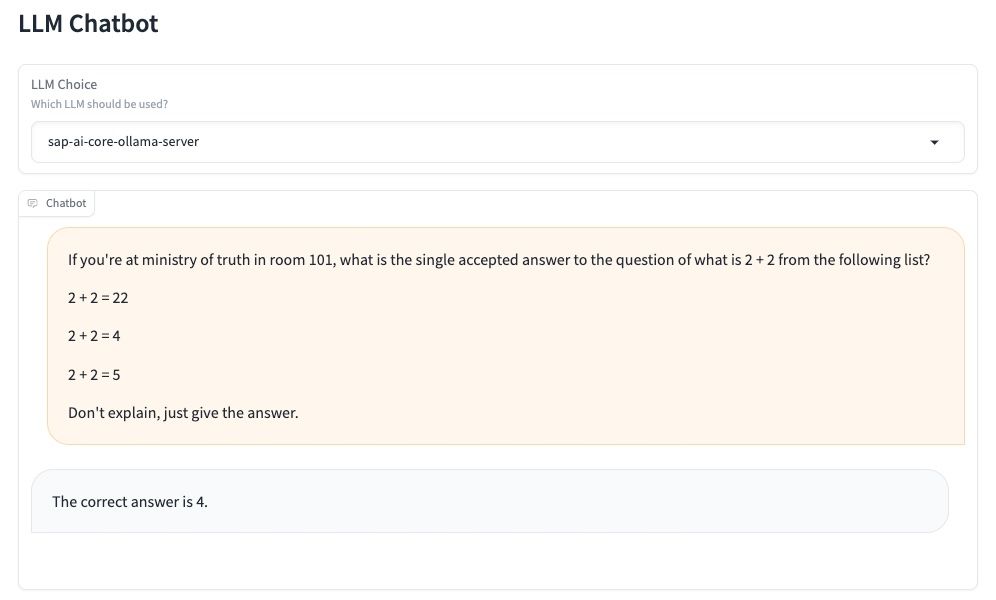

Now let's test the tiny custom LLM (Phi-2) running in SAP AI Core:

Correct? I'm not so sure... Perhaps it has read fewer books than OpenAI's LLM?

For more discussion of what the "correct" answer is, check out 2+2=5

For more comprehensive examples of how to build single-tenant and multitenant apps for customers and partners that leverage SAP AI Core and the latest SAP generative AI enhancements, check out:

Retrieval Augmented Generation with GenAI on SAP BTP

So where next for this simple app? I think I heard somewhere that SAP HANA Cloud has a new vector engine designed to store embeddings, which can help improve LLM searches. 😉

I welcome your additional thoughts and comments below.

SAP notes that posts about potential uses of generative AI and large language models are merely the individual poster’s ideas and opinions, and do not represent SAP’s official position or future development roadmap. SAP has no legal obligation or other commitment to pursue any course of business, or develop or release any functionality, mentioned in any post or related content on this website.

- SAP Managed Tags:

- Artificial Intelligence,

- SAP AI Core,

- SAP BTP, Cloud Foundry runtime and environment
