Update May 17, 2022: As of SP23, support has been added for Amazon EBS gp3 volumes

Update May 3, 2021: As of SP19, support has been added for SUSE Linux Enterprise Server (SLES) 15 and Red Hat Enterprise Linux (RHEL) 7.6 or later including RHEL 8.x

AWS Cloud Manager Introduction

This blog covers the use of the AWS cloud manager (also called AWS Adapter) for SAP Landscape Management (LaMa). Information here is based on SAP Note 2574820. This note should always be consulted for the most up to date information.

Note: Some diagrams may appear too small or blurry to view. In this case please click on the diagram to enlarge it.

Before delving into the cloud manager, I would like to point out that the adapter is not a prerequisite to using LaMa to manage SAP landscapes residing in AWS. It can discover and manage systems that are running in AWS without the adapter. However, the adapter provides significant additional capabilities that leverage the cloud infrastructure such as storage and network to perform advanced operations.

LaMa together with the cloud manager gives you:

- Greater operational continuity through centralized management, visibility and control of your entire SAP landscape residing in AWS Cloud using a single console.

- Reduced time, effort and cost to manage and operate your SAP systems through automation of time-consuming SAP Basis administration tasks.

- Increased operational agility through acceleration of application life cycle management operations and fast response to workload fluctuations.

Many SAP customers are managing SAP workloads in AWS with LaMa. The SAP Product Availability Matrix (PAM) should be consulted to get up to date information on what operating systems are supported in AWS for the corresponding SAP application and what SAP Note is needed. The blog covers what is supported at the time of writing.

The AWS cloud manager is shipped with SAP Landscape Management Enterprise Edition by default but needs to be configured to make it operational. The following components are provided as of LaMa SP11:

- Virtualization adapter (EC2)

- Storage adapter for EBS

- Storage adapter for EFS

As of SAP Landscape Management Enterprise Edition SP15, these are the features available:

- Operations on AWS virtual machines

- Activate (Start)

- Deactivate (Power Off & Stop)

- Resize

- Reboot

- Destroy (Terminate)

- SAP System Relocate

- Provisioning Scenarios (please see complete list in SAP Note 2574820)

- System Snapshots

- System Clone

- System Copy

- System Rename

- System Refresh

- Support for Nitro based AWS instances

- Includes NVMe support with the Storage Adapter for EBS

LaMa & AWS Cloud Manager (click on image to enlarge)

Disclaimer

This blog is published “AS IS”. Any procedures shown are only examples and should be validated in a test environment before being used in a productive system environment. The procedures are only intended to better explain and visualize the features of SAP Landscape Management. Some of the procedures used may differ from other publications as there are multiple ways to achieve the same result. If you use any of the procedures shown, you are doing so at your own risk.

Information in this blog could include technical inaccuracies or typographical errors. Changes may be periodically made.

Sections

- Compatibility/Prerequisites

- Notes and Limitations related to the EBS Storage Adapter

- Notes and Limitations related to the EFS Storage Adapter

- Cloud Manager Configuration

- SAP Landscape Setup - Adaptive Design Principles

- New SAP System

- Network Environment

- EFS Storage Setup

- Installation of SAP Netweaver ASCS

- Installation of SAP HANA

- Installation of SAP Primary Application Server (PAS)

- Install Empty Virtual Machines (Targets)

- Discover/Configure Systems in LaMa

- Scenarios

- SAP System Snapshot

- SAP System Clone

- SAP System Copy

- SAP System Refresh

- Provision New SAP Application Server

- SAP Instance Relocate

Compatibility/Prerequisites

In order to use the full capabilities of the AWS cloud manager (especially important for copy/clone/relocate), your systems must meet the following minimum requirements:

- Up to SP18, the only supported OS is SUSE Linux Enterprise Server 12 (SLES 12)

- SLES 15 is not yet supported

- Red Hat is not yet supported

- Note that some functionality is possible such as standalone Post Copy Automation (PCA), stop, start, custom processes with automation studio etc.

- As of SP19, Red Hat Enterprise Linux (RHEL) 7.6 and later as well as SUSE Linux Enterprise Server (SLES) 15 are supported.

- SUSE package cloud-netconfig-ec2 must not be installed. If you already have it, either remove the package or disable it (to properly handle virtual IP addresses). Refer to SUSE KB 7023633

- For EBS storage adapter, your application data volumes need to be Logical Volumes (e.g. /sapmnt, /usr/sap/<SID>, /hana/shared/<HANASID> etc. residing on /dev/<volume group>/<logical volume>)

- In other words use Logical Volume Manager

- Attached EBS block devices for SAP volumes must be /dev/xvd[f-z]. xvd[a-e] should only be used for OS, swap and other non-SAP application purposes (does not apply to Nitro instances)

- Latest version of SAP Host Agent on all systems

- Latest version of SAP Adaptive Extensions 1.0 EXT

- For copy/refresh operations, PCA needs to be activated on source system

- AWS metadata service access must be available for all managed systems

- Access key and secret key of an IAM user with SDK rights and without 2-factor authentication, and one of the following two choices for AWS policies:

  - Full access without policy restrictions

    - EC2FullAccess

    - S3FullAccess

    - ElasticFileSystemFullAccess

  - Minimal access with set of policies

    - ec2_policy.json (Obtain as attachment download)

    - efs_policy.json (Obtain as attachment download)
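The device naming prerequisite above can be checked with a small shell helper. This is a minimal sketch and not part of LaMa or the adapter: the function name `is_sap_ebs_device` is hypothetical, and it only tests the name pattern from the list (the rule does not apply to Nitro instances).

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the device name is in the range
# reserved for SAP volumes on non-Nitro instances (/dev/xvdf to /dev/xvdz).
is_sap_ebs_device() {
    case "$1" in
        /dev/xvd[f-z]) return 0 ;;
        *)             return 1 ;;
    esac
}

# Example: classify a few device names.
for dev in /dev/xvda /dev/xvdf /dev/xvdz; do
    if is_sap_ebs_device "$dev"; then
        echo "$dev: usable for SAP volumes"
    else
        echo "$dev: reserve for OS, swap or other non-SAP use"
    fi
done
```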

Notes and Limitations related to the EBS Storage Adapter

If you create a tag with the key "Name" the value will be shown as volume name in Advanced Operations -> Storage instead of the EBS volume id. This is recommended as it helps to more easily identify volumes.

Volume types gp2 and io1 are supported (gp3 is supported as of SP23). The target volume after a copy will have the same volume type and, in the case of io1, the same IOPS rate as the source volume.

SAP Landscape Management system snapshot is supported ONLY on EBS. If you have a filesystem layout that includes both EBS and EFS, then be aware that snapshot does not cover the latter.

Notes and Limitations related to the EFS Storage Adapter

This adapter supports EFS directory based copy for SAP Landscape Management system provisioning functionality. This means that directories are copied when needed for provisioning such as copy and clone because EFS does not support snapshot capabilities.

Refer to SAP Note 2574820 for more info.

LaMa Communications

It should be helpful to understand how the various components of LaMa and the adapters communicate with the managed systems in AWS. Please refer to the diagram below.

The green and orange lines from the AWS adapter represent communication via the AWS API endpoints. LaMa therefore needs internet access, either direct or via a proxy.

LaMa AWS Cloud Manager (Adapter) Communications
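To confirm that the LaMa host can reach the AWS API endpoints, directly or through a proxy, a check along the following lines may help. It is only a sketch: the function names are hypothetical, the URL pattern is the standard regional endpoint form (some partitions use a different domain), and any HTTP response is treated as "reachable".

```shell
#!/bin/sh
# Build the regional AWS API endpoint URL for a service prefix
# (for example "ec2" or "elasticfilesystem").
aws_endpoint_url() {
    printf 'https://%s.%s.amazonaws.com\n' "$1" "$2"
}

# Hypothetical check: try to reach an endpoint, optionally through a proxy.
# Any HTTP response counts as reachable; only connection failures fail.
# Usage: check_aws_endpoint <service> <region> [proxy-url]
check_aws_endpoint() {
    url=$(aws_endpoint_url "$1" "$2")
    if [ -n "${3:-}" ]; then
        curl -sS -o /dev/null --max-time 10 --proxy "$3" "$url"
    else
        curl -sS -o /dev/null --max-time 10 "$url"
    fi
}

# Print the endpoints used for EC2/EBS and EFS calls in us-east-1.
aws_endpoint_url ec2 us-east-1
aws_endpoint_url elasticfilesystem us-east-1
```

On the LaMa host you could then run, for example, `check_aws_endpoint ec2 us-east-1 http://proxy.example.com:3128`, where the proxy URL is a placeholder.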

Metadata Service

On each system ensure that the metadata service is available. It should be enabled by default but it is important for the cloud manager to operate so we must test it. This can be verified by running the command below. If you get an output without errors then this is working.

TOKEN=`curl -X PUT "http://169.254.169.254/latest/api/token" -H "X-aws-ec2-metadata-token-ttl-seconds: 21600"` \
&& curl -H "X-aws-ec2-metadata-token: $TOKEN" -v http://169.254.169.254/latest/meta-data/

Cloud Manager Configuration

It is assumed that LaMa has been installed in AWS for this example though not required. LaMa can be located at a customer premises or another hyperscaler.

- To access the Cloud Managers' configuration, go to Infrastructure -> Cloud Managers.

- To configure a new Cloud Manager, choose Add.

- From the list of Installed Cloud Manager Types, choose Amazon (Amazon AWS Cloud Adapter).

- Choose Next.

- Enter the Cloud manager properties, Label and Monitoring Interval -- 300 seconds are recommended

- Enter the additional properties as follows:

- Access Key: Enter the key used for connecting to the Amazon account.

- Secret Key: Enter the key used for connecting to the Amazon account.

- Region: Enter the region where your instances are running.

- Enter Proxy Host, Port, User, and Password*.

* If the SAP Landscape Management system does not have internet access, HTTP proxy can be set for the Amazon Cloud Manager.

To make sure that all provided details are correct, choose Test Configuration.

After you click Save, the virtualization and storage adapters are configured automatically.

Validate them by going to LaMa Operations -> Cloud and Storage views. You can drill down the view by clicking on the adapters.

SAP Landscape Setup - Adaptive Design Principles

Now we will go through an example of setting up the SAP landscape environment. We will cover both EBS and EFS storage.

First let's look at the SAP Landscape Environment we will end up with:

- LaMa 3.0 SP15 Patch Level 2

- AWS Cloud Manager

- Local NFS Storage Manager (needed to handle NFS mounts from ASCS server)**

- **Not needed if using EFS for shared file systems

- Managed system with distributed servers

- SAP NetWeaver 7.5 SP18, HANA 2.0 SPS5

- ASCS, HANA, Application Server

- SAP Host Agent patch 48

- SAP Adaptive Extensions 1.0 patch 56

- Software Provisioning Manager 1.0 SP29

- Software Update Manager 2.0 SP8

- PCA license activated

- OS: SLES 12 SP5

Hostname and SID information for this example is as below:

| TYPE | SID/HANASID | VM Hostname | Virtual Hostname |
| --- | --- | --- | --- |
| ASCS | A4H | a4h-vm1 | a4h-ascs |
| HANA DB | HDB | hdb-vm1 | hdb-db |
| PAS (Primary Application Server) | A4H | a4h-vm2 | a4h-app-0 |
| LaMa | J2E | nm-lama | n/a |
| Target for ASCS | | a4h-vm1-c | a4h-ascs-c |
| Target for HANA | | hdb-vm1-c | hdb-db-clone |
| Target for PAS | | a4h-vm2-c | a4h-app-clone |

Note that there is a 13 character limit for hostname in LaMa.
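Because of this limit, it can be worth checking planned hostnames before creating the machines. The helper below is a small sketch; `hostname_fits_lama` is a hypothetical name, and the third hostname in the loop is an invented example that is one character too long.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the hostname is non-empty and
# no longer than 13 characters.
hostname_fits_lama() {
    [ -n "$1" ] && [ "${#1}" -le 13 ]
}

for h in a4h-vm1 a4h-app-clone a4h-app-clone2; do
    if hostname_fits_lama "$h"; then
        echo "$h: ok (${#h} characters)"
    else
        echo "$h: too long (${#h} characters)"
    fi
done
```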

New SAP System

In this example we will set up the landscape using the Adaptive Design Principles. This is also referred to as Adaptive Computing and in LaMa you will see terms like AC-enabled.

The Adaptive Design has three principles:

- Virtual Hostname & IP

- Virtual hostnames for SAP are bound to virtual IPs

- Network connections are to virtual hostname and not primary hostname of server

- Note: DNS entries need to be A records and not CNAME records

- Dynamic Storage Layout

- Shared connectivity to storage devices

- SAN technologies (EBS) - can be detached and attached to different hosts but one host use at a time

- NAS (Local NFS or EFS) - can be shared simultaneously on multiple hosts

- Central User Management

- User accounts are created in a nameserver such as LDAP

The most important are the first two and these are needed to work with the adapter. So in our setup we focus on these. The central user management is recommended but we can get by with local accounts as an LDAP setup was not readily available to perform the below exercises.

As noted earlier, the EFS storage only supports a limited number of filesystem mount points. We have decided that all HANA filesystems will use EBS.

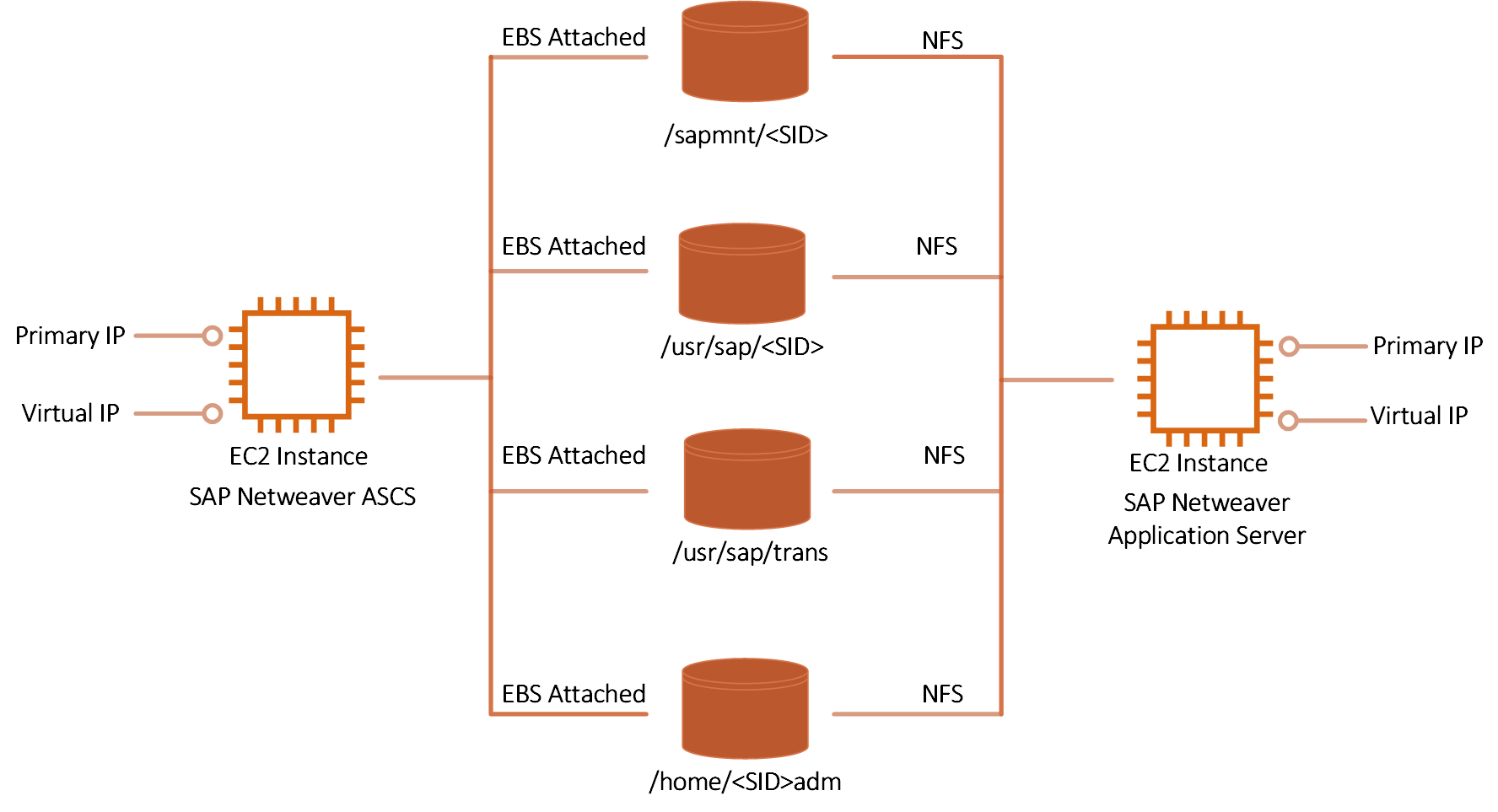

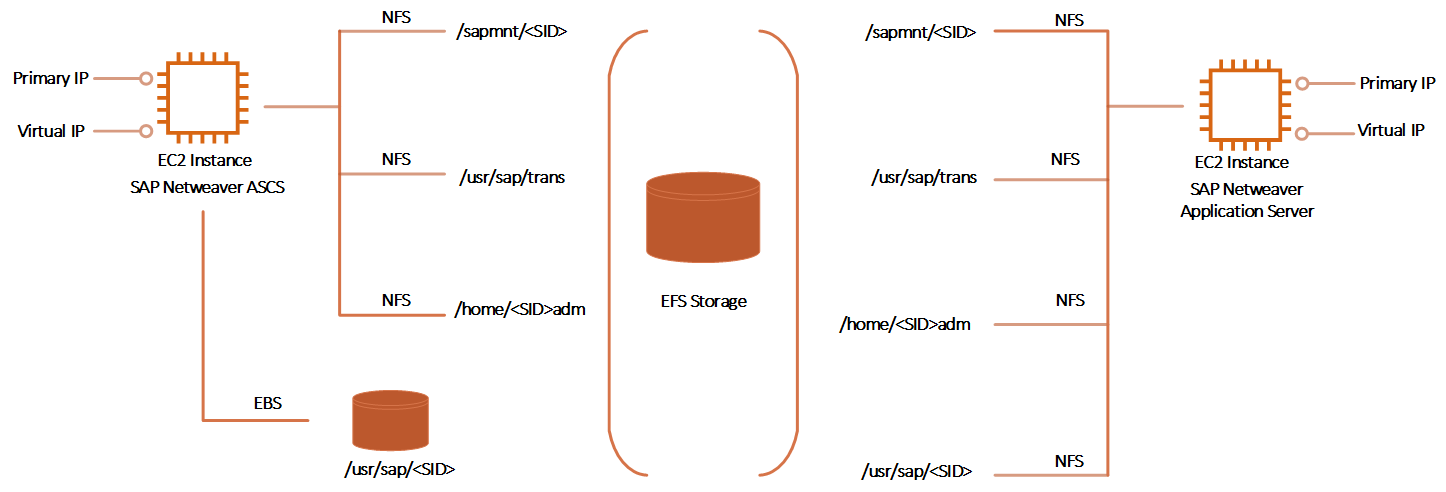

For the ASCS and PAS, we will demonstrate two options.

Option 1: We use EBS attached volumes for the SAP ASCS server and the filesystems will be exported and mounted via NFS (using Local NFS adapter) by the SAP primary application server. Any additional SAP application servers will also mount via NFS from the ASCS server.

Option 2: We can use AWS EFS as the central NFS storage to be mounted on ASCS and PAS. Any additional application server will also mount EFS storage. This is the recommended option as it is more suited for scaleout systems and reduces bottleneck and redundancy issues.

Option 1 could be considered obsolete since AWS has EFS. It is included here as it may be more familiar to those coming from on-premises environments where they had AC-enabled setups with SAN storage adapters and the Local NFS adapter. It also helps to show the difference between using only EBS and EBS+EFS. Lastly, taking system snapshots is not supported with option 2, and that may be important to some. If EFS directories are large, the copy process can take some time, whereas a snapshot is a lot faster.

- Make sure to enable "Automatic Mountpoint Creation" in Setup -> Settings -> Engine of LaMa (described further down)

- If SAP LaMa mounts volumes using the SAP Adaptive Extensions on a virtual machine, the mount point must exist if this setting is not enabled.

- In this example SID is A4H and HANASID is HDB

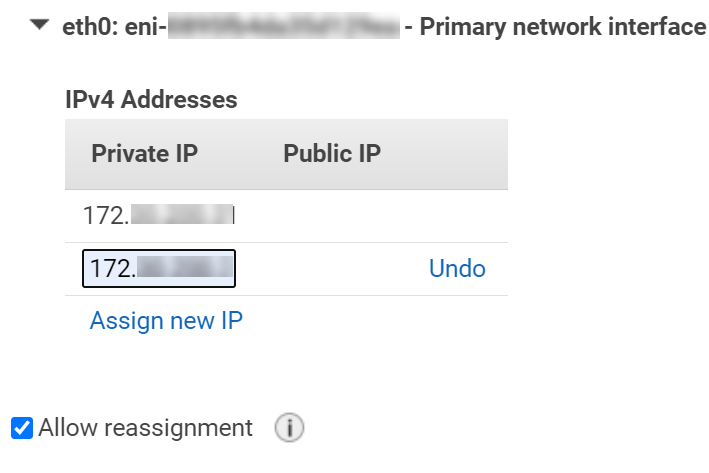

Each server must be configured with a primary IP address (hostname of server) and a secondary IP address (virtual hostname of server).

In the case of Option 1, the SAP NetWeaver ASCS instance needs logical volumes for /sapmnt/<SID>, /usr/sap/<SID>, /usr/sap/trans, and /home/<sid>adm. The SAP application servers will get the additional data via NFS from the ASCS server.

/usr/sap/<SID> does not normally need to be shared but in an AC-enabled set-up this is needed and should be mounted via NFS for the application servers. Without this, additional application servers can not be provisioned.

Adaptive Design Layout Option 1 (EBS + Local NFS) - SAP ASCS and Application Servers (click on image to enlarge)

Adaptive Design Layout Option 2 (EBS + EFS) - SAP ASCS and Application Servers (click on image to enlarge)

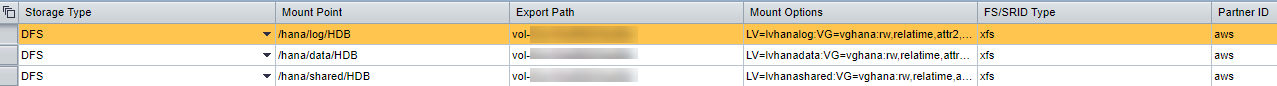

For SAP HANA we will use only EBS and it needs logical volumes for /hana/shared/HANASID, /hana/data/HANASID, and /hana/log/HANASID. Note that /hana/shared/HANASID can also reside on EFS.

Optionally you can also add /hana/backup as a separate volume and filesystem. This also has the option of either residing on EBS or EFS storage.

Please NOTE: /usr/sap/HANASID should not be a separate filesystem and must always remain as part of the OS filesystem.

Adaptive Design Layout - HANA (click on image to enlarge)

Network Environment

The following was done to set up the environment. This is only one way of doing it as there are many different options of setting up the environment.

- A new VPC was created for this landscape environment and a subnet with network of /24 netmask

- Route 53 DNS with private zones for forward and reverse address resolution (or a DNS server of your choice). This is optional and not a mandatory step. If you do not use DNS then make sure to populate the /etc/hosts files of all the systems including LaMa.

- Examples:

- nslookup hdb-db should resolve to correct virtual IP address

- nslookup hdb-db.<domain address> should resolve to correct IP address

- nslookup <IP address> should resolve to hostnames

- Only private addresses were used for the managed systems

- Within the subnet there is open communication between systems

- Optional: A jump server allowing access to the systems (may not be needed for your setup)

- This can also act as the proxy for the LaMa AWS Cloud Manager

- LaMa was also installed in the same VPC and subnet

- You can have LaMa in another VPC, on-premise datacenter or another hyperscaler but would need to be able to communicate with managed servers on specific ports.

Adaptive Design Layout - Network (click on image to enlarge)
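The name resolution checks from the list above can be scripted so that they are easy to repeat on every host, including LaMa. This is a sketch using `getent hosts`, which consults DNS or /etc/hosts depending on the system configuration; the function names are hypothetical, and it does not tell you whether a DNS entry is an A record or a CNAME.

```shell
#!/bin/sh
# Succeeds if the name (or IP address) resolves through the system resolver.
resolves() {
    getent hosts "$1" >/dev/null 2>&1
}

# Report each argument as OK or MISSING; returns 1 if any lookup failed.
check_names() {
    rc=0
    for name in "$@"; do
        if resolves "$name"; then
            echo "OK      $name -> $(getent hosts "$name" | head -n 1)"
        else
            echo "MISSING $name"
            rc=1
        fi
    done
    return "$rc"
}
```

For the example landscape you would call something like `check_names a4h-ascs hdb-db a4h-app-0`, followed by the same call with the corresponding IP addresses to test reverse resolution.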

Installation sequence used for a distributed HANA environment was as shown below. There are other ways to install so below is one way to do it.

- SID:A4H / ASCS with SWPM (sapinst on this server)

- HANASID:HDB / HANA with hdblcm (on this server)

- A4H / HANA database with SWPM (sapinst on application server)

- Application Server with SWPM (sapinst on application server)

- SUM on application server

EFS Storage Setup

This section covers how to set up EFS storage in AWS and how to mount it on your ASCS and PAS. The same EFS filesystem can be used for the repositories.

You have the option of using CLI or the web portal. I will cover the portal procedure.

Go to Amazon EFS --> FileSystems

Click on Create File System

Give it an optional name

Enter the VPC where your SAP systems are residing

- It is not mandatory to use the same VPC as your mount target takes care of this. You can use a different VPC at this point but in this example we kept it simple

Optional: Click on customize to add tags or make other changes such as higher performance. In my case I chose the defaults.

Click on Create

After File System is created click on filesystem

Go to tab “Network”

Click on Create Mount Target

Enter the VPC where your SAP systems are located. This part is mandatory.

Click on "Add Mount Target"

Choose the availability zone of your SAP systems

Enter the subnet where the SAP systems are located

Enter the Security Group of the SAP systems

IP Address can be blank and it will be assigned automatically

Click Save

Note down the file-system-id (and IP address for reference) of your EFS mount target.

FQDN (Fully Qualified Domain Name) is of the format:

- file-system-id.efs.aws-region.amazonaws.com

- In our case it is: fs-xxxx.efs.us-east-1.amazonaws.com
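Since the FQDN is needed in several mount commands and fstab entries later on, it can be convenient to build it once from the file system ID and region. A minimal sketch, with `efs_fqdn` as a hypothetical helper name:

```shell
#!/bin/sh
# Build the EFS DNS name from a file system ID and an AWS region.
# Usage: efs_fqdn <file-system-id> <aws-region>
efs_fqdn() {
    printf '%s.efs.%s.amazonaws.com\n' "$1" "$2"
}

# Example with the placeholder ID used in this blog.
efs_fqdn fs-xxxx us-east-1
```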

Now we are ready to create the directories on the EFS filesystem to be mounted on the SAP systems

Go to your ASCS SAP system on the above subnet.

Create a temporary mount point

#mkdir /a

Mount the EFS filesystem

#mount -t nfs -o nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2 <FQDN>:/ /a

If the mount command fails check the security group of your SAP systems to ensure NFS traffic is allowed on the "inbound" tab. Source should be the security group of the EFS.

Create the directories on EFS

#mkdir -p /a/sapshares/trans /a/sapshares/sapmnt/<SID> /a/sapshares/home/<sid>adm /a/sapshares/sap/<SID>

Create mount points

#mkdir -p /usr/sap/trans /sapmnt/<SID> /home/<sid>adm /usr/sap/<SID>

Unmount the temporary mount from EFS

#umount /a

Mount EFS on the new mount points

#mount -t nfs -o nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2 <fqdn>://sapshares/trans /usr/sap/trans

#mount -t nfs -o nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2 <fqdn>://sapshares/sapmnt/A4H /sapmnt/A4H

#mount -t nfs -o nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2 <fqdn>://sapshares/home/a4hadm /home/a4hadm

(PAS only) #mount -t nfs -o nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2 <fqdn>://sapshares/sap/A4H /usr/sap/A4H

Important: You MUST use "//" after "<FQDN>:". Even though the mount command works with the single "/", you will run into issues during copy/clone operations. Also the double "//" must be configured in LaMa when doing the "Retrieve Mount List" as shown in the configuration section.
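Because a single "/" mounts without complaint, this mistake is easy to miss. The following sketch scans fstab-style lines for EFS sources that lack the double slash; the function name `check_efs_double_slash` is hypothetical, and the pattern only looks at entries whose source contains `.efs.<region>.amazonaws.com:`.

```shell
#!/bin/sh
# Hypothetical check: read fstab-style lines on stdin and report EFS sources
# written as "<fqdn>:/path" instead of "<fqdn>://path".
# Exit status is 1 if any single-slash EFS source is found, else 0.
check_efs_double_slash() {
    awk '
        /^[[:space:]]*#/ { next }
        $1 ~ /\.efs\..*\.amazonaws\.com:/ {
            if ($1 !~ /\.amazonaws\.com:\/\//) {
                print "single slash: " $1
                bad = 1
            }
        }
        END { exit bad + 0 }
    '
}
```

On a source system it could be run as `check_efs_double_slash < /etc/fstab`.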

Enter the above mounts in /etc/fstab for automatic mount on boot (shown in sections for ASCS and PAS installation).

- Do this only on the source systems

- LaMa will handle the mounts on the target systems

Installation of SAP Netweaver ASCS

Wherever you see a double asterisk (**), it means there is an alternative step to use EFS or a general note regarding EFS.

Create a new virtual machine

- SLES 12

- t2.large (Not a recommendation; ok for a test; refer to link for minimum sizing)

- OS and swap are the only disks added during the installation

- Only primary IP address is assigned initially

Perform these additional steps after virtual machine has been created:

- Test metadata service as described above

- Perform all OS related prerequisites for Netweaver

- Disable cloud-netconfig-ec2 as described in the prerequisite section

- Create an EBS volume of approximately 50GB size and attach to VM**

- **Smaller size of about 10G if using EFS since we only need it for /usr/sap/SID as non-shared filesystem. All other filesystems will be on EFS

- On the OS, add the attached device to logical volume manager**

- Volume Group of vgascs

- Logical volumes of sapmnt (5G), sap (10G), trans (30G), home (5G)

- **For EFS only create logical volume "sap" (10G)

- Create xfs filesystem on each volume and mount as**:

- **For EFS only do /usr/sap/<SID>

- /sapmnt/<SID>

- /usr/sap/<SID>

- /usr/sap/trans

- /home/<sid>adm

- Add above to /etc/fstab**

- **For EFS, only /usr/sap/<SID> is added

- Note: Do this only on source systems. On target systems (e.g. copy/clone) this is not needed as LaMa will mount the filesystems

/dev/vgascs/sapmnt /sapmnt/A4H xfs defaults,nofail 0 2

/dev/vgascs/sap /usr/sap/A4H xfs defaults,nofail 0 2

/dev/vgascs/trans /usr/sap/trans xfs defaults,nofail 0 2

/dev/vgascs/home /home/a4hadm xfs defaults,nofail 0 2
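The volume group and logical volumes behind these fstab entries can be created with the standard LVM tools. The sketch below only prints the commands so they can be reviewed before being run as root; `plan_lvm` is a hypothetical helper and /dev/xvdf is an assumed device name. Note that LVM reserves a little space for metadata, so the EBS volume needs to be slightly larger than the sum of the logical volume sizes.

```shell
#!/bin/sh
# Print (do not execute) the LVM and filesystem commands for one volume group.
# Usage: plan_lvm <device> <volume-group> <lv-name>:<size> ...
plan_lvm() {
    dev=$1; vg=$2; shift 2
    echo "pvcreate $dev"
    echo "vgcreate $vg $dev"
    for spec in "$@"; do
        lv=${spec%%:*}      # text before the colon
        size=${spec#*:}     # text after the colon
        echo "lvcreate -L $size -n $lv $vg"
        echo "mkfs.xfs /dev/$vg/$lv"
    done
}

# ASCS layout from the list above (option 1, EBS only).
plan_lvm /dev/xvdf vgascs sapmnt:5G sap:10G trans:30G home:5G
```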

- **If using EFS (option 2) instead of EBS on ASCS, go to section EFS setup and return to this step

- For EFS add the mounts in /etc/fstab

- Note: Do this only on source systems. On target systems (e.g. copy/clone) this is not needed as LaMa will mount the filesystems

/dev/vgascs/sap /usr/sap/A4H xfs defaults,nofail 0 2

<EFS ID>.efs.<aws region>.amazonaws.com://sapshares/home/a4hadm /home/a4hadm nfs nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport 0 0

<EFS ID>.efs.<aws region>.amazonaws.com://sapshares/sapmnt/A4H /sapmnt/A4H nfs nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport 0 0

<EFS ID>.efs.<aws region>.amazonaws.com://sapshares/trans /usr/sap/trans nfs nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport 0 0

- Note the use of double slash "//" right after the EFS fully qualified domain name. This is important.

- Add one additional (secondary) private IP address for the virtual hostname via the AWS console or CLI

- Make sure to check or use the option “Allow reassignment” so that LaMa can move it during a relocate operation

- Ensure that you can communicate with the host using the virtual hostname -- e.g. from LaMa

- Enable NFS server - since the primary app server will mount from this host**

- **Skip for EFS

- Add to /etc/exports**

- **Skip for EFS

- Note: Not needed on target hosts as handled by LaMa

/home/a4hadm *(rw,no_root_squash,sync,no_subtree_check)

/sapmnt/A4H *(rw,no_root_squash,sync,no_subtree_check)

/usr/sap/A4H *(rw,no_root_squash,sync,no_subtree_check)

/usr/sap/trans *(rw,no_root_squash,sync,no_subtree_check)

- Export all filesystems above with "exportfs -a"**

- **Skip for EFS

- Install SAP Host Agent - latest version

- Install SAP ADAPTIVE EXTENSION 1.0 EXT -- latest version (reference)
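For the secondary IP address step in the list above, the AWS CLI equivalent of the console option is `aws ec2 assign-private-ip-addresses` with `--allow-reassignment`. The sketch below only prints the command for review; `plan_secondary_ip` is a hypothetical helper, and the interface ID and IP address are placeholders.

```shell
#!/bin/sh
# Print the AWS CLI command that assigns a secondary private IP address to a
# network interface and allows it to be reassigned later.
# Usage: plan_secondary_ip <network-interface-id> <private-ip>
plan_secondary_ip() {
    echo "aws ec2 assign-private-ip-addresses" \
         "--network-interface-id $1" \
         "--private-ip-addresses $2" \
         "--allow-reassignment"
}

# Placeholder values; replace them with your interface ID and virtual IP.
plan_secondary_ip eni-0123456789abcdef0 172.31.0.50
```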

Run Software Provisioning Manager (SWPM, on this host) and use the virtual hostname a4h-ascs for the ASCS Instance Host Name

sapinst SAPINST_USE_HOSTNAME=a4h-ascs

Add the following profile parameter to the SAP Host Agent profile, which is located at /usr/sap/hostctrl/exe/host_profile. For more information, see SAP Note 2628497.**

- **Skip for EFS

acosprep/nfs_paths=/home/,/usr/sap/trans,/sapmnt/,/usr/sap/

Note that we did not include SID in the paths. This is because when LaMa re-exports and mounts during a copy operation, the new SID directories need to be created one level higher in the directory structure.**

- **Skip for EFS

Note: The location of the host_profile file is not part of the filesystem that gets cloned when performing a clone or copy operation. Therefore this entry will need to be added by hand on the target ASCS host. If you do not then you may get an error such as "Path 'xyz' is not allowed for nfs reexports".
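Since the parameter has to be added by hand on both the source and the target ASCS host, a small idempotent helper avoids duplicate entries. This is a sketch only: `add_nfs_paths` is a hypothetical function, and the demonstration writes to a scratch file rather than the live host_profile.

```shell
#!/bin/sh
# Append the NFS re-export parameter to a host_profile file unless an
# acosprep/nfs_paths entry is already present.
# Usage: add_nfs_paths <host_profile-path>
add_nfs_paths() {
    profile=$1
    param='acosprep/nfs_paths=/home/,/usr/sap/trans,/sapmnt/,/usr/sap/'
    if grep -q '^acosprep/nfs_paths' "$profile" 2>/dev/null; then
        echo "already set in $profile"
    else
        echo "$param" >> "$profile"
        echo "added to $profile"
    fi
}

# Demonstration against a scratch file; the second call changes nothing.
scratch=$(mktemp)
add_nfs_paths "$scratch"
add_nfs_paths "$scratch"
cat "$scratch"
rm -f "$scratch"
```

On a real host the argument would be /usr/sap/hostctrl/exe/host_profile, run as root.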

Installation of SAP HANA -- HANASID=HDB

We will use only EBS for HANA.

Create a new virtual machine

- SLES 12

- t2.2xlarge (Not a recommendation; ok for a test; refer to this doc and AWS for sizing)

- OS and swap are the only disks added during the installation

- Only primary IP address is assigned initially

Perform these additional steps after virtual machine has been created

- Test metadata service as described above

- Perform all OS related prerequisites for HANA install (refer to HANA installation guide)

- Disable cloud-netconfig-ec2 as described in the prerequisite section above

- Create a volume of approximately 210GB size and attach to VM

- On the OS add the attached device to logical volume manager

- Volume Group of vghana

- Logical volumes of lvhanashared (80G), lvhanadata (80G), lvhanalog (50G)

- Note that if needed you can do expansion of EBS volumes, logical volumes and filesystems (online without a reboot)

- Create xfs filesystem on each volume and mount as:

- /hana/shared/HANASID

- /hana/data/HANASID

- /hana/log/HANASID

- Add to /etc/fstab

- Note: Only on source systems. LaMa will handle this on target systems

- Add one additional IP address to the virtual hostname (refer to ASCS install above)

- Verify forward and reverse lookups for all the systems listed in table

- Install SAP Host Agent - latest version

- Install SAP ADAPTIVE EXTENSION 1.0 EXT -- latest version (reference)

In this example we install SAP HANA using the command-line tool hdblcm

- Using parameter "--hostname" to provide the virtual hostname

hdblcm --hostname hdb-db

Installation of SAP HANA -- SID=A4H

Now we will install HANA database (tenant) for SID A4H. This will be done with SWPM on the primary application server.

Proceed to next section.

Install SAP NetWeaver Application Server for SAP HANA

Wherever you see a double asterisk (**), it means there is an alternative step to use EFS or a general note regarding EFS.

Create a new virtual machine

- Same steps as ASCS

Perform these additional steps after virtual machine has been created

- Test metadata service as described above

- Perform all OS related prerequisites for Netweaver

- Disable cloud-netconfig-ec2 as described in the prerequisite section above

- Create the mount points for /home/a4hadm,/usr/sap/trans,/sapmnt/A4H,/usr/sap/A4H

- Note that you do not need additional EBS volumes**

- **This is applicable for both option 1 and 2

- Append following to /etc/fstab**

- Note: Do this only on source systems. On target systems (e.g. copy/clone) this is not needed as LaMa will mount the filesystems

a4h-ascs:/home/a4hadm /home/a4hadm nfs defaults 0 0

a4h-ascs:/sapmnt/A4H /sapmnt/A4H nfs defaults 0 0

a4h-ascs:/usr/sap/trans /usr/sap/trans nfs defaults 0 0

a4h-ascs:/usr/sap/A4H /usr/sap/A4H nfs defaults 0 0

- ** For EFS the fstab will look like:

- Note: Do this only on source systems. On target systems (e.g. copy/clone) this is not needed as LaMa will mount the filesystems

<EFS ID>.efs.<aws region>.amazonaws.com://sapshares/sap/A4H /usr/sap/A4H nfs nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport 0 0

<EFS ID>.efs.<aws region>.amazonaws.com://sapshares/home/a4hadm /home/a4hadm nfs nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport 0 0

<EFS ID>.efs.<aws region>.amazonaws.com://sapshares/sapmnt/A4H /sapmnt/A4H nfs nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport 0 0

<EFS ID>.efs.<aws region>.amazonaws.com://sapshares/trans /usr/sap/trans nfs nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport 0 0

- Mount the above

- Add one additional IP address to the virtual hostname (refer to ASCS install above)

- Verify forward and reverse lookups for all the systems listed in table

- Install SAP Host Agent - latest version

- Install SAP ADAPTIVE EXTENSION 1.0 EXT -- latest version

Make sure that UID for sapadm and GID for sapsys created by the host agent installations match what you have on the ASCS server. If not make changes to ensure they match.

- Start SAP Software Provisioning Manager (SWPM) using the virtual hostname of the primary application server. Also use the parameter to specify the HDB user store.

SWPM Run 1 - On the Application Server:

sapinst HDB_USE_IDENT=SYSTEM_A4H SAPINST_USE_HOSTNAME=a4h-app-0

In the first run use the option to install HANA as part of the distributed system install

- Database connection details

- Database Host = hdb-db

- Instance # of SAP HANA Database (same as initially set during hdblcm)

- HANASID = HDB

SWPM Run 2 - On the Application Server

After above is completed, run SWPM again but this time install the application server

- Use the same directory structure as ASCS (i.e. mounted from ASCS)**

- **Same approach for EFS

Check HDBUSERSTORE for HANA Client:

To avoid issues later with provisioning such as clone, make sure that hdbuserstore is set up correctly. If the database connection does not point to the virtual hostname then you need to fix it. As <sid>adm on PAS do the following:

To Check:

a4hadm% hdbuserstore list

DATA FILE : /home/a4hadm/.hdb/SYSTEM_A4H/SSFS_HDB.DAT

KEY FILE : /home/a4hadm/.hdb/SYSTEM_A4H/SSFS_HDB.KEY

KEY DEFAULT

ENV : hdb-db:30013

USER: SAPABAP1

DATABASE: HDB

To Fix (if not correct):

a4hadm% hdbuserstore -i SET DEFAULT hdb-db:30013@HDB SAPABAP1

In HANA 2.0, which is multi-tenant by default, port 3<instance #>13 is for SystemDB. Single-tenant is typically 3<instance #>15.
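The port numbering described here can be expressed as a small helper, which is handy when the instance number is not 00. This is a sketch of the rule stated above only; `hana_sql_port` and its `systemdb`/`single` keywords are hypothetical names.

```shell
#!/bin/sh
# Derive the SQL port from a two-digit HANA instance number.
# Usage: hana_sql_port <instance-number> <systemdb|single>
hana_sql_port() {
    case "$1" in
        [0-9][0-9]) ;;
        *) echo "instance number must be two digits: $1" >&2; return 1 ;;
    esac
    case "$2" in
        systemdb) suffix=13 ;;
        single)   suffix=15 ;;
        *) echo "unknown database type: $2" >&2; return 1 ;;
    esac
    printf '3%s%s\n' "$1" "$suffix"
}

hana_sql_port 00 systemdb   # prints 30013
hana_sql_port 00 single     # prints 30015
```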

Install Empty/Shell Virtual Machines (Targets)

We will clone and copy to existing hosts. Therefore we need additional machines that are similar in spec to the source.

- Create virtual machine for ASCS, HANA, Application Server

- Hostnames and virtual hostnames per table

- All of the above with same OS as source and meeting prerequisites for SAP

- Install same version of SAP Host Agent and Adaptive Extensions

- Test metadata service as described above

- Ensure <sid>adm accounts are created with same UID and GID as source

- Ensure sapadm is created with same UID and GID as source

- Ensure <hanasid>adm is created with same UID and GID as source

- Make sure that the home directory is created: /usr/sap/<HANASID>/home

- Ensure NFS server is enabled on ASCS**

- **Not needed if using option 2 EFS on source

- On the HANA target system ensure /usr/sap/sapservices file exists and identical to source
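Since this example uses local accounts, the UID and GID checks in the list above come down to comparing passwd entries between source and target. The helper below is a sketch that reads a passwd-format file; `uid_gid` is a hypothetical name, and with a central user store you would query that instead.

```shell
#!/bin/sh
# Print "uid:gid" for a user from a passwd-format file (default /etc/passwd).
# Returns non-zero if the user is not found.
# Usage: uid_gid <user> [passwd-file]
uid_gid() {
    awk -F: -v u="$1" '
        $1 == u { print $3 ":" $4; found = 1 }
        END { exit !found }
    ' "${2:-/etc/passwd}"
}
```

Running `uid_gid a4hadm`, `uid_gid hdbadm` and `uid_gid sapadm` on the source and on each target should give identical output on both sides.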

Discover/Configure Systems in LaMa

Automatic Mount Point Creation

- Enable "Automatic Mountpoint Creation"

- Go to Setup -> Settings -> Engine

- Enable "Automatic Mountpoint Creation"

- Mountpoint Deletion is optional

Pool Configuration

- Configure Pool

Repository Creation

- Create Repository for Software Provisioning Manager (needed for System Copy and Rename)

- First step is to ensure that your NFS server has the below directory structure. Important ones are the two sub-directories InstMaster and Archives. The parent directory can be anything. In my case it is /Repository

- /Repository/InstMaster

- /Repository/Archives

- In my case I used AWS EFS** as my NFS server. The above directory structure is created after you have mounted ip_address_of_efs:/ on temp_mount_point on any host.

- You can use FQDN instead of IP address

- Refer to section for creating EFS filesystem

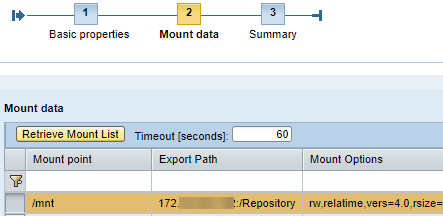
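Creating the two sub-directories is a one-line job once the NFS or EFS root is mounted. A minimal sketch, with `make_repo_layout` as a hypothetical helper and a temporary directory standing in for the mounted share:

```shell
#!/bin/sh
# Create the sub-directories that the repository needs below a parent directory.
# Usage: make_repo_layout <parent-dir>
make_repo_layout() {
    mkdir -p "$1/InstMaster" "$1/Archives"
}

# Demonstration in a scratch directory instead of a real mount point.
demo=$(mktemp -d)
make_repo_layout "$demo/Repository"
ls "$demo/Repository"
rm -rf "$demo"
```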

- Mount nfs-server:/Repository on /mnt of ascs server (a4h-vm1)

- Populate InstMaster with latest SWPM (e.g. SAPCAR -xvf SWPM10SP29_2-20009701.SAR -R /mnt/InstMaster)

- Populate Archives with the SAP Kernel and Netweaver Installation Export for your ABAP application server

- It is a good idea to also add SAPHOSTAGENT and SAP Adaptive Extensions

- Add the nfs server mount in /etc/fstab of the ASCS server so that it is mounted on next reboot

- Infrastructure -> Repositories -> Add Repository -> Software Repositories -> Add Repository

- We added the host name of the ASCS server, which has the mount point for the NFS server. This gives LaMa the NFS server information it needs to mount the repository on the target servers. The actual mount will be done using the EFS NFS mount target.

- Select the mount point from the list.

- In the end you will have something like this:

- We now have the repository created. Next create the configuration for the above. Highlight the repository and click on "Add Configuration". Fill in the info as below.

- Repeat repository creation process for Dialog Instance Install (Application Server Install)

- Also repeat configuration process for Dialog Instance Install

- In the end you will see your repositories as below:
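The repository directory structure described in this section can be sketched as follows. This is only an illustration: it uses a temporary directory in place of the real temp_mount_point, and the fstab options ("defaults") are my assumption, not something prescribed by LaMa or EFS. Check the mount options recommended for your NFS server before using such a line.

```python
import tempfile
from pathlib import Path

# The two sub-directories named in this section.
REQUIRED_SUBDIRS = ("InstMaster", "Archives")


def create_repository_layout(mounted_root):
    """Create <mounted_root>/Repository with the two required sub-directories."""
    repo = Path(mounted_root) / "Repository"
    for sub in REQUIRED_SUBDIRS:
        (repo / sub).mkdir(parents=True, exist_ok=True)
    return repo


def fstab_entry(nfs_server, export="/Repository", mount_point="/mnt", options="defaults"):
    """Build an /etc/fstab line; the options value is an assumption."""
    return f"{nfs_server}:{export} {mount_point} nfs {options} 0 0"


# A temporary directory stands in for the host's temp_mount_point.
with tempfile.TemporaryDirectory() as temp_mount_point:
    repo = create_repository_layout(temp_mount_point)
    created = sorted(p.name for p in repo.iterdir())
    print(created)

print(fstab_entry("nfs-server"))
```

On a real host you would pass the directory where the NFS export is mounted, and append the generated line to /etc/fstab on the ASCS server.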

Network Configuration

- Add Network: Infrastructure -> Network Components -> Network -> Add Network

- Mandatory fields should be enough for this exercise. Example:

- Name: us-east-1d

- Subnet Mask: 255.255.255.0

- Broadcast Address: 172.xx.xx.255

- Add network assignment: Infrastructure -> Network Components -> Network -> Assignment

- Optional: If you have a nameserver such as LDAP then you configure it. This is useful for creating needed accounts in target systems during provisioning scenarios (e.g. system copy). However, in this example we are using local accounts.

- We will need to create accounts on target hosts manually

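If you are unsure which broadcast address belongs to a subnet, it can be derived from any address in the subnet plus the subnet mask. Here is a small sketch using Python's standard ipaddress module. The subnet 172.31.64.0 is a hypothetical example, since the real addresses are masked in this blog.

```python
import ipaddress


def broadcast_for(subnet_address, subnet_mask):
    """Return the broadcast address for a subnet given an address and its mask."""
    # strict=False lets us pass any host address inside the subnet.
    network = ipaddress.IPv4Network(f"{subnet_address}/{subnet_mask}", strict=False)
    return str(network.broadcast_address)


# Hypothetical subnet, for illustration only.
example = broadcast_for("172.31.64.0", "255.255.255.0")
print(example)
```

The result is the value to enter in the Broadcast Address field for that subnet.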

Discovery

- Discover hosts and assign to pool

- Detect using host agent and account sapadm

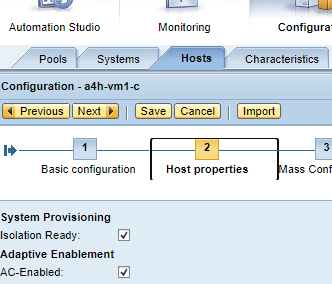

- For source hosts mark them as AC-Enabled

- For the target hosts for copy or clone, mark them both for AC-enabled and isolation ready. This means the host can be fenced (uses iptables).

- In LaMa Operations -> Hosts you should see something like below

- Discover Instances

- Detect using a4hadm for ASCS and Application Server

- Detect using hbdadm for HANA and SYSTEM for database

- Edit instance ASCS and under instance properties ensure AC-Enabled is selected and verify that the virtual hostname is used for communication

- Next go to Mount point and uncheck "Automounter" followed by click on "Retrieve Mount List". Only keep the below**:

- **If using EFS, your mount list will differ. It will show NETFS as storage type with different export path and mount options

- **If using EFS, export path will differ as shown below.

- Note the double slash, which must be included. If it is not retrieved automatically, edit the line and enter the extra "/".

- Repeat for PAS instance. This time you will get NFS list (Storage Type = NETFS).**

- **If using EFS, the list will be similar to ASCS except for /usr/sap/A4H, which will also be mounted from EFS. In this case as well, do not forget the double slash.

- Repeat for HANA instance. Only keep the below:

- In LaMa Operations -> Systems, you should see something like below:

Local NFS Adapter

- Configure Local NFS Storage Adapter (needed as we are using ASCS as NFS server). Configuration is straightforward.**

- **Skip the "Local NFS" if using EFS - Refer to EFS setup section to use option 2

- Infrastructure -> Storage Managers -> Add and select "SAP Local NFS Server Support"

- Enter Information as below and click on Test Configuration. If successful, click on save.

- LaMa automatically tests the ASCS NFS server already discovered above

Configure Source System Provisioning & RFC

- Set up the source system for copy, clone, rename and application server installation (plus anything else you want to use it for, such as HANA Replication)

- Once you have configured the RFC destination, retrieve the version of the system. Initially LaMa displays the kernel version (e.g. 7.53); once the RFC destination is available, it retrieves the product version (e.g. 7.50).

PCA Activation

- Install PCA License on source system

- Operations -> Systems Tab -> System -> Install ABAP PCA License

Scenarios

Now that our environment is built and configured using Adaptive Design, we are ready to execute some use cases. The scenarios to be covered are:

- Snapshot the System A4H -- Option 1 storage only (not applicable if using EFS)

- Clone System A4H

- Copy System A4H

- Provision additional Application Server for A4H

- Relocate an instance of A4H to another virtual machine

Snapshot System A4H

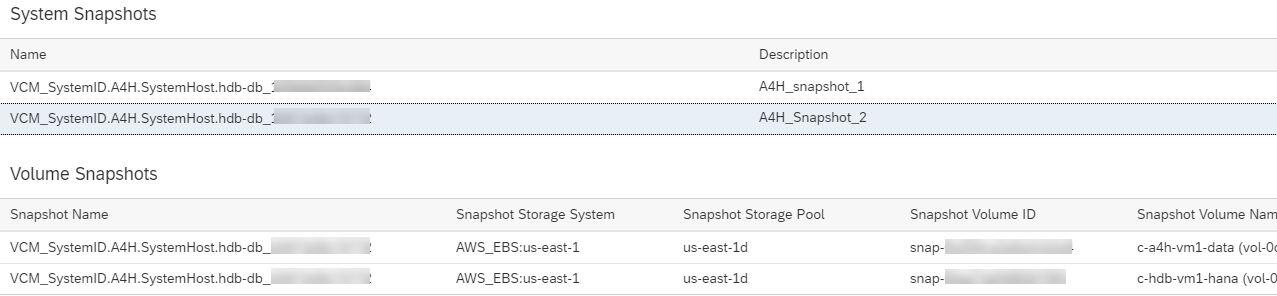

Amazon EBS provides the ability to take point-in-time, crash-consistent snapshots across multiple EBS volumes**.

** If using EFS for shared filesystems, no system snapshot can be performed. This scenario is only applicable if using option 1 (i.e. EBS with Local NFS).

The sequence of the snapshot for our distributed system:

- Snapshot of volumes on the HANA system containing /hana/shared/<HANASID>, /hana/data/<HANASID>, /hana/log/<HANASID> and, if applicable, /hana/backup

- In parallel, snapshot of ASCS volumes containing /sapmnt/<SID>, /usr/sap/<SID>, /usr/sap/trans, /home/<sid>adm

The application server (PAS) filesystems are NFS mounts from the ASCS server, so they do not need a snapshot of their own.
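The sequence above can be sketched as a small plan that runs the HANA and ASCS snapshots in parallel and skips the PAS. This is only an illustration of the ordering, not how LaMa or the AWS adapter is implemented: snapshot_host is a hypothetical placeholder that returns labels instead of calling any AWS API.

```python
from concurrent.futures import ThreadPoolExecutor

# Filesystems per role, taken from the list above. The PAS has none because
# its filesystems are NFS mounts from the ASCS server.
SNAPSHOT_PLAN = {
    "HANA": ["/hana/shared/<HANASID>", "/hana/data/<HANASID>",
             "/hana/log/<HANASID>", "/hana/backup"],
    "ASCS": ["/sapmnt/<SID>", "/usr/sap/<SID>", "/usr/sap/trans", "/home/<sid>adm"],
    "PAS": [],
}


def snapshot_host(role, filesystems):
    """Hypothetical placeholder: a real implementation would snapshot the
    EBS volumes behind these filesystems."""
    return role, [f"snapshot-of:{fs}" for fs in filesystems]


def take_system_snapshot(plan):
    """Snapshot every role that owns volumes, all roles in parallel."""
    jobs = {role: fs for role, fs in plan.items() if fs}
    with ThreadPoolExecutor(max_workers=max(len(jobs), 1)) as pool:
        futures = [pool.submit(snapshot_host, role, fs) for role, fs in jobs.items()]
        return dict(f.result() for f in futures)


result = take_system_snapshot(SNAPSHOT_PLAN)
for role, snapshots in result.items():
    print(role, snapshots)
```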

Follow the steps below to perform the system snapshot.

Provisioning -> Systems -> A4H -> Provisioning -> Manage System Snapshots -> Take System Snapshot

With HANA you can perform an online snapshot.

After completion go to Provisioning -> Systems -> A4H -> Provisioning -> Manage System Snapshots -> Display or Remove Snapshots

If you click on the image to enlarge it, you will see that snapshots of the volumes attached to the HANA and ASCS instances were taken. You have the option to delete the snapshots.

These snapshots can be used as the source when cloning the system rather than taking a new snapshot in order to speed up the process a bit.

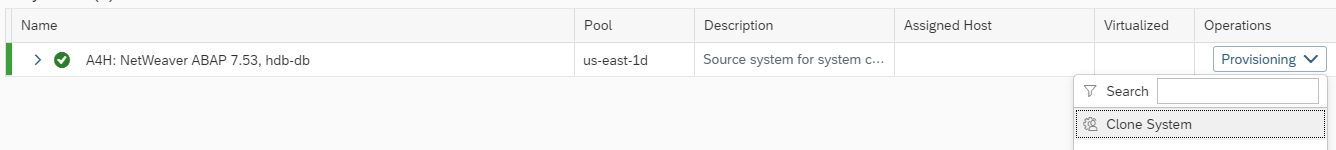

System Clone

We can now perform a system clone operation. We have the option of using a pre-existing snapshot or performing a new snapshot as part of the clone operation**

**In the case of EFS you cannot use a pre-existing snapshot, as system snapshots are not applicable

The basic high-level sequence of the clone for our distributed system:

- Snapshot of volumes on the HANA system

- In parallel snapshot of ASCS**

- Convert the snapshots to new volumes

- Attach the new volumes to HANA and ASCS

- Create mount points and mount the filesystems on the above attached volumes

- Attach virtual IP addresses to target servers and plumb the interfaces

- Fence the servers to prevent any connection to source system

- Make changes to hostname resolution (on target hosts) such that the source virtual hostnames point to the virtual IP on the cloned servers (example: hdb-db will point to the same IP as hdb-db-clone). This is done to successfully start the SAP application without going through a lengthy rename process.

- Export the attached volumes via NFS from ASCS host**

- Mount filesystems on application server host via NFS

- Start SAP application
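The hostname resolution step in the sequence above is the least intuitive one, so here is a minimal sketch of its effect, expressed as /etc/hosts style lines. The exact mechanism LaMa uses is not shown in this blog, so treat the format as an assumption. The IP address is hypothetical; hdb-db and hdb-db-clone are the example names used above.

```python
def clone_hosts_entries(virtual_hosts):
    """Build hosts-file lines that make the source virtual hostname resolve
    to the clone's virtual IP on the (fenced) target hosts.

    virtual_hosts: list of (source_name, clone_name, clone_virtual_ip).
    """
    lines = []
    for source_name, clone_name, clone_ip in virtual_hosts:
        # Both names point to the same IP, so the cloned SAP system can start
        # under the source hostnames without a rename.
        lines.append(f"{clone_ip} {clone_name} {source_name}")
    return lines


# Hypothetical IP address, for illustration only.
EXAMPLE = [("hdb-db", "hdb-db-clone", "172.31.64.50")]
entries = clone_hosts_entries(EXAMPLE)
print("\n".join(entries))
```

Because the target hosts are fenced, the source hostname can safely resolve to the clone on those hosts without affecting the real source system.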

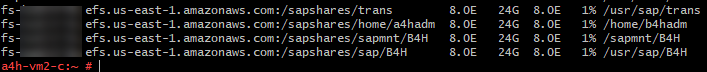

**For EFS, a copy of the applicable directories is done by LaMa and then mounted on ASCS and PAS. See screenshot below for target PAS where /sapmnt/A4H is copied to A4H_clone_* and mounted as /sapmnt/A4H. Also /usr/sap/A4H is copied to new directory (for PAS only).

Follow the steps below to perform the system clone.

Provisioning -> Clone System

Choose your target hosts. Make sure to match the target to the source.

Change the hostnames to match your target's fully qualified domain name (will work without FQDN as well). Do not press Next yet.

Click on "validate step" and if you selected the target hosts correctly, then the IP addresses will be filled in automatically. Now press Next.

In this example we will choose the snapshot that we took in the earlier exercise**

**For EFS it would look like below. Two of the source hosts have an EBS volume, so these will be snapshotted. The EFS directories will be copied.

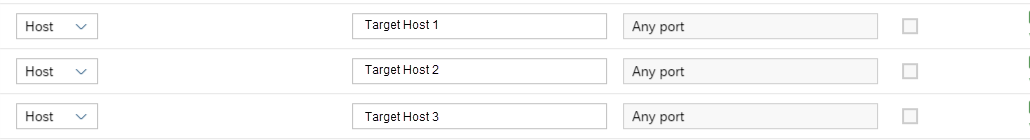

The fencing configuration is predefined in LaMa (and additional rules added as part of the system configuration in LaMa). You can add ssh port to the list as shown or any other rule that you think is needed for communication with the cloned system. However, it is very important to ensure that any communication back to the source is not opened up**

**You will see LaMa add additional rules for EFS
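Before adding your own fencing exceptions, it can help to check that none of them opens a path back to the source system. Below is a minimal sketch of such a check. The rule format (destination, port) and all IP addresses are hypothetical and do not reflect how LaMa stores its fencing rules.

```python
import ipaddress


def rules_reaching_source(allow_rules, source_ips):
    """Return the allow rules whose destination covers any source-system IP."""
    offending = []
    for destination, port in allow_rules:
        # Accepts a single address or a CIDR range.
        network = ipaddress.ip_network(destination, strict=False)
        if any(ipaddress.ip_address(ip) in network for ip in source_ips):
            offending.append((destination, port))
    return offending


# Hypothetical addresses, for illustration only.
SOURCE_IPS = ["172.31.64.10", "172.31.64.11"]
ALLOW_RULES = [
    ("172.31.64.200/32", 22),   # single target host, ssh
    ("172.31.64.0/24", 2049),   # whole subnet: also covers the source hosts
    ("172.31.64.201", 22),      # single target host, ssh
]

offending = rules_reaching_source(ALLOW_RULES, SOURCE_IPS)
print(offending)
```

Here the subnet-wide rule is flagged because it would also allow traffic to the source hosts. Narrowing it to individual target addresses avoids that.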

Consider adding the following fencing rules as sometimes communication could be needed at the VM primary IP (in addition to virtual IP) that may be blocked by fencing.

Next you will get a summary screen. When you continue, the clone process starts and you can monitor its progress. If all steps complete, the progress bar will show 100%.

Now if you go to operations view, you should see the cloned system with the same SID as the source.

To destroy the newly cloned system, first stop & unprepare from the Mass Operations menu:

Now you can destroy it from the provisioning menu.

System Copy

The basic high-level sequence of copy operation by LaMa for our distributed system (the actual list of steps is longer):

- Snapshot of volumes on the HANA system

- In parallel snapshot of ASCS**

- Convert the snapshots to new volumes

- Attach the new volumes to HANA and ASCS**

- Create mount points and mount the filesystems on the above attached volumes

- Attach virtual IP addresses to target servers and plumb the interfaces

- Fence the servers to prevent any connection to source system

- Make changes to hostname resolution (on target hosts) such that the source virtual hostnames point to the virtual IP on the cloned servers (example: hdb-db will point to the same IP as hdb-db-clone). This is done to successfully start the SAP application without going through a lengthy rename process.

- Export the attached volumes via NFS from ASCS host**

- Mount filesystems on application server host via NFS

- Mount SWPM repository

- Run SWPM on ASCS

- Run HANA Lifecycle Management on HANA

- Run SWPM on Application Server

- Run PCA

- Enable SAP application
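To make the relationship between clone and copy explicit, the two high-level sequences can be compared as simple lists. This is only a sketch of the step lists given in this blog (the actual list of LaMa steps is longer), with step names shortened by me.

```python
# Steps shared by the clone and copy sequences described in this blog.
SHARED_STEPS = [
    "snapshot HANA volumes",
    "snapshot ASCS volumes",
    "convert snapshots to new volumes",
    "attach volumes to HANA and ASCS",
    "create mount points and mount filesystems",
    "attach virtual IP addresses",
    "fence target servers",
    "adjust hostname resolution",
    "export volumes via NFS from ASCS",
    "mount filesystems on application server",
]

# Steps that only appear in the copy sequence.
COPY_ONLY_STEPS = [
    "mount SWPM repository",
    "run SWPM on ASCS",
    "run HANA Lifecycle Management on HANA",
    "run SWPM on application server",
    "run PCA",
]


def clone_steps():
    return SHARED_STEPS + ["start SAP application"]


def copy_steps():
    return SHARED_STEPS + COPY_ONLY_STEPS + ["enable SAP application"]


extra = [step for step in copy_steps() if step not in clone_steps()]
print(extra)
```

The difference is the rename and post-copy work (SWPM, HANA Lifecycle Management and PCA), which is what turns a clone with the same SID into an independent system with a new SID.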

**For EFS, a copy of the applicable directories is done by LaMa and then mounted on ASCS and PAS. See screenshot below for target PAS where /sapmnt/A4H is copied to B4H and mounted as /sapmnt/B4H. Similar case for /usr/sap/A4H - copied and mounted as /usr/sap/B4H

Follow the steps below to perform the system copy. It is quite similar to the clone process but there are a few extra steps.

Provisioning -> Copy System

The target SID will be B4H. We are keeping the HANA SID the same as the source, though this can also be changed.

Some steps are not shown where we use the default settings.

Select the existing hosts as below.

Change the virtual host names to the ones listed in the table at beginning of the blog. Click on "Validate Step" and the IP addresses should populate.

Unlike the clone exercise where we used an existing snapshot, here we decide to perform a new snapshot**. Check the Full Copy boxes. The rest will be default settings.

**For EFS it would look like below. Two source hosts have EBS volumes so these will be snapshotted. The EFS directories will be copied.

We will perform an online snapshot of database volume.

The user accounts already exist on the target hosts. As we are not using LDAP in this environment, LaMa cannot provision new users. So these accounts were prepared ahead of time (including home directory for <hanasid>adm).

When you click on Validate Step you will get a warning. You can ignore it, as we know that the target user ID already exists with a home directory. Refer to the section covering target system setup.

When you validate the step, the release version will update. The defaults should be fine at this step unless you want to change some fields.

The fencing step is important: verify the entries and add any that are missing. Although the NFS ports are already opened by LaMa, we still add the NFS server for the repository. We also add the 3 target hosts' primary host names or IP addresses. Lastly, we add ssh port 22 so that we can still log in to the target hosts for troubleshooting.

**You will see LaMa add additional rules for EFS

For PCA, you can add any task list variant that you may have created as well as any other task list you would like to execute, for example the BDLS task list with its improvements. In this example we kept the default.

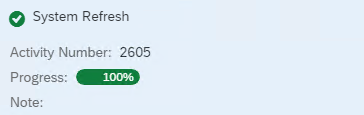

The activity should now run and the progress bar will eventually reach 100% completion.

You should now see the new copied system in the operations view of LaMa.

To destroy the newly copied system, first stop & unprepare from the Mass Operations menu:

Now you can destroy it from the provisioning menu.

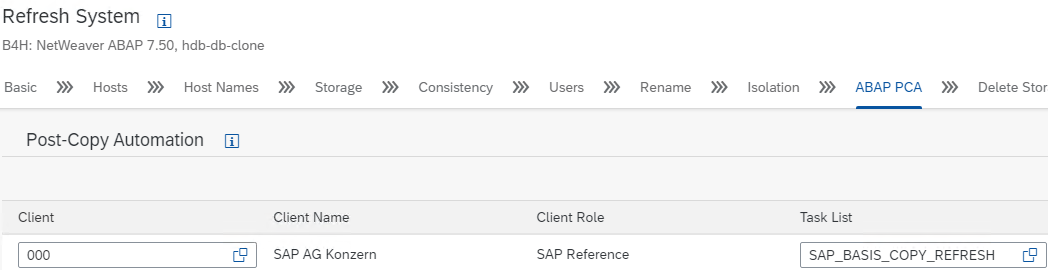

System Refresh

There are three types of refreshes supported by LaMa - Refresh System, Refresh Database, and Restore-Based Refresh. Since this blog is about the capabilities of the AWS adapter, we will perform a Refresh System operation that relies on storage volume snapshots. The process is very similar to System Copy and the key differences are:

- We trigger it from the target system that is already running

- We use a different PCA task list (SAP_BASIS_COPY_REFRESH)

For this scenario I am going to use a target that was previously created via system copy. In addition, I will only show the use of EBS storage. EFS storage is supported and can be adapted as shown earlier for system copy.

From the target system B4H, perform the steps below.

Provisioning -> Provisioning Processes -> Refresh System

Some screenshots are not shown where we use the default settings.

1.

2.

3. Select defaults for the Hosts page

4. On the Host Names page verify the correct hostnames and IP addresses

5. On the Storage page, check mark the Full Copy boxes. The rest will be default settings.

6. For Consistency, accept the default of "Online: Clone Running DB"

7. For Users, same as system copy scenario

8. For Rename, same as system copy scenario

9. For Isolation, it can be the same as system copy, but I decided to use "Unfence target system without confirmation" and accept the warning

10. On the ABAP PCA page you will notice the task list contains SAP_BASIS_COPY_REFRESH.

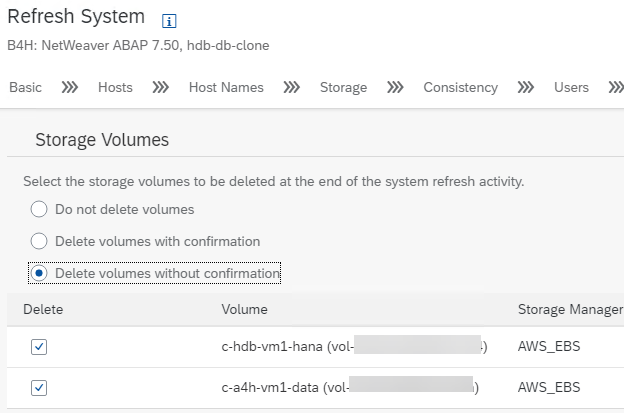

11. For Delete Storage Volumes, the appropriate option needs to be selected depending on the situation. The volumes to be deleted are those on the target system (the system being refreshed) that are being replaced by the refresh process. As new volumes are attached (created from the snapshot of the source) to the refreshed system, the old volumes are no longer needed. However, a user may decide to not delete the volumes as a way to revert back if needed. I chose "Delete volumes without confirmation"

12. You will now reach the summary page to verify your inputs. Click Execute

Provision New Application Server

We will provision a new application server using an existing server as target. We will use one of the same servers that we used for the clone and copy exercise.

Procedure is identical for both option 1 (EBS + Local NFS) and option 2 (EBS + EFS) storage.

Make sure to select the option with "AC-Enabled". Not all the screenshots of the road map will be shown as they are self-evident or default settings.

Choose the target server

Change the hostname to the virtual hostname we want to use. In my case I used a4h-app-clone.

Fill in any missing passwords, choose the correct release configuration and press "Validate Step". You will see the release version updated.

Below are the completed activity steps.

In Operations View you will now see the additional application server.

System Relocate

We will relocate the above provisioned additional application server (on a4h-vm2-c) to another server - a4h-vm1-c

Procedure is identical for both option 1 (EBS + Local NFS) and option 2 (EBS + EFS) storage.

From Operations -> Systems:

You should now see in the operations view that the application server is on another host. It was moved from a4h-vm2-c to a4h-vm1-c

The blog has demonstrated some key capabilities of the AWS Cloud Manager and how to set up your SAP landscape environment using Adaptive Design Principles.

References

- SAP Note 2574820 - SAP Landscape Management Cloud Manager for Amazon Web Services (AWS)

- SAP Landscape Management Enterprise 3.0 User Guide

- SAP Adaptive Extensions

- SUSE KB 7023633

- AWS Snapshots

- AWS Device Naming

- AWS Meta Data

- AWS Service Endpoints

- Principles of SAP HANA Sizing

- SAP Quicksizer

- SAP HANA on AWS
