SAP Datasphere with Confluent --> Part 2
This is the second blog about the SAP Datasphere and Confluent integration. Here is the link to the first one, where I show how to connect both tools.

I have been working at SAP as a Customer Advisor since 2015. Before that, I was a consultant for SAP Data Services, so I'm familiar with the SAP integration tools. With the announcement of SAP Datasphere on March 8th, 2023, a new aspect entered data integration: the Business Data Fabric approach. The benefits of a Business Data Fabric are described in this blog.

The idea is to avoid lots of ETL jobs in between. Instead, the data stays in the source applications and is stored in SAP Datasphere only when necessary, where its capabilities can then be used. Data products modeled and created in SAP Datasphere can be accessed by external tools. In some cases this is not enough, and customers also need to push their data to external systems. One way to do this is the "Replication Flow" in SAP Datasphere, which replicates data either from SAP Datasphere to specific targets or directly from SAP source systems to several targets.

Information on how to create a Replication Flow can be found on SAP Help.

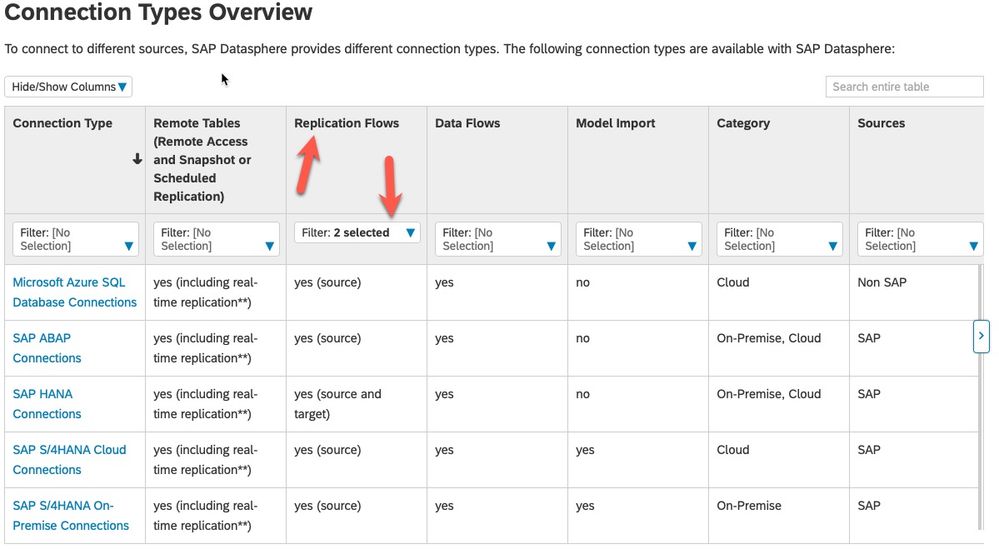

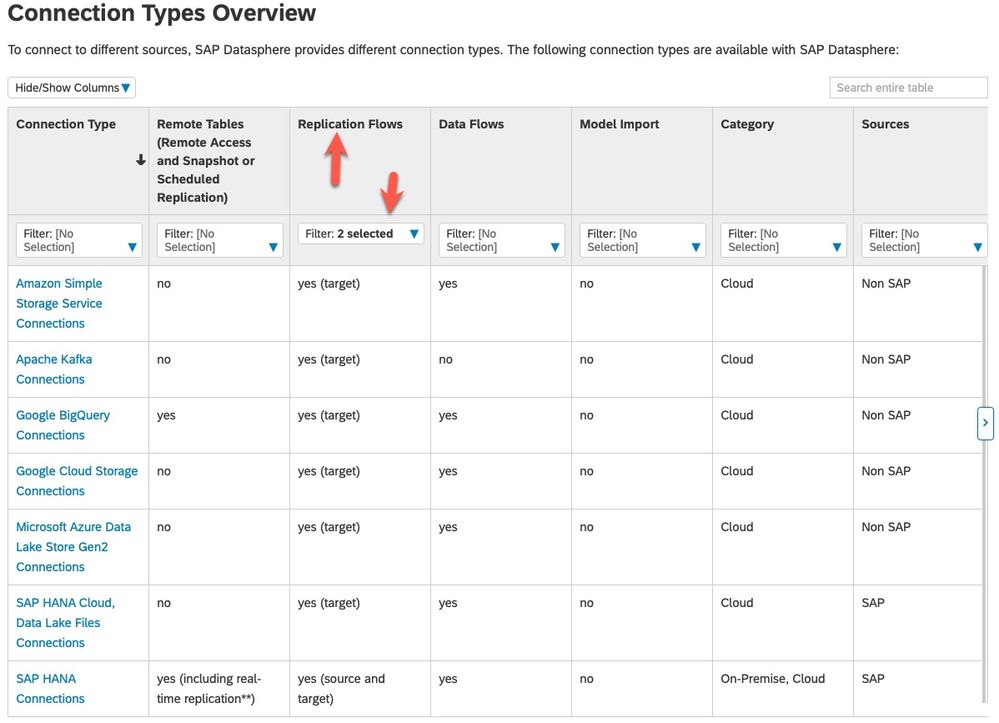

The list of available Replication Flow sources is shown on SAP Help.

This is the overview of the currently available Replication Flow targets (SAP Help):

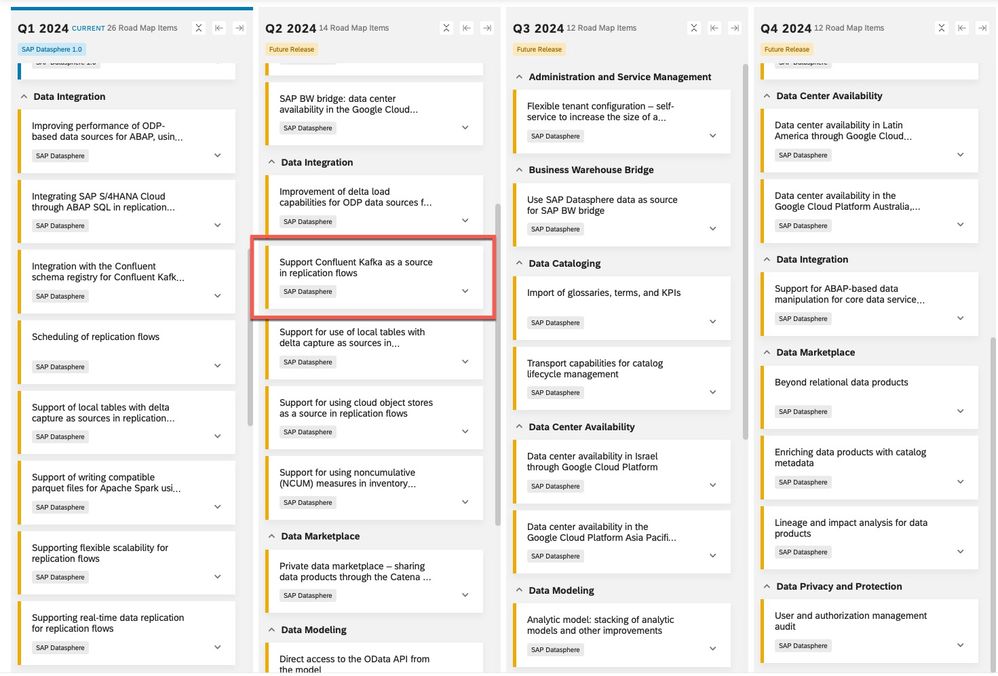

This is the current status, which can and will change. If you want to see what comes next, have a look at the SAP Datasphere Roadmap Explorer:

There you can see that Confluent is planned to become available as a source in Q2 2024! This means you will even be able to get your streams FROM Confluent INTO SAP Datasphere, which opens up many more possible sources for ingesting data into SAP Datasphere!

If you want to know the difference between Confluent Cloud and Apache Kafka, have a look here.

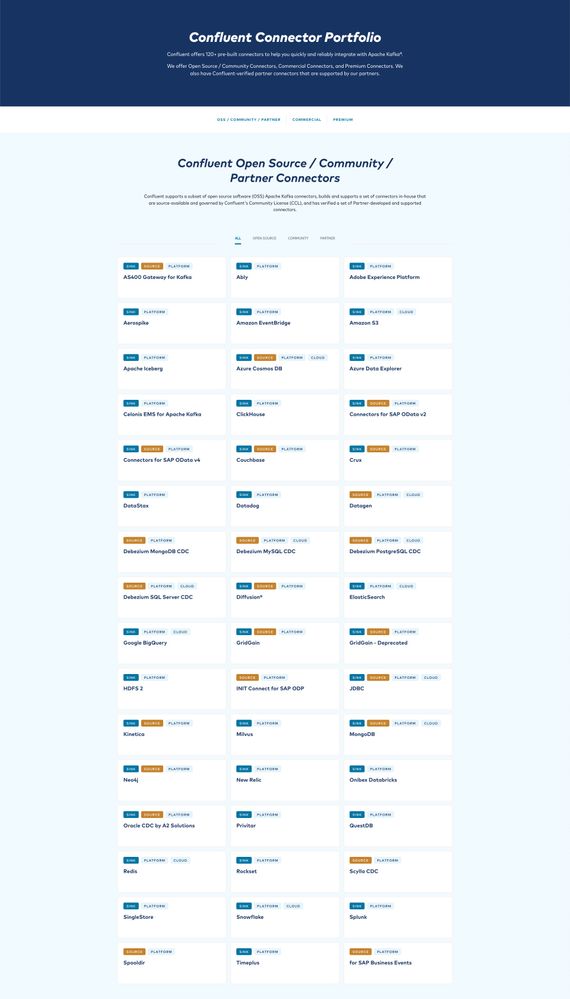

Back to Confluent as a target. On the Confluent website you can see all the targets they offer:

As you can see, many targets are available, including several that I often hear about from customers who want to connect them with SAP data. Mainly, customers want to get their SAP data into these targets:

- Amazon (S3, DynamoDB, Redshift)

- Azure (Blob Storage, Data Lake Storage)

- Google (BigQuery, Cloud Storage)

- InfluxDB

- Salesforce

Some customers also requested the following targets:

- JDBC

- OData (v2 and v4)

- HTTP / HTTPS

As you can see, the hyperscalers are the most common targets for SAP data. With SAP Datasphere, customers can already connect the three hyperscalers within a Replication Flow. But some targets are still not available in SAP Datasphere. Fortunately, in combination with Confluent we can already replicate the data in real time and with CDC (change data capture) functionality to all of these missing targets. So there is no longer any need for an additional tool in between: SAP Datasphere together with Confluent can feed all relevant systems with SAP data.
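To make the Confluent leg of this pattern more concrete, here is a minimal sketch of how a sink connector could forward replicated SAP data from a Kafka topic to Amazon S3. It assumes a self-managed Kafka Connect cluster with Confluent's S3 sink connector installed; fully managed connectors in Confluent Cloud are configured through a different set of keys. The Connect URL, connector name, topic name `sap.sales_orders`, bucket, and region are all hypothetical placeholders. The payload is registered through the Kafka Connect REST API (`POST /connectors`).

```python
import json
import urllib.request

# Hypothetical placeholder for the Kafka Connect REST endpoint.
CONNECT_URL = "http://localhost:8083"


def build_s3_sink_config(name: str, topic: str, bucket: str, region: str) -> dict:
    """Build a Kafka Connect payload for Confluent's self-managed S3 sink connector."""
    return {
        "name": name,
        "config": {
            "connector.class": "io.confluent.connect.s3.S3SinkConnector",
            "tasks.max": "1",
            # Topic the SAP Datasphere Replication Flow is assumed to write to.
            "topics": topic,
            "s3.bucket.name": bucket,
            "s3.region": region,
            "storage.class": "io.confluent.connect.s3.storage.S3Storage",
            # Write records to S3 as JSON files.
            "format.class": "io.confluent.connect.s3.format.json.JsonFormat",
            # Number of records written before a file is committed to S3.
            "flush.size": "1000",
        },
    }


def register_connector(payload: dict, connect_url: str = CONNECT_URL) -> dict:
    """POST the connector definition to the Kafka Connect REST API and return the response body."""
    request = urllib.request.Request(
        f"{connect_url}/connectors",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    payload = build_s3_sink_config(
        name="sap-orders-to-s3",       # hypothetical connector name
        topic="sap.sales_orders",      # hypothetical topic name
        bucket="my-sap-data-bucket",   # hypothetical bucket
        region="eu-central-1",
    )
    print(json.dumps(payload, indent=2))
    # register_connector(payload)  # only once a Connect cluster is reachable
```

Keeping the payload builder separate from the HTTP call makes the configuration easy to inspect and version. The same idea applies to the other targets in the lists above, each with its own connector class and options as documented by Confluent.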

So why use SAP Datasphere in combination with Confluent?

- More than 120 preconfigured targets are available, so more than 115 targets in addition to those in SAP Datasphere!

- On-premise and cloud targets, as well as hybrid landscapes, can be connected

- SAP data can be moved either into SAP Datasphere or, via Confluent, into any other landscape

- Perfect if a customer already has Confluent in place, because it can easily be adapted

- Confluent Cloud is available via the SAP Store

- Security, compliance, and governance standards are ensured through enterprise-level security features for data streaming and an industry-wide, fully managed governance suite for Kafka!

- Flexible real-time usage of SAP data

- From Q2 2024 (current plan), sources from Confluent can be used to push data INTO SAP Datasphere
