
SAP Datasphere Space and Lifecycle Management


This article is ideal for those starting out with SAP Datasphere who want to understand, at least at a high level, how lifecycle management works and what ‘Spaces’ are all about. For example: can Spaces be used for lifecycle purposes (dev, test, prod)? What limitations do Spaces have for lifecycle management, and what are the best practices? These and other commonly asked questions are answered in this article, which is also available as a video.

In this article, I refer to another presentation on Datasphere Security. I'm currently working on this presentation and hope to make it available soon. Please stay tuned for updates.

Feel free to post a comment, but if you'd like a reply, please post a question instead (only questions enable replies and keep the context of the question).

Matthew Shaw

Resources

| Resource | Format and version |
|---|---|
| Download or Preview presentation | .pptx, Version 1.0, February 2024 |
| Watch this presentation (37 minutes) | .mp4, Version 1.0, February 2024 |

Tenants and Spaces

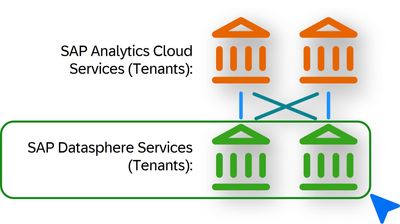

SAP Datasphere Services (Tenants):

- Subscription-Based Tenant
  - Provisioning is performed by SAP, only 1 per subscription
- Consumption-Based Tenant (Standard Plan or Free Plan*)

Relationships with SAP Analytics Cloud Services (tenants):

- No real limitations: each SAP Analytics Cloud Service can connect to multiple Datasphere Services
- However, the ‘Product Switch’ in Datasphere can only point to a single Analytics Cloud Service. It’s just a ‘button’, not a limitation per se

Within each Datasphere Service (tenant):


- The System Owner and other ‘DW Administrator’ users can:
  - create 1 or multiple Spaces
  - perform other tasks, such as creating users and roles, and assigning users to Spaces

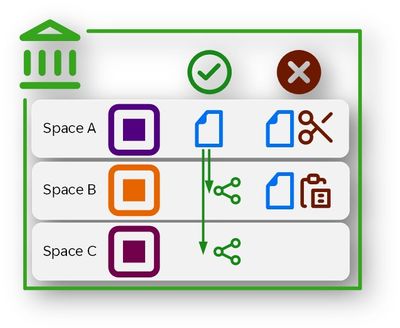

Each Space

- Is a secured container
  - Users need access to a Space to access the resources held within it
  - Content can be shared with other Spaces, allowing additional access
- Holds all content:
  - Tables, views, models, data flows, replication flows, task chains, etc.
- Is allocated resources:
  - CPU, memory, disk
  - Priority, statement limits
- Space Administrators can also grant users access to their Spaces
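The access rules for a Space can be illustrated with a deliberately simplified Python model. This is a sketch under stated assumptions, not how Datasphere implements security: `Space`, `share` and `can_read` are hypothetical names, and the model treats a user as able to read an object if they belong to the owning Space or to any Space the object has been shared with, ignoring roles, Space Administrators and data access controls. It needs Python 3.9 or later.

```python
from dataclasses import dataclass, field


@dataclass
class Space:
    """Hypothetical, simplified model of a Space: its members and the objects it owns."""
    name: str
    members: set[str] = field(default_factory=set)
    objects: set[str] = field(default_factory=set)
    # object name -> names of the Spaces it has been shared with
    shared_with: dict[str, set[str]] = field(default_factory=dict)

    def share(self, obj: str, target: "Space") -> None:
        """Share an object owned by this Space with another Space."""
        if obj not in self.objects:
            raise ValueError(f"{obj} is not owned by Space {self.name}")
        self.shared_with.setdefault(obj, set()).add(target.name)


def can_read(user: str, obj: str, owner: Space, spaces: dict[str, Space]) -> bool:
    """True if the user belongs to the owning Space or to a Space the object is shared with."""
    if obj not in owner.objects:
        return False
    if user in owner.members:
        return True
    return any(user in spaces[name].members for name in owner.shared_with.get(obj, set()))


# Usage: 'bob' only gains access to Orders once it is shared into FINANCE
sales = Space("SALES", members={"alice"}, objects={"Orders"})
finance = Space("FINANCE", members={"bob"})
spaces = {s.name: s for s in (sales, finance)}
print(can_read("bob", "Orders", sales, spaces))  # False
sales.share("Orders", finance)
print(can_read("bob", "Orders", sales, spaces))  # True
```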

Forethought is required when creating Spaces

- Aim for maximum reusability, for example:
  - Avoid the need to replicate data into more than 1 Space
- Enforce security with minimum effort
  - A single user can have different access rights in different Spaces
  - (see the next presentation on ‘scoped roles’ for more)
- Only 1 Space can have SAP HANA Cloud data lake access
  - However, read access can be shared with other Spaces
  - Only the original Space can write to the data lake
  - Even when using an Open SQL Schema, access is granted through the Space
  - A blog provides an example of data lake access by multiple Spaces

Spaces – boundary conditions

Content within Spaces:

- There is no means to ‘move’ or ‘copy’ content across Spaces
  - Content can only be moved or copied within a Space
- Most content can be shared across Spaces within the same Service (tenant)
  - This enables segregation and security controls (see later)
  - For example, tables, views and models can be shared across Spaces
- Some things cannot be shared across Spaces within the same Service (tenant), including:
  - Connections, data flows, replication flows, etc.
- Service-wide (tenant) configuration settings include:
  - Authentication configuration (SAML SSO) setup
  - OAuth client (API) access
  - BTP Sub Account for use with the SAP Cloud Connector
  - SAP Data Provisioning (DP) Agent
- Import and export of content is possible and needed for lifecycle management (see next)

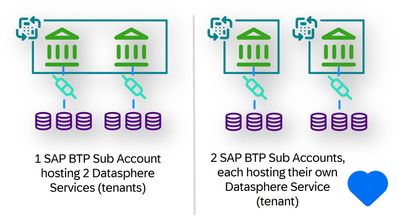

Tenants and Spaces, Sub Accounts

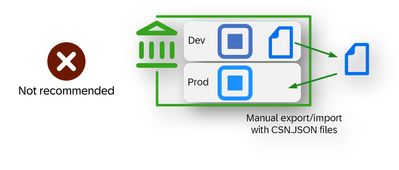

- It is best to avoid using different Spaces within the same Datasphere Service (tenant) for different lifecycle phases
  - All objects (models, tables, connections etc.) relate to each other by ‘ID’. This means a manual export/import is technically possible, but it is error-prone and somewhat complex to keep the IDs consistent
  - Some customers have used Spaces for lifecycle purposes in this way, knowing this limitation, but it can be challenging to do so
- For BTP ‘Consumption-Based Tenants’:
  - A single BTP Sub Account has no significant advantage compared to multiple Sub Accounts
  - However, it is best practice for ‘dev’ and ‘prod’ to have their own BTP Sub Account, so this is often the preferred choice
  - We recommend an SAP Cloud Connector per environment (dev, test, prod), regardless of which option you pick

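To see why manual copying between Spaces is error-prone, it helps to think of every reference as an ID lookup. The sketch below uses a hypothetical data shape (each object being imported lists the IDs it references) and reports any reference that neither exists in the target Space nor arrives with the same import. A connection recreated under a slightly different ID is enough to break every object that uses it. `dangling_references` is an illustrative helper, not an SAP tool.

```python
def dangling_references(objects: dict[str, set[str]], target_ids: set[str]) -> dict[str, set[str]]:
    """Return, per imported object, the referenced IDs that would not resolve in the target.

    objects:    ID of each object being imported -> IDs it references
    target_ids: IDs that already exist in the target Space (e.g. manually recreated connections)
    """
    # A reference resolves if the target already has it or it arrives with the same import
    available = set(target_ids) | set(objects)
    missing = {}
    for obj_id, refs in objects.items():
        unresolved = refs - available
        if unresolved:
            missing[obj_id] = unresolved
    return missing


# Usage with hypothetical IDs: the second target recreated the connection under another ID
to_import = {"V_SALES": {"T_ORDERS", "S4_CONN"}, "T_ORDERS": {"S4_CONN"}}
print(dangling_references(to_import, {"S4_CONN"}))        # {}
print(dangling_references(to_import, {"S4_CONNECTION"}))  # {'V_SALES': {'S4_CONN'}, 'T_ORDERS': {'S4_CONN'}}
```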

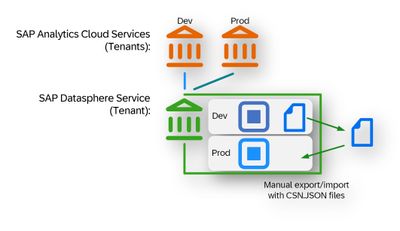

Lifecycle management

Best practices

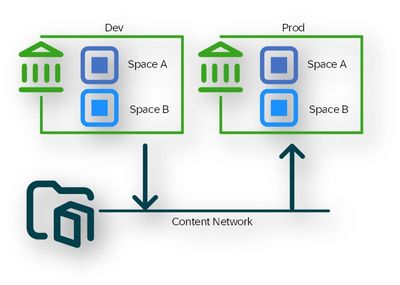

- Use a Datasphere Service (tenant) per environment (dev, test, prod)
- Use the Content Network, a cloud-based file system, to transport content
  - Some content can also be exported/imported via CSN* JSON files, either manually or via the command line
  - (* Core Schema Notation, the JSON format for Core Data Services models)
- Do not name content by its lifecycle phase
  - Instead, keep all names generic, such as ‘Sales’, avoiding ‘SalesDEV’
- Do not name content by its version
  - Instead, leave out the version number; add it to the description or use ‘Packages’ (see later)
- Not all content can be transported (exported/imported)
  - Some content must be recreated manually, ensuring it matches by ‘ID’
  - This includes Spaces and connections*. Spaces must be recreated manually in each Service before any content can be imported into them
  - (* Roadmap item for wave 2024.03, subject to change)
- Where possible, create content once, then transport it to ensure IDs are consistent across the landscape
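The two naming rules above can be checked mechanically. The following Python sketch flags technical names that carry a lifecycle phase or a trailing version number; the list of markers and the regular expressions are assumptions you would adapt to your own landscape. Splitting names into camelCase and underscore-separated tokens avoids false positives such as ‘Products’ being read as ‘Prod’.

```python
import re

# Hypothetical lifecycle markers; extend with whatever environment names your landscape uses
LIFECYCLE_MARKERS = {"dev", "test", "qa", "prod", "prd"}

# Splits names such as "SalesDEV", "sales_dev" or "ProdSalesSpace" into word tokens
_TOKEN = re.compile(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+")
# Matches a trailing version suffix such as "_v2", "SalesV3" or "-v1.1"
_VERSION = re.compile(r"(?:^|[_\-\s]|(?<=[a-z]))[vV]\d+(?:\.\d+)*$")


def naming_issues(name: str) -> list[str]:
    """Return the naming best practices that a technical name breaks."""
    issues = []
    tokens = {token.lower() for token in _TOKEN.findall(name)}
    if tokens & LIFECYCLE_MARKERS:
        issues.append("contains a lifecycle phase")
    if _VERSION.search(name):
        issues.append("contains a version number")
    return issues


for name in ("Sales", "SalesDEV", "ProdSalesSpace", "Products", "Sales_v2"):
    print(name, naming_issues(name))
```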

- Exportable/Importable content:

| Definition of | Package via Content Network | CSN/JSON file | Notes |
|---|---|---|---|
| Local tables | Yes | Yes | |
| Remote tables | Yes | Yes | Ensure the connections in source and target match |
| Flows (data, replication, transformation) | Yes | Yes | Includes source and target tables |
| Views | Yes | Yes | |
| Intelligent Lookups | Yes | Yes | Includes inputs and lookup entities |
| Analytic Models | Yes | Yes | |
| E/R Models | Yes | Yes | Does not include dependencies, requires manual selection |
| Data Access Controls | Yes | | Includes permissions entity |
| Task Chains | Yes | | Includes all objects it automates |
| Business Entities / Business Entity Versions | Yes | | Includes all its versions, source and authorisation entities |
| Fact Models | Yes | | Includes all its versions and business entities |
| Consumption Models | Yes | | Includes all its perspectives, source fact models and business entities |
| Authorization Scenarios | Yes | | Includes data access control |
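A CSN file is plain JSON, so it can be inspected with standard tooling before it is imported. The sketch below assumes the basic CSN layout, a top-level `definitions` object keyed by name where each entry has a `kind`, and treats an entity that carries a `query` or `projection` as a view. Real Datasphere exports contain many additional annotations, which this sketch simply ignores, and the sample document is hand-written rather than a real export.

```python
import json


def summarize_csn(csn_text: str) -> dict[str, list[str]]:
    """Group the definitions of a CSN document by kind, separating views from tables."""
    csn = json.loads(csn_text)
    groups: dict[str, list[str]] = {}
    for name, definition in csn.get("definitions", {}).items():
        kind = definition.get("kind", "unknown")
        if kind == "entity":
            # In CSN, an entity defined by a query or projection is a view
            kind = "view" if ("query" in definition or "projection" in definition) else "table"
        groups.setdefault(kind, []).append(name)
    return {kind: sorted(names) for kind, names in groups.items()}


# Usage: a tiny hand-written CSN document, not an actual Datasphere export
sample = """{
  "definitions": {
    "Orders": {"kind": "entity", "elements": {"ID": {"type": "cds.Integer", "key": true}}},
    "Orders_View": {"kind": "entity", "query": {"SELECT": {"from": {"ref": ["Orders"]}}}},
    "Currency": {"kind": "type", "type": "cds.String", "length": 3}
  }
}"""
print(summarize_csn(sample))
# {'table': ['Orders'], 'view': ['Orders_View'], 'type': ['Currency']}
```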

Lifecycle management - Content Network best practices

Best Practices when using Content Network

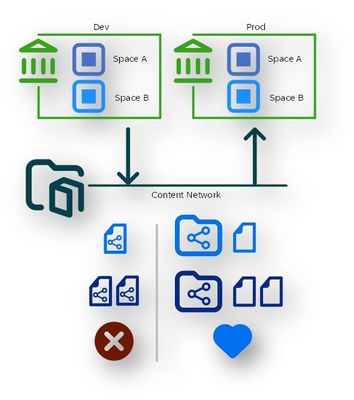

- Avoid sharing individual export packages
  - Instead:
    - Create folders and share each folder with the other Services (tenants)
    - Store the export packages in these pre-shared folders
- A naming convention is important to help understand dependencies and responsibilities
  - See the guidance on a naming convention. Consider including the Space name and data source
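The post does not prescribe a particular naming convention, so the following Python sketch is only one hypothetical example: `SPACE_SOURCE_SUBJECT`, which carries the Space name and data source as suggested above and, in line with the earlier best practice, no lifecycle phase. Stripping non-alphanumeric characters keeps the underscore as an unambiguous separator, so names can be parsed back into their parts. `package_name` and `parse_package_name` are illustrative names only.

```python
import re


def package_name(space: str, source: str, subject: str) -> str:
    """Build a folder/package name following a hypothetical SPACE_SOURCE_SUBJECT convention."""
    # Drop anything that is not a letter or digit, so "_" only ever separates the three parts
    parts = [re.sub(r"[^A-Za-z0-9]", "", part).upper() for part in (space, source, subject)]
    if not all(parts):
        raise ValueError("space, source and subject must each contain letters or digits")
    return "_".join(parts)


def parse_package_name(name: str) -> dict[str, str]:
    """Split a name built by package_name back into its parts."""
    space, source, subject = name.split("_")
    return {"space": space, "source": source, "subject": subject}


print(package_name("Sales", "S/4HANA", "Orders"))  # SALES_S4HANA_ORDERS
print(parse_package_name("SALES_S4HANA_ORDERS"))   # {'space': 'SALES', 'source': 'S4HANA', 'subject': 'ORDERS'}
```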

Useful insights

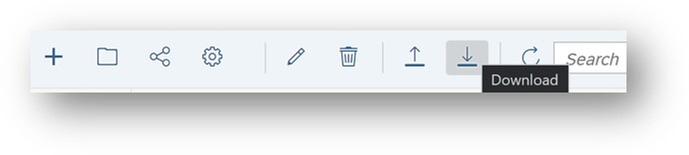

- To re-capture any changed content:
  - Edit the existing export package
  - Enable ‘Modify content’
  - Re-export the export package
  - The previous delivery package is lost unless it was manually downloaded beforehand
- Dependencies are typically identified automatically
- An export package can be downloaded and re-uploaded
  - Whilst not intended and not ideal, this enables a form of version control or backup
  - Manual download/upload is required when transporting across data centres
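Since re-exporting replaces the previous package, downloading it first is the only way to keep a copy. A small Python helper can file each manual download under a timestamped name so older versions are never overwritten. The file names, the backup folder and the helper names are hypothetical; the sketch treats the package as an opaque file and assumes `now`, when given, is a UTC timestamp.

```python
import re
import shutil
from datetime import datetime, timezone
from pathlib import Path
from typing import Optional


def archive_package(downloaded: Path, backup_dir: Path, now: Optional[datetime] = None) -> Path:
    """Copy a manually downloaded export package into backup_dir under a UTC-timestamped name."""
    if now is None:
        now = datetime.now(timezone.utc)
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = now.strftime("%Y%m%dT%H%M%SZ")  # sortable, e.g. 20240201T090000Z
    target = backup_dir / f"{downloaded.stem}_{stamp}{downloaded.suffix}"
    if target.exists():
        # Never overwrite an earlier backup
        raise FileExistsError(target)
    shutil.copy2(downloaded, target)
    return target


def list_versions(backup_dir: Path, stem: str) -> list[Path]:
    """Return the archived copies of one package, oldest first."""
    pattern = re.compile(rf"{re.escape(stem)}_\d{{8}}T\d{{6}}Z(\..*)?")
    return sorted(p for p in backup_dir.iterdir() if pattern.fullmatch(p.name))
```

Matching the full timestamp pattern, rather than just the prefix, keeps `SALES` from also listing backups of a package called `SALES_ORDERS`.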

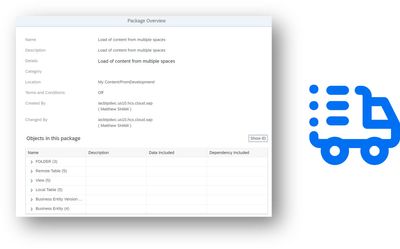

Lifecycle management – 2 types of Packages

Delivery Packages


- The transport mechanism transports this type of package
- The export process creates an export package based on a delivery package
- Can contain content from multiple Spaces
  - Whilst dependencies are automatically detected, this can become complex or confusing when mixing shared content from a ‘matrix’ of dependencies

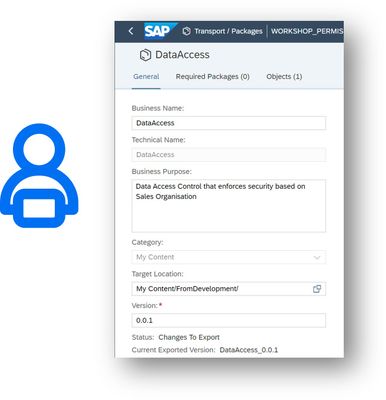
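Because a delivery package can carry content from several Spaces and its dependencies are detected automatically, what it ends up carrying is the transitive closure of whatever was selected. The following sketch, using a hypothetical dependency map with objects named `SPACE.object`, shows how one selected view can pull in objects from a second Space. It illustrates the ‘matrix’ effect and is not how the Transport app computes dependencies.

```python
from collections import deque


def with_dependencies(selected: set[str], depends_on: dict[str, set[str]]) -> set[str]:
    """Return the selected objects plus everything they depend on, transitively (breadth-first)."""
    result = set(selected)
    queue = deque(selected)
    while queue:
        for dep in depends_on.get(queue.popleft(), set()):
            if dep not in result:  # also guards against cycles
                result.add(dep)
                queue.append(dep)
    return result


def spaces_involved(objects: set[str]) -> set[str]:
    """Objects are named 'SPACE.object' in this sketch; collect the Space part."""
    return {obj.split(".", 1)[0] for obj in objects}


deps = {
    "SALES.V_REVENUE": {"SALES.T_ORDERS", "FINANCE.V_FX_RATES"},
    "FINANCE.V_FX_RATES": {"FINANCE.T_FX_RATES"},
}
package = with_dependencies({"SALES.V_REVENUE"}, deps)
print(sorted(package))
# ['FINANCE.T_FX_RATES', 'FINANCE.V_FX_RATES', 'SALES.T_ORDERS', 'SALES.V_REVENUE']
print(sorted(spaces_involved(package)))  # ['FINANCE', 'SALES']
```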

Repository Package


- A means to package content within 1 Space only
  - a kind of ‘sub-Space’ idea
- Has a version concept, which should be used
- Modellers can add certain objects to packages without needing to be a Space administrator
  - A Space administrator is still needed to export/import
- The modeller must include all dependencies within a single package
  - Not across packages, as that is handled automatically
- These Packages can be added to Delivery Packages as a single object
  - thus removing some complexity for other users
  - and helping to reduce unnecessary redundancy for complex use cases
- More about Package dependencies next…

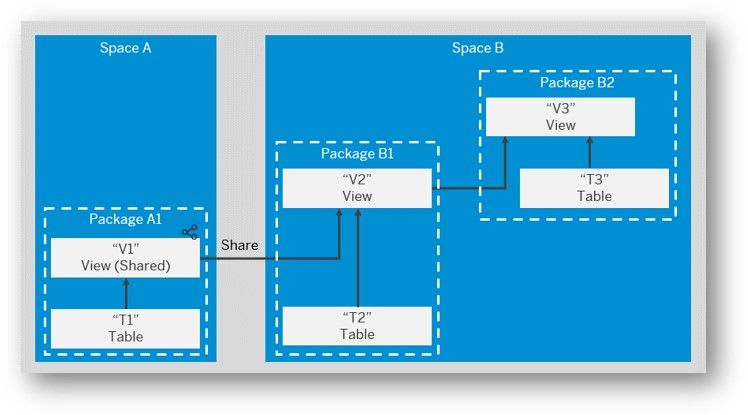
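The packaging rules can be expressed as a validation sketch: an object belongs to at most one package, and each of its dependencies must sit in the same package or in a package it declares as required (required packages are covered in the next section). The data shapes and function names are hypothetical, and the sketch assumes required packages apply transitively: if package Y requires X and Z requires Y, objects in Z may depend on objects in X. Check the documentation before relying on that assumption.

```python
def reachable_packages(pkg: str, required: dict[str, set[str]]) -> set[str]:
    """The package itself plus all required packages, followed transitively (an assumption)."""
    seen, stack = {pkg}, [pkg]
    while stack:
        for req in required.get(stack.pop(), set()):
            if req not in seen:
                seen.add(req)
                stack.append(req)
    return seen


def validate_packages(packages: dict[str, set[str]], required: dict[str, set[str]],
                      depends_on: dict[str, set[str]]) -> list[str]:
    """Check two rules: an object lives in at most one package, and every dependency
    of an object is in the same package or in one of its required packages."""
    errors = []
    owner: dict[str, str] = {}
    for pkg in sorted(packages):
        for obj in sorted(packages[pkg]):
            if obj in owner:
                errors.append(f"{obj} is in both {owner[obj]} and {pkg}")
            else:
                owner[obj] = pkg
    for pkg in sorted(packages):
        # Objects visible to this package: its own plus those of its required packages
        allowed = set().union(*(packages.get(p, set()) for p in reachable_packages(pkg, required)))
        for obj in sorted(packages[pkg]):
            for dep in sorted(depends_on.get(obj, set()) - allowed):
                errors.append(f"{obj} in {pkg} needs {dep}, which is outside {pkg} and its required packages")
    return errors
```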

Lifecycle management – Packages and dependencies

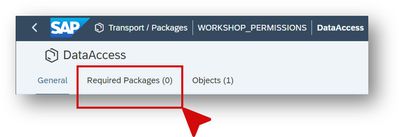

Packages

- Can contain many objects from a single Space
- A single object can only exist in 1 Package
- Packages can depend on other Packages, which are identified as ‘Required Packages’
  - Required Packages can be from other Spaces
- Required Packages example (doc):
  - Package A1 is a required Package of B1
    - An example of a shared object from a Package in a different Space
  - Package B1 is a required Package of B2

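A chain of required Packages forms a dependency graph, so one order in which the Packages of this example could be brought into a target tenant, with every required Package in place before the Packages that need it, is a topological sort. This is a sketch only: the post does not say whether the Transport app needs such an order, and `import_order` is a hypothetical helper built on Python's standard `graphlib` module (Python 3.9 or later).

```python
from graphlib import CycleError, TopologicalSorter


def import_order(required: dict[str, set[str]]) -> list[str]:
    """Order packages so each required package comes before the packages that need it.

    required maps a package to its required packages (its predecessors in the graph).
    Raises graphlib.CycleError if packages require each other in a loop.
    """
    return list(TopologicalSorter(required).static_order())


# The example above: A1 is required by B1, and B1 is required by B2
print(import_order({"B1": {"A1"}, "B2": {"B1"}}))  # ['A1', 'B1', 'B2']

try:
    import_order({"A1": {"B1"}, "B1": {"A1"}})
except CycleError as err:
    print("cycle:", err.args[1])
```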

Lifecycle Management Summary

- Most customers start with a single Datasphere Service, even if they have multiple Analytics Cloud Services
- Avoid any lifecycle reference in the names of Spaces that you expect to last
  - For example, avoid ‘ProdSalesSpace’
- As adoption increases, multiple Datasphere tenants become increasingly necessary to manage the content lifecycle
