PostgreSQL, Hyperscaler Option Now Available for C...


Hyperscaler Option

In July 2019, we saw the SAP BTP strategy evolve. It gained a strong focus on building differentiating business service capabilities and a clear intention to partner with hyperscale cloud providers such as Amazon, Microsoft, AliCloud, and GCP for commodity technical services like open-source databases and data stores, where these hyperscalers are already market leaders.

In February 2020, considering customer/partner feedback about challenges with the BYOA (Bring Your Own Account) approach, we announced an update to the backing service strategy, with plans to deliver a comprehensively managed backing services offering:

- PostgreSQL on SAP BTP, hyperscaler option

- Redis on SAP BTP, hyperscaler option

For more details, see the SAP Discovery Center and the service documentation on the SAP Help Portal:

- SAP Discovery Center - PostgreSQL on SAP BTP, hyperscaler option

- SAP Help Portal - PostgreSQL on SAP BTP, hyperscaler option

- SAP Discovery Center - Redis on SAP BTP, hyperscaler option

- SAP Help Portal - Redis on SAP BTP, hyperscaler option

PostgreSQL on SAP BTP, hyperscaler option

Globally, PostgreSQL on SAP BTP, hyperscaler option is available under the CPEA and PAYG commercial models. In China, it is offered under the subscription commercial model. Now, PostgreSQL on SAP BTP, hyperscaler option is available for customers on BTP@China!

Here are the SKUs for the PostgreSQL on SAP BTP, hyperscaler option on BTP@China:

| Service Plan | Description |
|---|---|
| PostgreSQL on SAP BTP, hyperscaler option, standard compute | PostgreSQL service for small-scale production use cases |
| PostgreSQL on SAP BTP, hyperscaler option, premium compute | PostgreSQL service for large-scale production use cases |
| PostgreSQL on SAP BTP, hyperscaler option, storage | Storage for the PostgreSQL service |
| PostgreSQL on SAP BTP, hyperscaler option, HA storage | High-availability (HA) storage for the PostgreSQL service |

Compute

- Standard
  - Defines compute resources in a ratio of 1 CPU core to 2 GB RAM.
  - Provides baseline performance for application workloads.
  - Suitable for development and small-scale production use cases.
  - Available in blocks of 2 GB memory (RAM).
  - Supported block sizes: 1 or 2.
- Premium
  - Defines compute resources in a ratio of 1 CPU core to 4 GB RAM.
  - Provides high performance for application workloads.
  - Suitable for medium- to large-scale production use cases.
  - Available in blocks of 4 GB memory (RAM).
  - Supported block sizes: 1, 4, 8, or 16.

Storage

- Storage
  - Defines general-purpose disk storage for PostgreSQL.
  - Used for single-AZ (non-HA) storage use cases.
  - Available in blocks of 5 GB disk storage.
- HA Storage
  - Defines general-purpose disk storage for PostgreSQL with bandwidth considerations for high availability (multi-AZ).
  - Used for multi-AZ (HA) storage use cases.
  - Available in blocks of 5 GB disk storage.

Usage

Sizing Suggestion

Creating a PostgreSQL on SAP BTP, hyperscaler option instance requires a combination of compute and storage entitlements (compute and storage units). You cannot create an instance with only compute units or only storage units.

Two instance configurations are supported:

- Single-node PostgreSQL instance created within one Availability Zone (AZ):
  - Price of service = x + y
  - x blocks of compute are charged (either Standard or Premium).
  - y blocks of storage are charged.
- Two-node PostgreSQL instance with primary and secondary nodes distributed across two Availability Zones (AZs):
  - Price of service = 2x + z
  - 2x blocks of compute are charged (either Standard or Premium): x for the primary node and x for the secondary node.
  - z blocks of HA storage are charged.

Examples:

- For development and testing: 2 standard units (4 GB RAM) + 4 storage units (20 GB).
- For medium-scale production: 4 premium units (2 premium units per node across 2 AZs, 16 GB RAM in total) + 45 HA storage units (225 GB).

For more details: Sizing
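The pricing rules above can be turned into a small entitlement calculator.

This is a minimal Python sketch built on the following assumptions:

- `memory` and `storage` are given in GB, as in the `cf create-service` parameters.
- `memory` is per node.
- HA storage is counted once per instance, as in the medium-scale example.

The function name and the plan labels `storage`/`ha-storage` are hypothetical. The sketch only checks that values are whole multiples of the block sizes. It does not enforce the supported block sizes listed above.

```python
from dataclasses import dataclass

# Block sizes in GB, taken from the plan descriptions above.
COMPUTE_BLOCK_GB = {"standard": 2, "premium": 4}
STORAGE_BLOCK_GB = 5


@dataclass(frozen=True)
class Entitlements:
    compute_plan: str   # "standard" or "premium"
    compute_units: int  # compute blocks summed over all nodes
    storage_plan: str   # hypothetical labels: "storage" or "ha-storage"
    storage_units: int  # blocks of 5 GB disk


def required_entitlements(plan: str, memory_gb: int, storage_gb: int,
                          multi_az: bool) -> Entitlements:
    """Estimate entitlement units for one instance (memory_gb is per node)."""
    block = COMPUTE_BLOCK_GB[plan]
    if memory_gb <= 0 or memory_gb % block:
        raise ValueError(f"{plan} memory must be a positive multiple of {block} GB")
    if storage_gb <= 0 or storage_gb % STORAGE_BLOCK_GB:
        raise ValueError(f"storage must be a positive multiple of {STORAGE_BLOCK_GB} GB")
    nodes = 2 if multi_az else 1  # primary + secondary node for multi-AZ
    return Entitlements(
        compute_plan=plan,
        compute_units=(memory_gb // block) * nodes,  # x or 2x
        storage_plan="ha-storage" if multi_az else "storage",
        storage_units=storage_gb // STORAGE_BLOCK_GB,  # y or z, not doubled
    )


# Development example: 4 GB RAM, 20 GB disk, single AZ
print(required_entitlements("standard", 4, 20, False))
# Medium-scale example: 8 GB RAM per node, 225 GB HA disk, two AZs
print(required_entitlements("premium", 8, 225, True))
```

With these inputs, the sketch reproduces both examples: 2 standard + 4 storage units, and 4 premium + 45 HA storage units.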

Create PostgreSQL on SAP BTP, hyperscaler option instance via CF CLI (Cloud Foundry Command Line Interface) with Parameters

After making sure that sufficient entitlement quota is assigned for your instance, you can create the instance with the cf CLI. Alternatively, you can create it in the SAP BTP cockpit.

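```shell
# List the service plans of the postgresql-db offering
cf marketplace -e postgresql-db

# Create an instance, optionally passing configuration parameters as JSON
cf create-service SERVICE PLAN SERVICE_INSTANCE [-c PARAMETERS_AS_JSON]
```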

For example, the following command:

```shell
cf create-service postgresql-db standard devtoberfest-database -c '{"memory": 2, "storage": 20, "engine_version": "13", "multi_az": false}'
```

requires these entitlements: 1 unit of the standard plan (1 × 2 GB) + 4 units of the storage plan (4 × 5 GB).
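When scripting instance creation, you can build the command as an argument list. The `-c` JSON is then generated by `json.dumps` instead of being quoted by hand.

This is a minimal Python sketch. The function name is hypothetical, and the parameter keys are the ones used in the example command above.

```python
import json
import shlex


def create_service_argv(instance_name: str, plan: str, memory_gb: int,
                        storage_gb: int, engine_version: str,
                        multi_az: bool) -> list:
    """Build the argument list for `cf create-service postgresql-db ...`."""
    params = {
        "memory": memory_gb,
        "storage": storage_gb,
        "engine_version": engine_version,  # a string, e.g. "13"
        "multi_az": multi_az,              # serialized as JSON true/false
    }
    return ["cf", "create-service", "postgresql-db", plan, instance_name,
            "-c", json.dumps(params)]


argv = create_service_argv("devtoberfest-database", "standard", 2, 20, "13", False)
# shlex.join shows the equivalent shell command line
print(shlex.join(argv))
```

Printed with `shlex.join`, the list matches the example command above. Passing `argv` directly to `subprocess.run(argv, check=True)` would run it without going through a shell, which avoids JSON quoting problems on Windows.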

If Required, Retrieve the Instance Configuration Once the Service Instance Is Created

```shell
# Show the instance details and note the service instance ID (GUID)
cf service <service-instance-name>

# Fetch the parameters of the instance
cf curl /v2/service_instances/<service-instance-id>/parameters
```

Sample output:

```json
{
  "memory": 2,
  "storage": 20,
  "engine_version": "13",
  "multi_az": true,
  "locale": "en_US",
  "postgresql_extensions": [
    "ltree",
    "citext",
    "pg_stat_statements",
    "pgcrypto",
    "fuzzystrmatch",
    "hstore",
    "btree_gist",
    "btree_gin",
    "pg_trgm",
    "uuid-ossp"
  ]
}
```
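The parameters output can be checked programmatically, for example to see which extensions still need to be created.

This is a minimal Python sketch. It assumes that `postgresql_extensions` lists the extensions currently enabled on the instance. The function name is hypothetical.

```python
import json

# Output of `cf curl /v2/service_instances/<id>/parameters`, as in the sample above
PARAMETERS_JSON = """
{"memory": 2, "storage": 20, "engine_version": "13", "multi_az": true,
 "locale": "en_US",
 "postgresql_extensions": ["ltree", "citext", "pg_stat_statements", "pgcrypto",
  "fuzzystrmatch", "hstore", "btree_gist", "btree_gin", "pg_trgm", "uuid-ossp"]}
"""


def missing_extensions(parameters_json: str, wanted: list) -> list:
    """Return the wanted extensions that are not listed in the instance parameters."""
    params = json.loads(parameters_json)
    enabled = set(params.get("postgresql_extensions", []))
    return [name for name in wanted if name not in enabled]


print(missing_extensions(PARAMETERS_JSON, ["pgcrypto", "postgis"]))  # ['postgis']
```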

Use PostgreSQL on SAP BTP, hyperscaler option Extension APIs

With the backing services extension APIs, customers can:

- Create a monitoring admin user for the PostgreSQL instance
  - API: /postgresql-db/instances/:id/monitoring-admin, PUT
- Fetch all deleted PostgreSQL instances in a space
  - API: /postgresql-db/org/:orgId/space/:spaceId/deleted-instances, GET
- Create or delete a database extension supported by the hyperscaler
  - API: /postgresql-db/instances/:id/extensions, PUT/DELETE

Note: The base URL of PostgreSQL in China is https://api-backing-services.cn40.data.cn40.platform.sapcloud.cn/.

For more details: Using the ‘PostgreSQL, hyperscaler option’ Extension APIs
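The endpoints above can be combined with the BTP@China base URL from the note above. This is a minimal Python sketch that builds, but does not send, the requests with the standard library.

Treat the following as assumptions:

- The APIs accept the bearer token printed by `cf oauth-token`.
- The helper names are hypothetical.

Request bodies for the PUT calls are not shown here. Take them from the linked documentation.

```python
import urllib.request

# Base URL for BTP@China, from the note above
BASE_URL = "https://api-backing-services.cn40.data.cn40.platform.sapcloud.cn/"


def monitoring_admin_path(instance_id: str) -> str:
    return f"/postgresql-db/instances/{instance_id}/monitoring-admin"  # PUT


def deleted_instances_path(org_id: str, space_id: str) -> str:
    return f"/postgresql-db/org/{org_id}/space/{space_id}/deleted-instances"  # GET


def extensions_path(instance_id: str) -> str:
    return f"/postgresql-db/instances/{instance_id}/extensions"  # PUT or DELETE


def extension_api_request(method: str, path: str, token: str) -> urllib.request.Request:
    """Build (without sending) a request against the extension API base URL."""
    # Assumption: the token comes from `cf oauth-token`, which already prints
    # a "bearer " prefix; the prefix is added here if it is missing.
    auth = token if token.lower().startswith("bearer ") else f"bearer {token}"
    return urllib.request.Request(BASE_URL.rstrip("/") + path,
                                  headers={"Authorization": auth},
                                  method=method)


req = extension_api_request("GET", deleted_instances_path("<org-id>", "<space-id>"), "<token>")
print(req.get_method(), req.full_url)
```

Sending the request would then be a matter of `urllib.request.urlopen(req)`, once real IDs and a valid token are filled in.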

Export Data from PostgreSQL Service Instance

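```shell
# 1. Enable SSH for a running host app and restart it
cf enable-ssh YOUR-HOST-APP
cf restart YOUR-HOST-APP

# 2. Create a service key and display the connection credentials
#    (such as hostname, port, dbname, and username)
cf create-service-key MY-DB EXTERNAL-ACCESS-KEY
cf service-key MY-DB EXTERNAL-ACCESS-KEY

# 3. Open an SSH tunnel from local port 63306 to the database (keep this session open)
cf ssh -L 63306:<hostname>:<port> YOUR-HOST-APP

# 4. In a second terminal, connect through the tunnel
psql -d <dbname> -U <username> -p 63306 -h localhost

# 5. Export the whole database as SQL, or a single table as CSV
pg_dump -h localhost -p 63306 -U <username> <dbname> > /c/dataexport/mydata.sql
psql -h localhost -p 63306 -U <username> -d <dbname> -c "COPY <tablename> TO stdout DELIMITER ',' CSV HEADER;" > /c/dataexport/<tablename>.csv
```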

For more details: Export Data from PostgreSQL Service Instance
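The export steps above can be scripted once the service key credentials are available. This is a minimal Python sketch that turns the JSON part of the `cf service-key` output into argument lists for the SSH tunnel and for `pg_dump`.

Treat the following as assumptions:

- The credential fields are named `hostname`, `port`, `dbname`, `username`, and `password`, matching the placeholders above.
- Some cf CLI versions nest these fields under `credentials`.
- The function names are hypothetical.

```python
import json
import os

LOCAL_PORT = 63306  # local end of the SSH tunnel, as in the commands above


def load_credentials(service_key_json: str) -> dict:
    """Parse the JSON part of `cf service-key` output."""
    data = json.loads(service_key_json)
    # Assumption: some cf CLI versions wrap the fields in a "credentials" object.
    return data.get("credentials", data)


def export_commands(creds: dict, host_app: str, out_file: str) -> dict:
    """Return argv lists for the SSH tunnel and pg_dump, plus the pg_dump environment."""
    tunnel = ["cf", "ssh", "-L", f"{LOCAL_PORT}:{creds['hostname']}:{creds['port']}", host_app]
    dump = ["pg_dump", "-h", "localhost", "-p", str(LOCAL_PORT),
            "-U", creds["username"], "-f", out_file, creds["dbname"]]
    # libpq tools such as pg_dump read PGPASSWORD, so no password prompt appears.
    env = {**os.environ, "PGPASSWORD": creds["password"]}
    return {"tunnel": tunnel, "dump": dump, "dump_env": env}
```

To use it, start the tunnel with `subprocess.Popen(cmds["tunnel"])` and wait until it is established. Then run `subprocess.run(cmds["dump"], env=cmds["dump_env"], check=True)` and finally terminate the tunnel process. Keeping the password in the environment rather than on the command line keeps it out of process listings.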

Backup and Restore

For PostgreSQL database instances deployed on AWS:

- Full snapshot/backup of data is taken daily for standard and premium service plan instances.

- DB transaction logs (WAL logs) are archived continuously to support Point-In-Time Recovery (PITR).

- Backup retention period is 14 days.

Restore to a specified point in time:

```shell
cf create-service postgresql-db <service_plan> <service_instance_name> -c '{"source_instance_id": "<source-instance-id>", "restore_time": "<restore-time>"}'
```

For more details: Restore for PostgreSQL, Hyperscaler Option
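Before requesting a point-in-time restore, it can help to check the requested time against the 14-day retention period stated above.

This is a minimal Python sketch that builds the `-c` JSON for the restore command. Treat the following as assumptions:

- `restore_time` is an ISO 8601 UTC timestamp of the form `YYYY-MM-DDTHH:MM:SSZ`.
- The function name is hypothetical.

Check the exact timestamp format in the linked restore documentation.

```python
import json
from datetime import datetime, timedelta, timezone
from typing import Optional

RETENTION = timedelta(days=14)  # backup retention period stated above


def restore_parameters(source_instance_id: str, restore_time: datetime,
                       now: Optional[datetime] = None) -> str:
    """Build the -c JSON for a point-in-time restore within the retention window."""
    if restore_time.tzinfo is None:
        raise ValueError("restore_time must be timezone-aware")
    now = now or datetime.now(timezone.utc)
    restore_time = restore_time.astimezone(timezone.utc)
    if not now - RETENTION <= restore_time <= now:
        raise ValueError("restore_time is outside the 14-day retention window")
    # Assumed format: ISO 8601 in UTC, e.g. 2024-05-09T08:30:00Z
    return json.dumps({
        "source_instance_id": source_instance_id,
        "restore_time": restore_time.strftime("%Y-%m-%dT%H:%M:%SZ"),
    })


now = datetime(2024, 5, 10, 12, 0, tzinfo=timezone.utc)
print(restore_parameters("<source-instance-id>",
                         datetime(2024, 5, 9, 8, 30, tzinfo=timezone.utc), now))
```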

Run and Deploy CAP with PostgreSQL

The domain model is central to CAP (SAP Cloud Application Programming Model). It defines domain entities with CDS (Core Data Services) and allows seamless integration with external services or databases. CAP and its tools automatically translate CDS models into database-specific schemas. CAP natively supports several databases, including SAP HANA (Cloud), PostgreSQL, SQLite, and H2. To learn more about CAP's database support, see CAP – Database Support.

- For Java, the CAP Java SDK is tested on PostgreSQL 15 and supports most CAP features.
- For Node.js, PostgreSQL used to be consumed through the CAP community-provided adapter cds-pg in combination with cds-dbm. Since cds 7, CAP Node.js supports PostgreSQL natively through the new database services and their PostgreSQL implementation, @cap-js/postgres. At runtime, @cap-js/postgres translates incoming requests from the CDS model to PostgreSQL. It also analyzes the delta between the current state of the database and the current CDS model, deploys the changes to the database, loads CSV files, and more.

For more details, please read: Run and Deploy SAP CAP (Node.js or Java) with PostgreSQL on SAP BTP Cloud Foundry.

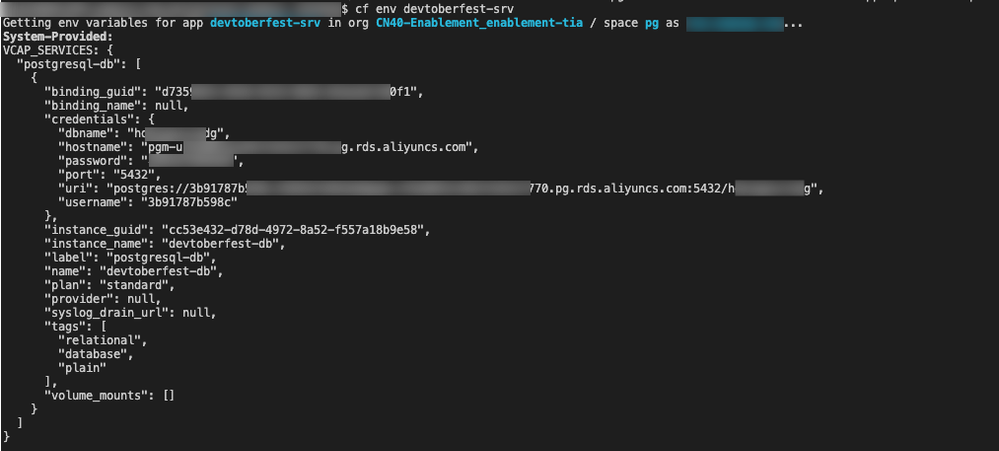

Connect to PostgreSQL in Non-CAP Application

Without CAP, connecting to PostgreSQL and managing the database schema takes additional coding effort. You can do this the same way as with the BYOA (Bring Your Own Account) approach. Bind the PostgreSQL instance to your application and read the connection details from the app's runtime environment variable (VCAP_SERVICES).
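For a non-CAP application, the binding can be read from `VCAP_SERVICES`, where Cloud Foundry groups bound instances by service offering name. This is a minimal Python sketch that extracts libpq-style connection parameters.

Treat the following as assumptions:

- The offering key is `postgresql-db`.
- The credential field names are `hostname`, `port`, `dbname`, `username`, and `password`.
- `sslmode="require"` is appropriate for the managed instance.
- The function name is hypothetical.

```python
import json
import os
from typing import Optional


def postgres_params_from_vcap(vcap_json: Optional[str] = None,
                              label: str = "postgresql-db") -> dict:
    """Return connection parameters for the first bound instance of `label`."""
    raw = vcap_json if vcap_json is not None else os.environ["VCAP_SERVICES"]
    instances = json.loads(raw).get(label, [])
    if not instances:
        raise LookupError(f"no service of type {label!r} is bound to this app")
    creds = instances[0]["credentials"]
    # Assumed credential field names; compare with your app's VCAP_SERVICES.
    return {
        "host": creds["hostname"],
        "port": int(creds["port"]),
        "dbname": creds["dbname"],
        "user": creds["username"],
        "password": creds["password"],
        "sslmode": "require",  # assumption: the instance expects TLS
    }
```

The returned keys are standard libpq connection keywords. With a driver such as psycopg2, the result can be passed as `psycopg2.connect(**postgres_params_from_vcap())`.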

Relevant Sources of Information

- For more information on PostgreSQL on SAP BTP, hyperscaler option service, please check SAP Help Portal.

- For information on PostgreSQL pricing, commercial options, and regions, please check SAP Discovery Center.
