New Git integration in SAP Data Intelligence Cloud

11-02-2022

9:44 AM

Last update of the Blog: 3rd of November 2022

The purpose of this blog is to describe the Git Terminal Application in SAP Data Intelligence Cloud, which is included in release 2022.08 from mid-August 2022 (see the What’s New blog by my colleague Eduardo Schmidt Haussen).

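Here is the table of contents for this blog.

- Introduction
  - Prerequisites and Resources
  - The SAP Data Intelligence Cloud Git Terminal Application
  - Accessing the Git Terminal Application
  - Authentication methods and supported Git Server
- Getting Started
  - Managing Git credentials
  - Cloning a remote Git repository
  - Initializing a local Git repository and pushing it to a remote Git repository
- Advanced Scenarios
  - Recap of SAP Data Intelligence vflow and solution folder structure
  - Ignoring files with Git
  - Working with SAP Data Intelligence solutions
  - Using several Git repositories
- Connecting an on-premise corporate Git Server to SAP Data Intelligence Cloud
  - Connect SAP Business Technology Platform Subaccount to SAP Cloud Connector
  - Connect on-premise corporate Git Server to SAP Cloud Connector
  - Enable communication between SAP Data Intelligence Cloud and SAP Cloud Connector
- Summary

Appendix: Blog History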

Section 3.iii also contains additional details on the structure of an SAP Data Intelligence solution, which can be read as a short excursus. It also shows how the Git Terminal Application can be used to manage such solutions in an SAP Data Intelligence user workspace.

The appendix contains a history of changes and updates to this blog. The blog is subject to change in order to keep it up to date.

The examples presented in this blog are only meant to be “Hello World” examples that showcase certain aspects and features of SAP Data Intelligence Cloud. Although we use SAP Data Intelligence Cloud Generation 1 pipelines in the examples, the capabilities of the Git integration also apply to SAP Data Intelligence Cloud Generation 2 pipelines.

The screenshots and strips in this blog are based on SAP Data Intelligence Cloud 2022.08 and SAP Cloud Connector 2.14.2.

1. Introduction

In this chapter we provide an overview of the Git integration in SAP Data Intelligence Cloud.

i. Prerequisites and Resources

Throughout this blog we assume that the reader is familiar with SAP Data Intelligence Cloud regarding System and File Management as well as pipeline modeling (see the official SAP Help Documentation). We also expect the reader to have a basic understanding of the Git version control software (see the official Git documentation for a detailed description of the technology), of basic Linux bash commands, and of the concept of (Docker) container technology (see the official Docker documentation).

Several useful blogs already describe possible CI/CD processes for productive use and development of SAP Data Intelligence Cloud, the tools used for this purpose, and how to use Git version control in connection with SAP Data Intelligence Cloud. They were written before the native Git integration in the SAP Data Intelligence Cloud Modeler application became available:

- christian.sengstock - SAP Data Intelligence: Git Workflow and CI/CD Process
- thorsten.hapke - Transportation and CI/CD with SAP Data Intelligence
- jacek.klatt2 - SAP Business Technology Platform – integration aspects in the CI/CD approach
- gianlucadelorenzo - Zen and the Art of SAP Data Intelligence. Episode 3: vctl, the hidden pearl you must know

These guides and resources remain valid and have proven useful for different use cases. This blog is intended to complement the above resources and focuses on the new Git Terminal Application that was released with the SAP Data Intelligence Cloud 2022.08 update.

ii. The SAP Data Intelligence Cloud Git Terminal Application

The Git integration in SAP Data Intelligence Cloud is provided via a terminal application that is visible as a tab at the bottom of the SAP Data Intelligence Cloud Modeler application. Throughout the blog we refer to it as the Git Terminal Application.

The Git Terminal Application is a user application meaning that for every user in SAP Data Intelligence Cloud a separate instance of the application is started.

The Git Terminal Application provides comprehensive support for the Git command line client for Linux inside a user workspace; standard Linux commands are available as well. SAP Data Intelligence Cloud release 2022.08 ships version 2.34.1 of the Git command line client for Linux.

The artifacts of the Git Terminal Application are located in the /project folder, which is created when the Docker container for the application is created. It is a user-specific folder that is only accessible from within the Git Terminal Application. The lifetime of this folder is bound to the Git Terminal Application itself: if the application is restarted, the content of the folder is cleared. This is important to know in conjunction with Git credential management (see section 2.i).

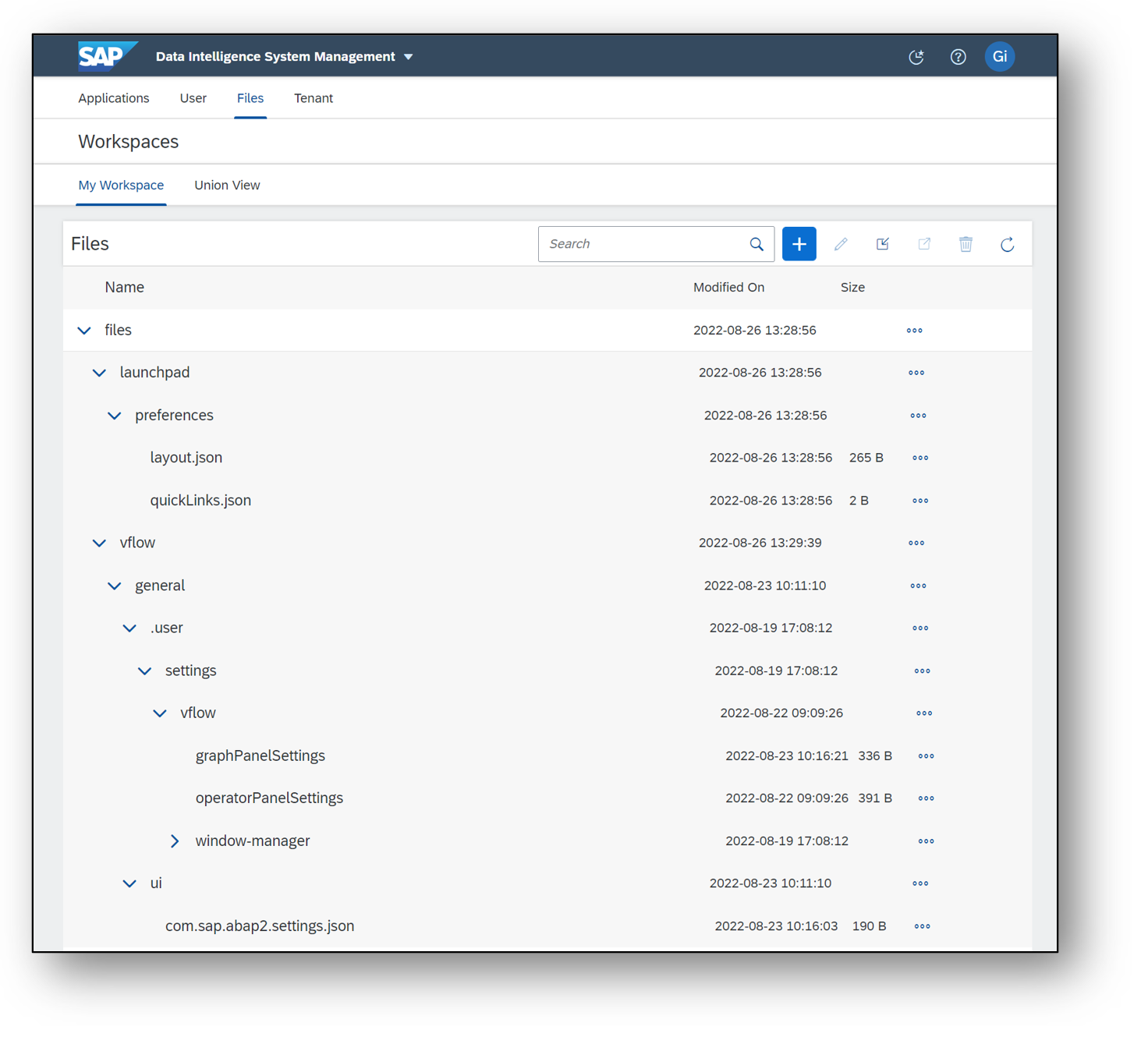

The Git Terminal Application also has access to the vhome directory of a user. This directory is mounted into the Docker container in which the Git Terminal Application is running. It contains the SAP Data Intelligence artifacts that are specific to the user workspace and is exposed in the Files tab of the SAP Data Intelligence Cloud System Management application.

iii. Accessing the Git Terminal Application

In order to see and use the Git Terminal Application inside the SAP Data Intelligence Cloud Modeler application, a user needs to have the dedicated policy sap.dh.gitTerminal.start.

This policy cannot be assigned directly to a user but instead needs to be added to another policy as a child policy. The sap.dh.gitTerminal.start policy is included out-of-the-box in the exposed policies sap.dh.admin (via sap.dh.applicationAllStart) and sap.dh.member.

Sometimes a user is not able to see the Git Terminal Application in the Modeler although the sap.dh.gitTerminal.start policy is assigned. A possible reason is that the required vflow user settings, which are stored in the user workspace, were not set because those files already existed prior to the SAP Data Intelligence Cloud release 2022.08 update. The problem can be solved by following the steps in SAP Note 3235169. Alternatively, log out of the SAP Data Intelligence Cloud tenant (if you are still logged in with the user that is not able to see the Git Terminal Application) and follow exactly the steps described in the screenshot below.

These steps trigger the recreation of the layout file in the vflow/general/.user/settings/vflow/window-manager directory and add the following component to the bottom stack of the SAP Data Intelligence Cloud Modeler user interface.

If the Git Terminal Application still does not show up, perform the following fifth step after the above sequence while the browser window that shows the SAP Data Intelligence Modeler application is in focus.

On Mac, you can achieve an Empty Cache and Hard Reload by pressing Command + Shift + R.

iv. Authentication methods and supported Git Server

With the Git Terminal Application, it is possible to connect both to Git servers that are exposed to the internet and to Git servers that are not. For the latter case, a dedicated chapter at the end of this blog describes how to connect an on-premise corporate Git server to SAP Data Intelligence Cloud by using SAP Cloud Connector. Depending on the configuration of the Git server, password-based as well as token-based authentication is possible. In chapters 2 and 3 we use a Git server that is exposed to the internet, so no special connectivity configuration outside of the Git Terminal Application is necessary.

2. Getting Started

In this section we describe how to manage user specific Git credentials and explain how to create a local Git repository and/or consume a basic remote Git repository in the modeler application.

If not stated otherwise, we make the following assumptions going forward:

i. Managing Git credentials

To manage Git credentials, one can use the following two credential helpers that Git provides out of the box:

- cache
- store

We refer the reader to the corresponding Git documentation for a detailed description of Git credential helpers. In the following we only highlight the commands and configurations that are relevant in our case.

Before configuring a Git credential helper via the Git Terminal Application, we need to set the HOME variable to the project folder (see section 1.ii) in the user workspace. To copy and paste the commands in this blog into the Git Terminal Application, please use the context menu by right-clicking into the terminal, since keyboard shortcuts are not supported yet.

You can view and inspect the project folder by using the Git Terminal Application.

(assuming you are in the vhome folder)
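
The two steps above can be sketched as follows. This is a minimal sketch, assuming the project folder is /project as described earlier; the fallback to a temporary directory is an addition that only serves to make the commands runnable outside the Git Terminal Application.

```shell
# /project exists only inside the Git Terminal Application. The fallback to a
# temporary directory is an assumption so that the sketch also runs elsewhere.
PROJECT_DIR=/project
[ -w "$PROJECT_DIR" ] || PROJECT_DIR="$(mktemp -d)"

# Git looks up its global configuration and credential files relative to HOME.
export HOME="$PROJECT_DIR"

# Inspect the project folder, including hidden files.
ls -la "$HOME"
```

Because HOME is only exported for the current shell session, it has to be set again whenever a new terminal session is started.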

The cache credential helper can be configured by executing the following command.

where <seconds> needs to be replaced by an integer that determines for which period (in seconds) the Git credentials (once entered for the first time during the first operation with a remote Git repository) will be cached by the Git Terminal Application.

The credentials will be cached in memory in a daemon process that is communicating via a socket located in the hidden folder /project/.git-credential-cache.
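
As a hedged example, the cache helper could be configured as shown below. The value 3600 (one hour) is only an illustrative choice for <seconds>, and the temporary-directory fallback is an assumption for running the sketch outside the Git Terminal Application.

```shell
# Point HOME at the project folder (temporary directory as a fallback).
PROJECT_DIR=/project
[ -w "$PROJECT_DIR" ] || PROJECT_DIR="$(mktemp -d)"
export HOME="$PROJECT_DIR"

# Cache credentials in memory for 3600 seconds (example value for <seconds>).
git config --global credential.helper 'cache --timeout=3600'

# Print the configured helper to verify the setting.
git config --global --get credential.helper
```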

The store credential helper can be configured by executing the following command.

Please see the official Git documentation for details. The credentials will be persisted in the hidden file /project/.git-credentials.
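
A corresponding sketch for the store helper, under the same assumptions as before (HOME points to the project folder, with a temporary directory as a fallback outside the Git Terminal Application):

```shell
# Point HOME at the project folder (temporary directory as a fallback).
PROJECT_DIR=/project
[ -w "$PROJECT_DIR" ] || PROJECT_DIR="$(mktemp -d)"
export HOME="$PROJECT_DIR"

# Persist credentials on disk. After the first successful authentication Git
# writes them unencrypted to $HOME/.git-credentials.
git config --global credential.helper store

# Print the configured helper to verify the setting.
git config --global --get credential.helper
```

Since the store helper keeps the credentials in plain text, a personal access token with limited scope is usually preferable to a password here.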

Keep in mind that the lifetime of the project folder is bound to the lifetime of the Docker Container where the Git Terminal Application is running. If the Git Terminal Application is restarted (e.g. the Docker container is recreated) then the credentials need to be configured again.

Without the configuration of a Git credential helper, Git will ask the user to enter valid Git credentials whenever one executes a Git command that communicates with a remote Git repository (e.g. git push, git clone, …).

Depending on the configuration of the Git server, you might need to use a personal access token instead of a password. For how to set up a personal access token for a Git user account, we refer to the documentation of the respective Git server, for example GitHub or GitLab in the case of public Git servers.

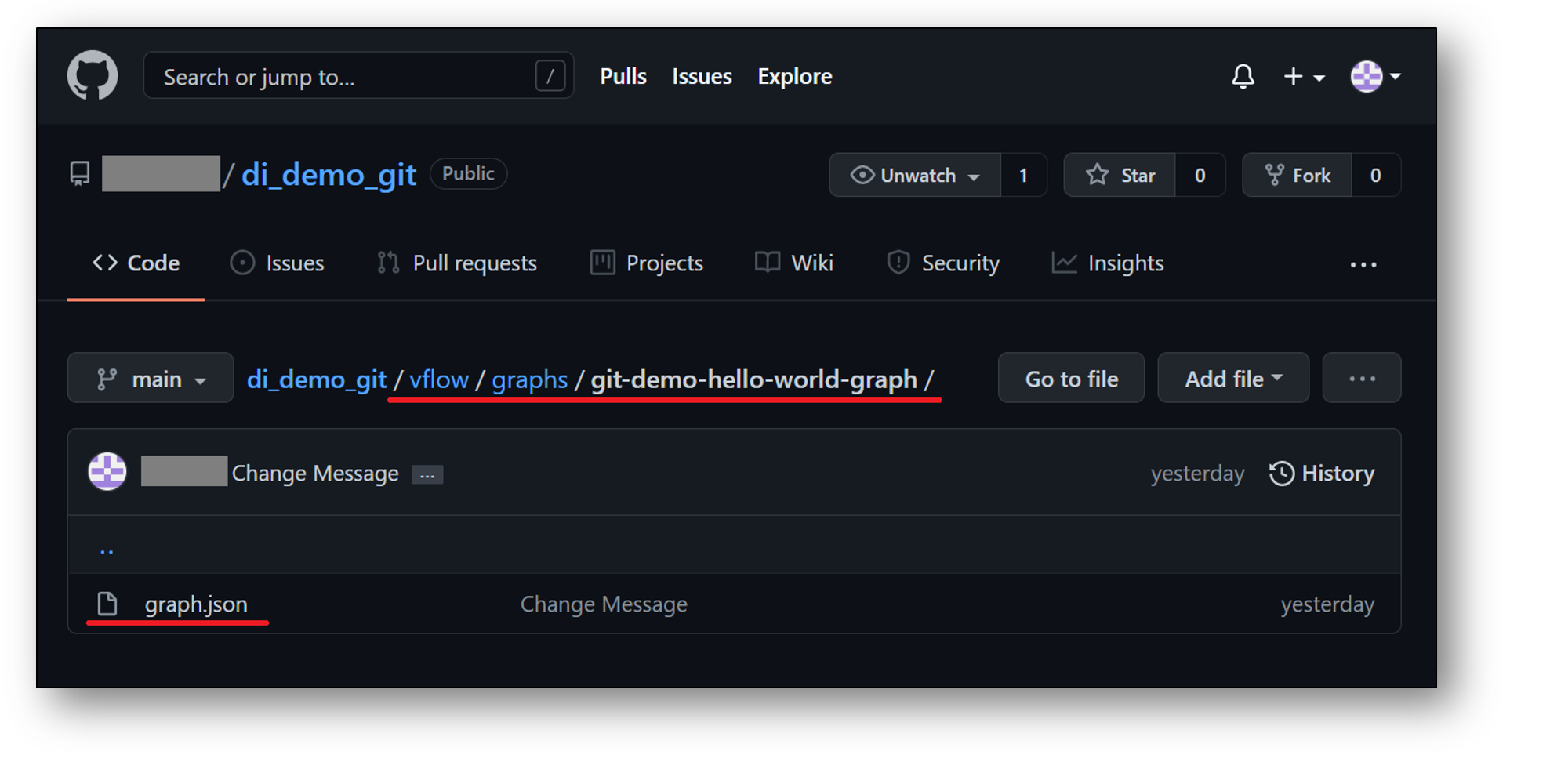

ii. Cloning a remote Git repository

We assume that a Git credential helper has been properly initialized. For this demo, I have set up a basic DI pipeline that sends a “Hello World!” string to a Wiretap operator and pushed the graph to a remote Git repository di_demo_git:

In the SAP Data Intelligence Cloud Modeler the design time artifact of the above git-demo-hello-world-graph is highlighted in the following image.

The graph.json can be found in the following code block:

The folder structure inside the remote repository is set up in such a way that SAP Data Intelligence Cloud can find the graph.json as soon as we clone the content of the repository into a user workspace (see the highlights in red in the above screen of the remote Git repository). We will have a closer look at the folder structure in a user workspace in a later chapter (see sections 3.i and 3.iii).

vflow/graphs/git-demo-hello-world-graph/graph.json

Now we can clone the content of the remote Git repository into the vhome folder with the following command.

Watch out for the dot at the end of the clone command: it tells Git to put the artifacts of the remote Git repository directly into the vhome folder of the user workspace instead of first creating a di_demo_git folder inside vhome.
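
The effect of the trailing dot can be tried out with the following sketch. It is not the exact command sequence of this blog: a local bare repository stands in for the remote di_demo_git repository, the graph.json content is a placeholder, and a temporary directory stands in for vhome. With a real Git server, REMOTE_URL would be the URL of the repository.

```shell
WORK="$(mktemp -d)"
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="$GIT_AUTHOR_NAME" GIT_COMMITTER_EMAIL="$GIT_AUTHOR_EMAIL"

# --- Stand-in for the remote repository (assumption) ---
REMOTE_URL="$WORK/di_demo_git.git"
git init --quiet --bare "$REMOTE_URL"
git --git-dir="$REMOTE_URL" symbolic-ref HEAD refs/heads/main
git init --quiet "$WORK/seed"
cd "$WORK/seed"
git symbolic-ref HEAD refs/heads/main
mkdir -p vflow/graphs/git-demo-hello-world-graph
echo '{}' > vflow/graphs/git-demo-hello-world-graph/graph.json  # placeholder
git add .
git commit --quiet -m "Add git-demo-hello-world-graph"
git push --quiet "$REMOTE_URL" main

# --- The actual step: clone into the current, empty directory ---
mkdir "$WORK/vhome"          # stands in for the vhome folder
cd "$WORK/vhome"
git clone --quiet "$REMOTE_URL" .   # note the trailing dot

# The vflow folder now sits directly in vhome, not in vhome/di_demo_git.
find vflow -type f
```

Note that cloning into the current directory only works if that directory is empty.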

iii. Initializing a local Git repository and pushing it to a remote Git repository

Let us suppose we have created some artifact via the SAP Data Intelligence Modeler in a user workspace and want to push it to a new remote Git repository named di_demo_git.

We assume that a Git credential helper has been properly initialized. For the sake of simplicity, we take the git-demo-hello-world-graph graph from the previous section and assume in addition that there are no other artifacts in the user workspace.

First we initialize the local Git repository, set the remote link and add user.email and user.name to the Git config via the Git Terminal Application.

Next, we commit the demo graph into the local branch main and push the changes to the remote branch main.
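
A minimal sketch of this sequence is shown below. It is an approximation rather than the exact commands of this blog: an empty local bare repository stands in for the new remote di_demo_git repository, the user name and e-mail address are placeholders, graph.json has placeholder content, and user.name and user.email are set on repository level (setting them with --global would work as well).

```shell
WORK="$(mktemp -d)"

# --- Stand-in for the new, empty remote repository (assumption) ---
REMOTE_URL="$WORK/di_demo_git.git"
git init --quiet --bare "$REMOTE_URL"

# --- Stand-in for vhome with the demo graph as its only artifact ---
mkdir -p "$WORK/vhome/vflow/graphs/git-demo-hello-world-graph"
cd "$WORK/vhome"
echo '{}' > vflow/graphs/git-demo-hello-world-graph/graph.json  # placeholder

# Initialize the local repository, set the remote link and the user identity.
git init --quiet
git symbolic-ref HEAD refs/heads/main   # make sure the first commit lands on main
git remote add origin "$REMOTE_URL"
git config user.email "demo@example.com"
git config user.name "Demo User"

# Commit the demo graph to the local branch main and push it to the remote.
git add vflow/graphs/git-demo-hello-world-graph
git commit --quiet -m "Add git-demo-hello-world-graph"
git push --quiet -u origin main
```

The -u option links the local branch main to the remote branch, so later pushes and pulls can be run without naming the remote explicitly.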

3. Advanced Scenarios

In the Getting Started chapter we assumed the user workspace to be empty for our Git commands to work. Usually, the user workspace is not completely empty. Another tacit assumption that we made above was the functional integrity of the SAP Data Intelligence Cloud artifacts in a Git repository, which might not hold either. In the following sections we drop those assumptions and provide some material and guidance on how certain scenarios could be handled with the Git Terminal Application. This chapter is just a summary of ideas and concepts regarding Git usage in SAP Data Intelligence Cloud. We will not cover all Git capabilities that might come in handy in certain circumstances, and we assume the reader to be familiar with the Git concepts used in this chapter.

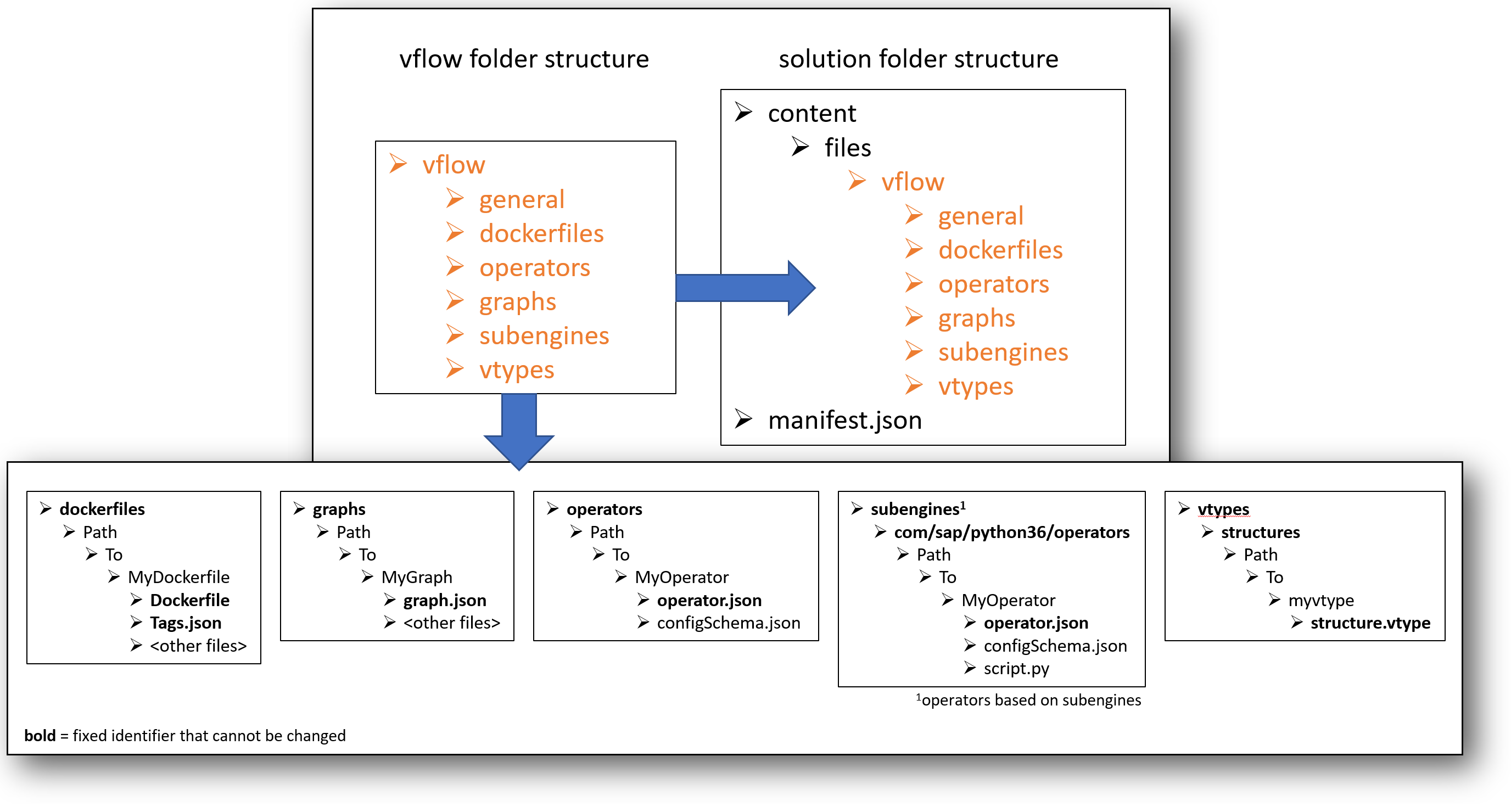

i. Recap of SAP Data Intelligence vflow and solution folder structure

The following screenshot summarizes the vflow and solution folder structure from a design time artifacts point of view. The solution folder structure contains a manifest.json file at the root of the structure that contains additional metadata. The vflow folder structure is used in SAP Data Intelligence Cloud user workspaces to structure the different SAP Data Intelligence Cloud artifacts and the structure is important for SAP Data Intelligence Cloud to properly find and list the artifacts in the SAP Data Intelligence Cloud Modeler Application. We refer the reader to the blog about Transportation and CI/CD with SAP Data Intelligence of my colleague Thorsten Hapke for more information on solution handling in the context of CI/CD.

We will shed more light on the structure of SAP Data Intelligence solutions in section 3.iii.

ii. Ignoring files with Git

There are several ways to tell Git not to track certain files in a Git work tree. We briefly summarize them in the following table, listed in order of precedence.

We refer the reader to the official Git documentation for gitignore files for more details on the syntax and capabilities of the functionality. We only highlight two use cases in our context.

Let’s again look at the remote Git repository di_demo_git from the last section and start with the following non-empty user workspace that contains artifacts that are created automatically when a user logs in to an SAP Data Intelligence Cloud tenant and opens the SAP Data Intelligence Modeler application.

Since these artifacts are created in every user workspace during login, we can add them to a .gitignore file and add that file to the remote Git repository. First, we initialize a local Git repository, set the remote origin and create the .gitignore file with the corresponding content.

When adding all content of the user workspace to the Git staging area we see that only the .gitignore file as well as the content of the vflow/general/ui subfolder is tracked by Git.
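
The behavior can be reproduced with the following sketch. The file names and the ignore pattern are illustrative assumptions: the automatically created artifacts are represented by a hypothetical settings file below vflow/general/.user, and the actual .gitignore content depends on what exists in your user workspace.

```shell
WORK="$(mktemp -d)"
mkdir "$WORK/vhome"          # stands in for the vhome folder
cd "$WORK/vhome"

# Hypothetical stand-ins for the artifacts found in the user workspace.
mkdir -p vflow/general/.user/settings vflow/general/ui
echo '{}' > vflow/general/.user/settings/layout.json
echo '{}' > vflow/general/ui/categories.json

git init --quiet

# Ignore the per-user settings directory (illustrative pattern).
printf '%s\n' 'vflow/general/.user/' > .gitignore

# Stage everything and list what Git actually tracks.
git add .
git ls-files
```

In this sketch only .gitignore and the file below vflow/general/ui end up in the staging area, while the settings file is skipped.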

Please be aware that the vflow/general/ui subfolder holds artifacts with information about graph and operator categories. Therefore, it is generally not recommended to exclude this directory from Git repositories that contain graphs and operators. We will have a closer look at these category artifacts when we talk about SAP Data Intelligence solutions in section 3.iii.

Now we add the remote origin and checkout the main branch of the remote repository.

Assume that we have a non-empty user workspace with graphs, operators and other artifacts. Assume in addition that we want to check out our di_demo_git remote Git repository into such a user workspace to do some tests (e.g. replacing the Python operator in the git-demo-hello-world-graph with a new custom one that we currently keep in the user workspace). In such a case it is possible to configure a local Git repository in the user workspace in such a way that all artifacts are ignored by Git except the ones that we want to be tracked by Git. Since, in general, one does not want to have such a configuration stored in the remote Git repository as well, one can use the .git/info/exclude file of the local Git repository.

The following commands achieve what we want:

By suitable manipulations of the fourth command one can let Git track additional files (e.g. the custom operator that we wanted to test) of the user workspace.
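
One possible way to set up such a local configuration is sketched below. It is not necessarily the command sequence referred to above: the exclude patterns are one common idiom for "ignore everything except a given path", and the custom operator file is a hypothetical local-only artifact.

```shell
WORK="$(mktemp -d)"
mkdir "$WORK/vhome"          # stands in for a non-empty vhome folder
cd "$WORK/vhome"
mkdir -p vflow/graphs/git-demo-hello-world-graph vflow/operators/my/custom
echo '{}' > vflow/graphs/git-demo-hello-world-graph/graph.json
echo '{}' > vflow/operators/my/custom/operator.json   # hypothetical artifact

git init --quiet
mkdir -p .git/info

# Local-only ignore rules: this file is never committed or pushed.
cat > .git/info/exclude <<'EOF'
# Ignore every file ...
*
# ... but keep descending into directories ...
!*/
# ... and track only the demo graph.
!vflow/graphs/git-demo-hello-world-graph/**
EOF

# Stage everything and list what Git actually tracks.
git add -A
git ls-files
```

Adding further negated patterns (lines starting with an exclamation mark) to the exclude file lets Git track additional paths, for example a custom operator under test.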

For large remote Git repositories with many different artifacts, this approach might not be practical.

iii. Working with SAP Data Intelligence solutions

To complement the above sections, we now recap the structure and content of SAP Data Intelligence solutions and briefly highlight how to use the Git Terminal Application in conjunction with such solutions. We will not explain every artifact that can be included in such a solution but rather highlight the artifacts that are most frequently used in SAP Data Intelligence Cloud pipeline development. Hence, this section is not meant to be comprehensive regarding the development of SAP Data Intelligence solutions.

As outlined in section 3.i, an SAP Data Intelligence solution consists of a manifest.json file that contains the metadata, like the solution name and version, and a content directory with a vflow folder structure in it:

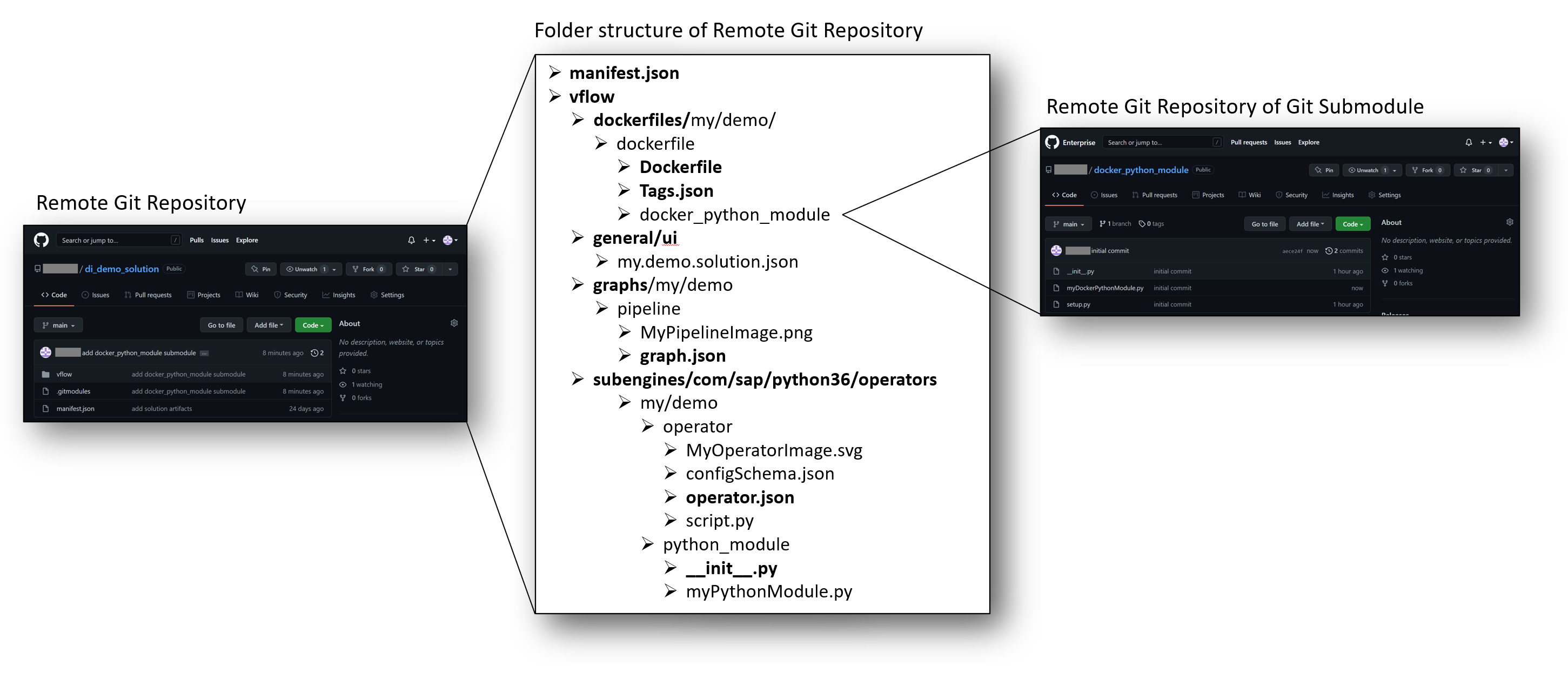
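
To make the layout tangible, the following sketch creates an empty solution skeleton. The solution name and version are placeholders, the manifest.json shown here only contains the two metadata fields mentioned above (a real manifest may require more), and the directory names are the vflow directories used in this section.

```shell
# Placeholder location and name for the solution skeleton.
SOLUTION_DIR="$(mktemp -d)/my_demo_solution"

# content/vflow mirrors the vflow folder structure of a user workspace.
mkdir -p "$SOLUTION_DIR/content/vflow/graphs" \
         "$SOLUTION_DIR/content/vflow/dockerfiles" \
         "$SOLUTION_DIR/content/vflow/general/ui" \
         "$SOLUTION_DIR/content/vflow/subengines/com/sap/python36/operators"

# Metadata of the solution (placeholder values).
cat > "$SOLUTION_DIR/manifest.json" <<'EOF'
{
  "name": "my_demo_solution",
  "version": "0.0.1"
}
EOF

# Show the resulting structure.
(cd "$SOLUTION_DIR" && find . | sort)
```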

Additional vflow artifacts like vtypes and templates will not be explained in this section. We will now explain the main artifacts of an SAP Data Intelligence solution like graphs, Docker files and custom operators with a sample solution. Since the vhome directory of a user workspace only contains a vflow folder structure to store the artifacts, a possible remote Git repository that holds a solution should have a manifest.json file as well as a vflow directory at its root level. Let’s assume we have the following structure of an SAP Data Intelligence solution available in a remote Git repository di_demo_solution:

We have marked such paths, directories and files in bold whose name cannot be chosen freely but is strictly defined either by the vflow structure (e.g., dockerfiles, general/ui, …) or by programming languages (e.g., __init__.py).

We are not going to evaluate the upsides and downsides of Git submodules but will simply use them to show how the Git Terminal Application can be used in conjunction with SAP Data Intelligence solutions. We refer the reader to the official documentation of Git submodules for more comprehensive guidance and only provide the commands that are needed to follow this blog. To achieve the above Git setup, that is, to include the remote docker_python_module Git repository as a Git submodule inside the di_demo_solution remote Git repository, one needs to check out the remote Git repository di_demo_solution locally and then execute the following commands.
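
A hedged sketch of adding such a submodule follows. Local repositories stand in for the two remote repositories, the submodule path is hypothetical, and the option -c protocol.file.allow=always is only needed because the stand-in remote is a local path; with a real Git server URL it can be omitted.

```shell
WORK="$(mktemp -d)"
export GIT_AUTHOR_NAME="Demo User" GIT_AUTHOR_EMAIL="demo@example.com"
export GIT_COMMITTER_NAME="$GIT_AUTHOR_NAME" GIT_COMMITTER_EMAIL="$GIT_AUTHOR_EMAIL"

# --- Stand-in for the remote docker_python_module repository (assumption) ---
git init --quiet "$WORK/module_src"
cd "$WORK/module_src"
git symbolic-ref HEAD refs/heads/main
touch __init__.py
git add .
git commit --quiet -m "Initial module commit"
MODULE_URL="$WORK/docker_python_module.git"
git clone --quiet --bare "$WORK/module_src" "$MODULE_URL"

# --- Stand-in for the local checkout of di_demo_solution (assumption) ---
git init --quiet "$WORK/di_demo_solution"
cd "$WORK/di_demo_solution"
git symbolic-ref HEAD refs/heads/main
echo '{}' > manifest.json    # placeholder content
git add manifest.json
git commit --quiet -m "Initial solution commit"

# --- The actual step: register the module repository as a submodule ---
SUBMODULE_PATH=vflow/dockerfiles/my/demo/dockerfile/docker_python_module  # hypothetical
git -c protocol.file.allow=always submodule add "$MODULE_URL" "$SUBMODULE_PATH"
git commit --quiet -m "Add docker_python_module as Git submodule"

# .gitmodules records the path and URL of the submodule.
cat .gitmodules
```

After pushing this commit, other users obtain the submodule content by cloning with --recurse-submodules or by running git submodule update --init in an existing clone.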

We now explain the three main building blocks (Docker files, graphs, custom operators) of the above example.

Docker files are used to add additional resources to the Docker image that is used as a basis for the runtime container of pipelines and operators.

The dockerfiles directory of an SAP Data Intelligence Solution contains the Docker file artifacts. Only the dockerfiles root directory has a fixed name. Apart from that Docker files can be organized in arbitrary folder structures (e.g., my/demo/dockerfile in the above example).

In the SAP Data Intelligence context such Docker images are used to provide the proper runtime environments for operators and pipelines that run, for instance, custom code or execute compiled binaries. Selection of Docker files and, hence, the resulting Docker images for certain pipeline operators is achieved via tag annotations in pipelines and operators (see the corresponding SAP Help documentation). The following picture highlights this in case of the above sample SAP Data Intelligence Solution.

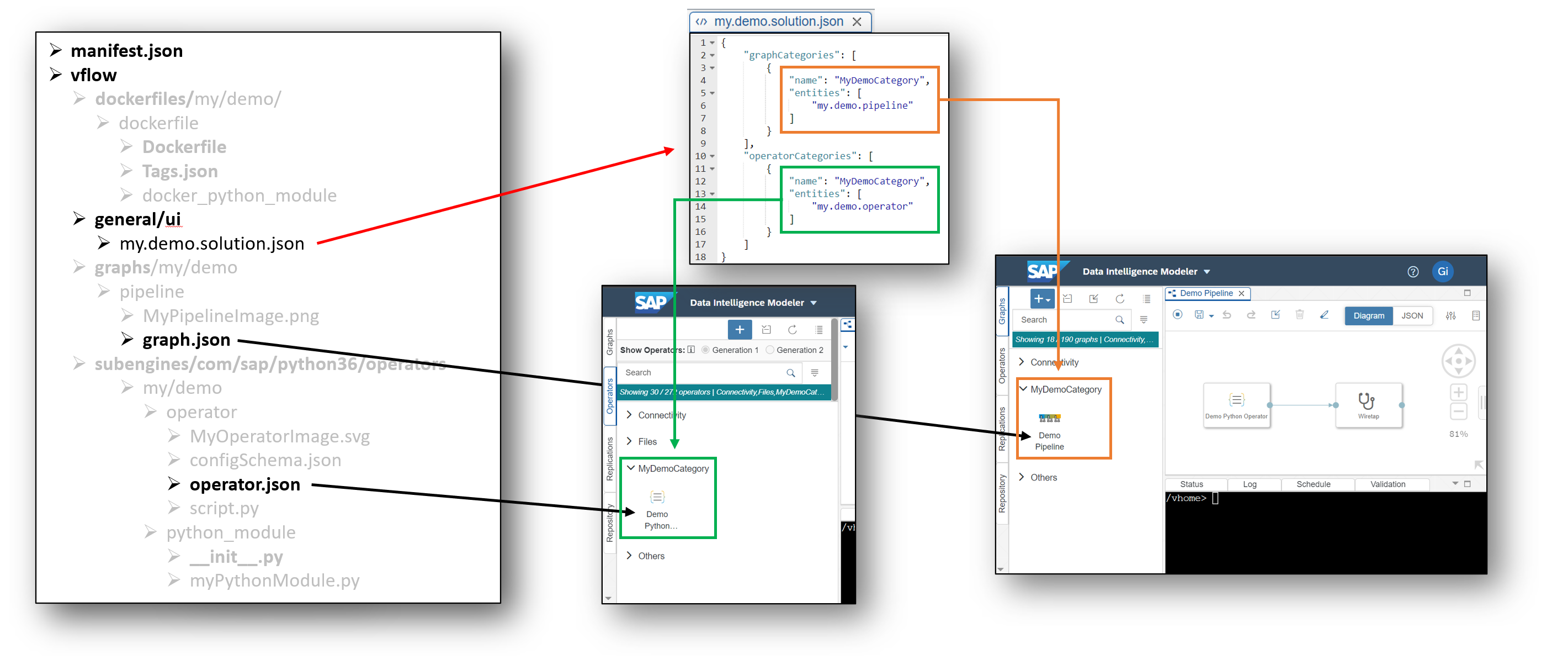

The general/ui directory contains .json files that define custom categories for pipelines and operators in order to group these artifacts in the SAP Data Intelligence Modeler user interface. The following picture shows the JSON structure of the my.demo.solution.json file and how the category grouping is reflected on the user interface.

In case the general/ui directory contains more than one .json file, they will be merged.

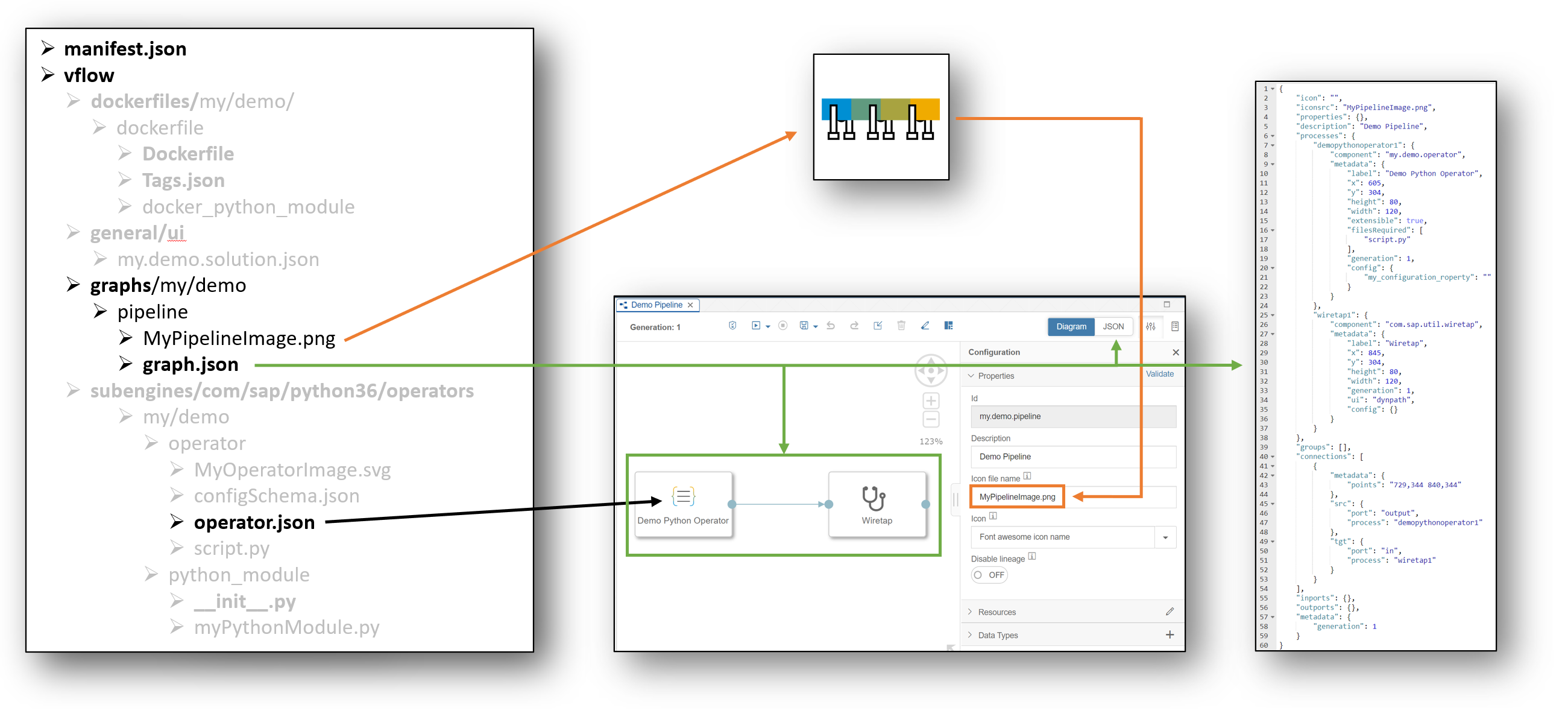

The graphs directory of an SAP Data Intelligence Solution contains the graph design time artifacts. Only the graphs root directory has a fixed name. Apart from that, graphs can be organized in arbitrary folder structures (e.g., my/demo/pipeline in the above example).

The directory of a graph definition can also contain an additional launchConfigs folder with default configurations for substitution parameters that are used in operator configurations in the graph. We did not include such a folder in the above example and will not dive deeper into the topic of launch configurations. We refer the reader to the SAP Help documentation on graph creation. For the sake of completeness, the following image shows what such a launchConfigs directory could look like in our example.

The subengines/com/sap/python36/operators directory contains the artifacts of custom Python operators as well as possible additional custom Python modules that are used in custom Python operators. Only the above path has a fixed name. Apart from that, custom Python operators and modules can be organized in arbitrary folder structures (e.g., my/demo/operator and my/demo/python_module in the above example). The following image illustrates such a custom operator setup.

The python_module is recognized by the Python runtime of the corresponding Docker container since the PYTHONPATH was extended with the subengines/com/sap directory in the past. The idea of extending the PYTHONPATH with this directory is to give pipeline and operator developers the possibility to structure Python code that is meant to be used in custom Python operators in convenient packages to improve maintainability. Regarding when to use this approach and when to include Python modules via custom Docker images, one can stick to the following rough-and-ready rule. If the Python module is only used by a specific operator or by a set of operators, then one can include the module directly with the custom operator design time artifacts. If the module is of a more general nature, can be considered independent of SAP Data Intelligence artifacts, and is perhaps also developed independently of SAP Data Intelligence pipelines in a dedicated Git repository, then one can ingest the Python module via a custom Docker image.

With the Git Terminal Application it is now also possible to bundle the artifacts in the user workspace as an SAP Data Intelligence solution, following the approach outlined at the end of the blog by my colleague Christian Sengstock about Git Workflow and CI/CD Process. For this you need vctl installed in the container that runs the Git Terminal Application. To achieve this, import the vctl command line client file into the user workspace and then execute the following commands in the Git Terminal Application:

You can then check if vctl is working by executing vctl in the command line. The result should look similar to the one in the screen below.

One is then able to bundle a solution out of the artifacts that are currently present in the user workspace by following the steps outlined at the end of the blog by my colleague Christian Sengstock on SAP Data Intelligence: Git Workflow and CI/CD Process.

iv. Using several Git repositories

By renaming the Git directory .git (e.g., to first.git) and using the capabilities of ignoring files in Git (see section 3.ii), it is also possible to set up and manage multiple Git repositories (e.g., by adding a second.git repository as well) at the same time in one SAP Data Intelligence Cloud user workspace, all sharing the vhome directory as their worktree. To switch between the different Git repositories, one would need to use the variables GIT_WORK_TREE and GIT_DIR, and one needs to control manually (with additional .gitignore configuration) which artifacts of the joint worktree directory are tracked by which Git repository. We are not going to tackle this rather complicated and error-prone approach in this blog and refer the reader to the official Git documentation on the environment variables used by Git and on Git worktrees. We do not recommend such an approach unless you encounter a justified exceptional case.

4. Connecting an on-premise corporate Git Server to SAP Data Intelligence Cloud

i. Connect SAP Business Technology Platform Subaccount to SAP Cloud Connector

We assume that an SAP Cloud Connector is installed in the on-premise landscape and that there is an SAP Business Technology Platform Subaccount with an SAP Data Intelligence Cloud instance. The following Cloud Connector configurations need to be performed with a user that has the rights to add SAP BTP Subaccounts to SAP Cloud Connector. For more details on SAP Cloud Connector, we refer the reader to the official SAP Help Documentation.

The SAP Cloud Connector Location Id is typically used when you set up multiple SAP Cloud Connectors for a single BTP subaccount. For this blog, we assume that the SAP Cloud Connector is configured with a Location Id. The Cloud Connector Location Id is used later on when connecting SAP Data Intelligence Cloud to the SAP Cloud Connector. Depending on the configuration of the identity provider, the string in the Password field might consist of several pieces apart from the actual user password.

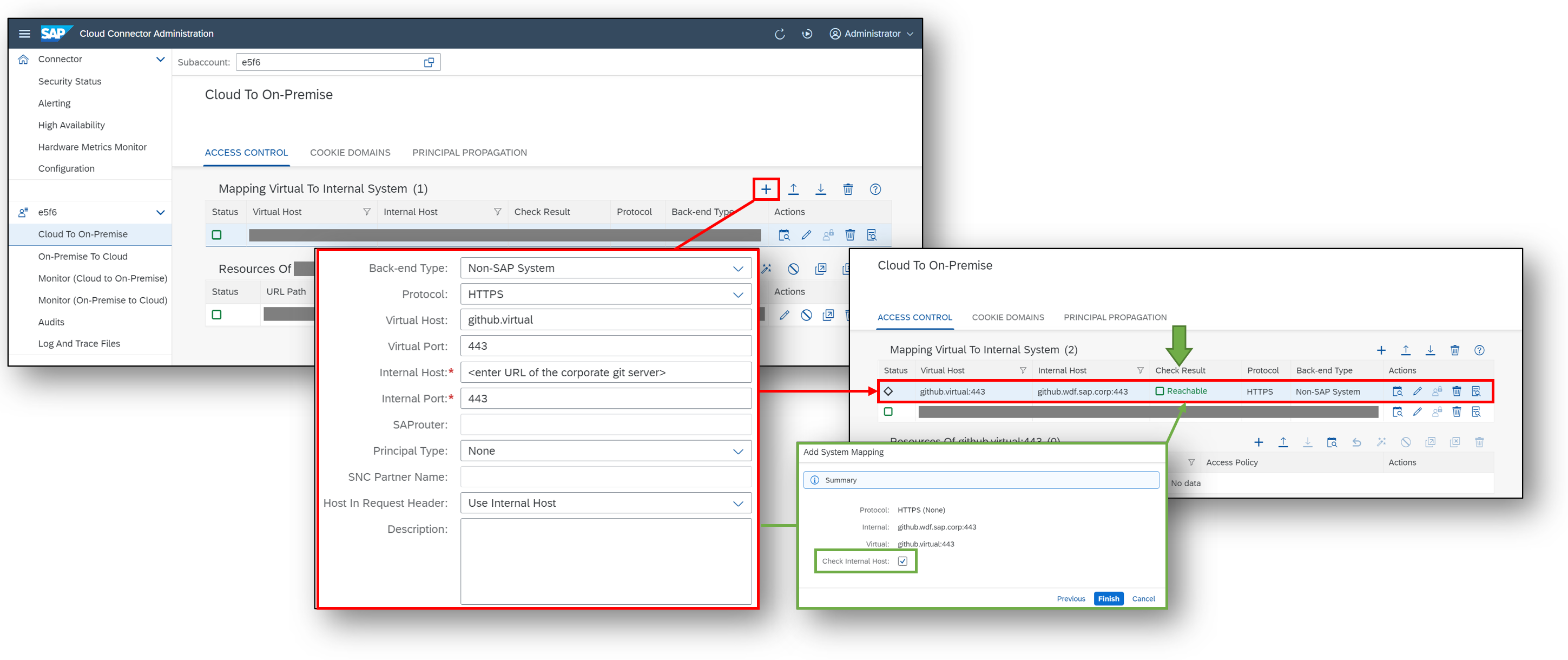

ii. Connect on-premise corporate Git Server to SAP Cloud Connector

Next, we create a Cloud To On-Premise mapping to the on-premise corporate Git server in the SAP Cloud Connector. To perform a connectivity check against the internal host immediately during the creation process, one can optionally mark the corresponding checkbox on the summary popup of the creation process. As recommended by the SAP Cloud Connector, we use “github.virtual” as the virtual hostname. This hostname is used later when interacting with the Git server from within the Git Terminal Application.

The check result should show the internal host as reachable. Next, we add the resources that shall be accessible via the mapping to the on-premise corporate Git server that we just created. In the image below we allowed access to all paths on the on-premise corporate Git server.

iii. Enable communication between SAP Data Intelligence Cloud and SAP Cloud Connector

Switching to the SAP Business Technology Platform Subaccount that we used in the last section we should see the following status in the Cloud Connectors section:

We now switch to an SAP Data Intelligence Cloud tenant that is deployed in the SAP Business Technology Platform Subaccount that we used above. The following steps in SAP Data Intelligence Cloud require a user with write access to the SAP Data Intelligence Connection Management as well as access to the SAP Data Intelligence Modeler and the Git Terminal Application. We create a new connection via the SAP Data Intelligence Cloud Connection Management application. For the example in the screenshot and in the following steps, we use “Corporate_Git” as the connection id of the new connection.

If an SAP Data Intelligence Cloud user who has access to the SAP Data Intelligence Cloud Modeler and the Git Terminal Application in it wants to connect to the on-premise corporate Git server, the following commands need to be executed in the Git Terminal Application. The script interacts with the SAP Data Intelligence Cloud Connection Management and retrieves the necessary configuration parameters. Finally, it configures Git to use the SAP Cloud Connector for all subsequent commands.

Hint: After a while this configuration script may need to be executed again, as git configuration parameters may change. For example, the authorization token is valid for only one hour.

The execution should look like the following with no errors occurring.

Of course, it is also possible to save the above bash script in a file (e.g., script.sh) and execute it via the source command in the Git Terminal Application (e.g., source script.sh).

If we check the global Git configuration which is located in /project/.gitconfig it should look similar to the following:

In case of any errors regarding the http.extraHeader and http.proxy properties in the config file you can always remove the properties with the following commands:

A more detailed explanation of the http section in the Git config file as well as the --unset flag can be found in the official Git documentation.
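
Removing the two properties could look like the following sketch. The proxy address and header value are hypothetical and only serve to have something to remove, and a scratch HOME is used so that the sketch does not touch a real configuration; inside the Git Terminal Application the global configuration is /project/.gitconfig.

```shell
# Scratch HOME (assumption) so that no real configuration is modified.
export HOME="$(mktemp -d)"

# Hypothetical values, only to have something to remove.
git config --global http.proxy "http://proxy.example:8080"
git config --global http.extraHeader "Example-Header: example-value"

# Remove both properties again. --unset-all also covers the case in which
# the header was added more than once.
git config --global --unset http.proxy
git config --global --unset-all http.extraHeader

# Both keys are gone from the global configuration.
git config --global --list
```

A plain --unset fails if a key holds several values, which is why --unset-all is the safer choice for http.extraHeader.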

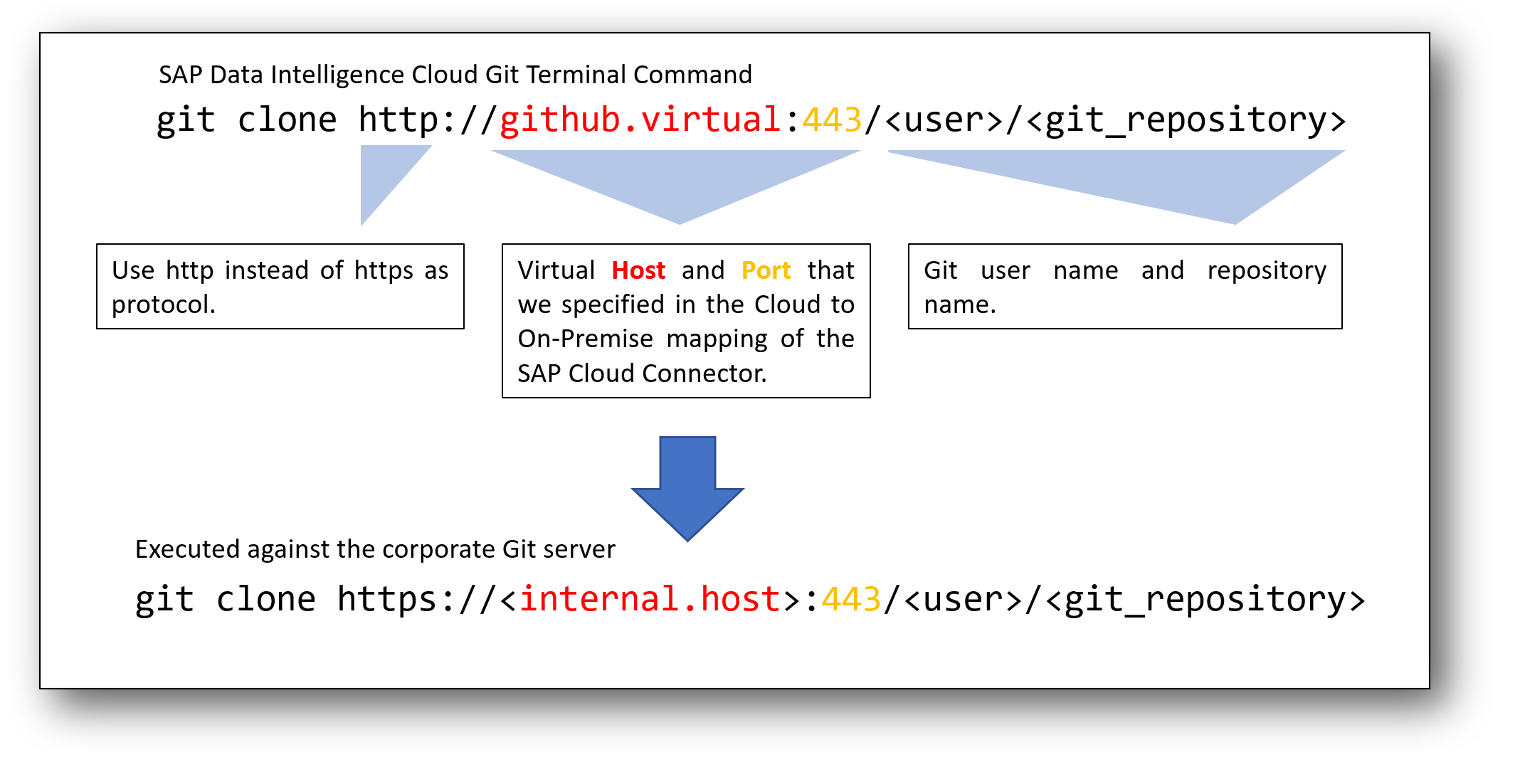

Assuming that the Git credential helper was configured properly, the user is now able to execute Git commands against the on-premise corporate Git server. Cloning a remote Git repository would work with the following command:

Regarding connectivity configurations on SAP Business Technology Platform and in SAP Cloud Connector we refer the reader to the corresponding SAP Help Documentations of SAP Business Technology Platform (see also the section about the Connectivity Proxy for Kubernetes) and SAP Cloud Connector (see also the section about inbound connectivity).

Assuming that the di_demo_git remote Git repository is set up on an on-premise corporate Git server that is not exposed to the internet a proper clone of this remote repository with the Git Terminal Application would look like the following.

5. Summary

In this blog you learned about the new Git integration capabilities in SAP Data Intelligence,

Finally, at the end of this blog post, I would like to thank my colleagues Mahesh Shivanna, Reinhold Kautzleben and Christian Schuerings for the great support and guidance as well as fruitful discussions during the creation of the content for this blog.

Feel free to

It is the purpose of this blog to describe the Git Terminal Application in SAP Data Intelligence Cloud that is included in release 2022.08 mid of August 2022 (see the What’s New Blog of my colleague Eduardo Schmidt Haussen)

Here is the table of contents for this blog.

- Introduction

- Prerequisites and Resources

- The SAP Data Intelligence Cloud Git Terminal Application

- Accessing the Git Terminal Application

- Authentication methods and supported Git Server

- Getting Started

- Managing Git credentials

- Cloning a remote Git repository

- Initialize a local Git repository and pushing it to a remote Git repository

- Advanced Scenarios

- Recap of SAP Data Intelligence vflow and solution folder structure

- Ignoring files with Git

- Working with SAP Data Intelligence solutions

- Using several Git repositories

- Connecting an on-premise corporate Git Server to SAP Data Intelligence Cloud

- Connect SAP Business Technology Subaccount to SAP Cloud Connector

- Connect on-premise corporate Git Server to SAP Cloud Connector

- Enable communication between SAP Data Intelligence Cloud and SAP Cloud Connector

- Summary

Appendix: Blog History

Section 3.iii also contains additional details on the structure of an SAP Data Intelligence Solution which can be seen as a short excursus. Nevertheless, it is also shown how the Git Terminal Application can be leveraged to manage such solutions in a SAP Data Intelligence user workspace.

The Appendix is going to contain a history for changes and updates on the Blog. The Blog is subject to change in order to keep it up to date.

Examples presented in this blog are only meant to be “Hello World” examples that serve the purpose to showcase certain aspects/features of SAP Data Intelligence Cloud. Although we are using examples of SAP Data Intelligence Cloud Generation 1 Pipelines the Git capabilities of the Git integration also remain valid for SAP Data Intelligence Cloud Generation 2 Pipelines.

The screenshots and strips in this blog are based on SAP Data Intelligence Cloud 2022.08 and SAP Cloud Connector 2.14.2.

1. Introduction

In this chapter we provide an overview of the Git integration in SAP Data Intelligence Cloud.

i. Prerequisites and Resources

Throughout this blog we assume the reader to be familiar with SAP Data Intelligence Cloud regarding System and File Management as well as pipeline modeling (see the official SAP Help Documentation). We also expect the reader to have a basic understanding of the Git version control software (see the official Git documentation for a detailed explanation of the technology) as well as basic Linux bash commands and the concept of (Docker) container technology (see the official Docker documentation).

Several very useful blogs already exist that were created prior to the native Git integration inside the SAP Data Intelligence Cloud Modeler Application. They describe possible CI/CD processes for productive usage and development of SAP Data Intelligence Cloud, the tools that are leveraged for this purpose, and how to use Git version control in connection with SAP Data Intelligence Cloud.

- christian.sengstock - SAP Data Intelligence: Git Workflow and CI/CD Process

- thorsten.hapke - Transportation and CI/CD with SAP Data Intelligence

- jacek.klatt2 - SAP Business Technology Platform – integration aspects in the CI/CD approach

- gianlucadelorenzo - Zen and the Art of SAP Data Intelligence. Episode 3: vctl, the hidden pearl you must know

These guides and resources remain valid and have proven to be useful for different use cases. This blog is intended to complement the above resources and focuses on the new Git Terminal Application that was released with the SAP Data Intelligence Cloud 2022.08 update.

ii. The SAP Data Intelligence Cloud Git Terminal Application

The Git integration in SAP Data Intelligence Cloud is provided via a terminal application that is visible as a tab at the bottom of the SAP Data Intelligence Cloud Modeler application. Throughout the blog we refer to it as the Git Terminal Application.

The Git Terminal Application is a user application meaning that for every user in SAP Data Intelligence Cloud a separate instance of the application is started.

The Git Terminal Application provides comprehensive support for the Git command line client for Linux inside a user workspace; standard Linux commands are available as well. With SAP Data Intelligence Cloud release 2022.08, version 2.34.1 of the Git command line client for Linux is shipped.

The artifacts of the Git Terminal Application are in the /project folder that is created during the Docker container creation for the application. It is a user-specific folder which is only accessible from within the Git Terminal Application. The lifetime of this folder is bound to the Git Terminal Application itself: if the application is restarted, the content of the folder is cleared. This is important to know in conjunction with Git credential management (see section 2.i).

The Git Terminal Application also has access to the vhome directory of a user. In the Docker container where the Git Terminal Application is running, this directory is mounted. This directory contains the user workspace specific SAP Data Intelligence artifacts and is exposed in the Files tab of the SAP Data Intelligence Cloud System Management Application.

iii. Accessing the Git Terminal Application

In order for a user to be able to see and use the Git Terminal Application inside the SAP Data Intelligence Cloud Modeler application the user needs to have the dedicated policy sap.dh.gitTerminal.start:

This policy cannot be assigned directly to a user but instead needs to be added to another policy as a child policy. The sap.dh.gitTerminal.start policy is included out-of-the-box in the exposed policies sap.dh.admin (via sap.dh.applicationAllStart) and sap.dh.member.

Common Problem: The Git Terminal Application is not visible

Sometimes a user is not able to see the Git Terminal Application in the Modeler although the sap.dh.gitTerminal.start policy is assigned to them. A possible reason is that the required vflow user settings that are stored in the user workspace were not set because those files already existed prior to the SAP Data Intelligence Cloud release 2022.08 update. The problem can be solved by following the steps in SAP Note 3235169. Alternatively, log out of the SAP Data Intelligence Cloud tenant (if you are still logged in with the user that is not able to see the Git Terminal Application) and follow exactly the steps described in the screenshot below.

These steps trigger the recreation of the layout file in the vflow/general/.user/settings/vflow/window-manager directory and add the following component to the bottom stack of the SAP Data Intelligence Cloud Modeler user interface.

{

"type": "component",

"id": "Terminal Panel",

"isClosable": false,

"isExtension": false,

"constrainDragByID": "Bottom",

"reorderEnabled": true,

"minHeight": 20,

"componentName": "Terminal Panel",

"componentState": {

"title": "Git Terminal",

"componentName": "Terminal Panel"

},

"title": "Git Terminal"

}

If the Git Terminal Application is still not showing up, execute the following 5th step after the above sequence while the browser window showing the SAP Data Intelligence Modeler Application is in focus.

On Mac, you can achieve an Empty Cache and Hard Reload by pressing Command + Shift + R.

iv. Authentication methods and supported Git Servers

With the Git Terminal Application, it is possible to connect both to Git servers that are exposed to the internet and to Git servers that are not. For the latter case there is a dedicated chapter at the end of this blog that describes how to connect an on-premise corporate Git server to SAP Data Intelligence Cloud by using SAP Cloud Connector. Depending on the configuration of the Git server, password-based as well as token-based authentication is possible. In chapters 2 and 3 we use a Git server that is exposed to the internet, so no special connectivity configuration outside of the Git Terminal Application is necessary.

2. Getting Started

In this section we describe how to manage user specific Git credentials and explain how to create a local Git repository and/or consume a basic remote Git repository in the modeler application.

If not stated otherwise, we make the following assumptions going forward:

- We have an SAP Data Intelligence Cloud user at hand that has access to the SAP Data Intelligence Cloud Modeler application as well as the Git Terminal Application (e.g., has the sap.dh.developer and sap.dh.member policies assigned)

- The vhome folder of the SAP Data Intelligence Cloud user is empty.

i. Managing Git credentials

To manage Git credentials one can use the following two credential helpers that are provided by Git out of the box:

- Cache: credentials stored in memory for short durations

- Store: credentials stored indefinitely on disk

We refer the reader to the corresponding Git documentation for a detailed exposure of Git credential helpers and in the following only highlight the relevant commands and configurations that are needed in our case.

Before configuring a Git credential helper via the Git Terminal Application, we need to set the HOME variable to the project folder (see section 1.ii) in the user workspace. To copy and paste the commands in this blog into the Git Terminal Application, please use the context menu by right-clicking into the Git Terminal Application, since keyboard shortcuts are not supported yet.

export HOME=~

You can view and inspect the project folder by using the Git terminal (assuming you are in the vhome folder).

cd ../project && ls -al

The cache credential helper

The cache credential helper can be configured by executing the following command.

git config --global credential.helper 'cache --timeout=<seconds>'

where <seconds> needs to be replaced by an integer that determines for how long (in seconds) the Git credentials, once entered during the first operation with a remote Git repository, will be cached by the Git Terminal Application.

The credentials will be cached in memory in a daemon process that is communicating via a socket located in the hidden folder /project/.git-credential-cache.
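As a concrete illustration, the following sketch wraps the command above in a small shell function that rejects anything other than a whole number of seconds. The function name use_credential_cache and the one-hour value in the usage note are assumptions made for this example; they are not part of SAP Data Intelligence or Git.

```shell
# Sketch: configure the cache credential helper with a validated timeout.
# use_credential_cache is a hypothetical helper name, not a Git command.
use_credential_cache() {
  seconds="$1"
  case "$seconds" in
    ''|*[!0-9]*)
      # Empty input or anything containing a non-digit is rejected.
      echo "timeout must be a whole number of seconds" >&2
      return 1
      ;;
  esac
  git config --global credential.helper "cache --timeout=$seconds"
}
```

After export HOME=~ has been executed, calling use_credential_cache 3600 would cache the credentials for one hour, and git config --global --get credential.helper can be used to read the configured value back.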

The store credential helper

The store credential helper can be configured by executing the following command.

git config --global credential.helper store

Please see the official Git documentation for details. The credentials will be persisted in the hidden file /project/.git-credentials.

Keep in mind that the lifetime of the project folder is bound to the lifetime of the Docker Container where the Git Terminal Application is running. If the Git Terminal Application is restarted (e.g. the Docker container is recreated) then the credentials need to be configured again.
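Because the configuration is lost together with the container, it can be handy to re-apply it at the start of a session. The following is a minimal sketch under that assumption. The function name ensure_credential_helper is made up for this example, and the store helper is used as the default only for illustration.

```shell
# Sketch: re-apply a credential helper only if none is configured yet,
# for example after the Git Terminal Application was restarted.
# ensure_credential_helper is a hypothetical helper name.
ensure_credential_helper() {
  helper="${1:-store}"
  if git config --global --get credential.helper >/dev/null 2>&1; then
    echo "credential helper already configured"
  else
    git config --global credential.helper "$helper"
    echo "credential helper set to: $helper"
  fi
}
```

The function relies on the HOME variable having been set as described above. It can be called without arguments or with a helper definition such as 'cache --timeout=3600'.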

Without the configuration of a Git credential helper, Git will ask the user to enter valid Git credentials whenever one executes a Git command that communicates with a remote Git repository (e.g. git push, git clone, …).

Depending on the configuration of the Git server, you might need to use a personal access token instead of a password. We refer to the documentation of the Git server on how to set up a personal access token for a Git user account (for example, the GitHub or GitLab documentation for public Git servers).

ii. Cloning a remote Git repository

We assume that a Git credential helper has been properly initialized. For this demo, I have set up a basic DI pipeline that sends a “Hello World!” string to a Wiretap operator and pushed the graph to a remote Git repository di_demo_git:

In the SAP Data Intelligence Cloud Modeler the design time artifact of the above git-demo-hello-world-graph is highlighted in the following image.

The graph.json can be found in the following code block:

{

"properties": {},

"description": "Git Demo Graph",

"processes": {

"python3operator1": {

"component": "com.sap.system.python3Operator",

"metadata": {

"label": "Python3 Operator",

"x": 12,

"y": 12,

"height": 80,

"width": 120,

"extensible": true,

"filesRequired": [

"script.py"

],

"generation": 1,

"config": {

"script": "api.send(\"output\", \"Hello World!\")"

},

"additionaloutports": [

{

"name": "output",

"type": "string"

}

]

}

},

"wiretap1": {

"component": "com.sap.util.wiretap",

"metadata": {

"label": "Wiretap",

"x": 181,

"y": 12,

"height": 80,

"width": 120,

"generation": 1,

"ui": "dynpath",

"config": {}

}

}

},

"groups": [],

"connections": [

{

"metadata": {

"points": "136,52 176,52"

},

"src": {

"port": "output",

"process": "python3operator1"

},

"tgt": {

"port": "in",

"process": "wiretap1"

}

}

],

"inports": {},

"outports": {},

"metadata": {

"generation": 1

}

}

The folder structure inside the remote repository is set up in such a way that the graph.json can be found by SAP Data Intelligence Cloud as soon as we clone the content of the repository into a user workspace (see highlights in red in the above screenshot of the remote Git repository). We will have a closer look at the folder structure in a user workspace in a later chapter (see sections 3.i and 3.iii).

vflow/graphs/git-demo-hello-world-graph/graph.json

Now we can clone the content of the remote Git repository in the vhome folder with the following command.

git clone https://<url>/<user>/di_demo_git .

Watch out for the dot at the end of the clone command. It tells Git to put the artifacts of the remote Git repository directly into the vhome folder of the user workspace instead of first creating a di_demo_git folder inside vhome:
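The effect of the trailing dot can be tried out safely without a remote server. The following sketch uses a throwaway directory and a local repository as a stand-in for the remote di_demo_git repository. All paths, the README file and the commit identity are assumptions made for this example.

```shell
# Sketch: compare "git clone <source>" with "git clone <source> ." in scratch folders.
scratch=$(mktemp -d)

# Local stand-in for the remote repository (hypothetical content).
git init -q "$scratch/di_demo_git"
echo 'demo' > "$scratch/di_demo_git/README"
git -C "$scratch/di_demo_git" add README
git -C "$scratch/di_demo_git" -c user.name=Demo -c user.email=demo@example.com \
  commit -q -m "add README"

mkdir "$scratch/without_dot" "$scratch/with_dot"

# Without the dot, Git creates a di_demo_git subfolder in the current directory.
(cd "$scratch/without_dot" && git clone -q "$scratch/di_demo_git")

# With the dot, the content lands directly in the (empty) current directory.
(cd "$scratch/with_dot" && git clone -q "$scratch/di_demo_git" .)

ls -A "$scratch/without_dot" "$scratch/with_dot"
```

Note that the variant with the dot only works if the target directory is empty, which is why the vhome folder is assumed to be empty in this chapter.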

iii. Initializing a local Git repository and pushing it to a remote Git repository

Let us suppose, we have created some artifact via the SAP Data Intelligence Modeler in a user workspace and want to push it to a new remote Git repository with name di_demo_git.

We assume that a Git credential helper has been properly initialized. For the sake of simplicity, we take the git-demo-hello-world-graph graph from the previous section and assume in addition that there are no other artifacts in the user workspace.

First we initialize the local Git repository, set the remote link and add user.email and user.name to the Git config via the Git Terminal Application.

git init -b main

git config user.email <email>

git config user.name <name>

git remote add origin https://<url>/di_demo_git.git

Next, we commit the demo graph into the local branch main and push the changes to the remote branch main.

git add vflow/graphs/git-demo-hello-world-graph/graph.json

git commit -m "add demo graph"

git push -u origin main

3. Advanced Scenarios

In the Getting Started chapter we assumed the user workspace to be empty for our Git commands to work. Usually, the user workspace is not completely empty. Another tacit assumption that we made above was the functional integrity of the SAP Data Intelligence Cloud artifacts in a Git repository, which might not hold either. In the following sections we drop those assumptions and provide some material and guidance on how certain scenarios could be handled with the Git Terminal Application. This chapter is just a summary of ideas and concepts regarding Git usage in SAP Data Intelligence Cloud. We will not cover all Git capabilities that might come in handy in certain circumstances, and we assume the reader to be familiar with the Git concepts used in this chapter.

i. Recap of vflow and solution folder structure

The following screenshot summarizes the vflow and solution folder structure from a design time artifacts point of view. The solution folder structure contains a manifest.json file at the root of the structure that contains additional metadata. The vflow folder structure is used in SAP Data Intelligence Cloud user workspaces to structure the different SAP Data Intelligence Cloud artifacts and the structure is important for SAP Data Intelligence Cloud to properly find and list the artifacts in the SAP Data Intelligence Cloud Modeler Application. We refer the reader to the blog about Transportation and CI/CD with SAP Data Intelligence of my colleague Thorsten Hapke for more information on solution handling in the context of CI/CD.

We will shed more light on the structure of SAP Data Intelligence solutions in section 3.iii.

ii. Ignoring files with Git

There are several ways to tell Git not to track certain files in a Git work tree. We briefly summarize them in the following table, with the artifacts listed in order of precedence.

| Artifact and Location | Stored in remote repository | Description |
| --- | --- | --- |
| <some directory>/.gitignore | Yes | Ignore files in the directory <some directory> of the local git repository and all subdirectories of that directory that do not contain a separate .gitignore file |
| .git/info/exclude | No | Define settings and ignore rules for the local repository. This file is not pushed to the remote repository and is for local settings only. |
| $HOME/.config/git/ignore | No | Define ignore settings that shall apply across all local repositories that are created in the user workspace |

We refer the reader to the official Git documentation for gitignore files for more details on the syntax and capabilities of the functionality. We only highlight two use cases in our context.

Ignoring only specific files

Let’s again look at the remote Git repository di_demo_git from the last section and start with the following non-empty user workspace that contains artifacts that are created automatically when a user logs in to an SAP Data Intelligence Cloud tenant and opens the SAP Data Intelligence Modeler application.

Since these artifacts are created in every user workspace during login, we can add them to a .gitignore file and add that file to the remote Git repository. First, we initialize a local Git repository and create the .gitignore file with the corresponding content.

git init -b main

echo 'launchpad/**' >> .gitignore

echo 'vflow/general/.user/**' >> .gitignore

When adding all content of the user workspace to the Git staging area we see that only the .gitignore file as well as the content of the vflow/general/ui subfolder is tracked by Git.

Please be aware that the vflow/general/ui subfolder contains artifacts that contain information about graph and operator categories. Therefore, in general, it is not recommended to exclude this directory from Git repositories that contain graphs and operators. We will have a closer look on these category artifacts when we talk about SAP Data Intelligence solutions in section 3.iii.
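The effect of the two ignore rules can be reproduced in a throwaway directory. The following sketch mimics the automatically created artifacts with placeholder files. The file names tiles.json, layout.json and categories.json are invented for this example and do not reflect the real content of a user workspace.

```shell
# Sketch: verify which files remain tracked with the two .gitignore rules.
ws=$(mktemp -d)
cd "$ws"

# Placeholder files standing in for the auto-created artifacts (hypothetical names).
mkdir -p launchpad vflow/general/.user/settings vflow/general/ui
echo '{}' > launchpad/tiles.json
echo '{}' > vflow/general/.user/settings/layout.json
echo '{}' > vflow/general/ui/categories.json

git init -q
echo 'launchpad/**' >> .gitignore
echo 'vflow/general/.user/**' >> .gitignore

# Stage everything, then list what Git actually tracks.
git add -A
tracked=$(git ls-files)
echo "$tracked"
```

In this scratch setup only the .gitignore file and the placeholder file below vflow/general/ui end up in the staging area.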

Now we add the remote origin and checkout the main branch of the remote repository.

git remote add origin <url>/<user>/di_demo_git

git fetch

git checkout main
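The reason for using git remote add, git fetch and git checkout instead of git clone is that cloning into the current directory requires that directory to be empty. The following sketch replays the sequence in a throwaway directory, with a local repository standing in for the remote di_demo_git repository. All paths, the placeholder files and the commit identity are assumptions made for this example.

```shell
# Sketch: check out a remote branch into a non-empty workspace.
scratch=$(mktemp -d)

# Local stand-in for the remote repository with a branch named main.
git init -q "$scratch/remote"
git -C "$scratch/remote" symbolic-ref HEAD refs/heads/main
mkdir -p "$scratch/remote/vflow/graphs/git-demo-hello-world-graph"
echo '{}' > "$scratch/remote/vflow/graphs/git-demo-hello-world-graph/graph.json"
git -C "$scratch/remote" add -A
git -C "$scratch/remote" -c user.name=Demo -c user.email=demo@example.com \
  commit -q -m "add demo graph"

# Non-empty workspace; "git clone <source> ." would refuse to run here.
mkdir -p "$scratch/vhome/launchpad"
echo '{}' > "$scratch/vhome/launchpad/tiles.json"
cd "$scratch/vhome"

git init -q
git remote add origin "$scratch/remote"
git fetch -q
# Creates the local branch main from origin/main and checks it out.
git checkout -q main

ls vflow/graphs/git-demo-hello-world-graph
```

The existing placeholder file in launchpad is left untouched because it does not collide with any file of the checked-out branch.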

Ignoring all files except specific ones

Assume that we have a non-empty user workspace with graphs, operators and other artifacts. Assume in addition that we want to check out our di_demo_git remote Git repository into such a user workspace to do some tests (e.g. replacing the Python operator in the git-demo-hello-world-graph with a new custom one that we currently keep in the user workspace). In such a case it is possible to configure a local Git repository in the user workspace in such a way that all artifacts are ignored by Git except the ones that we want to be tracked by Git. Since, in general, one does not want to have such a configuration stored in the remote Git repository as well, one can use the .git/info/exclude file of the local Git repository.

The following commands achieve what we want:

git init -b main

echo '*' >> .git/info/exclude

echo '!*/' >> .git/info/exclude

echo '!vflow/graphs/git-demo-hello-world-graph/**' >> .git/info/exclude

git remote add origin <url>/<user>/di_demo_git

git fetch

git checkout main

By suitable manipulations of the fourth command one can let Git track additional files (e.g. the custom operator that we wanted to test) of the user workspace.

In case of large remote Git repositories with many different artifacts this approach might not be practicable.
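To check which rule is responsible for ignoring a given path, git check-ignore can be used. The following sketch applies the three exclude rules from above in a throwaway repository. The second graph folder other-graph is a made-up example of an artifact that should stay untracked.

```shell
# Sketch: inspect the allow-list rules in .git/info/exclude with git check-ignore.
ws=$(mktemp -d)
cd "$ws"
mkdir -p vflow/graphs/git-demo-hello-world-graph vflow/graphs/other-graph
echo '{}' > vflow/graphs/git-demo-hello-world-graph/graph.json
echo '{}' > vflow/graphs/other-graph/graph.json

git init -q
mkdir -p .git/info
echo '*' >> .git/info/exclude
echo '!*/' >> .git/info/exclude
echo '!vflow/graphs/git-demo-hello-world-graph/**' >> .git/info/exclude

# -v prints the file, line number and pattern that causes a path to be ignored.
git check-ignore -v vflow/graphs/other-graph/graph.json

# For a path that is not ignored, check-ignore exits with a non-zero status.
git check-ignore -q vflow/graphs/git-demo-hello-world-graph/graph.json \
  || echo "demo graph is not ignored"

git add -A
tracked=$(git ls-files)
echo "$tracked"
```

To track an additional artifact, a further negated pattern for its path would be appended to .git/info/exclude in the same way.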

iii. Working with SAP Data Intelligence Solutions

To complement the above sections, we now recap the structure and content of SAP Data Intelligence solutions and briefly highlight how to leverage the Git Terminal Application in conjunction with such solutions. We will not explain every artifact that can be included in such a solution but rather highlight the most frequently used artifacts in the context of SAP Data Intelligence Cloud pipeline development. Hence this section should not be considered comprehensive regarding the development of SAP Data Intelligence solutions.

As outlined in section 3.i, an SAP Data Intelligence solution consists of a manifest.json file that contains the metadata, like the solution name and version, and a content directory with a vflow folder structure in it:

Additional vflow artifacts like vtypes and templates will not be explained in this section. We will now explain the main artifacts of an SAP Data Intelligence solution like graphs, Docker files and custom operators with a sample solution. Since the vhome directory of a user workspace only contains a vflow folder structure to store the artifacts, a possible remote Git repository that holds a solution should have a manifest.json file as well as a vflow directory at its root level. Let’s assume we have the following structure of an SAP Data Intelligence solution available in a remote Git repository di_demo_solution:

We have marked such paths, directories and files in bold whose name cannot be chosen freely but is strictly defined either by the vflow structure (e.g., dockerfiles, general/ui, …) or by programming languages (e.g., __init__.py).

Remark regarding Git submodules:

We are not going to judge Git submodules and evaluate their up- and downsides but instead will simply use them to highlight how to use the Git Terminal Application in conjunction with SAP Data Intelligence solutions. We refer the reader to the official documentation of Git submodules for more comprehensive guidance on how to use them. We will only provide the commands that are needed to follow this blog. To achieve the above Git setup, that is, including the remote docker_python_module Git repository as a Git submodule inside the di_demo_solution remote Git repository, one needs to check out the remote Git repository di_demo_solution locally and then execute the following commands.

git submodule add <url>/<user>/docker_python_module.git vflow/dockerfiles/my/demo/dockerfile/docker_python_module

git add .

git commit -m "add docker_python_module submodule"

git push

We now explain the three main building blocks (Docker files, graphs, custom operators) of the above example.

Docker files

Docker files are used to add additional resources to the Docker image that is used as a basis for the runtime container of pipelines and operators.

The dockerfiles directory of an SAP Data Intelligence Solution contains the Docker file artifacts. Only the dockerfiles root directory has a fixed name. Apart from that Docker files can be organized in arbitrary folder structures (e.g., my/demo/dockerfile in the above example).

In the SAP Data Intelligence context such Docker images are used to provide the proper runtime environments for operators and pipelines that run, for instance, custom code or execute compiled binaries. Selection of Docker files and, hence, the resulting Docker images for certain pipeline operators is achieved via tag annotations in pipelines and operators (see the corresponding SAP Help documentation). The following picture highlights this in case of the above sample SAP Data Intelligence Solution.

Custom Categories for Pipelines and Operators

The general/ui directory contains .json files that are used to define custom categories for pipelines and operators to group these artifacts in the SAP Data Intelligence Modeler user interface. The following picture shows the JSON structure of the my.demo.solution.json file and how the category grouping is reflected on the user interface.

In case the general/ui directory contains more than one .json file, they will be merged.

SAP Data Intelligence Graphs

The graphs directory of an SAP Data Intelligence Solution contains the graph design time artifacts. Only the graphs root directory has a fixed name. Apart from that, graphs can be organized in arbitrary folder structures (e.g., my/demo/pipeline in the above example).

The directory of a graph definition can also contain an additional launchConfigs folder that contains default configurations for substitution parameters that are used in operator configurations in the graph. We did not include such a folder in the above example and will not dive deeper into the topic of launch configurations. We refer the reader to the SAP Help documentation on graph creation. For the sake of completeness, the following image highlights what such a launchConfigs directory could look like in the case of our example.

Custom Operators based on subengine base operators

The subengines/com/sap/python36/operators directory contains the artifacts of custom python operators as well as possible additional custom python modules that are used in custom python operators. Only the above path has a fixed name. Apart from that, custom python operators and modules can be organized in arbitrary folder structures (e.g., my/demo/operator and my/demo/python_module in the above example). The following image illustrates such a custom operator setup.

The python_module is recognized by the Python runtime of the corresponding Docker container since the PYTHONPATH was extended with the subengines/com/sap directory in the past. Regarding when to use this approach or the inclusion of Python modules via custom Docker images, one can stick to the following rough-and-ready rule. If the Python module is only used by a specific operator or by a set of operators, then one can include the module directly with the custom operator design time artifacts. The idea of extending the PYTHONPATH with the above directory is to give pipeline and operator developers the possibility to structure the Python code that is meant to be used in custom Python operators in convenient packages to improve maintainability. If the module is of a more general nature, can be considered independent of SAP Data Intelligence artifacts, and is maybe also developed independently of SAP Data Intelligence pipelines in a dedicated Git repository, then one can ingest the Python module via a custom Docker image.

Building Solutions with vctl using the Git Terminal Application

With the Git Terminal Application it is now possible to also bundle the artifacts in the user workspace as a SAP Data Intelligence solution following the approach outlined at the end of the Blog of my colleague Christian Sengstock about Git Workflow and CI/CD Process. For the latter you need vctl installed in the container that runs the Git Terminal Application. To achieve this, import the vctl command line client file into the user workspace and then execute the following commands in the Git Terminal Application:

export PATH="~:$PATH"

mv vctl ../project

chmod +x ../project/vctl

You can then check if vctl is working by executing vctl in the command line. The result should look similar to the one in the screenshot below.

One is then able to bundle a solution out of the artifacts that are currently present in the user workspace by following the steps outlined at the end of the blog of my colleague christian.sengstock on SAP Data Intelligence: Git Workflow and CI/CD Process.

iv. Using several Git repositories

By renaming the Git directory .git (e.g., to first.git) and using the capabilities of ignoring files in Git (see section 3.ii), it is also possible to set up and manage multiple Git repositories (e.g., adding a second.git repository as well) at the same time in one SAP Data Intelligence Cloud user workspace, with all of them sharing the vhome directory as their worktree. To switch between the different Git repositories, one would need to use the variables GIT_WORK_TREE and GIT_DIR, and one would need to control manually (with additional .gitignore configuration) which artifacts of the joint worktree directory are tracked by which Git repository. We do not cover this rather complicated and error-prone approach in this blog and refer the reader to the official Git documentation on environment variables used by Git and on Git worktrees. We do not recommend this approach unless you encounter a justified exceptional case.

4. Connecting an on-premise corporate Git Server to SAP Data Intelligence Cloud

i. Connect SAP Business Technology Subaccount to SAP Cloud Connector

We assume that a SAP Cloud Connector is installed in the on-premise landscape and that there exists a SAP Business Technology Platform Subaccount with a SAP Data Intelligence Cloud instance. The following Cloud Connector configurations need to be performed with a user that has the rights to add SAP BTP Subaccounts to SAP Cloud Connector. For more details on SAP Cloud Connector, we refer the reader to the official SAP Help Documentation.

Log in to SAP Cloud Connector and add a new subaccount, namely the one in which the SAP Data Intelligence Cloud instance is deployed that we want to connect the on-premise corporate Git server to:

The SAP Cloud Connector Location Id is typically used when you set up multiple SAP Cloud Connectors for a single BTP subaccount. For this blog, we assume that the SAP Cloud Connector is configured with a Location Id. The Cloud Connector Location Id is used later on when connecting SAP Data Intelligence Cloud to the SAP Cloud Connector. Depending on the configuration of the identity provider, the string in the Password field might consist of several parts apart from the actual user password.

ii. Connect on-premise corporate Git Server to SAP Cloud Connector

Next, we create a Cloud To On-Premise mapping to the on-premise corporate Git server from the SAP Cloud Connector. To immediately perform a connectivity check against the internal host during the creation process, one can optionally mark the corresponding checkbox on the summary popup of the creation process. As recommended by the SAP Cloud Connector, we use “github.virtual” as the virtual hostname. This hostname is used later when interacting with the Git server from within the Git Terminal Application.

The check result should be “Reachable”. Next, we add the resources that shall be accessible via the mapping to the on-premise corporate Git server that we just created. In the image below we allowed access to all paths on the on-premise corporate Git server.

Switching to the SAP Business Technology Platform Subaccount that we used in the last section we should see the following status in the Cloud Connectors section:

iii. Enable communication between SAP Data Intelligence Cloud and SAP Cloud Connector

We now switch to a SAP Data Intelligence Cloud tenant that is deployed in the SAP Business Technology Platform Subaccount that we used above. For the following steps that are performed in SAP Data Intelligence Cloud, a user with write access to the SAP Data Intelligence Connection Management as well as access to the SAP Data Intelligence Modeler and Git Terminal Application is needed. We create a new connection via the SAP Data Intelligence Cloud Connection Management application. For the example in the screenshot and in the following steps, we use “Corporate_Git” for the connection id of the new connection.

If an SAP Data Intelligence Cloud user who has access to the SAP Data Intelligence Cloud Modeler and the Git Terminal Application in it wants to connect to the on-premise corporate Git server, the following commands need to be executed in the Git Terminal Application. The script interacts with the SAP Data Intelligence Cloud Connection Management and retrieves the necessary configuration parameters. Finally, it configures Git to use the SAP Cloud Connector for all following commands.

export PYTHONHOME=/usr/local

export HOME=~

#CONNECTION_ID refers to the Id of the connection that we just created in the SAP Data Intelligence Cloud connection management

export CONNECTION_ID=Corporate_Git

#add the proxy-authorization header as http.extraHeader in the global git config file

proxy_authorization=$(curl -s http://vsystem-internal:8796/app/datahub-app-connection/connectionsFull/$CONNECTION_ID | python -c 'import json,sys;obj=json.load(sys.stdin);token=obj["gateway"]["authentication"];print(f"proxy-authorization: Bearer {token}")')

git config --global --add http.extraHeader "$proxy_authorization"

#add the SAP-Connectivity-SCC-Location_ID header as http.extraHeader in the global git config file

proxy_location=$(curl -s http://vsystem-internal:8796/app/datahub-app-connection/connectionsFull/$CONNECTION_ID | python -c 'import json,sys;obj=json.load(sys.stdin);location=obj["gateway"]["locationId"];print(f"SAP-Connectivity-SCC-Location_ID: {location}")')

git config --global --add http.extraHeader "$proxy_location"

#set the http.proxy in the global git config file

proxy=$(curl -s http://vsystem-internal:8796/app/datahub-app-connection/connectionsFull/$CONNECTION_ID | python -c 'import json,sys;obj=json.load(sys.stdin);host=obj["gateway"]["host"];port=obj["gateway"]["port"];print(f"http://{host}:{port}")')

git config --global --add http.proxy "$proxy"

Hint: After a while this configuration script may need to be executed again, as git configuration parameters may change. For example, the authorization token is valid for only one hour.
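Since the script uses the --add flag, running it again after the token has expired appends further http.extraHeader entries next to the stale ones. The following sketch shows one possible way to make the configuration step repeatable by clearing the old entries first. The function name apply_scc_git_config is hypothetical. Its three arguments are expected to be the values that the script above stores in the variables proxy_authorization, proxy_location and proxy.

```shell
# Sketch: repeatable variant of the three "git config --global --add" calls.
# apply_scc_git_config is a hypothetical helper name.
# Caution: --unset-all also removes any other global http.extraHeader entries.
apply_scc_git_config() {
  auth_header="$1"
  location_header="$2"
  proxy_url="$3"

  # Remove header entries from earlier runs; ignore the error if none exist.
  git config --global --unset-all http.extraHeader 2>/dev/null || true

  git config --global --add http.extraHeader "$auth_header"
  git config --global --add http.extraHeader "$location_header"

  # Replace (rather than add) the proxy so that only one value remains.
  git config --global --replace-all http.proxy "$proxy_url"
}
```

After the three variables have been filled as in the script above, the function would be called as apply_scc_git_config "$proxy_authorization" "$proxy_location" "$proxy".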

The execution should look like the following with no errors occurring.

Of course, it is also possible to save the above bash script in a file (e.g., script.sh) and execute it via the source command in the Git Terminal Application (e.g., source script.sh).

If we check the global Git configuration which is located in /project/.gitconfig it should look similar to the following:

In case of any errors regarding the http.extraHeader and http.proxy properties in the config file you can always remove the properties with the following commands:

git config --global --unset-all http.extraHeader

git config --global --unset-all http.proxy

A more detailed explanation of the http section in the Git config file as well as the --unset and --unset-all flags can be found in the official Git documentation.
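Because the authorization token expires after one hour, the removal commands above and the configuration commands from the script are typically needed together. One way to combine them is sketched below, under the assumption that the three values have already been computed as in the script. The function name refresh_git_proxy_config is hypothetical.

```shell
# Hypothetical helper: replace the proxy settings instead of appending to them.
# Arguments: 1 = proxy-authorization header, 2 = location ID header, 3 = proxy URL
refresh_git_proxy_config() {
  # --unset-all exits non-zero when the key is absent; ignore that on a first run
  git config --global --unset-all http.extraHeader || true
  git config --global --unset-all http.proxy || true
  git config --global --add http.extraHeader "$1"
  git config --global --add http.extraHeader "$2"
  git config --global http.proxy "$3"
}

# Usage after the three variables from the script above have been (re)computed:
#   refresh_git_proxy_config "$proxy_authorization" "$proxy_location" "$proxy"
```

Note that this sketch removes all http.extraHeader entries from the global configuration, including any that were added for other purposes.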

Assuming that the Git credential helper was configured properly, the user is now able to execute Git commands against the on-premise corporate Git server. Cloning a remote Git repository would work with the following command:

Regarding connectivity configurations on SAP Business Technology Platform and in SAP Cloud Connector, we refer the reader to the corresponding SAP Help documentation for SAP Business Technology Platform (see also the section about the Connectivity Proxy for Kubernetes) and for SAP Cloud Connector (see also the section about inbound connectivity).

Assuming that the di_demo_git remote Git repository is set up on an on-premise corporate Git server that is not exposed to the internet, a successful clone of this remote repository with the Git Terminal application would look like the following.

5. Summary

In this blog you learned about the new Git integration capabilities in SAP Data Intelligence Cloud:

- Technical background and security aspects of the Git Terminal application

- Required steps to establish a basic setup for using Git in SAP Data Intelligence Cloud

- Advanced capabilities of Git (e.g., .gitignore, working with SAP Data Intelligence solutions)

- Excursus on the structure of SAP Data Intelligence solutions

- Connecting an on-premise corporate Git server with SAP Data Intelligence Cloud via SAP Cloud Connector

Finally, I would like to thank my colleagues Mahesh Shivanna, Reinhold Kautzleben and Christian Schuerings for their great support and guidance as well as the fruitful discussions during the creation of the content for this blog.

Feel free to

- share your thoughts on the content of this blog,

- ask questions about it in a comment below,

- ask questions about SAP Data Intelligence, or

- follow my profile danielkraemer or the SAP Data Intelligence Cloud topic page if you are interested in similar topics/blogs.

Appendix: Blog History

- Initial Release: 25th of October 2022

- 3rd of November 2022: Add a more precise step-by-step guide to the Common Problem: The Git Terminal Application is not visible subsection of section 1.iii
