How SAP’s Generative AI Hub facilitates embedded, ...

This blog post is part of a series that dives into various aspects of SAP’s approach to generative AI, and its technical underpinnings. Read the first blog post of the series.

Introduction

In our previous blog post, we discussed how SAP's comprehensive architecture components underpin business AI. In this blog post, we delve deeper into the heart of this architecture – the generative AI hub.

Why a generative AI hub

The large language model (LLM) landscape has grown significantly over the past year, with the introduction of multiple models, each with its own benefits and limitations. Which model fits best often depends on the specific use case, with factors such as accuracy, latency, and operational environment playing pivotal roles.

At SAP, we are fortunate to have a wealth of use cases for LLMs. For each, an appropriate model must be selected systematically and with tool support from a wide range of offerings by SAP’s AI partners, such as Microsoft Azure, Google Cloud Platform (GCP), Amazon Web Services (AWS), Aleph Alpha, Anthropic, Cohere, and others, or from open source. Moreover, the application runtime must be versatile enough to support a variety of models tailored to diverse needs. While we appreciate the innovation brought forward by LLM providers, we deliberately avoid over-reliance on a single provider. This lets us pivot to other LLMs that better serve our customers and use cases, and react to changes in the market over time.

Our emerging use cases share a common set of requirements. They must establish compliance and foster trust in the application of LLMs, and they must meet all commercial, metering, and billing needs. Our primary emphasis, however, is on streamlining and harmonizing the incorporation of these models into our business applications. By addressing these concerns in a standardized way and following a common programming model, we aim to speed up innovation within our application development teams.

Grounding, which involves providing LLMs with specific and relevant information beyond their inherent knowledge, is crucial for ensuring the accuracy, quality, and relevance of the generated output. Within SAP, our extensive data assets are integral to enriching LLM use cases with business context seamlessly and reliably, making them more effective.

The transformative potential of generative AI (GenAI) lies not only in the capabilities of new models but also in their accessibility to both developers and non-developers. When the excitement around ChatGPT started a year ago, we realized that SAP engineers needed a simple yet enterprise-compliant tool to unlock the potential of LLMs. This led to the creation of the internal SAP AI playground system, a simple service for exploring different models. The result was an enthusiastic response and a flood of innovative ideas for incorporating LLMs into SAP applications. We believe that providing such central, easy access to LLMs is a key component of fostering innovation within any organization.

With these considerations in mind, SAP has decided to bring together trusted LLM access, business grounding for LLMs, and LLM exploration in a single generative AI hub. This hub is provided as an integral part of SAP AI Core and SAP AI Launchpad, the central elements of our AI foundation on SAP Business Technology Platform (SAP BTP). This strategic move aims to make our approach to leveraging LLMs more efficient and effective. While the generative AI hub addresses the requirements of SAP’s own business applications, we are aware that these requirements are shared across the wider SAP ecosystem, and we have therefore decided to make the generative AI hub available to our partners and customers as well.

How we realize the generative AI hub

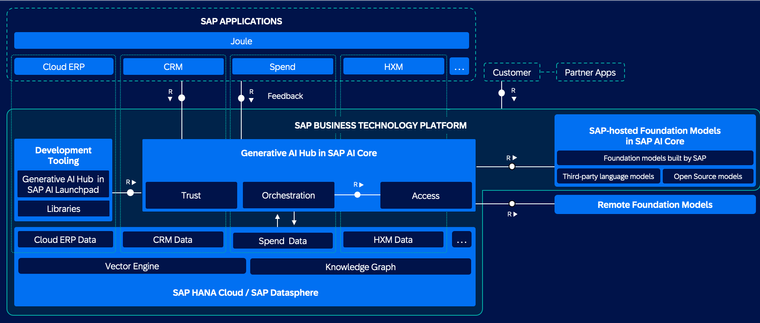

The architecture of the generative AI hub looks as follows:

Figure 1: Architecture of generative AI hub

The primary purpose of the generative AI hub is to combine the best of LLMs with the best of SAP processes and data. To achieve this, it must first be easy to integrate LLMs from a wide range of provider types into SAP’s business applications.

Let us go step by step through the components of the generative AI hub.

Access

The generative AI hub builds on SAP AI Core, an SAP BTP service used by all SAP applications and services. SAP AI Core not only provides capabilities to run AI workloads but also efficiently proxies access to models that providers operate as a service. This enables us to keep access and lifecycle management consistent, and to reuse much of SAP AI Core’s security and metering implementation.

For models that we operate ourselves, we rely on SAP AI Core’s features to run AI models cost-effectively at large scale. To achieve this, we employ a Kubernetes-based infrastructure with AI-specific features such as GPU support.

What do applications need to do to integrate an LLM via the generative AI hub? Conceptually, there are three major steps.

In the first step, users must acquire a service instance of SAP AI Core using the SAP BTP Cockpit.
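Once the instance exists, a service key for it supplies the credentials an application needs. The sketch below assumes the key is JSON with `clientid`, `clientsecret`, `url` (the authentication server) and `serviceurls.AI_API_URL` fields, and that an OAuth 2.0 client-credentials token is obtained from `<url>/oauth/token`; verify these field names against your own service key. It only builds the token request, so it runs without network access.

```python
import base64
import json
import urllib.parse
import urllib.request
from dataclasses import dataclass


@dataclass(frozen=True)
class AICoreCredentials:
    """Subset of an SAP AI Core service key (field names are assumptions)."""
    client_id: str
    client_secret: str
    auth_url: str  # authentication server, assumed to be the key's "url" field
    api_url: str   # AI API base URL, assumed under serviceurls.AI_API_URL


def parse_service_key(raw: str) -> AICoreCredentials:
    """Extract the fields needed to authenticate from a JSON service key."""
    key = json.loads(raw)
    return AICoreCredentials(
        client_id=key["clientid"],
        client_secret=key["clientsecret"],
        auth_url=key["url"].rstrip("/"),
        api_url=key["serviceurls"]["AI_API_URL"].rstrip("/"),
    )


def build_token_request(creds: AICoreCredentials) -> urllib.request.Request:
    """Build (but do not send) an OAuth 2.0 client-credentials token request."""
    basic = base64.b64encode(
        f"{creds.client_id}:{creds.client_secret}".encode()
    ).decode()
    body = urllib.parse.urlencode({"grant_type": "client_credentials"}).encode()
    return urllib.request.Request(
        url=f"{creds.auth_url}/oauth/token",
        data=body,
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen` and reading `access_token` from the JSON response would yield the bearer token used in the later steps.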

In the second step, a deployment is created, either programmatically or via SAP AI Launchpad, to instantiate a use-case-specific LLM configuration. The configuration references a provider-specific executable; for example, all models offered through the Azure OpenAI service are bundled into a single executable. Further parameters, such as model name and model version, can be configured as well. For each deployment, SAP AI Core provides a unique URL that can be used to access the LLM.
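The programmatic route can be sketched against the AI API of SAP AI Core. The sketch assumes the `/v2/lm/configurations` and `/v2/lm/deployments` endpoints, the `AI-Resource-Group` header, and the illustrative IDs `azure-openai` (executable) and `foundation-models` (scenario); treat all of these as assumptions to check against the SAP AI Core documentation. As before, it only builds the requests.

```python
import json
import urllib.request


def configuration_payload(name: str, model_name: str,
                          model_version: str = "latest") -> dict:
    """Body for creating a configuration; executable and scenario IDs are assumptions."""
    return {
        "name": name,
        "executableId": "azure-openai",     # assumed provider-specific executable
        "scenarioId": "foundation-models",  # assumed scenario for hub-managed LLMs
        "parameterBindings": [
            {"key": "modelName", "value": model_name},
            {"key": "modelVersion", "value": model_version},
        ],
        "inputArtifactBindings": [],
    }


def deployment_payload(configuration_id: str) -> dict:
    """Body for creating a deployment from an existing configuration."""
    return {"configurationId": configuration_id}


def ai_api_post(api_url: str, path: str, token: str,
                resource_group: str, body: dict) -> urllib.request.Request:
    """Build (but do not send) a POST to the AI API, e.g. path="configurations"."""
    return urllib.request.Request(
        url=f"{api_url.rstrip('/')}/v2/lm/{path}",
        data=json.dumps(body).encode(),
        headers={
            "Authorization": f"Bearer {token}",
            "AI-Resource-Group": resource_group,
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

The configuration request would return a configuration ID that goes into the deployment request; once the deployment is running, its details would carry the deployment URL used in the final step.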

In the final step, the deployment URL is embedded within an application. This URL can be used as if interacting directly with the native LLM provider API, for example through a provider-specific SDK. We designed it this way for a good reason: various LLMs have unique features, and we want our use case providers to be able to make use of them. However, to ease migration from one provider to another, we also plan to provide an abstract API that works uniformly across all providers, at the cost of provider-specific features it may not expose.
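To illustrate the final step, the sketch below builds an Azure OpenAI-style chat-completions request against a deployment URL, followed by a hypothetical provider-neutral adapter layer of the kind an abstract API could offer. The `/chat/completions` path, the `api-version` value, and all adapter names are assumptions for illustration, not the generative AI hub's API.

```python
import json
import urllib.request
from typing import Protocol


def native_chat_request(deployment_url: str, token: str, resource_group: str,
                        messages: list[dict],
                        api_version: str = "2023-05-15") -> urllib.request.Request:
    """Build (but do not send) a provider-native chat request to a deployment URL."""
    return urllib.request.Request(
        url=f"{deployment_url.rstrip('/')}/chat/completions?api-version={api_version}",
        data=json.dumps({"messages": messages, "max_tokens": 256}).encode(),
        headers={
            "Authorization": f"Bearer {token}",
            "AI-Resource-Group": resource_group,
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hypothetical provider-neutral layer: one call signature, per-provider payloads.
class ChatAdapter(Protocol):
    def to_payload(self, system: str, user: str) -> dict: ...


class OpenAIStyleAdapter:
    def to_payload(self, system: str, user: str) -> dict:
        # OpenAI-style APIs carry the system prompt as a message with role "system".
        return {"messages": [{"role": "system", "content": system},
                             {"role": "user", "content": user}]}


class AnthropicStyleAdapter:
    def to_payload(self, system: str, user: str) -> dict:
        # Anthropic's Messages API takes the system prompt as a top-level
        # field and requires max_tokens.
        return {"system": system, "max_tokens": 256,
                "messages": [{"role": "user", "content": user}]}


def complete_payload(adapter: ChatAdapter, system: str, user: str) -> dict:
    """Callers use one signature regardless of the provider behind the adapter."""
    return adapter.to_payload(system, user)
```

The adapter layer makes the trade-off visible: callers get one signature, but anything only one provider's payload can express is lost unless the interface grows to cover it.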

These steps are described in detail in this dedicated tutorial.

Trust

At the service level, the generative AI hub meets the highest industry standards by integrating SAP BTP's security functions, including Identity and Access Management, multi-tenancy, audit log service, TLS management, asset management, and CAM. Like all other SAP products, it follows a strict secure development and operations lifecycle, comprising, among others, threat modeling, static and dynamic code scans, open-source risk assessment, penetration testing, and periodic scans of cloud infrastructure. Operational security best practices are enforced, including access control, audit logging, multi-tenancy, and network layer security. These security procedures and their execution are reviewed by external auditors in ISO 27001, SOC 2, C5, and NIST CSF audits.

In addition, the generative AI hub addresses the need for trust capabilities specific to GenAI use cases, primarily content moderation. The hub will check prompts and LLM responses for policy violations, and we aim to soon support optional de-identification and re-identification of personal data.
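De-identification and re-identification can be pictured as masking personal data before a prompt leaves the application and restoring it in the response. The minimal sketch below handles e-mail addresses only, using a simplified regular expression and numbered placeholders; it illustrates the idea under that assumption and is not the hub's implementation. Content moderation is not shown.

```python
import re

# Simplified e-mail pattern for illustration; real PII detection needs far more.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def deidentify(text: str) -> tuple[str, dict[str, str]]:
    """Replace each distinct e-mail address with a numbered placeholder."""
    mapping: dict[str, str] = {}   # placeholder -> original value
    reverse: dict[str, str] = {}   # original value -> placeholder

    def substitute(match: re.Match) -> str:
        value = match.group(0)
        if value not in reverse:
            placeholder = f"<EMAIL_{len(reverse) + 1}>"
            reverse[value] = placeholder
            mapping[placeholder] = value
        return reverse[value]

    return EMAIL.sub(substitute, text), mapping


def reidentify(text: str, mapping: dict[str, str]) -> str:
    """Restore original values in text returned by the LLM."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text
```

Because the same address always maps to the same placeholder, the LLM can still refer to it consistently, while the mapping itself never leaves the application.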

All our GenAI use cases need to support consumption-based pricing in line with our commercial model for AI. The generative AI hub plays a pivotal role in reliable usage metering by automatically reporting and aggregating consumed tokens per tenant and relevant business context.
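Token-based metering boils down to aggregating usage records by tenant and business context. The sketch below assumes each LLM call yields prompt and completion token counts (OpenAI-style chat responses report these in a `usage` object); the `UsageRecord` type and its context labels are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    """One LLM call; field names are hypothetical."""
    tenant: str
    business_context: str  # e.g. the use case that issued the call
    prompt_tokens: int
    completion_tokens: int


def aggregate_usage(records: list[UsageRecord]) -> dict[tuple[str, str], dict[str, int]]:
    """Sum token consumption per (tenant, business context) pair."""
    totals: dict[tuple[str, str], dict[str, int]] = defaultdict(
        lambda: {"prompt": 0, "completion": 0, "total": 0}
    )
    for r in records:
        bucket = totals[(r.tenant, r.business_context)]
        bucket["prompt"] += r.prompt_tokens
        bucket["completion"] += r.completion_tokens
        bucket["total"] += r.prompt_tokens + r.completion_tokens
    return dict(totals)
```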

Trust needs to extend beyond the technical aspects. We have a legal and commercial framework in place, including policies, procedures, and agreements that govern how we conduct business, interact with our selected AI partners, and comply with laws and regulations. From input to outcome, trust is thus integral to all operations: not just to the technology we use, but also to how it is managed, how we engage with customers, and how we ensure legal compliance.

Orchestration and Grounding

LLM-based applications very often incorporate contextual data using patterns such as Retrieval Augmented Generation (RAG) and other in-context learning methods, to achieve higher accuracy and include up-to-date information. This process is supported by common libraries for accessing data sources and orchestrating LLMs, one of the most notable being LangChain. The generative AI hub is designed to work seamlessly with LangChain and similar libraries.

Consider an application that uses a database of documents (e.g. emails) as contextual information (e.g. to draw on historic email responses). To realize this, we set up LLM deployments in the generative AI hub (see above) for both an embedding model and a completion model. Next, we set up a vector database and store the embeddings of our documents, computed by the embedding model. As outlined in the previous post, our SAP HANA database will soon provide such vector store capabilities, although for some use cases certain SAP BTP services, such as PostgreSQL, might already be a good enough option. With the help of LangChain, we can then orchestrate the flow: embed the query through the generative AI hub, use the vector database to retrieve the most similar documents, and finally generate a response to the query via the generative AI hub.
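The embed, retrieve, and generate flow can be sketched without external dependencies. Here `toy_embed` is a hypothetical stand-in for the embedding deployment (a normalized bag-of-words vector, not a learned embedding) and `InMemoryVectorStore` stands in for the vector database; the grounded prompt would then go to the completion deployment, which is not called. This is plain Python, not LangChain code.

```python
import math
import re
from collections import Counter


def toy_embed(text: str) -> dict[str, float]:
    """Hypothetical stand-in for an embedding deployment: an L2-normalised
    bag-of-words vector stored sparsely as {word: weight}."""
    counts = Counter(re.findall(r"[a-z0-9]+", text.lower()))
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {word: c / norm for word, c in counts.items()}


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    # Both vectors are normalised, so the dot product is the cosine similarity.
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


class InMemoryVectorStore:
    """Stand-in for a vector database holding (document, embedding) pairs."""

    def __init__(self, embed) -> None:
        self.embed = embed
        self.docs: list[tuple[str, dict[str, float]]] = []

    def add(self, text: str) -> None:
        self.docs.append((text, self.embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = self.embed(query)
        ranked = sorted(self.docs, key=lambda doc: cosine(q, doc[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_grounded_prompt(query: str, context: list[str]) -> str:
    """Assemble the prompt that would go to the completion deployment."""
    bullets = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{bullets}\n\nQuestion: {query}"
```

Swapping `toy_embed` for calls to a real embedding deployment, and the in-memory store for a real vector database, keeps the same three-step shape.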

While the generative AI hub embraces LangChain, it will also support an orchestrated completion mechanism to streamline the development process. Users will be able to define an orchestration configuration that specifies the orchestration procedure (such as RAG), the types of grounding data sources (such as a designated vector database), the actual data source destination (detailing how to connect to a vector store), and more. This gives developers working with LLMs a customizable and efficient solution.
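The planned orchestration configuration is described only in terms of its contents. The sketch below models those contents (procedure, grounding source types, destinations) as a hypothetical Python schema with a basic validity check; every field name is an assumption and not the actual configuration format of the generative AI hub.

```python
from dataclasses import dataclass, field


@dataclass
class GroundingSource:
    kind: str         # type of grounding source, e.g. "vector_db" (hypothetical value)
    destination: str  # hypothetical name of the connection details for that source


@dataclass
class OrchestrationConfig:
    procedure: str                 # e.g. "rag"
    embedding_deployment: str      # deployment ID of the embedding model
    completion_deployment: str     # deployment ID of the completion model
    sources: list[GroundingSource] = field(default_factory=list)
    top_k: int = 3                 # number of chunks to retrieve per query

    def validate(self) -> list[str]:
        """Return human-readable problems; an empty list means the config looks usable."""
        problems = []
        if self.procedure == "rag" and not self.sources:
            problems.append("procedure 'rag' needs at least one grounding source")
        if self.top_k < 1:
            problems.append("top_k must be at least 1")
        for source in self.sources:
            if not source.destination:
                problems.append(f"source of kind {source.kind!r} has no destination")
        return problems
```

Declaring the procedure as data, rather than wiring it in application code, is what would let a service run the retrieval and generation steps on the application's behalf.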

For an example of generating email insights with the generative AI hub and LangChain, have a look at this sample project.

Tooling

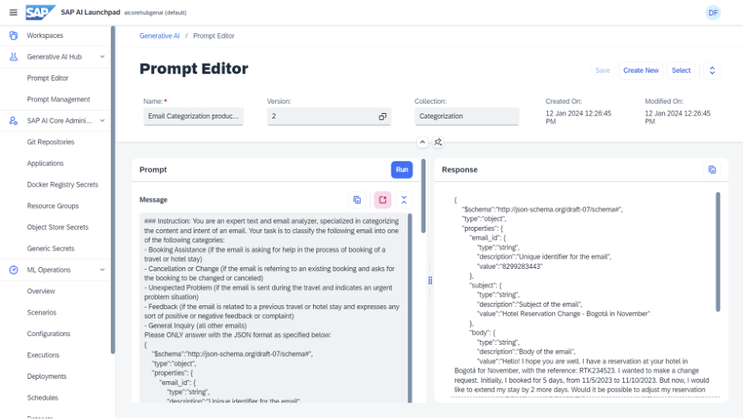

As a further generative AI hub capability, there is a professional prompt engineering experience that we integrate into our SAP AI Launchpad. SAP AI Launchpad is designed from the outset to be a one-stop shop for all AI-related activities, providing a modular architecture that is extendable with further tools, sitting on top of the AI API offered by SAP AI Core. Thus SAP AI Launchpad is the natural place to add prompt engineering capabilities that simplify getting started with GenAI, such as storing and versioning prompts, comparing different variants with different LLMs. The screenshot below shows the prompt editor next to the other AI and GenAI related capabilities of SAP AI Launchpad.

Figure 2: Prompt Engineering with generative AI hub
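Storing and versioning prompts, and comparing variants, can be illustrated with a small in-memory registry. The `PromptRegistry` class below is hypothetical and says nothing about how SAP AI Launchpad stores prompts; it uses Python's `string.Template` for placeholder substitution.

```python
from dataclasses import dataclass
from string import Template
from typing import Optional


@dataclass(frozen=True)
class PromptVersion:
    version: int
    template: str  # uses $placeholders, as understood by string.Template


class PromptRegistry:
    """Hypothetical in-memory prompt store: every save creates a new version."""

    def __init__(self) -> None:
        self._prompts: dict[str, list[PromptVersion]] = {}

    def save(self, name: str, template: str) -> int:
        versions = self._prompts.setdefault(name, [])
        versions.append(PromptVersion(len(versions) + 1, template))
        return versions[-1].version

    def get(self, name: str, version: Optional[int] = None) -> PromptVersion:
        versions = self._prompts[name]
        if version is None:
            return versions[-1]  # latest version by default
        if not 1 <= version <= len(versions):
            raise KeyError(f"{name!r} has no version {version}")
        return versions[version - 1]

    def render(self, name: str, version: Optional[int] = None, **values: str) -> str:
        return Template(self.get(name, version).template).substitute(**values)
```

Rendering two versions of the same prompt for the same input, and sending each to a different LLM deployment, captures the essence of a side-by-side comparison.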

We also see significant potential in making SAP AI Launchpad the place where the productization of LLM-centric applications begins and where prompt performance is continuously monitored.

UI-based tooling is, of course, just one part of the story; we also provide developer-focused tooling. To this end, our generative AI hub SDK and our SAP AI Core SDK are already available.

Conclusion

Our technical architecture at SAP for GenAI revolves around the generative AI hub, which centrally addresses the common concerns of our business applications and which we plan to gradually open up to the entire SAP ecosystem. This technical architecture is only one part of a successful GenAI story. Our next blog post will introduce how we benchmark LLMs, a crucial part of our journey towards relevant, reliable, and responsible business AI powered by GenAI technology.

Co-authored by Dr. Andreas Roth and Dr. Philipp Herzig

SAP Managed Tags: Artificial Intelligence, SAP AI Core, SAP AI Launchpad, SAP Business Technology Platform
