As we approach the close of the first quarter, we're excited to announce the availability of the "Vector Engine" feature in SAP HANA Cloud as part of the QRC1 release. This new feature enhances the multi-model capabilities of SAP HANA Cloud with vector database capabilities for storing and querying embeddings. Embeddings represent complex, unstructured data such as text, images, or user behavior as numeric vectors in a lower-dimensional space, where similar items lie close to one another. This makes such data much easier to search and compare.
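To make "close to one another" concrete, here is a minimal Python sketch of cosine similarity, a measure commonly used to compare embedding vectors (SAP HANA Cloud's Vector Engine offers a `COSINE_SIMILARITY` SQL function for the same purpose). The three-dimensional vectors are made-up toy values; real embeddings, such as those from text-embedding-ada-002, have 1,536 dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between vectors a and b (range -1..1)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_a * norm_b)


# Toy "embeddings" with hypothetical values, for illustration only
great_food = [0.9, 0.1, 0.2]
tasty_meal = [0.8, 0.2, 0.25]
late_delivery = [0.1, 0.9, 0.3]

# Semantically similar texts should score higher than unrelated ones
print(round(cosine_similarity(great_food, tasty_meal), 3))
print(round(cosine_similarity(great_food, late_delivery), 3))
```

Because cosine similarity ignores vector length and compares only direction, it is a common default for text embeddings.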

Moreover, integrating SAP HANA Cloud Vector Engine with the Generative AI Hub, which provides access to Large Language Models (LLMs), empowers our customers to develop robust AI-based applications and reporting solutions while complying with our governance and ethical framework.

Numerous blogs on SAP Community and Medium explore the concepts behind SAP HANA Cloud Vector Engine. For a comprehensive introduction to the basics and features of SAP HANA Cloud, Shabana's blog is a great resource. Here, we concentrate on the use case we published for the Discovery Mission and the scenarios it covers.

We have launched an SAP Discovery Mission that covers the basics of SAP HANA Cloud Vector Engine, embedding texts with foundation models accessed from SAP Generative AI Hub or Azure OpenAI, and deploying a retrieval-augmented generation (RAG) application using SAP CAP. All repositories are provided as part of the mission. Here is the link to our Discovery Mission: Harnessing Generative AI Capabilities with SAP HANA Cloud Vector Engine

In this blog, I will discuss the architecture; the follow-up blogs in this series will detail one of those scenarios. For the remaining scenarios, I encourage you to subscribe to the Discovery Mission to gain access to the Git repositories and Python scripts.

What is the ideal use case for this Discovery Mission?

Consider a hypothetical healthcare client, referred to as Client X, who has data stored across multiple systems, including SAP, Salesforce, and external platforms. This data could consist of customer interactions such as calls or emails to their call centers, which could be inquiries, service requests, or feedback about the services offered by the healthcare company.

Client X is interested in leveraging SAP HANA Cloud to process this unstructured data, enabling their business to run direct queries on feedback or transcribed phone calls. This enriched information can then be used for reporting purposes. For instance, if the business asks, "Display all service requests from the past two weeks," the system should be capable of scanning all transcribed texts, analyzing the content to distinguish between service requests and feedback, and delivering the relevant customer texts for the business to act upon. This scenario provides a clear illustration of how:

- SAP HANA Cloud can access multiple Large Language Models (LLMs) from the SAP Generative AI Hub (GenAI Hub) and embed the transcribed texts.

- The SAP GenAI Hub SDKs enable seamless access to LLMs.

- SAP HANA Cloud can access JSON documents either from a Data Lake or from JSON Document Collections within SAP HANA Cloud itself.

- The SAP LangChain plugin aids in ingesting the embedded data into SAP HANA Cloud.

- The Vector Engine can be leveraged to query the embedded texts in SAP HANA Cloud based on user prompts.
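The "past two weeks" request from the use case combines a time filter with a classification step. The sketch below is purely illustrative: the record fields (`text`, `received_at`) and the keyword-based `classify` function are hypothetical stand-ins for the LLM-based classification the scenario describes.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Interaction:
    # Hypothetical record shape for a transcribed call or email
    text: str
    received_at: datetime


def classify(text: str) -> str:
    """Hypothetical stand-in for an LLM call: naive keyword labeling."""
    request_markers = ("please", "need", "request", "schedule", "fix")
    lowered = text.lower()
    return "service_request" if any(m in lowered for m in request_markers) else "feedback"


def recent_service_requests(items, now, days=14):
    """Return interactions from the last `days` days classified as service requests."""
    cutoff = now - timedelta(days=days)
    return [i for i in items if i.received_at >= cutoff and classify(i.text) == "service_request"]


now = datetime(2024, 3, 28, tzinfo=timezone.utc)
items = [
    Interaction("Please schedule a follow-up appointment.", now - timedelta(days=3)),
    Interaction("The staff were friendly and helpful.", now - timedelta(days=5)),
    Interaction("I need my billing address updated.", now - timedelta(days=30)),
]
for hit in recent_service_requests(items, now):
    print(hit.text)
```

In the described architecture, the classification would come from an LLM rather than keywords; the date cut-off stays a plain filter.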

While we cannot provide actual customer data due to privacy concerns, we will substitute it with JSON reviews about various products or restaurants that will demonstrate all the points mentioned above. We have included a schema as part of the Discovery Mission, and we will also share some scenarios and code snippets in our upcoming blogs.
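The actual schema ships with the Discovery Mission, so the field names below (`review_id`, `restaurant`, `rating`, `text`) are assumptions. The sketch shows one way to turn review JSON into (text, metadata) pairs, mirroring the `page_content`/`metadata` split of a LangChain `Document`, before the text is embedded.

```python
import json

# Hypothetical sample; the real schema is provided in the Discovery Mission
SAMPLE = """
[
  {"review_id": "r1", "restaurant": "Cafe Uno", "rating": 5, "text": "Fantastic pasta and quick service."},
  {"review_id": "r2", "restaurant": "Cafe Uno", "rating": 2, "text": "Cold food and a long wait."}
]
"""


def to_text_and_metadata(raw: str) -> list[tuple[str, dict]]:
    """Split each review into the text to embed and the metadata stored with its vector."""
    docs = []
    for review in json.loads(raw):
        text = review.get("text", "").strip()
        if not text:
            continue  # nothing to embed
        metadata = {k: v for k, v in review.items() if k != "text"}
        docs.append((text, metadata))
    return docs


for text, meta in to_text_and_metadata(SAMPLE):
    print(meta["review_id"], "->", text)
```

Keeping fields such as the rating as metadata lets later queries filter results without re-embedding anything.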

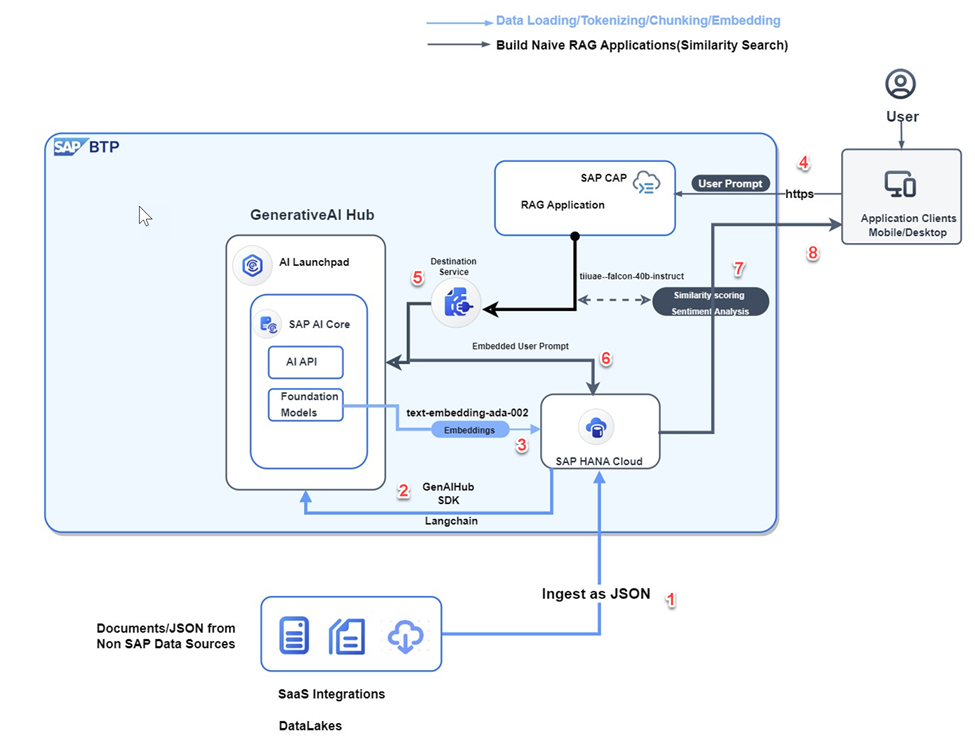

What about the architecture for the use case leveraging SAP HANA Cloud Vector Engine and Large Language Models (LLMs) from Generative AI Hub?

The architecture we're discussing consists of two main phases: data ingestion and user interaction. Let's dive into the details:

Phase 1: Data Ingestion (Steps 1-3) & Phase 2: User Interaction (Steps 4-8)

- We kick things off by reading customer reviews from an Azure data lake and ingesting them as JSON documents into SAP HANA Cloud. The schema for these JSON documents is provided as part of this Discovery Mission; accessing the Data Lake is covered later in this blog series, where we walk you through the code.

- Next, we use the GenAI Hub SDKs and the LangChain plugin to read the JSON documents from SAP HANA Cloud and embed them with the text-embedding-ada-002 model from Generative AI Hub.

- With the embeddings obtained, we ingest the data back into SAP HANA Cloud using the LangChain plugin, setting the stage for the next phase.

- Users can now interact with the deployed CAP app by prompting it with queries about restaurant reviews.

- The app uses the destination service to connect to the GenAI Hub, where the user's prompt is embedded.

- This embedded prompt is then queried against the vectors ingested in Step 3 to retrieve the most relevant reviews.

- Before presenting the results to the user, we call the tiiuae--falcon-40b-instruct LLM from the GenAI Hub to analyze the sentiment of the retrieved text, adding an extra layer of context.

- Finally, the app responds to the user's prompt with the actual text, along with its relevance score and sentiment analysis.
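The retrieval steps above boil down to embedding the prompt and ranking stored vectors by similarity. The sketch below runs entirely in memory: `toy_embed` is a hypothetical bag-of-words stand-in for text-embedding-ada-002, and the Python list stands in for the SAP HANA Cloud table. In the described architecture, the similarity query runs in SAP HANA Cloud instead.

```python
import math
import re
from collections import Counter

# Hypothetical tiny vocabulary; real embedding models learn their dimensions
VOCAB = ["pasta", "pizza", "service", "slow", "friendly", "cold", "delicious", "wait"]


def toy_embed(text: str) -> list[float]:
    """Hypothetical embedding: word counts over VOCAB, L2-normalized."""
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    vec = [float(counts[w]) for w in VOCAB]
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def top_k(prompt: str, store: list[tuple[str, list[float]]], k: int = 2):
    """Rank stored (text, vector) pairs by similarity to the embedded prompt."""
    q = toy_embed(prompt)
    # Vectors are unit length (or all zeros, which score 0), so the
    # dot product equals cosine similarity here
    scored = [(text, sum(a * b for a, b in zip(q, vec))) for text, vec in store]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


reviews = [
    "Delicious pasta and friendly staff",
    "Slow service and a long wait",
    "The pizza arrived cold",
]
store = [(text, toy_embed(text)) for text in reviews]  # ingestion (steps 2-3)

for text, score in top_k("Was the service slow?", store):  # steps 5-6
    print(f"{score:.2f}  {text}")
```

The key design constraint carries over to the real system: prompts and documents must be embedded by the same model, otherwise their vectors are not comparable.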

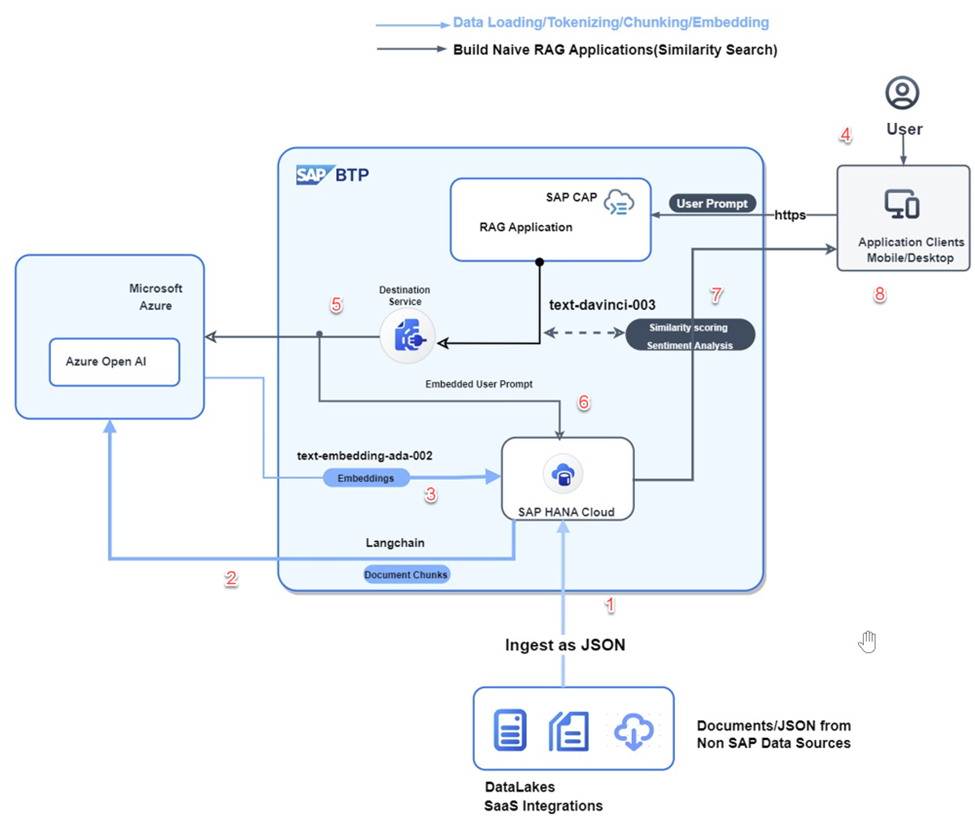

What about the architecture for the use case leveraging SAP HANA Cloud Vector Engine and Large Language Models (LLMs) from Azure OpenAI?

We adopt an approach similar to the one discussed above.

Phase 1: Data Ingestion (Steps 1-3) & Phase 2: User Interaction (Steps 4-8)

- Our journey begins by connecting to an Azure data lake to read customer reviews, which are then ingested as JSON documents into SAP HANA Cloud. The schema for these JSON documents is provided as part of the mission, and we'll walk through the code in this blog series for a more detailed understanding.

- Next, we use the Azure OpenAI SDKs and the LangChain plugin to read the JSON documents from SAP HANA Cloud and embed them with the text-embedding-ada-002 model from Azure OpenAI.

- We then ingest the resulting embeddings back into SAP HANA Cloud. The LangChain plugin streamlines this step, connecting Azure OpenAI and SAP HANA Cloud.

- At this stage, users can interact with the deployed SAP Cloud Application Programming Model (CAP) app, prompting it with queries about product reviews.

- When a user submits a prompt through the CAP app, the app uses the destination service to connect to Azure OpenAI, where the prompt is embedded into a vector that can be compared with the stored embeddings.

- The embedded prompt is then queried against the vectors ingested in Step 3, identifying the customer reviews that most closely match the user's query.

- For the matching reviews, we retrieve the text along with its similarity score, and we call the text-davinci-003 LLM from Azure OpenAI to analyze its sentiment.

- Finally, we synthesize the retrieved text, scoring, and sentiment analysis into a coherent response tailored to the user's prompt.
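The last two steps can be sketched as a prompt to a completion model followed by assembling the final answer. The prompt wording, the `complete` callable, and the response fields are hypothetical; in the mission, the completion call goes to text-davinci-003 on Azure OpenAI (or tiiuae--falcon-40b-instruct on GenAI Hub), whereas here a fake function is injected so the example runs offline.

```python
import json
from typing import Callable

ALLOWED = {"positive", "negative", "neutral"}


def sentiment_prompt(text: str) -> str:
    """Build a hypothetical zero-shot prompt asking for a one-word sentiment label."""
    return (
        "Classify the sentiment of the following customer review as "
        "positive, negative, or neutral. Answer with one word.\n\n"
        f"Review: {text}\nSentiment:"
    )


def analyze(text: str, complete: Callable[[str], str]) -> str:
    """Call the injected completion function and normalize its answer."""
    answer = complete(sentiment_prompt(text)).strip().lower().rstrip(".")
    return answer if answer in ALLOWED else "unknown"


def build_response(hits: list[tuple[str, float]], complete: Callable[[str], str]) -> str:
    """Combine retrieved text, similarity score, and sentiment into one JSON answer."""
    results = [
        {"text": text, "score": round(score, 3), "sentiment": analyze(text, complete)}
        for text, score in hits
    ]
    return json.dumps({"results": results}, indent=2)


def fake_llm(prompt: str) -> str:
    # Offline stand-in for the real LLM call; labels by a single keyword
    review = prompt.split("Review:", 1)[1]
    return "Negative." if "slow" in review.lower() else "positive"


print(build_response([("Slow service and a long wait", 0.816)], fake_llm))
```

Normalizing the model's free-text answer to a fixed label set keeps the app's output predictable even when the LLM adds punctuation or casing.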

Throughout the following blog series, we'll dive deeper into the code implementation, guiding you through each step of this exciting journey. Stay tuned for more insights and practical examples!

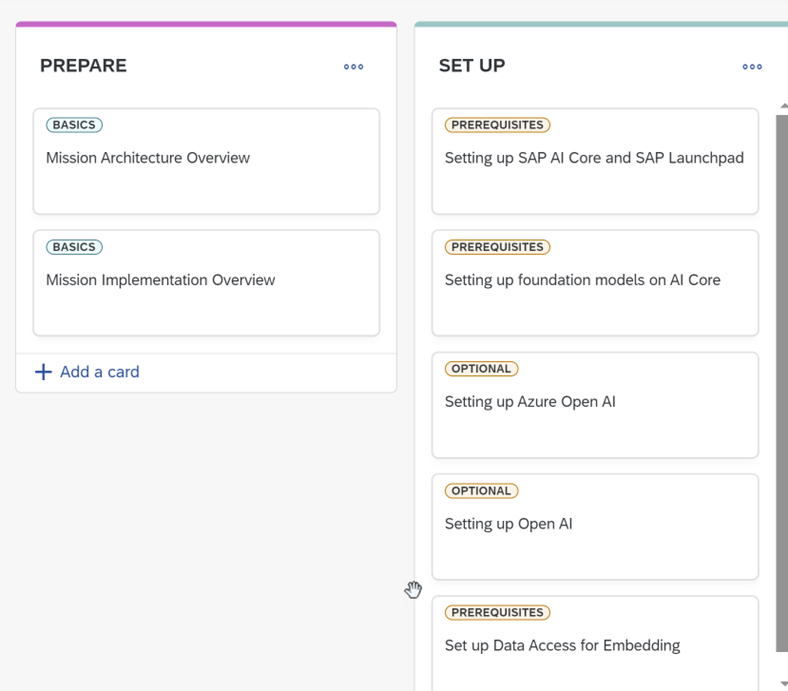

What configurations and prerequisites do you need to navigate through this Discovery Mission?

To ensure you're ready to execute these steps, please refer to the "Preparation" and "Setup" sections of the Project board, included in the Discovery Mission.

If you don't have a subscription for GenAI Hub, don't worry: we've provided alternative options using Azure OpenAI to keep you moving forward.
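One way to keep a script working with either backend is to pick it based on whichever credentials are configured. The environment variable names below are assumptions modeled on common conventions (`AICORE_*` for SAP AI Core, on which Generative AI Hub runs, and `AZURE_OPENAI_*` for Azure OpenAI); check the mission's "Setup" section for the names its scripts actually read.

```python
import os
from collections.abc import Mapping

# Hypothetical variable names; adjust to the ones used in the mission's setup
GENAI_HUB_VARS = ("AICORE_CLIENT_ID", "AICORE_CLIENT_SECRET", "AICORE_AUTH_URL", "AICORE_BASE_URL")
AZURE_OPENAI_VARS = ("AZURE_OPENAI_API_KEY", "AZURE_OPENAI_ENDPOINT")


def choose_backend(env: Mapping[str, str] = os.environ) -> str:
    """Prefer GenAI Hub when its credentials are present, else fall back to Azure OpenAI."""

    def has_all(names) -> bool:
        return all(env.get(n) for n in names)

    if has_all(GENAI_HUB_VARS):
        return "genai_hub"
    if has_all(AZURE_OPENAI_VARS):
        return "azure_openai"
    raise RuntimeError("No LLM credentials found: configure GenAI Hub or Azure OpenAI first")


# Example with placeholder values instead of the real process environment
print(choose_backend({"AZURE_OPENAI_API_KEY": "placeholder", "AZURE_OPENAI_ENDPOINT": "placeholder"}))
```

Passing the environment as a parameter keeps the selection logic testable without touching real credentials.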

What about the essential repositories and scripts?

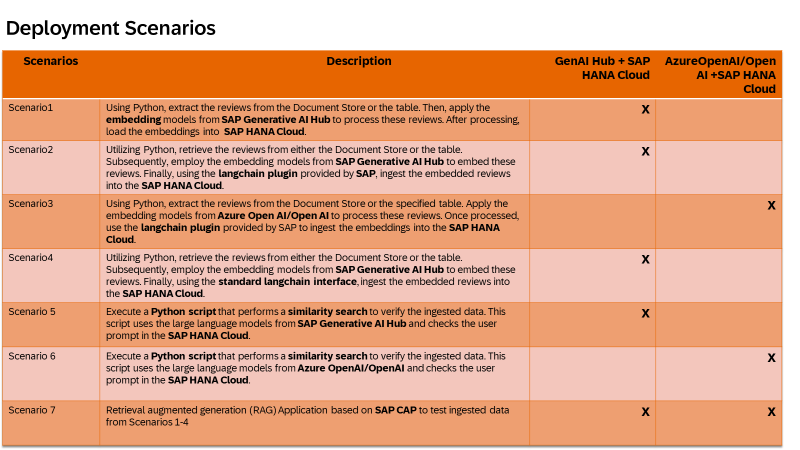

In our mission, we explored diverse scenarios to showcase the capabilities of SAP HANA Cloud Vector Engine and Generative AI Hub. For experts eager to delve deeper into these technologies, we've provided Python scripts as a practical resource. The other scenarios cater to experts focused on SAP HANA Cloud Vector Engine and Azure OpenAI/OpenAI integration.

Here's a breakdown of the scenarios:

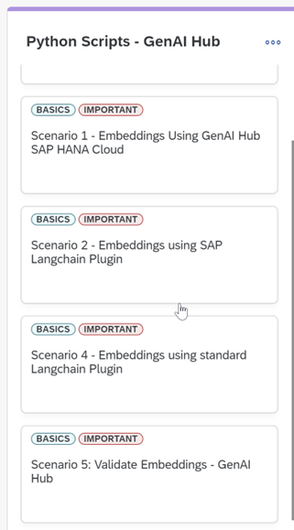

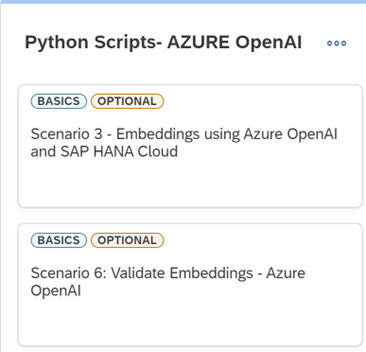

Scenarios 1 through 4 introduced various Python scripts that demonstrated the core functionalities of embedding using different SDKs & plugins. Building upon the previous scenarios, Scenarios 5 and 6 focused on validating the embeddings generated from Scenarios 1 to 4. These validation steps ensured the accuracy and reliability of the embeddings, which are crucial for downstream tasks like similarity analysis and clustering.
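Embedding validation can start with simple structural checks before any similarity testing. A minimal sketch, assuming embeddings arrive as Python lists of floats keyed by a row id: it checks the expected dimension (1,536 for text-embedding-ada-002), finiteness, and a non-zero norm. The checks in the mission's Scenario 5 and 6 scripts may differ.

```python
import math

EXPECTED_DIM = 1536  # output size of text-embedding-ada-002


def validate_embedding(vec, expected_dim=EXPECTED_DIM) -> list[str]:
    """Return the problems found in one embedding (an empty list means it passed)."""
    problems = []
    if len(vec) != expected_dim:
        problems.append(f"dimension {len(vec)} != {expected_dim}")
    if not all(isinstance(x, (int, float)) and math.isfinite(x) for x in vec):
        problems.append("contains non-numeric, NaN, or infinite values")
    elif math.sqrt(sum(x * x for x in vec)) == 0:
        problems.append("zero vector (similarity undefined)")
    return problems


def validate_all(rows):
    """Map each failing row id to its problems; passing rows are omitted."""
    return {row_id: p for row_id, vec in rows if (p := validate_embedding(vec))}


# Hypothetical rows: one valid, one with the wrong size, one containing NaN
rows = [
    ("r1", [0.01] * 1536),
    ("r2", [0.01] * 1024),
    ("r3", [float("nan")] + [0.0] * 1535),
]
print(validate_all(rows))
```

A dimension mismatch usually means documents and prompts were embedded with different models, which silently breaks similarity search.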

Finally, Scenario 7 showcased an SAP CAP application that validated embeddings based on either SAP Generative AI Hub or Azure OpenAI/OpenAI integration. This real-world application demonstrated how these technologies can be seamlessly integrated into existing workflows and applications.

Throughout these scenarios, we aimed to provide a comprehensive overview of SAP HANA Cloud Vector Engine and Generative AI Hub, equipping experts with the tools and knowledge necessary to leverage these powerful technologies effectively.

If you have BTP subscriptions to both SAP HANA Cloud and SAP Generative AI Hub, you can use the "Python Scripts-GenAI Hub" tile. It lets you import data from the provided sample JSON documents and cross-check it using the Python scripts included in the tile.

If you have subscriptions to SAP HANA Cloud and Azure OpenAI, you can run the "Python Scripts-Azure Open AI" tile. It lets you import data from sample JSON documents and then verify its accuracy.

How do you deploy the SAP CAP Application?

You can set it up by following the step-by-step instructions provided in the corresponding tile. Note that the CAP application works with LLMs from either SAP Generative AI Hub or Azure OpenAI.

In the upcoming blog, we'll dive into a practical, hands-on exploration and code review for one of the scenarios we've discussed.

We encourage you to delve into the mission and follow the step-by-step content to gain a deeper understanding of the SAP HANA Cloud Vector Engine and the features you can experiment with. Your feedback is highly anticipated and greatly valued. If you encounter any difficulties while working through the mission, don't hesitate to contact our support team through the Discovery Center mission. Here's to an enjoyable and enlightening learning journey!
