- First steps using the Hana Vector Engine with SAP ...

NOTE: The views and opinions expressed in this blog are my own

In a recent blog, "Which Embedding Model should I use with my Corporate LLM?", I highlighted the importance of choosing your embedding model carefully when combining RAG (Retrieval-Augmented Generation) with LLM calls to enrich your queries with additional business context.

For enterprises, the next important step may then be to choose which database to store your document embeddings in.

The good news is that Hana Cloud's latest release (QRC1/2024) now includes a Vector Engine. You can upgrade now or use the trial to test it out.

From Developer's Desk: SAP HANA Cloud Vector Engine

SAP HANA Cloud, SAP HANA Database Vector Engine Guide

One of the main prerequisites before you can test this is access to an embedding model that can take your text and convert it to embeddings.

Some options to do that are:

- OpenAI API access (e.g. access to text-embedding-ada-002)

- Access to open-source LLM APIs (e.g. LLAMA2, PHI-2, MISTRAL, GROK, etc.)

- SAP GEN AI (Enterprise grade access to a curated set of models, including Azure OpenAI APIs)

In this blog I will focus on using SAP GEN AI to access Azure OpenAI APIs, which provides greater security for the embedding process.

A typical scenario would be to take your unstructured enterprise data (e.g. enterprise documents), split it into suitable chunks (e.g. whole documents, pages or paragraphs), convert each chunk to an embedding, and store those embeddings in a vector database for subsequent optimised searching.
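As a rough illustration of the chunking step, here is a minimal sketch that splits a document on blank lines and merges short paragraphs up to a size limit. The function name `split_into_paragraphs` and the `max_chars` parameter are my own assumptions for illustration; real documents often need smarter, format-aware splitting.

```python
def split_into_paragraphs(document: str, max_chars: int = 1000) -> list[str]:
    """Split text on blank lines, then merge neighbouring paragraphs up to max_chars."""
    paragraphs = [p.strip() for p in document.split("\n\n") if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        candidate = f"{current}\n\n{para}" if current else para
        if len(candidate) <= max_chars:
            current = candidate
        else:
            if current:
                chunks.append(current)
            current = para  # a single over-long paragraph is kept whole
    if current:
        chunks.append(current)
    return chunks


sample = "First paragraph.\n\nSecond paragraph.\n\nThird paragraph."
chunks = split_into_paragraphs(sample, max_chars=40)
for chunk in chunks:
    print(repr(chunk))
```

Each resulting chunk would then be passed to the embedding model and stored as one row.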

Let's work with a simpler example though. We have a list of product descriptions:

    products = [
        "Bubbling Berry Blast",
        "Glimmering Galaxy Drops",
        "Enchanted Forest Fudge",
        "Cloud Nine Confections",
        "Golden Gabfest Ganache",
        "Spectral Spice Skewers",
        "Cosmic Caramel Crunch",
        "Whirlwind Whispers Wafers",
        "Echoing Eclair Euphoria",
        "Mystical Moon Munchies"
    ]

Without adding any additional information, we want to see how well the OpenAI embedding model (text-embedding-ada-002) enables searches that find which products are closely related to "Chocolate".

The products all need to be converted to Embeddings, and the question "Chocolate" needs to be turned into Embeddings. Then functions like cosine_similarity and l2distance can be applied to find out which products may be most likely related to Chocolate.
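To make the two measures concrete, here is a small pure-Python sketch of what cosine similarity and L2 (Euclidean) distance compute. The two-dimensional vectors are made-up toy values, not real embeddings, and the helper names are my own; in the rest of this blog the equivalent calculations are done by Hana's built-in functions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: closer to 1 means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def l2_distance(a, b):
    """Straight-line distance between two vectors: closer to 0 means more similar."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Toy vectors (assumed values for illustration only)
question = [1.0, 0.0]
candidates = {"product_a": [0.8, 0.6], "product_b": [0.0, 1.0]}

# Rank candidates by cosine similarity, highest first
ranked = sorted(
    candidates,
    key=lambda name: cosine_similarity(question, candidates[name]),
    reverse=True,
)
for name in ranked:
    vec = candidates[name]
    print(name, cosine_similarity(question, vec), l2_distance(question, vec))
```

Note that the two measures sort in opposite directions: a good match has a high cosine similarity but a low L2 distance.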

Stop for a moment and have a guess.....

Based on your understanding of the world, what would be your top 3 products from the list that may contain Chocolate?

My guess would be:

- Glimmering Galaxy Drops

- Golden Gabfest Ganache

- Enchanted Forest Fudge

The embedding model's answer isn't wrong; it's just that different embedding models may have different life experiences (based on their design and training), so they may give different results (guesses).

The remainder of this blog will go into the steps required to reproduce this for yourself.

PRE-REQ:

- SAP AI CORE (extended plan), with Azure OpenAI Ada foundation model deployed

- SAP HANA Cloud (Release QRC1/2024) [I used the trial edition]

NOTE: You can proceed without SAP AI CORE if you have access to an OpenAI API key. Some small code changes are required; if you get stuck, ask in the comments.

First let's install the necessary Python libraries:

    #!pip install hdbcli
    #!pip install generative-ai-hub-sdk

Next let's connect to Hana:

    import hdbcli
    from hdbcli import dbapi

    conn = dbapi.connect(
        address=<fill this in>,
        port=<fill this in>,
        user=<fill this in>,
        password=<fill this in>,
        encrypt=True
    )

Next let's connect to SAP Gen AI:

    import os

    os.environ["AICORE_AUTH_URL"] = <fill this in> + "/oauth/token"
    os.environ["AICORE_CLIENT_ID"] = <fill this in>
    os.environ["AICORE_CLIENT_SECRET"] = <fill this in>
    os.environ["AICORE_RESOURCE_GROUP"] = <fill this in>
    os.environ["AICORE_BASE_URL"] = <fill this in> + "/v2"

    from gen_ai_hub.proxy.native.openai import embeddings

Next let's create tables and views in Hana to help with the testing:

    cursor = conn.cursor()

    ## Create Table in Hana for Embeddings Content
    sql_command = '''CREATE TABLE "EMBEDDING_CONTENT" (
        "id" BIGINT,
        "content" NCLOB MEMORY THRESHOLD 0,
        "model_dim" nvarchar(50), -- Used to compare embedding models
        "EMBEDDING" REAL_VECTOR -- variable size Vectors
    )'''
    cursor.execute(sql_command)

    ## Create Table in Hana for Embeddings Questions
    sql_command = '''CREATE TABLE "EMBEDDING_QUESTIONS" (
        "id" BIGINT,
        "content" NCLOB MEMORY THRESHOLD 0,
        "model_dim" nvarchar(50),
        "EMBEDDING" REAL_VECTOR
    )'''
    cursor.execute(sql_command)

    # Create View to Compare embeddings
    sql_command = '''CREATE VIEW "V_EMBEDDING_COMPARE" AS (
        SELECT EQ."model_dim", EQ."id" as "question_id", EQ."content" as "question",
               EC."id" as "content_id", EC."content",
               COSINE_SIMILARITY(EQ."EMBEDDING", EC."EMBEDDING") as "COSINE_SIMILARITY",
               L2DISTANCE(EQ."EMBEDDING", EC."EMBEDDING") as "L2DISTANCE"
        FROM EMBEDDING_QUESTIONS EQ
        INNER JOIN EMBEDDING_CONTENT EC
            ON EQ."model_dim" = EC."model_dim"
    )
    '''
    cursor.execute(sql_command)

    # Create View to Rank Comparisons
    sql_command = '''CREATE VIEW "V_EMBEDDING_RANK" AS (
        SELECT "model_dim", "question_id", "question", "content_id", "content",
               ROW_NUMBER() OVER (PARTITION BY "model_dim", "question_id" ORDER BY "COSINE_SIMILARITY" DESC) AS "cos_sim_row_num",
               ROW_NUMBER() OVER (PARTITION BY "model_dim", "question_id" ORDER BY "L2DISTANCE" ASC) AS "l2d_row_num"
        FROM "V_EMBEDDING_COMPARE"
        ORDER BY "model_dim", "question_id", "content_id"
    )
    '''
    cursor.execute(sql_command)

    cursor.close()

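Let's create a reusable embedding function:

    def get_sap_genai_ada_embedding(input) -> str:
        # Returns the embedding as a string such as '[0.1, 0.2, ...]',
        # which is the text format passed to TO_REAL_VECTOR later on
        response = embeddings.create(
            input=input,
            model_name="text-embedding-ada-002",
            #encoding_format='base64'
        )
        return str(response.data[0].embedding)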

TIP: Change this for OpenAI if you don't have the more secure Enterprise ready SAP GEN AI
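If you are going the OpenAI route, one possible shape for that change is sketched below. It assumes the official `openai` Python package (version 1.x) and an `OPENAI_API_KEY` environment variable; the function name `get_openai_ada_embedding` and the choice to pass the client in as a parameter are my own, not something required by the library.

```python
def get_openai_ada_embedding(text: str, client) -> str:
    """Return the embedding as a string such as '[0.1, 0.2, ...]' for TO_REAL_VECTOR.

    `client` is expected to be an openai.OpenAI instance (assumption: openai>=1.0).
    """
    response = client.embeddings.create(
        input=text,
        model="text-embedding-ada-002",
    )
    return str(response.data[0].embedding)


# Usage (requires `pip install openai` and OPENAI_API_KEY in the environment):
# from openai import OpenAI
# client = OpenAI()
# embedding = get_openai_ada_embedding("Chocolate", client)
```

The return value has the same string format as the SAP GEN AI version, so the insert code that follows should work unchanged if you swap the function call.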

Now, using the products defined at the beginning of the blog, let's convert them to CSV-style rows and insert them into Hana using the new Vector Engine:

    products = [
        "Bubbling Berry Blast",
        "Glimmering Galaxy Drops",
        "Enchanted Forest Fudge",
        "Cloud Nine Confections",
        "Golden Gabfest Ganache",
        "Spectral Spice Skewers",
        "Cosmic Caramel Crunch",
        "Whirlwind Whispers Wafers",
        "Echoing Eclair Euphoria",
        "Mystical Moon Munchies"
    ]

    ## Create CSV Rows for EMBEDDING_CONTENT
    csv_rows = []
    forceLowerCase = False  # experiment with this if you like, to see the impact on rankings

    for i, p in enumerate(products):
        if forceLowerCase:
            p = p.lower()
        content = p
        model_dim = 'ADA_1536'  ## OPENAI ADA ... Dimensions 1536
        embedding = get_sap_genai_ada_embedding(content)
        csv_rows.append([i, content, model_dim, embedding])

    ### insert csv rows into Embedding Content
    conn.setautocommit(False)
    cursor = conn.cursor()
    sql = '''INSERT INTO EMBEDDING_CONTENT ("id","content", "model_dim", "EMBEDDING") VALUES (?,?,?,TO_REAL_VECTOR(?))'''

    try:
        cursor.executemany(sql, csv_rows)
        conn.commit()  # commit only if every row was inserted
    except Exception as e:
        conn.rollback()
        print("An error occurred:", e)
    finally:
        cursor.close()

Now we can store the question 'Chocolate' as an embedding too:

    question = 'Chocolate'
    embedding = get_sap_genai_ada_embedding(question)
    csv_rows = [[0, question, 'ADA_1536', embedding]]

    ### insert csv rows into Embedding Questions
    conn.setautocommit(False)
    cursor = conn.cursor()
    sql = '''INSERT INTO EMBEDDING_QUESTIONS ("id","content", "model_dim", "EMBEDDING") VALUES (?,?,?,TO_REAL_VECTOR(?))'''

    try:
        cursor.executemany(sql, csv_rows)
        conn.commit()  # commit only if the insert succeeded
    except Exception as e:
        conn.rollback()
        print("An error occurred:", e)
    finally:
        cursor.close()

NOTE: It's not necessary in an app to save the question embedding into Hana. Instead, the question embedding can be passed directly into a similarity search using a SELECT statement (e.g. as part of a WHERE clause). Examples can be found in the help docs linked earlier.
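A minimal sketch of that direct approach is shown below, using the `EMBEDDING_CONTENT` table created above. The function name `find_similar_content`, the `"SIMILARITY"` column alias and the `top_k` parameter are my own assumptions for illustration; check the Vector Engine guide for the query shape that best suits your release.

```python
def find_similar_content(cursor, question_embedding: str,
                         model_dim: str = "ADA_1536", top_k: int = 3):
    """Return the top_k rows of EMBEDDING_CONTENT closest to the question embedding.

    question_embedding is the string form of the vector, e.g. '[0.1, 0.2, ...]',
    and is passed as a bind parameter rather than stored in a table.
    """
    sql = f'''SELECT "id", "content",
        COSINE_SIMILARITY("EMBEDDING", TO_REAL_VECTOR(?)) AS "SIMILARITY"
    FROM "EMBEDDING_CONTENT"
    WHERE "model_dim" = ?
    ORDER BY "SIMILARITY" DESC
    LIMIT {int(top_k)}'''
    cursor.execute(sql, (question_embedding, model_dim))
    return cursor.fetchall()


# Usage (assumes the `conn` and get_sap_genai_ada_embedding defined earlier):
# cursor = conn.cursor()
# rows = find_similar_content(cursor, get_sap_genai_ada_embedding("Chocolate"))
# cursor.close()
```

With this pattern the `EMBEDDING_QUESTIONS` table and the two views are only needed for side-by-side comparisons like the one in this blog.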

Finally in Hana we can compare the results using the following SQL:

    SELECT * FROM "EMBEDDING_CONTENT";
    SELECT * FROM "EMBEDDING_QUESTIONS";
    SELECT * FROM "V_EMBEDDING_COMPARE";
    SELECT * FROM "V_EMBEDDING_RANK" ORDER BY "cos_sim_row_num" ASC;

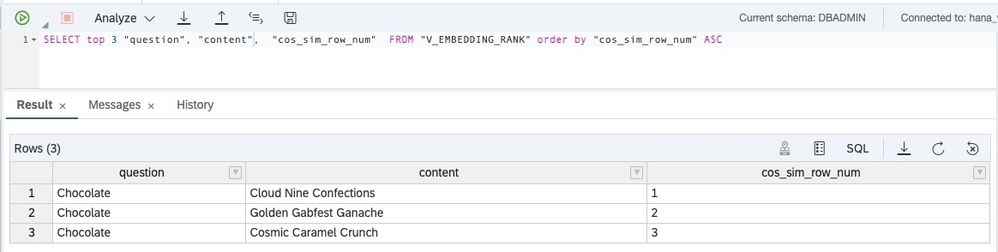

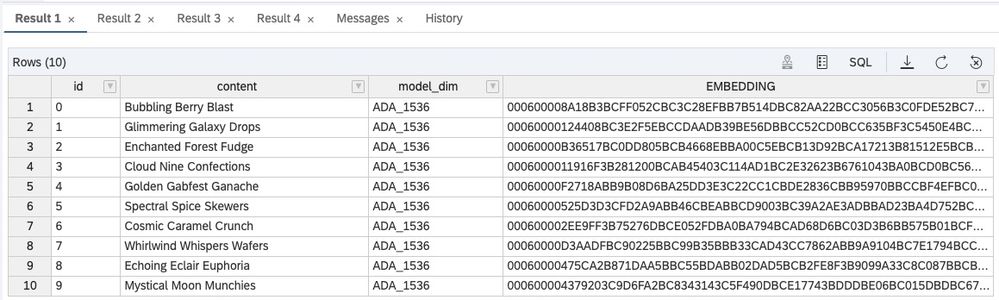

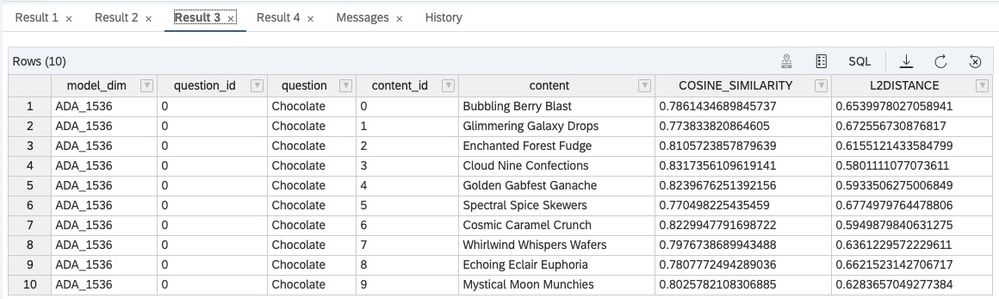

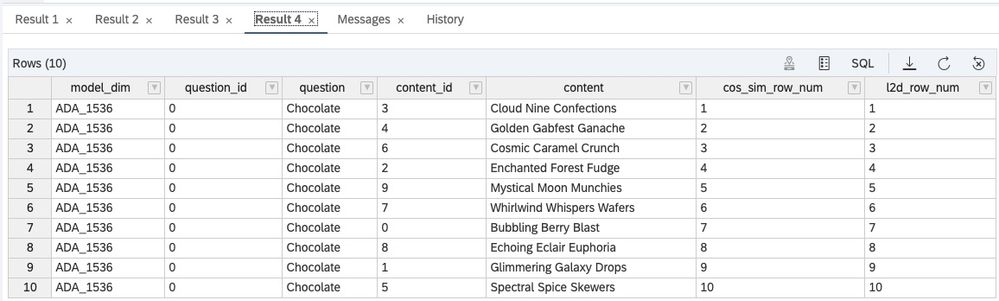

The results are as follows....

The product descriptions saved as embeddings:

Question 'Chocolate' converted to an embedding:

Similarity comparison results:

Finally the full Ranking order (based on cosine_similarity):

I hope this blog helped you better understand how embeddings work and how the Hana Vector Engine and GEN AI can help you to build a robust solution for enterprises.

How would you improve the embeddings in this example to get more accurate results?

I welcome your comments and suggestions below.

--------------------------------------------------------------------------------------------

Other interesting related reads are:

Langchain Vector Stores - SAP HANA Cloud Vector Engine

