CAP LLM Plugin – Empowering Developers for rapid G...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Background and the Challenges

In today's swiftly evolving technological environment, integrating Generative AI into business applications and product frameworks has become critically important. Leveraging the capabilities of Large Language Models (LLMs) through the SAP Generative AI Hub, alongside the vector engine of SAP HANA Cloud, enables customers to build resilient Gen AI-based SAP Cloud Application Programming Model (SAP CAP) solutions. However, developing such applications requires strict adherence to data governance and ethical norms. Anonymizing sensitive data during interactions with LLMs is therefore a pivotal component of responsible data management, and it demands a considerable investment of time and resources.

Moreover, developers building such applications need easy access to LLMs via the SAP Generative AI Hub. However, the initial configuration of SAP AI Core and LLM hosting within the SAP Generative AI Hub can be intricate and time-intensive. Furthermore, every subsequent interaction with an LLM requires developers to write repetitive boilerplate code, which increases the development effort for such applications.

Retrieval-Augmented Generation (RAG) solutions are crucial for addressing challenges such as hallucination and weak task performance when a task depends on enterprise-specific knowledge. For developers building RAG applications, the SAP HANA Cloud vector engine becomes pivotal for storing and retrieving enterprise-specific contextual data as embeddings. However, building such applications is often time-intensive, demanding significant effort and a steep learning curve.

Introducing the plugin

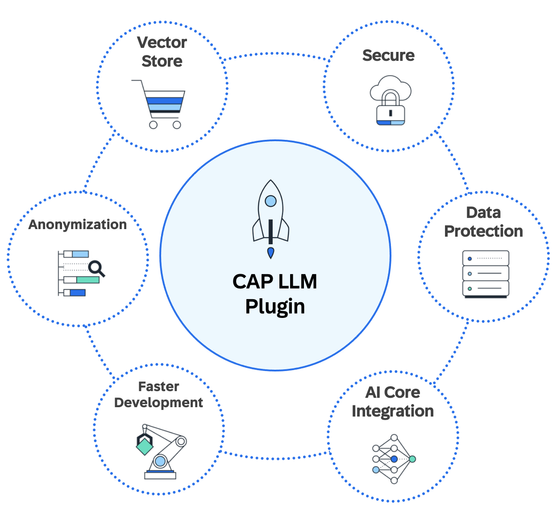

Introducing the CAP LLM Plugin, an indispensable solution empowering developers with the essential tools to effortlessly craft robust AI-driven CAP applications. Seamlessly integrating the LLM capabilities of SAP Generative AI Hub with the vector engine and anonymization features of SAP HANA Cloud, this plugin revolutionizes the development journey.

Harnessing the power of SAP HANA Cloud, the plugin simplifies the anonymization process, ensuring smooth implementation for robust data privacy and anonymity. Beyond mere data protection, it offers seamless connectivity to LLMs through SAP Generative AI Hub, abstracting access to diverse LLM capabilities, as we'll explore in greater detail. By streamlining access to these functionalities, developers can redirect their focus towards refining the business logic of their applications.

Moreover, the plugin empowers developers to effortlessly leverage the vector engine capabilities provided by SAP HANA Cloud, facilitating the development of RAG solutions with unparalleled ease—an aspect we'll delve into further in the subsequent use case.

Built on the CDS plugin architecture, the plugin integrates into your CAP application through the SAP-endorsed approach. The CAP LLM Plugin currently offers five pivotal features, addressing the pressing needs of CAP developers who want to use AI capabilities from SAP offerings and our partners.

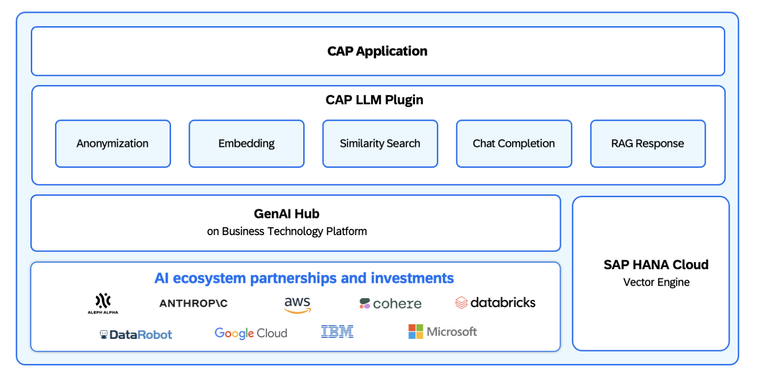

The diagram below gives an overview of how the plugin fits into the development landscape of a CAP application.

CAP LLM Plugin Stack

Prime features of the plugin

Anonymization

Access Layer for LLMs

The plugin provides quick access to LLMs, both embedding models and chat models, available through SAP Generative AI Hub on SAP Business Technology Platform, with only minimal configuration. Once a model is deployed on SAP Generative AI Hub, you can call it from your code through the plugin:

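```javascript
const cds = require("@sap/cds");

// Inside an async handler of your CAP service.
// payload is the chat completion request, e.g. { messages, temperature, max_tokens }.
const vectorplugin = await cds.connect.to("cap-llm-plugin");

const determinationResponse = await vectorplugin.getChatCompletion(payload);
```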

In addition to the getChatCompletion method shown above, the plugin provides the getEmbedding method as a convenient shortcut for generating embeddings from any embedding model deployed on SAP Generative AI Hub.

Vector Engine Support

Similarity Search

Currently, the plugin supports the COSINE_SIMILARITY and L2DISTANCE algorithms, and support for further algorithms is planned as they become available.

```javascript
/**
 * Perform Similarity Search.
 * @param {string} tableName - The full name of the SAP HANA Cloud table which contains the vector embeddings.
 * @param {string} embeddingColumnName - The full name of the SAP HANA Cloud table column which contains the embeddings.
 * @param {string} contentColumn - The full name of the SAP HANA Cloud table column which contains the page content.
 * @param {number[]} embedding - The input query embedding for similarity search.
 * @param {string} algoName - The algorithm of similarity search. Currently only COSINE_SIMILARITY and L2DISTANCE are accepted.
 * @param {number} topK - The number of entries you want to return.
 * @returns {object} The highest match entries from DB.
 */
async similaritySearch(tableName, embeddingColumnName, contentColumn, embedding, algoName, topK)
```

Calling the method from a CAP service handler:

```javascript
// tableName, embeddingColumn and contentColumn identify your vector table;
// userQuesEmbeddings is the embedding of the user's question (e.g. from getEmbedding()).
const similaritySearchResults = await vectorplugin.similaritySearch(
  tableName,
  embeddingColumn,
  contentColumn,
  userQuesEmbeddings,
  'COSINE_SIMILARITY',
  20 // topK: return the 20 closest entries
);
```

The snippets above show the method signature and a typical call for retrieving similarity search results.
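In the plugin, the similarity computation runs inside SAP HANA Cloud. To make the two algorithm names concrete, the sketch below computes the same measures in memory: cosine similarity is a·b / (‖a‖‖b‖), where a higher value means more similar, and L2 distance is √Σ(aᵢ − bᵢ)², where a lower value means more similar. The function names and the `{ content, embedding }` row shape are hypothetical and not part of the plugin's API; this illustrates the math only, not how the plugin or SAP HANA Cloud implements it.

```javascript
// Illustrative in-memory similarity search; NOT the plugin's implementation.
// Rows use a hypothetical shape: { content: string, embedding: number[] }.

function cosineSimilarity(a, b) {
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

function l2Distance(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return Math.sqrt(sum);
}

// Returns the topK rows closest to queryEmbedding, each with its score.
function topKMatches(rows, queryEmbedding, algoName, topK) {
  if (algoName !== "COSINE_SIMILARITY" && algoName !== "L2DISTANCE") {
    throw new Error(`Unsupported algorithm: ${algoName}`);
  }
  const useCosine = algoName === "COSINE_SIMILARITY";
  const scored = rows.map((row) => ({
    content: row.content,
    score: useCosine
      ? cosineSimilarity(row.embedding, queryEmbedding)
      : l2Distance(row.embedding, queryEmbedding),
  }));
  // Cosine: larger is closer, so sort descending. L2: smaller is closer, so sort ascending.
  scored.sort((x, y) => (useCosine ? y.score - x.score : x.score - y.score));
  return scored.slice(0, topK);
}

// Made-up two-dimensional sample rows.
const policyRows = [
  { content: "Annual leave: 25 days", embedding: [1, 0] },
  { content: "Sick leave policy", embedding: [0, 1] },
  { content: "Carry-over rules", embedding: [0.7, 0.7] },
];
console.log(topKMatches(policyRows, [1, 0.1], "COSINE_SIMILARITY", 2).map((m) => m.content));
```

With these sample rows, both algorithms rank "Annual leave: 25 days" first and "Carry-over rules" second; only the sort direction differs, because one measure is a similarity and the other a distance.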

Support for RAG Architecture

In a typical scenario, the developer of a RAG-based application configures the required models, embedding and chat models alike, on SAP Generative AI Hub to gain access to them. The knowledge base, typically a set of documents, is split into text chunks, which an embedding model converts into vector embeddings. These embeddings are stored in SAP HANA Cloud using a schema defined as a CAP entity. For the chat model to answer accurately and with fewer hallucinations, it needs access to the relevant text chunks stored in SAP HANA Cloud. Retrieving them typically involves creating an embedding for the query, running a similarity search, and calling the chat model.

The CAP LLM Plugin streamlines all of these tasks, substantially reducing the development overhead.
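The post leaves the chunking strategy open. As a minimal sketch, assuming plain fixed-size character chunks with overlap (a common baseline, not necessarily what the demo uses), the splitting step could look like this; `splitIntoChunks` and its parameters are hypothetical names.

```javascript
// Hypothetical helper: splits text into chunks of at most chunkSize characters.
// Consecutive chunks share `overlap` characters, so text near a boundary
// appears in two neighbouring chunks.
function splitIntoChunks(text, chunkSize, overlap) {
  if (chunkSize <= 0) throw new Error("chunkSize must be positive");
  if (overlap < 0 || overlap >= chunkSize) {
    throw new Error("overlap must be in [0, chunkSize)");
  }
  const chunks = [];
  const step = chunkSize - overlap;
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    if (start + chunkSize >= text.length) break; // this chunk already reaches the end
  }
  return chunks;
}

console.log(splitIntoChunks("Employees receive 25 days of annual leave per year.", 20, 5));
```

In practice, splitting on sentence or paragraph boundaries often gives more coherent chunks; the character-based version is only the simplest variant to reason about.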

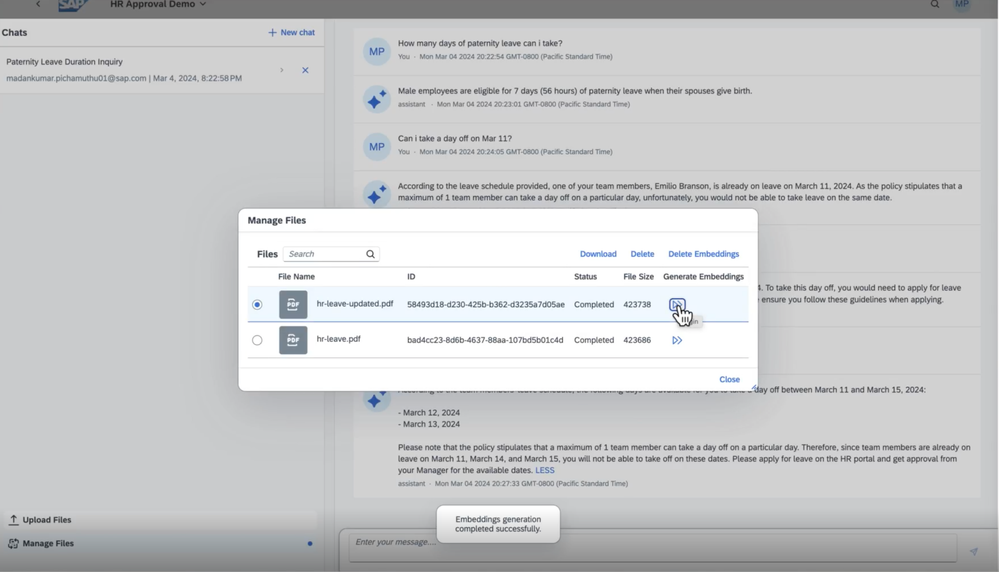

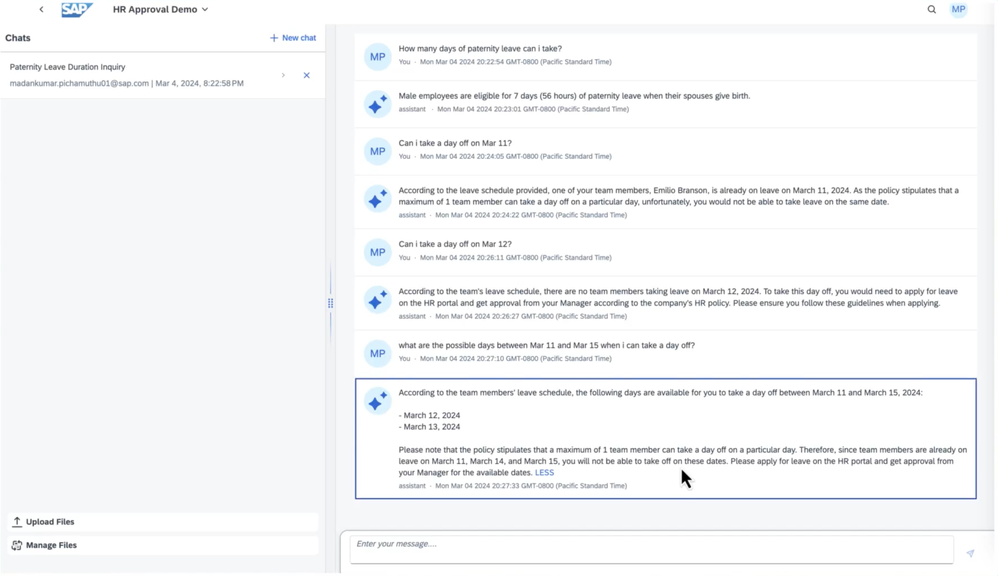

Plugin Demo – Leave Policy Compliance Assistant

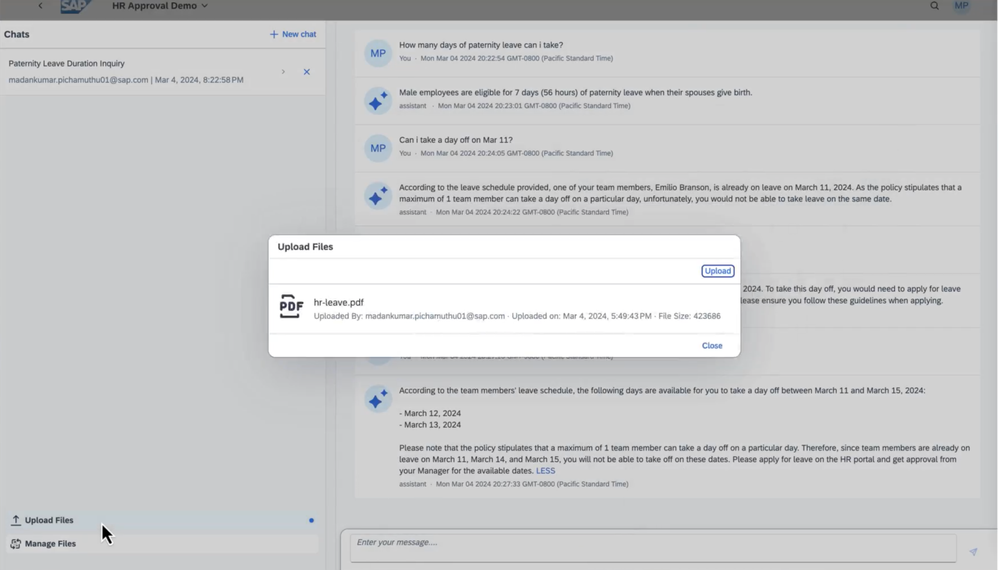

Here is an illustrative example showcasing the practical implementation of an end-to-end RAG scenario utilizing the CAP LLM Plugin: the Leave Policy Compliance Verification Assistant.

Business Scenario

Effectively implementing leave policies requires a multi-faceted approach: validating leave requests against existing ones in the SAP SuccessFactors system, verifying requests against unstructured leave policy documents, and using the LLM to answer user queries comprehensively.

By integrating the CAP LLM Plugin into this process, developers can streamline and automate various tasks, including generating embeddings for user queries, conducting similarity searches against existing policies, appending relevant policy excerpts to user prompts, and ultimately leveraging the LLM to provide tailored assistance to users regarding leave policy compliance.

Through this integration, the Leave Policy Compliance Verification Assistant enhances operational efficiency and helps ensure adherence to company policies and regulatory requirements, making leave management smoother and more compliant for employees and administrators alike.
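Validating a leave request against existing leaves boils down to an interval-overlap check. In the demo, this comparison appears to be left to the LLM, which receives the team's leave data in its prompt; a deterministic pre-check such as the following sketch could complement it. The `{ employee, from, to }` record shape and the `overlappingLeaves` helper are hypothetical, and dates are assumed to be inclusive ISO date strings.

```javascript
// Hypothetical record shape: { employee: string, from: "YYYY-MM-DD", to: "YYYY-MM-DD" },
// with both dates inclusive.

// Returns the existing leaves that overlap the requested period.
function overlappingLeaves(request, existingLeaves) {
  const reqFrom = Date.parse(request.from);
  const reqTo = Date.parse(request.to);
  if (Number.isNaN(reqFrom) || Number.isNaN(reqTo) || reqFrom > reqTo) {
    throw new Error("Invalid request period");
  }
  return existingLeaves.filter((leave) => {
    const from = Date.parse(leave.from);
    const to = Date.parse(leave.to);
    // Two inclusive intervals overlap when each one starts no later than the other ends.
    return from <= reqTo && reqFrom <= to;
  });
}

// Made-up sample data.
const teamLeaves = [
  { employee: "Ana", from: "2024-05-01", to: "2024-05-06" },
  { employee: "Ben", from: "2024-05-11", to: "2024-05-15" },
  { employee: "Chen", from: "2024-05-08", to: "2024-05-09" },
];
const clashes = overlappingLeaves({ from: "2024-05-06", to: "2024-05-10" }, teamLeaves);
console.log(clashes.map((l) => l.employee));
```

The resulting list of clashing leaves could then be included in the prompt, so that the LLM only has to apply the policy text (for example, a limit on simultaneous absences) rather than compare dates itself.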

Let us explore how the CAP LLM plugin simplifies the development of the CAP application for this scenario.

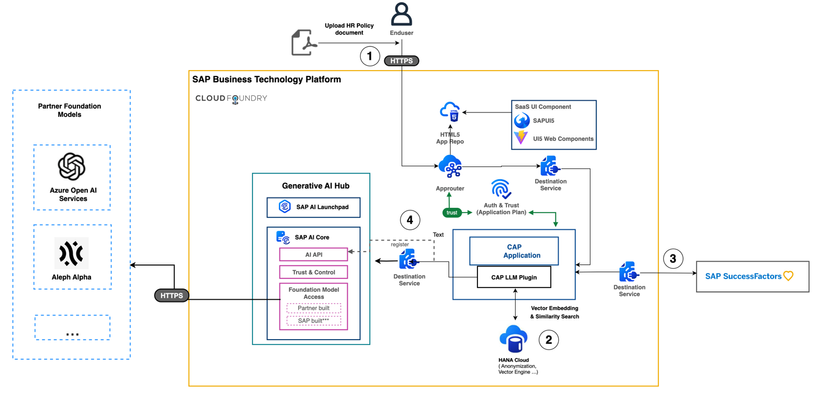

Architecture Diagram

Implementation Steps

Step 1: Leave policy document upload:

The manager uploads the team-specific and generic leave policy documents from an SAPUI5 user interface.

Step 2: Document chunking, embedding generation and storage:

The documents are split into text chunks based on the user requirements. The embeddings for the text chunks are generated seamlessly using a single ‘getEmbedding()’ method of the CAP LLM Plugin as follows:

```javascript
const embedding = await vectorplugin.getEmbedding(chunk.pageContent);
```

This abstracts away most of the boilerplate code required to generate embeddings, such as connecting to the embedding model via SAP Generative AI Hub and passing in the configuration needed to invoke the model. The embeddings are then stored in SAP HANA Cloud.
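Embedding a whole document simply repeats that call for every chunk. The sketch below assumes chunks shaped like `{ pageContent }` (as in the snippet above) and takes the embedding function as a parameter so it can run against a stub; in the CAP service you would pass `(text) => vectorplugin.getEmbedding(text)`. The `embedChunks` name and the `{ text, embedding }` row shape are hypothetical. The resulting rows could then be written to your CAP entity, for example with CAP's `INSERT.into(...).entries(...)`, possibly after converting the arrays to the format your HANA vector column expects.

```javascript
// Embeds each chunk in turn and returns rows ready for storage.
// getEmbedding is injected: in a CAP service pass (text) => vectorplugin.getEmbedding(text).
// The { text, embedding } row shape is hypothetical; match it to your CAP entity.
async function embedChunks(chunks, getEmbedding) {
  const rows = [];
  for (const chunk of chunks) {
    // One call at a time keeps the load on the embedding deployment predictable.
    const embedding = await getEmbedding(chunk.pageContent);
    rows.push({ text: chunk.pageContent, embedding });
  }
  return rows;
}

// Usage with a stub that returns the text length as a one-dimensional "embedding".
embedChunks(
  [{ pageContent: "Annual leave: 25 days" }, { pageContent: "Sick leave policy" }],
  async (text) => [text.length]
).then((rows) => console.log(rows));
```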

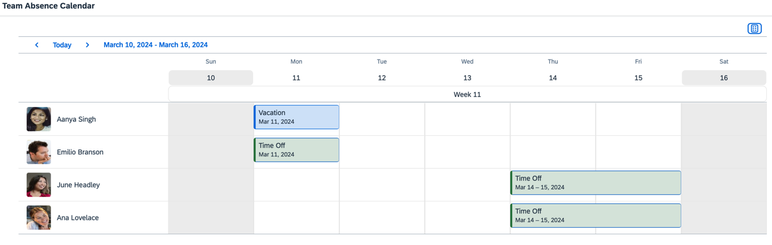

Step 3: Retrieve the existing leaves of team members from the SAP SuccessFactors API server via the destination service.

A sample team leave calendar in SAP SuccessFactors looks as follows:

Step 4: RAG retrieval process based on user query:

Option 1: The RAG retrieval process can be implemented with simple calls as follows:

- Retrieve the similarity search results from the vector engine of SAP HANA Cloud with ease using the plugin method as follows:

```javascript
const similaritySearchResults = await vectorplugin.similaritySearch(
  tableName,
  embeddingColumn,
  contentColumn,
  userQuesEmbeddings,
  'COSINE_SIMILARITY',
  20
);
```

- With the relevant leave policy retrieved from the vector engine and the team’s leave data from the SAP SuccessFactors system, use the plugin’s chat completion method to generate the appropriate response:

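```javascript
// prompts: chat messages built from the retrieved policy chunks,
// the team's leave data and the user's question.
const chatCompletionReqPayload = {
  "messages": prompts,
  "temperature": 0.1,
  "max_tokens": 512,
};

const chatCompletionResp = await vectorplugin.getChatCompletion(chatCompletionReqPayload);
```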
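The snippet does not show how `prompts` is built. A minimal sketch, assuming the OpenAI-style chat format of `{ role, content }` messages and assuming the similarity-search results have already been mapped to their chunk text (the post does not document the result shape). `buildLeavePrompts`, the leave record fields and the sample values are hypothetical.

```javascript
// Hypothetical helper: builds the `prompts` array (OpenAI-style chat messages)
// from retrieved policy text, the team's leaves and the user's question.
function buildLeavePrompts(userQuestion, policyChunks, teamLeaves) {
  const policyText = policyChunks.map((text, i) => `[${i + 1}] ${text}`).join("\n");
  const leaveText = teamLeaves.map((l) => `${l.employee}: ${l.from} to ${l.to}`).join("\n");
  return [
    {
      role: "system",
      content:
        "You are a leave policy assistant. Answer only from the policy excerpts and " +
        "team leave data provided. If they do not contain the answer, say so.",
    },
    {
      role: "user",
      content: `Policy excerpts:\n${policyText}\n\nTeam leaves:\n${leaveText}\n\nQuestion: ${userQuestion}`,
    },
  ];
}

// Made-up sample inputs.
const prompts = buildLeavePrompts(
  "Can I take leave from 6 to 10 May?",
  ["Annual leave: 25 days", "At most two team members may be on leave at the same time."],
  [{ employee: "Ana", from: "2024-05-01", to: "2024-05-06" }]
);
console.log(JSON.stringify(prompts, null, 2));
```

Numbering the excerpts makes it easy to ask the model to cite which policy passage it relied on; the system message restricts it to the supplied context, which is the point of the RAG step.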

Option 2: Alternatively, the entire RAG retrieval process can be streamlined into a single call to the plugin’s ‘getRagResponse()’ method:

```javascript
const chatRagResponse = await vectorplugin.getRagResponse(
  user_query,
  tableName,
  embeddingColumn,
  contentColumn,
  promptCategory[category],
  memoryContext.length > 0 ? memoryContext : undefined,
  30
);
```

This method performs the following steps behind the scenes:

- The user query is converted into vector embeddings for similarity search.

- Based on the user query, the relevant leave policies are retrieved from vector engine using similarity search.

- The prompt is generated combining the leave policy data from documents and the team members’ leave data from the SAP SuccessFactors system.

- The prompt is passed to the chat completion model, which is instructed to answer the user’s question based on the leave policy and the leave data.
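To make the sequence concrete, the sketch below wires those four steps together with each service passed in as a function, so it runs against stubs. It mirrors the described behaviour only and is not the plugin's implementation; `answerWithRag`, the `deps` object and its member names are hypothetical. The post does not detail how `getRagResponse()` receives the SuccessFactors leave data, so here it is fetched through an assumed `fetchTeamLeaves` dependency.

```javascript
// Sketch of the four steps described for getRagResponse(); NOT the plugin's code.
// deps is a hypothetical object of injected async functions.
async function answerWithRag(userQuery, deps, topK) {
  // 1. Convert the user query into an embedding.
  const queryEmbedding = await deps.embed(userQuery);
  // 2. Retrieve the most similar policy chunks from the vector store.
  const policyChunks = await deps.search(queryEmbedding, topK);
  // 3. Build the prompt from the policy chunks and the team's leave data.
  const teamLeaves = await deps.fetchTeamLeaves();
  const messages = [
    {
      role: "system",
      content:
        "Answer using only this context.\n\n" +
        `Leave policy:\n${policyChunks.join("\n")}\n\n` +
        `Team leaves:\n${teamLeaves.join("\n")}`,
    },
    { role: "user", content: userQuery },
  ];
  // 4. Pass the prompt to the chat model (parameter values borrowed from Option 1).
  return deps.chat({ messages, temperature: 0.1, max_tokens: 512 });
}

// Usage with stubs in place of the real services.
answerWithRag(
  "How many leave days do I have?",
  {
    embed: async (text) => [text.length],
    search: async () => ["Annual leave: 25 days"],
    fetchTeamLeaves: async () => ["Ana: 2024-05-01 to 2024-05-06"],
    chat: async (payload) => `stub answer for: ${payload.messages[1].content}`,
  },
  30
).then((answer) => console.log(answer));
```

Injecting the services keeps the orchestration testable without SAP AI Core or SAP HANA Cloud; in a real service each stub would be replaced by the corresponding plugin or destination call.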

By automating these steps of the RAG phase, this method significantly reduces the required development effort.

Note: If the leave policy document needs to be updated, the manager can upload a new document from the UI. Remember to create a new chat once the policy document is updated.

Useful Resources

For detailed documentation on the CAP LLM Plugin, refer to the guide.

To get started with this end-to-end RAG scenario, refer to the guide.

Summary

The genesis of the CAP LLM Plugin stems from a deep-seated commitment to empower CAP Developers by alleviating the challenges inherent in GenAI application development. Drawing from our own firsthand experiences, each obstacle encountered during our journey was meticulously transformed into features within the plugin, ensuring its alignment with the precise needs of developers.

The integration of this plugin within our team has yielded tangible results, markedly reducing development time while enhancing overall team efficiency. We are confident that the adoption of this plugin within your development endeavors will yield commensurate benefits, propelling your projects towards greater success and efficiency.

Acknowledgement

Great thanks to the team members for their contributions towards the plugin: Akash Amarendra, Feng Liang, MadanKumar Pichamuthu, Weikun Liu. Special thanks to Sivakumar N and Anirban Majumdar for their support and guidance.

If you have any questions, please contact us at paa@sap.com

SAP Managed Tags: SAP HANA Cloud, SAP HANA database, SAP AI Core
