- SAP Community

- Products and Technology

- Technology

- Technology Blogs by SAP

- Bring SAP Concur Data to SAP Datasphere (Part-2)

Technology Blogs by SAP

Learn how to extend and personalize SAP applications. Follow the SAP technology blog for insights into SAP BTP, ABAP, SAP Analytics Cloud, SAP HANA, and more.

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Product and Topic Expert

Options

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

10-28-2023

8:39 AM

Blog post series to understand how to bring SAP Concur Data to SAP Datasphere.

|

Welcome back! In my previous blog post (click here), we discussed how the SAP Concur solution centralizes all spent data for an organization to help in expense analysis.

For analyzing data, SAP Datasphere is a flexible, scalable, and cost-effective solution; with Integrated tools, data governance, and self-service analytics, you can easily cleanse, enrich, and visualize your data. It provides a large set of default connections to access data from a wide range of sources but still, we can extend connectivity beyond SAP Datasphere standard connectivity and cover additional data sources.

We have already seen how to fetch SAP Concur Expense data using integration flow in the previous article, In this blog post you will see how to push the SAP Concur Expense data to the SAP Datasphere for further analysis to build analytical applications.

Solution Environment

You should have SAP Concur cloud travel and expense system. you need to register an application with SAP Concur. Once you have registered an application, you will receive a clientId, clientSecret, and geolocation. you will be using this credential to obtain tokens for the application. To register your application you can follow the excellent blog by Tim Chapman Introduction to Web Services for Concur Expense – Authentication

You need to have SAP Business Technology Platform setup and ensure that you already provisioned SAP Integration Suite and have experienced capabilities offered by SAP Integration Suite like creating and deploying an integration flow and sound knowledge of integration packages, adapters, and flow steps. To set up the SAP Integration Suite you can follow the tutorial Get onboarded to the SAP Integration Suite trial account.

Also, the SAP BTP account is enabled with the SAP Datasphere instance please follow the excellent blog on How to create an SAP Datasphere service instance in the SAP BTP Platform.

Note: You have already covered some of the topics discussed in this blog post if you have read and understood the great article Walkthrough: Capturing Business Events in SAP Datasphere using SAP Integration Suite by willem.pardaens.

Create Database Schema and Table in SAP Datasphere space

Database users are technical users who can connect the underlying SAP HANA Cloud, SAP HANA database to third-party SQL clients and allow data to be received or sent out via JDBC/ODBC. In SAP Datasphere, an open SQL schema is always created when you create a database user. This open SQL schema grants the database user read privileges to the underlying database. Depending on your needs, different privileges can be set for different schemas.

After creating a space in the SAP Datasphere, you need this space to create a dedicated schema which will be used to store the Concur Expense Report data. To do so, create a database user to connect external tools to SAP Datasphere. Provide a Database User Name Suffix for your schema, and enable both Read and Write access. Once the database user is created, you can use its login information like Database User Name, Host Name, Port, and Password to open the Database Explorer and to connect with the cloud integration flow.

In Database Explorer, you can create a new table to store the Concur Expense Report data which will have an ID, Name, currency code, Country, Total, and so on with all necessary fields for analyzing data.

Add Cloud Integration IP address range to IP Allowlist

SAP Datasphere uses an IP allowlist concept where external systems have to be registered with their IP address before they can connect. In our case, SAP Integration Suite is the external system.

First, you need to find out where your cloud integration’s tenant is hosted. You can see that in your integration’s tenant URL.Now look up the range of potential egress IP addresses this tenant can use via the documentation on the SAP Help portal: Regions and API Endpoints Available for the Cloud Foundry Environment.

In the SAP Datasphere menu, click on System and Configuration. Open the IP Allowlist menu and select Trusted IPs. Now click on Add for each of the addresses to add them to your allowlist.

SAP Datasphere is now ready to store your data.

Create JDBC Data Source in the SAP Integration Suite tenant

To connect an integration flow to the SAP Datasphere data source, you need to provide the credentials hence the JDBC data source artifact is required. This artifact will be used to store the access data for the database (as defined and generated previously).

During the integration flow design, for the JDBC adapter, you need to point to the alias of the JDBC data source. No additional configurations are then required in the integration flow.

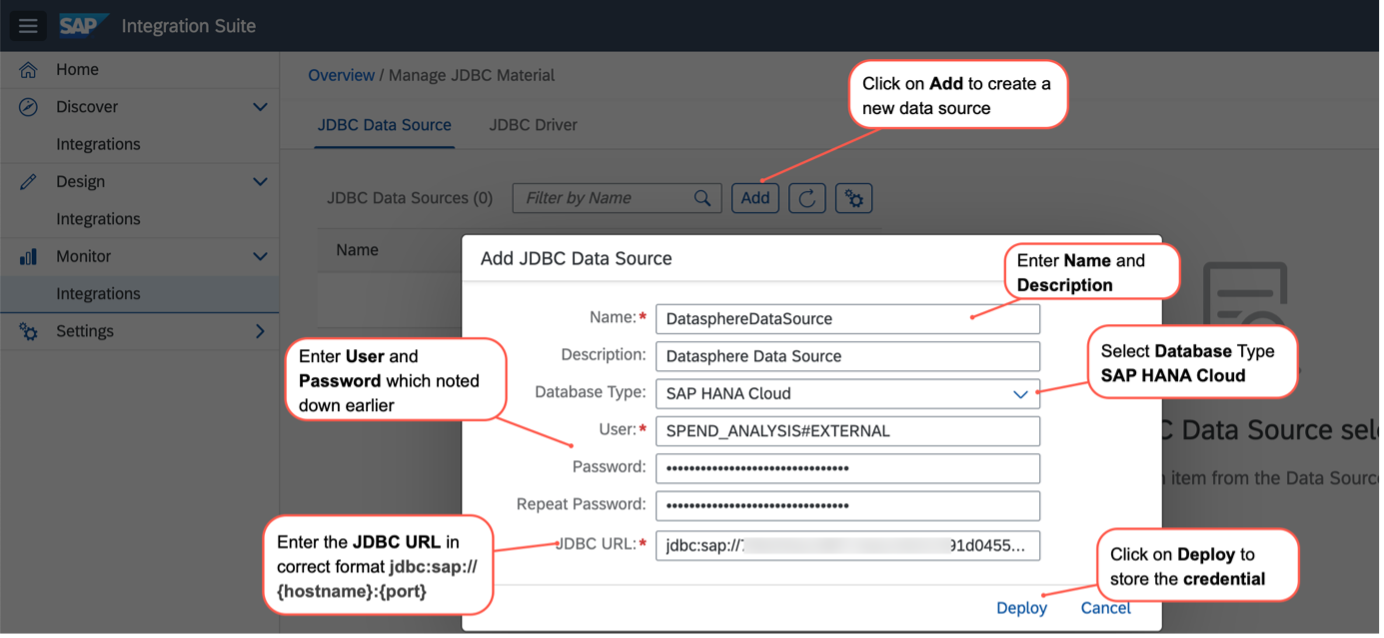

For this, In the SAP Integration Suite tenant, we need to navigate to Monitor > Integration, Then choose JDBC Material. Click Add to create a new data source, and provide the SAP Datasphere schema credentials noted down earlier.

Make sure to change the Database Type to SAP HANA Cloud, and use the correct format for the JDBC URL field: jdbc:sap://{hostname}:{port}. and Deploy to store this credential.

Update the existing integration flow

In my previous blog post, we have already used pre-packaged integration content which help us to get started with minimal integration efforts. Now you are going to extend the same integration package.

Go to your SAP Integration Suite tenant. navigate to Design > Integration and select the integration package that you have created. Edit the Consume SAP Concur API Integration Package by updating the Groovy Script Integration flow step. In the script editor, specify the script according to the requirements of your scenario.

For an overview of the classes and interfaces supported by the Script step, see SDK API. For more information on how to use the dedicated interfaces and methods for specific use cases, refer to Script Use Cases.

This is the Groovy script you can use to insert the data in the "SPEND_ANALYSIS#EXTERNAL"."REPORTS" table which we have created in the SAP Dataspace Database.

import com.sap.gateway.ip.core.customdev.util.Message;

import java.util.HashMap;

import java.util.Arrays;

def Message processData(Message message) {

def body=message.getBody(java.lang.String) as String;

def xml=new XmlSlurper().parseText(body);

List paramList = new ArrayList();

xml.Items.Report.each{

paramList.add(Arrays.asList(it.ID.text(),it.Name.text(),it.CurrencyCode.text(),

it.Country.text(),it.ReceiptsReceived.text(),it.OwnerLoginID.text(),

it.OwnerName.text(),it.PaymentStatusName.text(),it.Total));

}

message.setHeader("CamelSqlParameters",paramList);

message.setBody("INSERT INTO SPEND_ANALYSIS#EXTERNAL.REPORTS(ID,NAME,CURRENCYCODE,COUNTRY,RECEIPTSRECEIVED,"+

"OWNERNAME,OWNERLOGINID,PAYMENTSTATUSNAME,TOTAL) VALUES(?,?,?,?,?,?,?,?,?)");

return message;

}

Deploy & Monitor Integration Flow

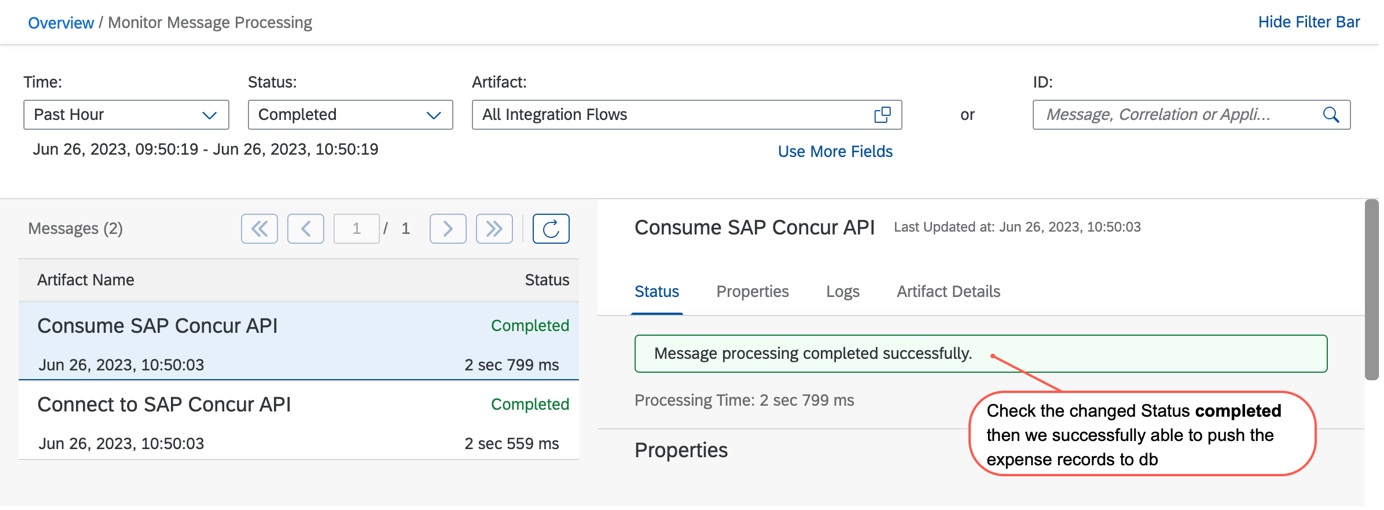

After you Save your integration flow and then select Deploy to deploy the integration flow to the SAP Integration Suite tenant. This will trigger the deployment of your integration flow. you can navigate to the Monitor-> Integrations tab to check the status.

Since the integration flow was designed as a Run immediate timer-based integration the flow will be executed as soon as it is deployed. Select the Completed Messages tile to view the successfully executed integration flows. In case of any failure, it will appear under Failed Messages. Check the status of the newly created integration flow from the Monitor Message Processing.

If the status changes to Completed then go back to the SAP Datasphere and open the Database Explorer. In the Explorer Search for the table and check the records.

Modeling using a Graphical view

The graphical view builder in SAP Datasphere makes it simple to develop data views. You may proceed logically without requiring to be familiar with SQL statements. In the graphical view builder, you have many resources to model your data, combine them from many sources, and assign business semantics that make your output easier to understand.

In your DataSphere tenant, In Data Builder -> New Graphical View your Concer Expense Report data under Sources. Now you can easily transform or drill down your Expense Report Data by adding filters, joins, and projections.

Summary

SAP Datasphere provides us the different approaches to connect with the source system. One of the ways we have used here is to create a database user to allow external tools to connect to the space and write data to Open SQL schemas associated with the space.

In this blog post series, you have seen the Integrating Data via Database Users/Open SQL Schemas approach to store SAP Concur’s Data in SAP Datasphere using SAP Integration Suite to use it in Data Builder to model and enrich the business expenses data.

Before this blog post series comes to an end, I would like to thank my team at SAP for their continuous support and express special gratitude to anbazhagan_uma, who supported me in validating and publishing this post series.

What’s next?

Well, that’s it from my side! I appreciate your patience and following this blog post series. We are very interested to hear about your own experiences and ideas on other SAP Datasphere use cases for SAP or non-SAP solutions.

Keep your anticipation high and stay curious!

Reference & Further Reading

About Concur

Expense Management

Travel and Expense Management

Developing Script and Script Collection

Integrating Data and Managing Spaces in SAP Datasphere

Integrating Data via Database Users/Open SQL Schemas

For more information and related blog posts on the topic page for SAP Datasphere.

If you have questions about SAP Datasphere you can submit them in the Q&A area for SAP Datasphere in the SAP Community.

- SAP Managed Tags:

- SAP Datasphere,

- SAP Integration Suite,

- SAP Concur,

- SAP Business Technology Platform

Labels:

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

Labels in this area

-

ABAP CDS Views - CDC (Change Data Capture)

2 -

AI

1 -

Analyze Workload Data

1 -

BTP

1 -

Business and IT Integration

2 -

Business application stu

1 -

Business Technology Platform

1 -

Business Trends

1,658 -

Business Trends

95 -

CAP

1 -

cf

1 -

Cloud Foundry

1 -

Confluent

1 -

Customer COE Basics and Fundamentals

1 -

Customer COE Latest and Greatest

3 -

Customer Data Browser app

1 -

Data Analysis Tool

1 -

data migration

1 -

data transfer

1 -

Datasphere

2 -

Event Information

1,400 -

Event Information

67 -

Expert

1 -

Expert Insights

177 -

Expert Insights

310 -

General

1 -

Google cloud

1 -

Google Next'24

1 -

GraphQL

1 -

Kafka

1 -

Life at SAP

780 -

Life at SAP

13 -

Migrate your Data App

1 -

MTA

1 -

Network Performance Analysis

1 -

NodeJS

1 -

PDF

1 -

POC

1 -

Product Updates

4,576 -

Product Updates

353 -

Replication Flow

1 -

REST API

1 -

RisewithSAP

1 -

SAP BTP

1 -

SAP BTP Cloud Foundry

1 -

SAP Cloud ALM

1 -

SAP Cloud Application Programming Model

1 -

SAP Datasphere

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Migration Cockpit

1 -

Technology Updates

6,873 -

Technology Updates

441 -

Workload Fluctuations

1

Related Content

- SAP Datasphere - Space, Data Integration, and Data Modeling Best Practices in Technology Blogs by SAP

- Deliver Real-World Results with SAP Business AI: Q4 2023 & Q1 2024 Release Highlights in Technology Blogs by SAP

- SAPinsider 2024: Are You Ready for a Challenge? in Technology Blogs by SAP

- SAP Inside Track Bangalore 2024: FEDML Reference Architecture for Hyperscalers and SAP Datasphere in Technology Blogs by SAP

- SAP Inside Track Bangalore 2024: Generative AI Extravaganza in Technology Blogs by SAP

Top kudoed authors

| User | Count |

|---|---|

| 19 | |

| 14 | |

| 12 | |

| 11 | |

| 10 | |

| 9 | |

| 8 | |

| 8 | |

| 8 | |

| 7 |