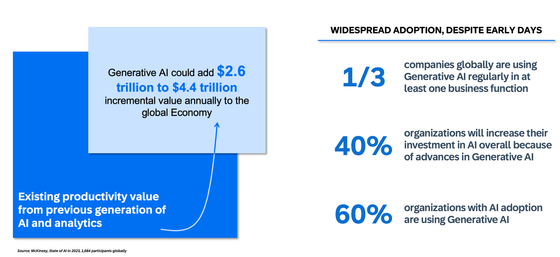

Generative AI adoption is accelerating across industries: a McKinsey report estimates that Generative AI could add between $2.6 trillion and $4.4 trillion in incremental value to the global economy annually. Companies around the world are increasingly using Generative AI, with about one-third of them using it regularly in at least one business function.

At SAP, we integrate Generative AI technology with industry-specific data and process knowledge to create innovative AI capabilities for our applications. To illustrate how Generative AI can benefit businesses, in this blog we'll walk you through a citizen reporting app for the public administrations industry.

Our use case involves a fictitious city, "Sagenai City", struggling with managing and tracking maintenance in public areas. The city wants to improve how it handles reported issues from citizens by analyzing social media posts and making informed decisions. A better perception of public administration by citizens is an anticipated outcome.

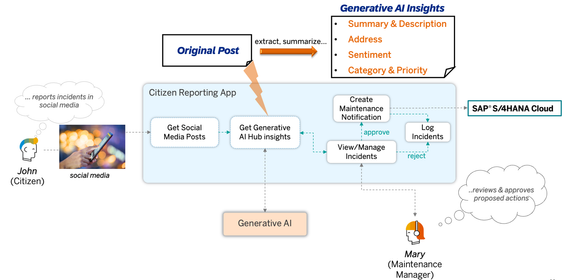

The goal of the citizen reporting app is to assist the Maintenance Manager by extracting insights from citizens' social media posts, classifying them, and creating maintenance notifications in the SAP S/4HANA Cloud tenant.

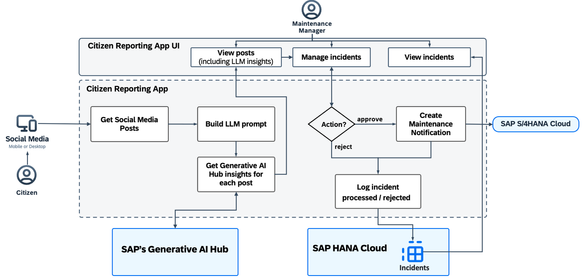

Let’s understand the Citizen Reporting App business flow:

- A citizen reports an incident through a post on the city's community page on Reddit (we used Reddit because it has a rich and free API, but any social media platform of your choice would work).

- The citizen reporting app receives the post and notifies the responsible persons from the public administration office.

- The post is then processed and analyzed by a Large Language Model through SAP’s Generative AI Hub to extract key points and derive insights such as summarizing the issue, identifying the issue type, its urgency level, determining the incident's location, and analyzing the sentiment behind the post.

- The Maintenance Manager reviews the incident details extracted by Generative AI and decides whether to approve or reject the incident. As a result, the manager saves time throughout the reporting process, thanks to the text analysis performed by Generative AI.

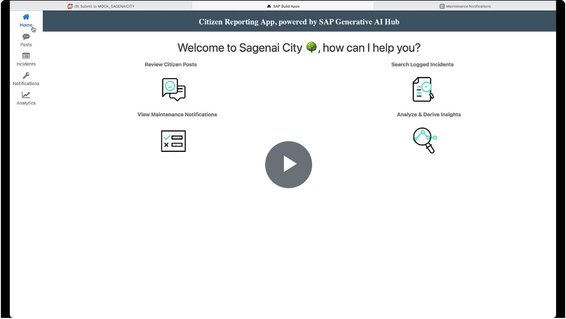

To see this in action, please check the following demo video of the Citizen Reporting App, which leverages SAP’s Generative AI Hub.

Before we go into details on how we implemented this proof of concept, let’s review some key concepts.

LLMs Overview

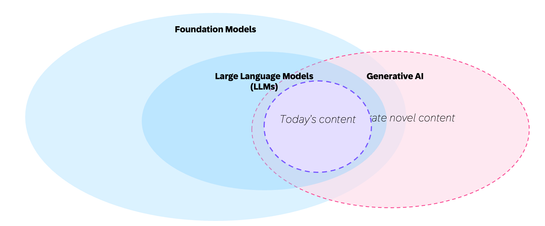

Large Language Models (LLMs) have been making waves in the artificial intelligence (AI) landscape. These foundation models trained on broad datasets have expanded their influence beyond natural language to domains such as videos, speech, tabular data, and protein sequences.

Understanding Large Language Models

Foundation models, a term coined by Stanford researchers, are self-supervised models trained on vast datasets. They can be easily adapted to a myriad of downstream tasks. Among these, Large Language Models (LLMs) focus on natural language in the form of text.

In some cases, LLMs also exhibit impressive generative capabilities, making them suitable for creating novel content. They are often referred to as generative AI models.

In this blog, we will focus mainly on the application of LLMs to Generative AI.

These models leverage transfer learning, a concept that has been used for decades in the image domain, on a much larger scale. The change of scale was made possible by a set of key factors, such as improved computing capabilities, the advent of the transformer architecture, and the availability of massive datasets. As a consequence, these large models started to show new emergent capabilities. One of them is in-context learning, which makes it possible to adapt the model to a given task without additional training, just by giving instructions in natural language. This can greatly reduce the time to value of implementing artificial intelligence for a wide set of tasks.

Also, LLMs are typically trained on the task of predicting the next word in a text. This approach requires the models to gain substantial knowledge about the world, making them a form of "lossy compression" of the information available on the internet.

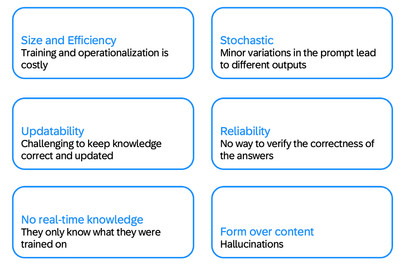

Limitations of Large Language Models

Despite their remarkable capabilities, LLMs come with some limitations. First of all, training these models is time-consuming and expensive. Furthermore, keeping the models' knowledge correct and up to date is a challenge. They are also stochastic and sensitive to wording, meaning that minor variations in the prompt can lead to different outputs. There is no built-in way to verify the correctness of the models' answers. Finally, they tend to prioritize form over content, so they can generate incorrect but plausible-sounding answers.

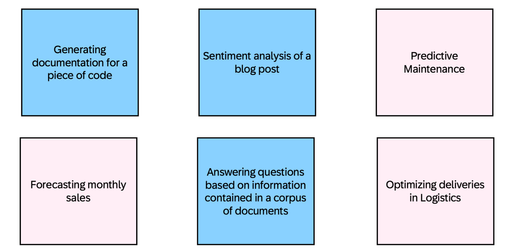

With the current state of the art, LLMs can help solve many use cases that require language understanding and language generation. They struggle, however, with logic and math, and with use cases that require the analysis of numerical, structured data. The accompanying image shows sample use cases, classified into those that suit LLMs well and those that do not.

Adapting LLMs to Desired Tasks

There are several strategies to adapt LLMs to the desired task and work around some of their limitations. The most widely used are listed below:

- Prompt engineering.

Prompt engineering is the process of designing and refining prompts or instructions given to a language model to elicit desired responses.

The quality of the prompt can greatly help in improving the accuracy of the model's responses, controlling its biases, or generating creative and coherent responses.

- Retrieval Augmented Generation (RAG).

The idea of RAG is to provide the model with a knowledge base (a document or a corpus of documents) and ask it to answer our prompts based on these documents.

- Fine-tuning.

Fine-tuning refers to the process of taking a pre-trained model and adapting it to perform a specific task or to work on a specific dataset. In machine learning, models are typically trained on large datasets to learn general patterns and features. Fine-tuning allows you to take advantage of this pre-trained knowledge and apply it to a more specific task or dataset.

The costs involved in fine-tuning can be considerable as they might include computational resources, such as GPU or cloud computing costs, as well as the time and effort required to collect and preprocess data, train the model, and evaluate its performance.
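To make the RAG idea more concrete, here is a minimal sketch in plain Python. It is not how the Citizen Reporting app works: the knowledge base, the function names, and the word-overlap ranking are all assumptions chosen so the example runs offline. A real setup would typically use embeddings and a vector store for retrieval, and would send the resulting prompt to an LLM.

```python
import re

# Hypothetical knowledge base: in a real setup these would be chunks of your own documents.
KNOWLEDGE_BASE = [
    "Street lighting faults are handled by the Public Lighting department.",
    "Potholes deeper than 5 cm are treated as high urgency road damage.",
    "Park benches and playgrounds are maintained by the Green Areas team.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Naive retrieval: rank documents by the number of words shared with the question."""
    question_tokens = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_rag_prompt(question: str, documents: list[str]) -> str:
    """Put the retrieved context in front of the question and instruct the model to stick to it."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        "Answer the question using only the context below. "
        "If the context is not sufficient, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_rag_prompt("Who fixes a broken street lighting pole?", KNOWLEDGE_BASE)
print(prompt)
```

The key design point is that the model is asked to answer from the supplied context rather than from its own training data, which helps with the outdated-knowledge limitation described earlier.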

Choosing the Right LLM

When choosing an LLM, it's important to consider factors like price, latency, and request limits. Newer and larger models are usually more capable and robust. Consider looking at model benchmarks and leaderboards, such as Chatbot Arena, to compare the performance of different models.

Evaluating LLMs

Before putting an LLM-based application into production, it is always best practice to test the performance of the LLM on your specific task. To do that, you can put together a sample of data points to test your model. Apply your LLM prompt in batch to the sample and then evaluate the accuracy of the LLM responses. Sometimes this can be challenging, either because you lack data points to use for testing, or because it is difficult to rate how good the model output is when a language-related task is involved. LLMs can be useful to overcome these challenges. For instance, you can ask an LLM to generate test data points for you. You can also use an LLM as a judge, to rate the quality of an LLM-generated text against the criteria that are important to you, for instance creativity or correctness.
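The batch evaluation described above can be sketched as follows. The labeled sample and the `keyword_classifier` function are hypothetical; the classifier is only a stand-in for a real LLM call, so that the example runs without any service.

```python
# Hypothetical labeled sample: (post text, expected issue type).
TEST_SAMPLE = [
    ("The street lamp on Main Street has been dark for a week.", "lighting"),
    ("Huge pothole near the school entrance, cars keep swerving.", "road"),
    ("Overflowing trash bins in the central park again.", "waste"),
    ("Traffic light stuck on red at the station crossing.", "lighting"),
]


def keyword_classifier(post: str) -> str:
    """Stand-in for an LLM call; replace with your prompt plus model invocation."""
    text = post.lower()
    if "lamp" in text or "light" in text:
        return "lighting"
    if "pothole" in text:
        return "road"
    return "other"


def evaluate(classify, sample):
    """Run the classifier over the sample and return (accuracy, list of failures)."""
    hits = 0
    failures = []
    for post, expected in sample:
        predicted = classify(post)
        if predicted == expected:
            hits += 1
        else:
            failures.append((post, expected, predicted))
    return hits / len(sample), failures


accuracy, failures = evaluate(keyword_classifier, TEST_SAMPLE)
print(f"accuracy: {accuracy:.2f}")
for post, expected, predicted in failures:
    print(f"expected {expected!r}, got {predicted!r}: {post}")
```

Keeping the failures alongside the score is often more useful than the score alone, since the misclassified posts show where the prompt needs refinement.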

Libraries / LangChain

There are libraries that bridge the gap between traditional software development and Generative AI, making it easier to implement generative AI technology in an application. LangChain is one of the best known, but there are other options.

LangChain is available for Python and JavaScript. It offers templates to build prompts effectively, parsers to parse the response output, chains to build sequences of LLM operations, agents, and model evaluation. LangChain can be used in combination with SAP tools for Generative AI; we will talk about this later in this blog.
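To illustrate the template, model, and parser sequence that such libraries package up, here is a plain-Python sketch of the concept. It deliberately does not use LangChain's API: `make_chain` and `fake_model` are hypothetical names, and the model is a stub that returns a canned answer.

```python
import json
from typing import Callable


def make_chain(template: str, model: Callable[[str], str], parser: Callable[[str], dict]):
    """Compose three steps: fill the prompt template, call the model, parse the output."""

    def run(**variables) -> dict:
        prompt = template.format(**variables)
        raw_output = model(prompt)
        return parser(raw_output)

    return run


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM call: always returns the same JSON answer."""
    return '{"sentiment": "negative"}'


chain = make_chain(
    template="Classify the sentiment of this post and answer in JSON: {post}",
    model=fake_model,
    parser=json.loads,
)

result = chain(post="The playground has been broken for months!")
print(result)
```

Because each step is an ordinary callable, the stub model can later be swapped for a real client without touching the template or the parser.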

Overview on SAP’s Generative AI Hub

Artificial Intelligence (AI) is revolutionizing every aspect of business, and at SAP, we are committed to harnessing this power to drive business transformation. Our focus is on Business AI, which means AI for every aspect of the business. We continuously strive to build the best technology and leverage the best tools on the market, including strategic partnerships with industry leaders.

SAP Business AI Strategy

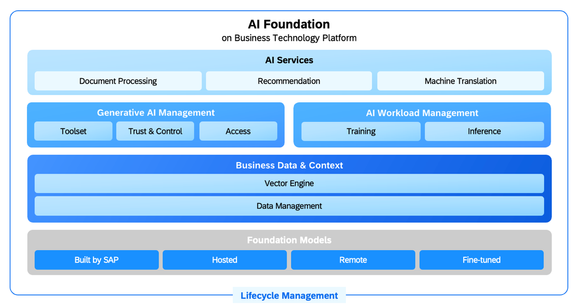

Our SAP Business AI strategy is embedded across various business domains including Finance, Supply Chain, HR, Procurement, Marketing and Commerce, Sales and Services, and IT. We offer a complete set of services that enable developers to create solutions with artificial intelligence. These services form the AI Foundation from which developers can pick whatever they need to build their AI- and generative AI-powered extensions and applications on SAP Business Technology Platform (SAP BTP).

SAP BTP AI Portfolio

Our AI portfolio includes SAP AI Services to help you automate and optimize corporate processes by adding intelligence to your applications using AI models pretrained on business-relevant data. These services include document information extraction, document classification, personalized recommendations, data attribute recommendation, and translation of software texts and documents.

As we have seen, Generative AI, a form of artificial intelligence, can produce text, images, and varied content based on its training data. At SAP, we integrate Generative AI with extensive industry-specific data and deep process knowledge to create innovative AI capabilities for the SAP applications you use every day. It’s built-in, relevant to your business, and responsible by design.

We also offer the Generative AI Hub, which provides instant access to a broad range of large language models (LLMs) from different providers. The hub provides tooling for prompt engineering, experimentation, and other capabilities to accelerate the development of your SAP BTP applications infused with generative AI, in a secure and trusted way.

Generative AI Hub

The Generative AI Hub provides access to a large variety of foundation models, from hosted or open-source models to proprietary and remote ones, and in the future also models built or fine-tuned by SAP.

The Generative AI Hub is a new SAP BTP capability offered across two different services: AI Core and AI Launchpad.

Deployment of LLMs in SAP AI Core

To consume the LLMs through SAP’s Generative AI Hub, we first need to deploy them in SAP AI Core (see Create a Deployment for a Generative AI Model). We leverage AI Core capabilities to provide a standard model inference platform on Kubernetes and to serve the LLMs, taking care of security aspects, multi-tenancy support, ...

Check Models and Scenarios in the Generative AI Hub for the up-to-date list of supported models.

Generative AI Hub Capabilities in SAP AI Launchpad

SAP AI Launchpad provides a graphical interface for Generative AI Hub and from there several tools are provided for the developers. These include a playground system where developers can perform prompt engineering and test prompts against different LLMs, and Prompt Management features that allow storing and versioning prompts.

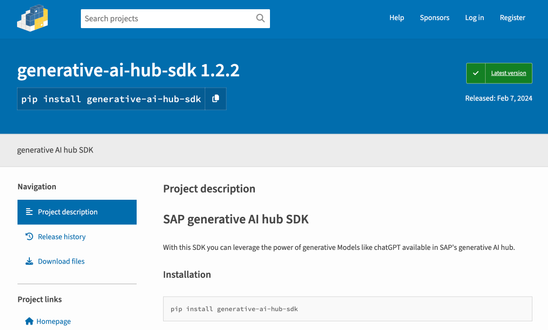

Generative AI Hub SDK

The Generative AI Hub SDK is a collection of tools that complements the existing ai-core-sdk to support LLM access. It boosts efficiency when working with various LLMs by streamlining the deployment of models and the querying of available models.

At the time of writing this blog, only the Python version is GA (Generally Available). Stay tuned for a JavaScript version!

Deep Dive into the Citizen Reporting App Use Case

In the previous sections, we've explored the concept of Generative AI and how SAP's Generative AI Hub can help you implement your own solutions. Now, we're going to delve deeper into our specific use case: the Citizen Reporting app.

Business Scenario

The Citizen Reporting app is designed to streamline the handling of incidents reported on social media. The app leverages the capabilities of the Generative AI Hub to gain insights from citizens' social media posts. This not only saves time for maintenance managers but also improves the public's perception of the administration.

The app allows maintenance managers to easily review and manage incidents, including AI insights and classification. Once the relevant incidents are supervised, the app creates Maintenance Notifications in the SAP S/4HANA Cloud tenant.

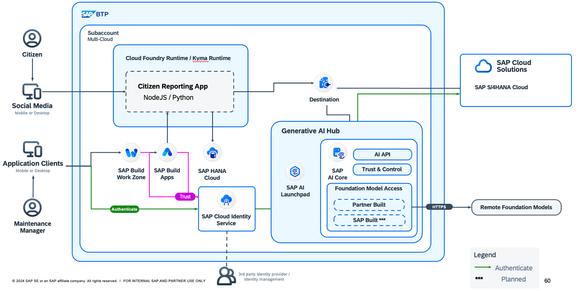

Solution Architecture

The Citizen Reporting app consists of a server-side application that can be run in SAP BTP Cloud Foundry or Kyma runtime, and a user interface developed with SAP Build Apps. The server-side application provides all the APIs required to get data and start the execution of the actions required by the maintenance manager.

The server-side application consumes APIs from different BTP Services, including the SAP AI Core service, which provides Generative AI APIs from different foundation models in a trusted and controlled way.

The Destination service is used to securely connect to the SAP AI Core APIs as well as to the SAP S/4HANA Cloud APIs, which automatically create Maintenance Notifications after the Maintenance Manager’s approval.

Implementation Steps

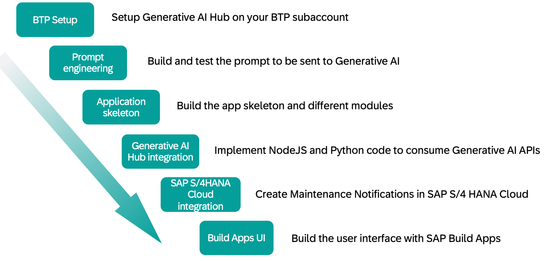

Let’s go a bit deeper into the implementation by looking at the different implementation steps:

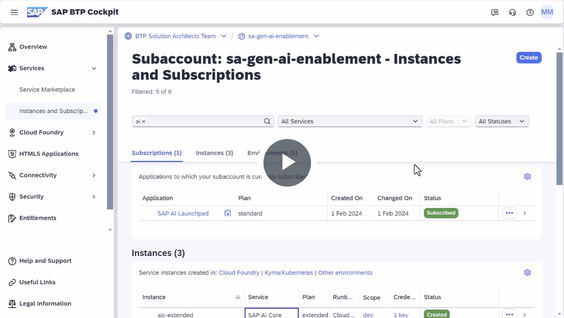

SAP BTP Setup

To work with the Generative AI Hub in SAP Business Technology Platform, the first requirement is an SAP BTP subaccount with an instance of SAP AI Core. The SAP AI Core instance provides a set of APIs to consume the Generative AI Hub from our implementation.

Once we have our instance of SAP AI Core, we can create an instance of SAP AI Launchpad to get a user interface for configuring and managing our generative AI deployments.

As we saw in the architecture diagram, the application also requires other SAP BTP services, such as the Cloud Foundry environment, SAP HANA Cloud, the SAP Authorization and Trust Management Service (XSUAA), and the Destination service. Instances of these services need to be created as well.

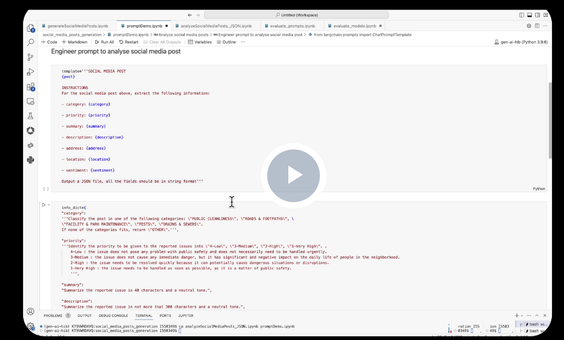

Prompt Engineering

Once your SAP BTP account is ready, we will build the prompt to be sent to the foundation model. This is a very important phase for getting accurate insights from SAP’s Generative AI Hub. The prompt engineering step is usually iterative and conducted with the help of tools like SAP AI Launchpad or a Jupyter Notebook, to test and refine the prompt.

Let’s review how we built our prompt to get insights from the Citizen’s social media posts.
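As a rough illustration of what such a prompt might look like, here is a sketch that asks for the insights listed in the business flow (summary, issue type, urgency, location, sentiment) as JSON, and validates the reply. This is not the prompt used in the app: the wording, the JSON keys, the allowed values, and the canned response are all assumptions.

```python
import json

# Assumed output schema; the real app may use different keys and values.
REQUIRED_KEYS = {"summary", "issue_type", "urgency", "location", "sentiment"}
ALLOWED_URGENCY = {"low", "medium", "high"}
ALLOWED_SENTIMENT = {"positive", "neutral", "negative"}


def build_extraction_prompt(post: str) -> str:
    """Build a prompt that asks the model for a fixed JSON structure."""
    return (
        "You are assisting a city maintenance office.\n"
        "Read the citizen post between the triple quotes and reply with a single JSON object "
        "with exactly these keys: summary, issue_type, urgency, location, sentiment.\n"
        "urgency must be one of: low, medium, high.\n"
        "sentiment must be one of: positive, neutral, negative.\n"
        "Use null for location if the post does not mention one.\n\n"
        f'"""{post}"""'
    )


def parse_insights(raw_response: str) -> dict:
    """Parse the model reply and reject anything that does not match the schema."""
    data = json.loads(raw_response)
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"Missing keys: {sorted(missing)}")
    if data["urgency"] not in ALLOWED_URGENCY:
        raise ValueError(f"Unexpected urgency: {data['urgency']!r}")
    if data["sentiment"] not in ALLOWED_SENTIMENT:
        raise ValueError(f"Unexpected sentiment: {data['sentiment']!r}")
    return data


post = "The street lamp in front of 12 Oak Avenue has been off for two weeks. It is really dark at night!"
print(build_extraction_prompt(post))

# Canned reply standing in for the model output, so the sketch runs offline.
canned_reply = json.dumps({
    "summary": "Street lamp not working for two weeks",
    "issue_type": "lighting",
    "urgency": "medium",
    "location": "12 Oak Avenue",
    "sentiment": "negative",
})
insights = parse_insights(canned_reply)
print(insights["issue_type"], insights["urgency"])
```

Validating the reply matters because, as noted in the limitations section, the model can return plausible but malformed or unexpected output.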

Application Skeleton

The application flow begins with citizens reporting incidents on social media. The server-side application reads these posts and sends their content, along with a prompt, to SAP's Generative AI Hub. The Generative AI Hub generates insights from each post, which are then presented to the Maintenance Manager.

The manager can choose to approve or reject each incident. Approved incidents result in a maintenance notification being created in SAP S/4HANA Cloud, while all incidents, approved or rejected, are logged in the SAP HANA Cloud database.
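The approve/reject logic described above can be sketched as follows. The class and field names are hypothetical, and two in-memory lists stand in for the SAP HANA Cloud database and the SAP S/4HANA Cloud tenant; the real application uses the services described in the architecture.

```python
from dataclasses import dataclass, field


@dataclass
class Incident:
    post_id: str
    summary: str
    status: str = "new"


@dataclass
class ReviewService:
    # In-memory stand-ins for the database log and the S/4HANA notifications.
    incident_log: list = field(default_factory=list)
    notifications: list = field(default_factory=list)

    def review(self, incident: Incident, approved: bool) -> Incident:
        """Record the manager's decision; only approved incidents create a notification."""
        incident.status = "approved" if approved else "rejected"
        if approved:
            self.notifications.append(
                {"post_id": incident.post_id, "text": incident.summary}
            )
        # Every incident is logged, whether it was approved or rejected.
        self.incident_log.append(incident)
        return incident


service = ReviewService()
service.review(Incident("p1", "Street lamp not working"), approved=True)
service.review(Incident("p2", "Complaint without a concrete issue"), approved=False)

print(len(service.notifications), "notification(s),", len(service.incident_log), "logged incident(s)")
```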

We have implemented this application by building three different modules:

- Social Media integration

- Generative AI Hub integration

- SAP S/4HANA Cloud integration.

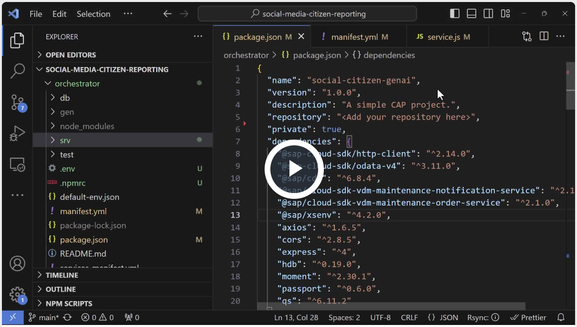

The full source code of our application is available in the SAP-samples/btp-generative-ai-hub-use-cases GitHub repository, which is part of SAP-samples.

Consuming Generative AI Hub in JavaScript

To implement the server side of our social media citizen reporting app in JavaScript, we have leveraged the SAP Cloud Application Programming Model (CAP).

The JavaScript implementation shown in this demo calls the SAP AI Core Generative AI Hub APIs directly through an HTTP POST, showing how to consume the raw Generative AI Hub APIs. Please check the Generative AI Reference Architecture, which includes a GitHub repository, for other implementation options in TypeScript leveraging LangChain.
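As a language-neutral illustration of what such a raw HTTP POST could look like, the sketch below builds the request in Python without sending it. It is not the code from the repository. The URL path, the `api-version` query parameter, the `AI-Resource-Group` header, and the OpenAI-style payload are assumptions about the inference endpoint shape; check the SAP AI Core documentation for the model you deploy. All argument values are placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url, deployment_id, resource_group, access_token, prompt,
                       api_version="2023-05-15"):
    """Build (but do not send) a POST request to an assumed chat completion endpoint."""
    # Assumed URL shape for a deployed model; verify against the official documentation.
    url = (
        f"{base_url}/v2/inference/deployments/{deployment_id}"
        f"/chat/completions?api-version={api_version}"
    )
    # Assumed OpenAI-style chat payload.
    body = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 500,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",  # OAuth token from the service key
            "AI-Resource-Group": resource_group,         # assumed header name
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_chat_request(
    base_url="https://api.example.invalid",  # placeholder, not a real endpoint
    deployment_id="d123",                    # placeholder deployment ID
    resource_group="default",
    access_token="TOKEN",                    # placeholder token
    prompt="Summarize this post: ...",
)
print(request.get_method(), request.full_url)
# Sending would be: urllib.request.urlopen(request), given a real endpoint and token.
```

In the application itself, the Destination service holds the endpoint and credentials, so they do not need to be hard-coded as in this sketch.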

Consuming Generative AI Hub in Python

To consume the Generative AI Hub in Python, we leverage the Generative AI Hub SDK, which simplifies the implementation of the calls to the Generative AI Hub APIs.

SAP S/4HANA Cloud Integration

Once the Maintenance Manager approves an incident, a maintenance notification is created in the SAP S/4HANA Cloud tenant. We used the SAP Cloud SDK Maintenance Notification Service module to implement this functionality. The user interface calls a function to create the maintenance notification, providing all the required information from the citizen post and the insights generated by the Generative AI Hub.
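The mapping from AI insights to a notification can be sketched as below. This is only an illustration of the idea: the payload field names, the priority codes, and the 40-character short-text limit are hypothetical and do not reflect the actual schema of the Maintenance Notification API or the SAP Cloud SDK module.

```python
# Hypothetical mapping from the AI-derived urgency to a priority code.
URGENCY_TO_PRIORITY = {"high": "1", "medium": "2", "low": "3"}


def build_notification_payload(post_url: str, insights: dict, max_text_length: int = 40) -> dict:
    """Turn the post reference and the AI insights into an illustrative notification payload."""
    summary = insights["summary"]
    # Truncate the summary to the assumed short-text limit.
    if len(summary) <= max_text_length:
        short_text = summary
    else:
        short_text = summary[: max_text_length - 3] + "..."
    return {
        # Field names are illustrative, not the real API's property names.
        "short_text": short_text,
        "long_text": (
            f"{summary}\n"
            f"Location: {insights.get('location') or 'unknown'}\n"
            f"Source: {post_url}"
        ),
        "priority": URGENCY_TO_PRIORITY.get(insights["urgency"], "3"),
    }


payload = build_notification_payload(
    post_url="https://example.invalid/post/p1",  # placeholder URL
    insights={
        "summary": "Street lamp in front of 12 Oak Avenue not working for two weeks",
        "urgency": "high",
        "location": "12 Oak Avenue",
    },
)
print(payload["short_text"])
print(payload["priority"])
```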

User Interface Implementation

The user interface was implemented using SAP Build Apps, a low-code, no-code tool that enabled us to implement our proof of concept quickly, using ready-to-consume UI components. The user interface connects to the backend via OData APIs to retrieve all the details required by the Maintenance Manager.

All the source code of this application is available in our GitHub repository for further exploration. It includes both the JavaScript and the Python versions of the backend application, among other resources.

Explore further resources on generative AI at SAP

Source code of our prototype

Generative AI at SAP

- OpenSAP Course | Generative AI with SAP

- SAP Community | Artificial Intelligence and Machine Learning at SAP

- Generative AI Hub in SAP AI Core

- Generative AI Hub in SAP AI Launchpad

- Generative AI Roadmap

- Discovery Center | SAP AI Core and Generative AI Hub

- Data Processing Agreement

Implementation Samples

- Social Media Citizen Reporting App – GitHub repository

- Generative AI Reference Architecture

- Generative AI Hub Tutorials

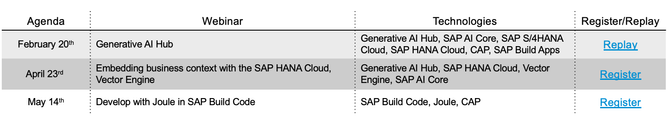

More sessions on this series

Please check the central blog Augment Your SAP BTP Use Cases With AI Foundation on SAP BTP to get all the details on the sessions that are part of this series, register for the upcoming sessions, and earn a knowledge badge by attending and passing the associated web assessment.

Conclusion and Key Takeaways

Large Language Models have ushered in a new era in the field of artificial intelligence. While they come with their limitations, their ability to generate novel content and adapt to a wide range of tasks makes them a powerful tool. By understanding these models and how to adapt, choose, and evaluate them, we can leverage their capabilities in various SAP environments and beyond.

SAP’s Generative AI Hub offers a streamlined toolset for Generative AI solution development, instant access to top-rated foundation models from multiple providers, and an elevated level of trust and control.

We hope this blog has inspired you to start designing and implementing your own use cases to unlock the power of generative AI in your solutions. Stay tuned for more blogs in this series as we continue to explore the exciting possibilities of generative AI with SAP!

