- state of testing in UI5: OPA5, UIVeri5 and wdi5


vobu


11-19-2020

10:47 PM


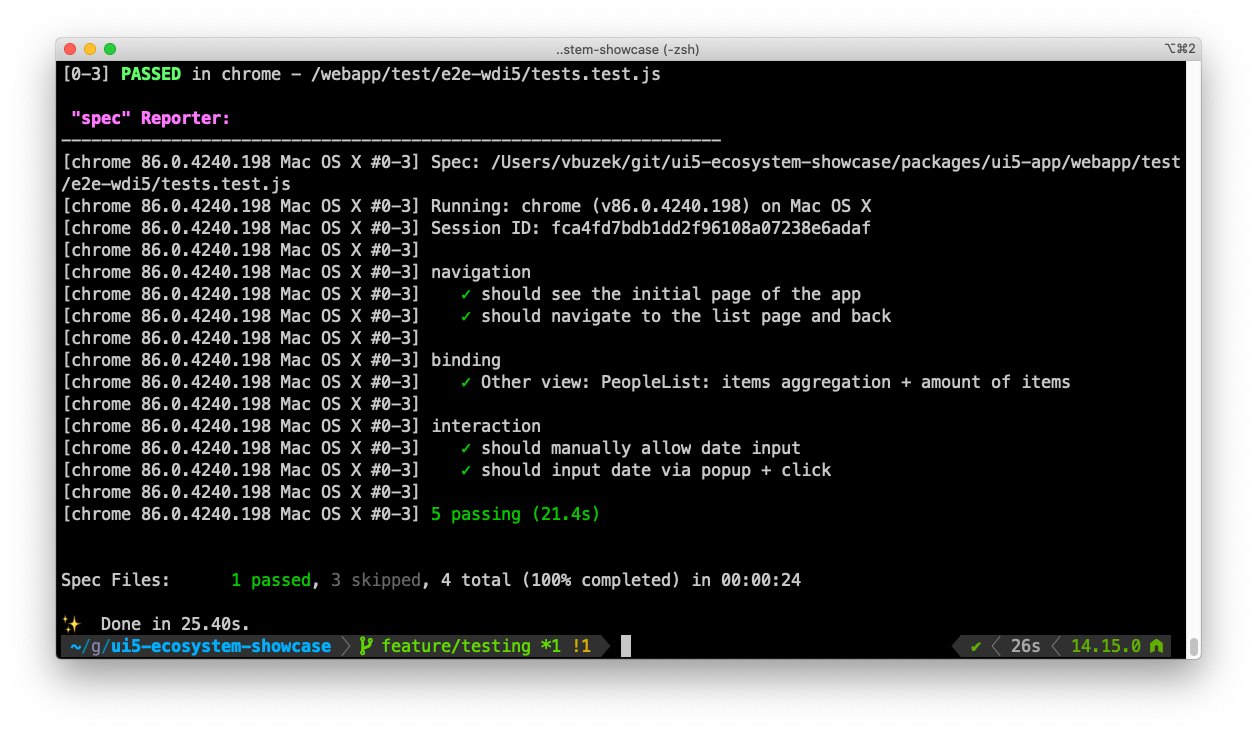


Testing as a means of securing investment into UI5 app development has become more prevalent. Yet it’s not as integral a part of every UI5 dev effort as I’d like to see. What’s keeping ya?

To further push the issue, let’s evaluate the most prominent frameworks for end-2-end testing in UI5.

Hint: all code samples and all setup are bolted into the UI5 ecosystem showcase, so you can glance at the code while you’re reading this.

- state of testing in UI5: OPA5, UIVeri5, wdi5

- what’s out there

- Installation + Setup

- Selectors, Locators + Usage

- Test syntax + Utilization

- Performance

- Summary

what’s out there

Looking at the test-pyramid, we have essentially three e2e-testing frameworks out there:

OPA5, UIVeri5 and wdi5.

test pyramid + wdi5

All three allow for testing user-facing functionality, operating a UI5 application "as a user could": interacting with UI elements.

UIVeri5 and wdi5 both remotely control a browser. That is, they have a runtime other than the UI5 application. Thus, both need a (web)server of sorts, running the UI5 app under test, that they can connect to.

OPA5 differs as it shares the same runtime with the UI5 application: it works adjacent to the UI5 app under test, not separated from it.

(QUnit is out of the picture for this article, as we’re concentrating on running user-facing tests, not purely functional ones.)

Additionally, wdi5 allows for testing hybrid applications on mobile devices. It can connect to a cordova-wrapped UI5 app on iOS, Android and Electron and run the same tests as with a browser-based app.

Let’s look at and compare all three from a usage standpoint. I’ll try to follow recommended/best practices as much as possible along the way.

(Disclaimer: I thought up wdi5, so I’m certainly biased; yet I’ll try and remain neutral in comparing the frameworks; deal with it 🙂 )

Installation + Setup

OPA5

OPA5 comes with UI5, no additional installation steps needed. Yet its setup is not intuitive, mixes in OPA5’s base QUnit, and needs several inclusion levels.

webapp/test/integration/opaTests.qunit.html:

    <!-- ... -->
    <script src="opaTests.qunit.js"></script>
    </head>
    <body>
    <div id="qunit"></div>
    <!-- ... -->

webapp/test/integration/opaTests.qunit.js:

    sap.ui.getCore().attachInit(function () {
        "use strict";
        sap.ui.require([
            // all test suites aggregated in here
            "test/Sample/test/integration/AllJourneys"
        ], function () {
            // OPA5's mama 🙂
            QUnit.start();
        });
    });

webapp/test/integration/AllJourneys.js:

    sap.ui.define([
        "./arrangements/Startup", // arrangements
        "./NavigationJourney", // actions + assertions
        "./BindingJourney", // actions + assertions
        "./InteractionJourney" // actions + assertions
    ], /* ... */

excerpt from webapp/test/integration/BindingJourney.js:

    sap.ui.define(["sap/ui/test/opaQunit", "./pages/Main", "./pages/Other"], function (opaTest) {
        "use strict";
        QUnit.module("Binding Journey");
        QUnit.module("Other view: PeopleList: items aggregation");
        opaTest("bound status", function (Given, When, Then) {
            Given.iStartMyApp();
            When.onTheAppPage.iPressTheNavButton();
            Then.onTheOtherView.iShouldSeeTheList().and.theListShouldBeBound();
        });
        // ...

Once `yarn dev` is started (in / of the UI5-ecosystem-showcase), the OPA5 tests can be run via http://localhost:1081/test/integration/opaTests.qunit.html

UIVeri5

UIVeri5 requires Node.js >= 8 and is installed via the standard npm command `npm install @ui5/uiveri5`.

It’s then set up via a config file and files containing test code. Make sure both are in the same file system folder.

minimal config file webapp/test/e2e/conf.js:

    exports.config = {
        profile: "integration",
        baseUrl: "http://localhost:1081/index.html"
    };

exemplary test file webapp/test/e2e/binding.spec.js:

    describe("binding", function () { // remember the suite name for the file name!
        it("Other view: PeopleList: items aggregation", function () {
            element(
                by.control({
                    viewName: "test.Sample.view.Main",
                    id: "NavButton"
                })
            ).click()
            /* ... */
        })
    })

A common pitfall is that UIVeri5 requires the test file’s name to be identical to the test suite name, e.g. if the suite name is `binding`, the test file must be saved as `binding.spec.js`.

Then UIVeri5 can be started via (in /):

- first launch the webserver: `yarn dev` (or `yarn start:ci`)
- `yarn test:uiveri5`
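The file-name rule for UIVeri5 mentioned above is easy to trip over, so a tiny pre-flight check might help. The following is only a sketch in plain Node.js: `expectedSpecFileName` and `matchesSuiteName` are hypothetical helper names (not part of UIVeri5), and the `.spec.js` suffix is simply taken from the example above.

```javascript
const path = require("path");

// hypothetical helper: derive the file name UIVeri5 expects for a suite
function expectedSpecFileName(suiteName) {
    return `${suiteName}.spec.js`;
}

// hypothetical helper: check an existing spec file path against its suite name
function matchesSuiteName(filePath, suiteName) {
    return path.basename(filePath) === expectedSpecFileName(suiteName);
}

console.log(matchesSuiteName("webapp/test/e2e/binding.spec.js", "binding")); // true
console.log(matchesSuiteName("webapp/test/e2e/bindings.spec.js", "binding")); // false
```

Such a check could run before the actual test command, so a renamed suite doesn't silently go unnoticed.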

wdi5

Similar to UIVeri5, wdi5 lives in Node.js land. Depending on whether you want to use the plain browser-based runtime (wdio-ui5-service) or the hybrid-app test-driver (wdi5), installation and setup differ.

As there’s no equivalent for testing hybrid apps with OPA5 or UIVeri5, we’ll concentrate on wdi5’s browser-only incarnation wdio-ui5-service. For brevity’s sake, we’ll refer to it as wdi5 in this post, even though that might confuse the two packages even more 🙂

With Node.js >= 12: `npm i @wdio/cli wdio-ui5-service`.

wdi5 is based on Webdriver.IO (wdio), yet dependency-free itself, so it needs the "base" installed manually.

The config file is identical to wdio’s and only needs the "ui5" service listed (yes, wdi5 is an official Webdriver.IO service):

/packages/ui5-app/wdio.conf.js:

    // ...
    services: [
        // other services like 'chromedriver'
        // ...
        'ui5'
    ]
    // ...

Hint: you might want to run `npx wdio config` to see the plethora of features wdio and thus wdi5 provide as a test-runner.

exemplary test file webapp/test/e2e-wdi5/tests.test.js:

    describe("binding", () => {
        it("Other view: PeopleList: items aggregation + amount of items", () => {
            browser.asControl(navFwdButton).firePress()
            const oList = browser.asControl(list)
            const aListItems = oList.getAggregation("items")
            expect(aListItems.length).toBeGreaterThanOrEqual(1)
        })
    })

Similar to UIVeri5, run wdi5 via (in /):

- first launch the webserver: `yarn dev` (or `yarn start:ci`)
- `yarn test:wdi5`

Selectors, Locators + Usage

Selectors

The topic "selectors" can be handled quickly: all share the same syntax 🙂

So no matter whether you write a test in OPA5, UIVeri5 or wdi5, a typical selector object always looks similar:

    {
        viewName: "test.Sample.view.Main",
        id: "NavButton"
    }

Using it in OPA5 always requires embedding it in a `waitFor()`:

    // When || Then || (in page object method) this
    When.waitFor({
        viewName: "test.Sample.view.Main",
        id: "NavButton",
        // ...
    })

In UIVeri5, it gets a little more bracket-ish:

    element(
        by.control({
            viewName: "test.Sample.view.Main",
            id: "NavButton"
        })
    )

Similarly, in wdi5, the selector needs to be wrapped in another object (because additional properties are possible on selector level):

    {
        selector: {
            viewName: "test.Sample.view.Main",
            id: "NavButton"
        }
    }
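Since the plain selector object is the same across the frameworks, it can be defined once and only wrapped per framework. A minimal sketch in plain JavaScript, assuming nothing beyond the object shapes shown above: `forWdi5` is a hypothetical helper name, and `forceSelect` is the wdi5-level property used in the wdi5 examples of this post.

```javascript
// the shared, framework-agnostic selector
const navButton = {
    viewName: "test.Sample.view.Main",
    id: "NavButton"
};

// hypothetical helper: wrap a plain selector into the object shape wdi5 expects;
// additional wdi5-level properties (e.g. forceSelect) sit next to "selector"
function forWdi5(selector, options = {}) {
    return { ...options, selector: { ...selector } };
}

const wdi5NavButton = forWdi5(navButton, { forceSelect: true });
console.log(JSON.stringify(wdi5NavButton, null, 2));

// in a test this would then be used as
//   OPA5:    When.waitFor(navButton)
//   UIVeri5: element(by.control(navButton))
//   wdi5:    browser.asControl(wdi5NavButton)
```

The helper copies the selector instead of mutating it, so the same object can safely be shared between test files.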

Locators

Generally speaking, all three frameworks support the same locator options for UI5 controls. They can be retrieved via their id and View-association, but also via a binding path or a property value.

Check out the documentation on the subject for

- OPA5: https://ui5.sap.com/#/topic/21aeff6928f84d179a47470123afee59
- UIVeri5: https://github.com/SAP/ui5-uiveri5/blob/master/docs/usage/locators.md
- wdi5: https://github.com/js-soft/wdi5/blob/develop/README.md#Usage
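To illustrate the locator options named above, here is a sketch of three selector objects: by id, by property value and by binding path. The `properties` and `bindingPath` keys should follow the declarative matcher syntax described in the linked docs; the `/People/0` path and the `name` property are made-up values for illustration only, and `locatorKind` is a hypothetical helper. Check the docs for the exact options each framework supports.

```javascript
// by id + view association
const byId = { viewName: "test.Sample.view.Main", id: "NavButton" };

// by property value
const byProperty = {
    viewName: "test.Sample.view.Main",
    controlType: "sap.m.Title",
    properties: { text: "#UI5 demo" }
};

// by binding path (path + propertyPath are illustrative values)
const byBindingPath = {
    viewName: "test.Sample.view.Other",
    controlType: "sap.m.StandardListItem",
    bindingPath: { path: "/People/0", propertyPath: "name" }
};

// hypothetical helper: report which locator strategy a selector relies on
function locatorKind(selector) {
    if (selector.bindingPath) return "bindingPath";
    if (selector.properties) return "properties";
    if (selector.id) return "id";
    return "controlType";
}

console.log([byId, byProperty, byBindingPath].map(locatorKind));
```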

Usage

As the brief code examples above already hint, interacting with located UI5 controls differs between the frameworks.

OPA5

In OPA5, there are predefined actions that allow interactions with a located UI5 control:

    // webapp/test/integration/InteractionJourney.js
    When.waitFor({
        viewName: "Main",
        id: "DateTimePicker",
        actions: new EnterText({ // from "sap/ui/test/actions/EnterText"
            text: "2020-11-11",
            pressEnterKey: true,
        }),
    })

Further programmatic querying of a UI5 control is possible in the `waitFor`’s success handler. All of the control’s native methods can be used:

    Then.waitFor({
        id : "productList",
        viewName : "Category",
        success : function (oList) {
            var oItems = oList.getItems()
            // ...
        }
    })

OPA5 reaches its limit when interactions with generated UI5 elements inside a control are required, e.g. selecting a date in a sap.m.DateTimePicker’s calendar pop-out. (You could argue that using jQuery for that purpose and doing the `$(/id/).trigger("tap")`-dance might be an option, but…let’s not go there 🙂 ).

Also, operating any elements or controls (think "reload") outside of the UI5 app is not possible with OPA5.

UIVeri5

With UIVeri5, interaction with located UI5 controls is mainly possible via the underlying Protractor API:

    // from webapp/test/e2e/interaction.spec.js
    const input = element(
        by.control({
            viewName: "test.Sample.view.Main",
            id: "DateTimePicker",
        })
    )
    input
        .sendKeys("2020-11-11")
        .then((_) => {
            // this is very likely overlooked...
            return input.sendKeys(protractor.Key.ENTER)
        }) /*...*/

Once a control is located in UIVeri5, unfortunately only a limited set of its native UI5 API is exposed: it’s pretty much only the `getProperty()` method, chained via `.asControl()`.

    // ...continuing above Promise chain
        .then((_) => {
            return input.asControl().getProperty("value")
        })
        /* ... */

Additional UI5 API methods, be it convenience shortcuts like `getVisible()` or aggregation-related calls such as `sap.m.List.getItems()`, are not available for located controls.

Theoretically, UIVeri5 would allow remotely operating all browser functions (think "reload") via the underlying Protractor API, but it’s then up to the developer to re-inject the UI5 dependency into the async WebDriver control flow.

wdi5

wdi5 proxies all public API methods of a located UI5 control to the test.

    // from webapp/test/e2e-wdi5/tests.test.js
    const dateTimePicker = {
        forceSelect: true, // don't cache selected control
        selector: {
            viewName: "test.Sample.view.Main",
            id: "DateTimePicker"
        }
    }
    const oDateTimePicker = browser.asControl(dateTimePicker)
    oDateTimePicker.setValue("2020-11-11") // UI5 API!

To stay API-compliant with UIVeri5, wdi5 offers `browser.asControl(<locator>)` to make the located UI5 control’s API accessible, including access to its aggregation(s).

    // from webapp/test/e2e-wdi5/tests.test.js
    const oList = browser.asControl(list)
    const aListItems = oList.getAggregation("items")

With wdio as its base, all functionality of Webdriver.IO can be used with wdi5.

Similar to UIVeri5, wdi5 allows for late- and re-injecting of its UI5 dependency, so browser reload/refresh scenarios as well as tests against localStorage et al. are possible.

Test syntax + Utilization

Probably the biggest difference between the three frameworks is the approach to actually writing the tests. For comparison’s sake, I’ve coded both "plain" and page-object-style tests in OPA5, UIVeri5 and wdi5.

The "plain" tests are coded in

- OPA5: test/integration/InteractionJourney.js
- UIVeri5: test/e2e/interaction.spec.js
- wdi5: test/e2e-wdi5/tests.test.js (describe('interaction'))

interaction test in opa5, uiveri5 + wdi5

Encapsulating test functionality in Page Objects is done for

- OPA5: test/integration/pages/* with test/integration/BindingJourney.js + test/integration/NavigationJourney.js
- UIVeri5: test/e2e/pages/* with test/e2e/binding.spec.js + test/e2e/navigation.spec.js
- wdi5: /test/e2e-wdi5/pages/* with test/e2e-wdi5/tests.test.js (describe('navigation'))

OPA5

Every action and assertion needs to be wrapped in a `waitFor()`, be it inside a test or a Page Object:

    opaTest("...", function (Given, When, Then) {
        // Arrangements
        Given.iStartMyApp();
        // Action
        When.waitFor({ /*...*/ });
        // Assertion
        Then.waitFor({/*...*/ });
    });

This makes more complex scenarios complicated to write syntax-wise. Also, nested actions/assertions get difficult to maintain.

A synchronous notation is encouraged, using `waitFor`-sequences that automagically…well… wait for the underlying asynchronous Promises to resolve.

However, running single tests or only one "Journey" requires commenting out/in source code constantly

→ clumsy dev time turnaround.

Assertions are done via `sap.ui.test.Opa5.assert` and offer ok, equal, propEqual, deepEqual, strictEqual and their negative counterparts:

    // from test/integration/InteractionJourney.js
    Then.waitFor({
        viewName: "Main",
        id: "DateTimePicker",
        success: function (oDateTimePicker) {
            // ok(value, message): the value under test goes first, the message second
            Opa5.assert.ok(/2020/.test(oDateTimePicker.getValue()), "value contains 2020");
            Opa5.assert.ok(/11/.test(oDateTimePicker.getValue()), "value contains 11");
        },
    });
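A detail worth double-checking in assertions like the ones above: QUnit-style `ok` takes the value under test first and the message second, so a constant `true` as first argument can never fail. The sketch below uses Node's built-in `assert.ok`, which has the same `(value, message)` argument order, purely as a stand-in for `Opa5.assert.ok`; `valueContains` is a hypothetical helper and the string value stands in for what a control would return.

```javascript
const nodeAssert = require("assert");

// hypothetical helper: true if the control value matches the pattern
function valueContains(sValue, rPattern) {
    return rPattern.test(sValue);
}

const sValue = "2020-11-11"; // stand-in for oDateTimePicker.getValue()

// passes: the condition is the first argument, the message the second
nodeAssert.ok(valueContains(sValue, /2020/), "value contains 2020");

// always passes, no matter the value: only the constant true is checked
nodeAssert.ok(true, valueContains("something else", /2020/));

// fails as it should once the condition comes first
let bFailed = false;
try {
    nodeAssert.ok(valueContains("something else", /2020/), "value contains 2020");
} catch (oError) {
    bFailed = true;
}
console.log(bFailed); // true
```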

debugging

For halting the test and runtime, another `waitFor` needs to be used:

    When.waitFor({success: function() { debugger; } })

This stops the OPA5 test at the desired location. Even though aged, more still-valid details are in https://blogs.sap.com/2018/09/18/testing-ui5-apps-part-2-integration-aka-opa-testing/ by yours truly 🙂

reporting/output

With OPA5 sharing the UI5 runtime with the application, its output is by definition browser-based.

In combination with the test-runner karma, a console-based report can be achieved. As this adds another layer of installation/setup/configuration, I’m not going to cover it here. But you can try it out via (in /) `yarn test:opa5` and `yarn test:opa5-ci` 🙂

UIVeri5

UIVeri5 test suites are limited to one suite (`describe`) per file, offering little organizational capability.

While locating UI5 controls at test time is synchronous in notation, all other significant (inter-)actions in a test require async coding approaches, e.g. via a Promise chain:

    // from test/e2e/interaction.spec.js
    input
        .asControl()
        .getProperty("value")
        .then((value) => {
            expect(value).toMatch(/2020/);
            expect(value).toMatch(/15/);
        })
        .catch((err) => {
            return Promise.reject("gnarf");
        });

UIVeri5 uses Jasmine matchers for doing the test assertions. When a test consists only of a basic interaction in conjunction with an assertion, it’s possible to use these matchers with a synchronous coding syntax and forego the Promise chain:

    // from test/e2e/binding.spec.js
    const list = element.all(
        by.control({
            viewName: "test.Sample.view.Other",
            controlType: "sap.m.StandardListItem",
        })
    );
    // no Promise chain here
    expect(list.count()).toBeGreaterThan(2)

Knowing when to use which coding approach already highlights one of the challenges when working with UIVeri5: the handling of async vs sync test coding parts is …well… lots of trying. It’s not always clear which method comes from the Protractor API, which from native WebdriverJS, and which is UIVeri5-custom.

The Page Object pattern in UIVeri5 works well:

    // from test/e2e/pages/main.view.js

    module.exports = createPageObjects({
        Main: {
            arrangements: {
                iStartMyApp: () => {
                    return true
                }
            },
            // ...
            assertions: {
                iShouldSeeTheApp: () => {
                    const title = element(
                        by.control({
                            viewName: "test.Sample.view.Main",
                            controlType: "sap.m.Title",
                            properties: {
                                text: "#UI5 demo",
                            },
                        })
                    );
                    // don't know why this isn't title.asControl() ... 😞
                    expect(title.getText()).toBe("#UI5 demo");
                }
            }
        }
    })

    // from test/e2e/navigation.spec.js
    const mainPageObject = require("./pages/main.view");
    // ...
    describe("navigation", function () {
        it("should see the initial page of the app", function () {
            Given.iStartMyApp();
            Then.onTheMainPage.iShouldSeeTheApp();
        });
        // ...
    })

`Main` from the page object API translates to `onTheMainPage` in the test, as do `arrangements` to `Given` and `assertions` to `Then`. Certainly nice BDD, and although comfortable, it feels a little like too much magic is going on under the hood 🙂

debugging

That also drills through to the debugging part. Per se, UIVeri5 offers the typical Node.js approach for debugging: `npx uiveri5 --debug` exposes the process for attaching by the Node debugger.

But debugging the actual test is difficult when only the above-mentioned sync coding is used: there’s no place to actually put a breakpoint to determine a UI5 control’s value. So typically, UIVeri5 tests need to be rewritten in async coding syntax in order to hook into the proper spot during test execution:

    // original sync syntax
    const myElement = element(by.control({ id: /.../ }))
    expect(myElement.getText() /* can't put a breakpoint here */).toEqual("myText")

    // needs to be rewritten for debugging to:
    myElement.getText().then(text => {
        /* put breakpoint here or use "debugger" statement */
        expect(text).toEqual("myText");
    })

While not a big deal, this still puts overhead into the dev time turnaround.

reporting/output

UIVeri5’s reporters are customizable, but offer little overview in their default setting, with lots of noise versus result.

Still, command-line API and reporter FTW!

wdi5

Thanks to Webdriver.IO, wdi5 test syntax is synchronous sugar entirely:

    // from test/e2e-wdi5/tests.test.js
    describe("interaction", () => {
        it("should manually allow date input", () => {
            const oDateTimePicker = browser.asControl(dateTimePicker)
            oDateTimePicker.setValue("2020-11-11")
            /* put breakpoint here or "browser.debug()" to
               inspect runtime state of oDateTimePicker */
            expect(oDateTimePicker.getValue()).toMatch(/2020/)
            expect(oDateTimePicker.getValue()).toMatch(/11/)
        })
        // ...
    })

Test suites are organized via (multiple and nested) `describe` statements. Their form uses mocha’s `describe` and `it` keywords per default, although jasmine-style notation is possible (all configured in wdio.conf.js).

Assertions are a superset of Jest.js matchers, extended by Webdriver.IO-specific methods.

Page Objects in wdi5 are plain ES6 classes and can be used just as such in the tests:

    // in test/e2e-wdi5/pages/Main.js
    const Page = require("./Page") // base class, see Page.js in the same folder

    class Main extends Page {
        _viewName = "test.Sample.view.Main"
        _navFwdButton = {
            forceSelect: true,
            selector: {
                viewName: "test.Sample.view.Main",
                id: "NavButton"
            }
        }

        iShouldSeeTheApp() {
            return (
                browser
                    .asControl({
                        forceSelect: true,
                        selector: {
                            viewName: this._viewName,
                            controlType: "sap.m.Title",
                            properties: {
                                text: "#UI5 demo"
                            }
                        }
                    })
                    .getText() === "#UI5 demo"
            )
        }
        // ...
    }

    module.exports = new Main()

    // in test/e2e-wdi5/tests.test.js
    const MainPage = require("./pages/Main")

    describe("navigation", () => {
        it("should see the initial page of the app", () => {
            MainPage.open() // inherited from Page.js
            expect(MainPage.iShouldSeeTheApp()).toBeTruthy()
        })
        // ...
    })

In combination with class inheritance, Page Objects in wdi5 become highly reusable items. You define shared methods in a base class and re-use them in child classes:

    // from packages/ui5-app/webapp/test/e2e-wdi5/pages/Page.js
    module.exports = class Page {
        open(sHash = "") { // default avoids "index.htmlundefined" when called without a hash
            // wdi5-specific
            browser.goTo({sHash: `index.html${sHash}`})
        }
    }

    // in test/e2e-wdi5/pages/Main.js
    class Main extends Page {
        // ...
    }

    // in a test file you can now do
    const Main = require("./path/to/Main")
    Main.open()
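To see the inheritance mechanics without a running browser, here is a self-contained sketch. The `browser` object below is a stub that only records calls, standing in for the global that wdio provides at test time; `Other` and its hash `#/Other` are made-up names for illustration.

```javascript
// stub standing in for wdio's global "browser"; it only records navigation targets
const browser = {
    aVisited: [],
    goTo(oTarget) {
        this.aVisited.push(oTarget.sHash);
    }
};

class Page {
    // shared by all page objects
    open(sHash = "") {
        browser.goTo({ sHash: `index.html${sHash}` });
    }
}

class Main extends Page {
    // Main-specific selectors and methods would go here
}

class Other extends Page {
    // hypothetical second page: re-uses open() with its own hash
    open() {
        super.open("#/Other");
    }
}

new Main().open();
new Other().open();
console.log(browser.aVisited); // [ 'index.html', 'index.html#/Other' ]
```

Each child class only adds what is specific to its view, while navigation stays in one place.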

debugging

The synchronous coding style makes it easy to put a breakpoint in a test at any line and inspect the runtime state of a UI5 control.

For launching wdi5 in debug mode, either do the regular `npx wdio` from a VSCode "Debug" terminal or add an `inspect` flag to wdio.conf.js.

reporting/output

Many reporters are available for Webdriver.IO that subsequently work for wdi5 as well.

Per default, the spec reporter offers a concise status report:

Performance

Time is valuable, not only for testing, but specifically during development: the faster a test-runner executes, the quicker the developer can react to the results and fix/add/change tests.

And most certainly in CI/CD: the quicker a suite of tests runs, the faster Pull/Merge Requests can pass through, the faster features can be (continuously) delivered, the higher the literal quality of service.

All tests in the ecosystem showcase share the same requirements, with the OPA5 tests being a small exception, as they are missing any calendar popup interaction due to incapability. Thus, OPA5 has a slight performance advantage per se.

Nevertheless, here are the metrics of the tests, all run in headless Chrome (karma-ui5 as the testrunner for OPA5), with minimum log level set.

Hardware is my MacBook Pro 2.3 GHz Quad-Core Intel Core i7 with 32 GB RAM (yeah, I know).

Every test framework was run 100 times (really!), these are the mean runtimes:

- OPA5: 12.03 sec
- UIVeri5: 34.02 sec
- wdi5: 32.02 sec

Summary

Which framework is "the best", which one is "the winner"?

Clearly there’s no straight answer to that, as each one has its advantages and disadvantages, serving certain use cases best.

OPA5 is the fastest of the three, as it shares the runtime with UI5, saving infrastructure overhead such as launching the browser itself. Yet it quickly reaches its limits when more advanced test behavior is required, such as cross-interaction amongst UI5 controls or operating on elements other than UI5 controls. Plus it feels clumsy with the manifold `waitFor` interaction.

With UIVeri5, it’s possible to really operate the UI as an external user, including elements and features outside of UI5 controls. But UIVeri5’s core (Protractor, WebdriverJS) feels dated, and the glue between the core elements is sometimes missing that last implementation mile (not possible to set a log level in conf.js?!). Certain limits such as the forced correlation between suite name (`describe`) and filename also add to that impression. Unfortunately, only a subset of UI5 API methods is available on a control at test-time. Also, the documentation seems to be fragmented. Yet, a bit hidden in the docs, UIVeri5 has pre-built authenticators, with SAP Cloud Platform SAP ID amongst them.

wdi5 is the youngest framework amongst the three and benefits greatly not only from its Webdriver.IO core, but also from the integration with the sap.ui.test.RecordReplay API. The latter enables the OPA5-style locators during test-time. The syntactic sugar for test notation, the wide variety of options (try running `npx wdio config`!), TypeScript support and a large support of UI5 API methods on a control make using wdi5 feel more up-to-date than working with the other two frameworks. Combined with its larger sibling, the actual wdi5 npm module (see the docs for the distinction), it can not only drive tests in the browser-scope, but also run them against hybrid apps on iOS, Android and Electron.

So which one to pick?

In terms of licensing, there most certainly is a winner: wdi5 lives under the derived beer-ware license, encouraging all users to frequently buy the contributors a beer.

In that regard: cheers!


1 -

iot

1 -

Java

1 -

job

1 -

Job Information Changes

1 -

Job-Related Events

1 -

Job_Event_Information

1 -

joule

4 -

Journal Entries

1 -

Just Ask

1 -

Kerberos for ABAP

8 -

Kerberos for JAVA

8 -

KNN

1 -

Launch Wizard

1 -

Learning Content

2 -

Life at SAP

5 -

lightning

1 -

Linear Regression SAP HANA Cloud

1 -

Loading Indicator

1 -

local tax regulations

1 -

LP

1 -

Machine Learning

4 -

Marketing

1 -

Master Data

3 -

Master Data Management

14 -

Maxdb

2 -

MDG

1 -

MDGM

1 -

MDM

1 -

Message box.

1 -

Messages on RF Device

1 -

Microservices Architecture

1 -

Microsoft Universal Print

1 -

Middleware Solutions

1 -

Migration

5 -

ML Model Development

1 -

Modeling in SAP HANA Cloud

8 -

Monitoring

3 -

MTA

1 -

Multi-Record Scenarios

1 -

Multilayer Perceptron

1 -

Multiple Event Triggers

1 -

Myself Transformation

1 -

Neo

1 -

Neural Networks

1 -

New Event Creation

1 -

New Feature

1 -

Newcomer

1 -

NodeJS

3 -

ODATA

2 -

OData APIs

1 -

odatav2

1 -

ODATAV4

1 -

ODBC

1 -

ODBC Connection

1 -

Onpremise

1 -

open source

2 -

OpenAI API

1 -

Oracle

1 -

PaPM

1 -

PaPM Dynamic Data Copy through Writer function

1 -

PaPM Remote Call

1 -

Partner Built Foundation Model

1 -

PAS-C01

1 -

Pay Component Management

1 -

PGP

1 -

Pickle

1 -

PLANNING ARCHITECTURE

1 -

Popup in Sap analytical cloud

1 -

PostgrSQL

1 -

POSTMAN

1 -

Prettier

1 -

Process Automation

2 -

Product Updates

6 -

PSM

1 -

Public Cloud

1 -

Python

4 -

python library - Document information extraction service

1 -

Qlik

1 -

Qualtrics

1 -

RAP

3 -

RAP BO

2 -

Record Deletion

1 -

Recovery

1 -

recurring payments

1 -

redeply

1 -

Release

1 -

Remote Consumption Model

1 -

Replication Flows

1 -

research

1 -

Resilience

1 -

REST

1 -

REST API

1 -

Retagging Required

1 -

Risk

1 -

Rolling Kernel Switch

1 -

route

1 -

rules

1 -

S4 HANA

1 -

S4 HANA Cloud

1 -

S4 HANA On-Premise

1 -

S4HANA

4 -

S4HANA Cloud

1 -

S4HANA_OP_2023

2 -

SAC

10 -

SAC PLANNING

9 -

SAP

4 -

SAP ABAP

1 -

SAP Advanced Event Mesh

1 -

SAP AI Core

9 -

SAP AI Launchpad

8 -

SAP Analytic Cloud Compass

1 -

Sap Analytical Cloud

1 -

SAP Analytics Cloud

4 -

SAP Analytics Cloud for Consolidation

3 -

SAP Analytics Cloud Story

1 -

SAP analytics clouds

1 -

SAP API Management

1 -

SAP BAS

1 -

SAP Basis

6 -

SAP BODS

1 -

SAP BODS certification.

1 -

SAP BTP

22 -

SAP BTP Build Work Zone

2 -

SAP BTP Cloud Foundry

6 -

SAP BTP Costing

1 -

SAP BTP CTMS

1 -

SAP BTP Generative AI

1 -

SAP BTP Innovation

1 -

SAP BTP Migration Tool

1 -

SAP BTP SDK IOS

1 -

SAP BTPEA

1 -

SAP Build

11 -

SAP Build App

1 -

SAP Build apps

1 -

SAP Build CodeJam

1 -

SAP Build Process Automation

3 -

SAP Build work zone

10 -

SAP Business Objects Platform

1 -

SAP Business Technology

2 -

SAP Business Technology Platform (XP)

1 -

sap bw

1 -

SAP CAP

2 -

SAP CDC

1 -

SAP CDP

1 -

SAP CDS VIEW

1 -

SAP Certification

1 -

SAP Cloud ALM

4 -

SAP Cloud Application Programming Model

1 -

SAP Cloud Integration for Data Services

1 -

SAP cloud platform

8 -

SAP Companion

1 -

SAP CPI

3 -

SAP CPI (Cloud Platform Integration)

2 -

SAP CPI Discover tab

1 -

sap credential store

1 -

SAP Customer Data Cloud

1 -

SAP Customer Data Platform

1 -

SAP Data Intelligence

1 -

SAP Data Migration in Retail Industry

1 -

SAP Data Services

1 -

SAP DATABASE

1 -

SAP Dataspher to Non SAP BI tools

1 -

SAP Datasphere

9 -

SAP DRC

1 -

SAP EWM

1 -

SAP Fiori

3 -

SAP Fiori App Embedding

1 -

Sap Fiori Extension Project Using BAS

1 -

SAP GRC

1 -

SAP HANA

1 -

SAP HANA PAL

1 -

SAP HANA Vector

1 -

SAP HCM (Human Capital Management)

1 -

SAP HR Solutions

1 -

SAP IDM

1 -

SAP Integration Suite

9 -

SAP Integrations

4 -

SAP iRPA

2 -

SAP LAGGING AND SLOW

1 -

SAP Learning Class

1 -

SAP Learning Hub

1 -

SAP Master Data

1 -

SAP Odata

2 -

SAP on Azure

2 -

SAP PAL

1 -

SAP PartnerEdge

1 -

sap partners

1 -

SAP Password Reset

1 -

SAP PO Migration

1 -

SAP Prepackaged Content

1 -

SAP Process Automation

2 -

SAP Process Integration

2 -

SAP Process Orchestration

1 -

SAP S4HANA

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Cloud for Finance

1 -

SAP S4HANA Cloud private edition

1 -

SAP Sandbox

1 -

SAP STMS

1 -

SAP successfactors

3 -

SAP SuccessFactors HXM Core

1 -

SAP Time

1 -

SAP TM

2 -

SAP Trading Partner Management

1 -

SAP UI5

1 -

SAP Upgrade

1 -

SAP Utilities

1 -

SAP-GUI

8 -

SAP_COM_0276

1 -

SAPBTP

1 -

SAPCPI

1 -

SAPEWM

1 -

sapfirstguidance

1 -

SAPHANAService

1 -

SAPIQ

1 -

sapmentors

1 -

saponaws

2 -

SAPS4HANA

1 -

SAPUI5

5 -

schedule

1 -

Script Operator

1 -

Secure Login Client Setup

8 -

security

9 -

Selenium Testing

1 -

Self Transformation

1 -

Self-Transformation

1 -

SEN

1 -

SEN Manager

1 -

service

1 -

SET_CELL_TYPE

1 -

SET_CELL_TYPE_COLUMN

1 -

SFTP scenario

2 -

Simplex

1 -

Single Sign On

8 -

Singlesource

1 -

SKLearn

1 -

Slow loading

1 -

soap

1 -

Software Development

1 -

SOLMAN

1 -

solman 7.2

2 -

Solution Manager

3 -

sp_dumpdb

1 -

sp_dumptrans

1 -

SQL

1 -

sql script

1 -

SSL

8 -

SSO

8 -

Substring function

1 -

SuccessFactors

1 -

SuccessFactors Platform

1 -

SuccessFactors Time Tracking

1 -

Sybase

1 -

system copy method

1 -

System owner

1 -

Table splitting

1 -

Tax Integration

1 -

Technical article

1 -

Technical articles

1 -

Technology Updates

15 -

Technology Updates

1 -

Technology_Updates

1 -

terraform

1 -

Threats

2 -

Time Collectors

1 -

Time Off

2 -

Time Sheet

1 -

Time Sheet SAP SuccessFactors Time Tracking

1 -

Tips and tricks

2 -

toggle button

1 -

Tools

1 -

Trainings & Certifications

1 -

Transformation Flow

1 -

Transport in SAP BODS

1 -

Transport Management

1 -

TypeScript

3 -

ui designer

1 -

unbind

1 -

Unified Customer Profile

1 -

UPB

1 -

Use of Parameters for Data Copy in PaPM

1 -

User Unlock

1 -

VA02

1 -

Validations

1 -

Vector Database

2 -

Vector Engine

1 -

Vectorization

1 -

Visual Studio Code

1 -

VSCode

2 -

VSCode extenions

1 -

Vulnerabilities

1 -

Web SDK

1 -

work zone

1 -

workload

1 -

xsa

1 -

XSA Refresh

1
