- SAP Community

- Products and Technology

- Supply Chain Management

- SCM Blogs by SAP

- Integrating SAP Datasphere with SAP Integrated Bus...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

This blog series describe how we can exchange data relevant for planning between SAP IBP and a space in SAP Datasphere. I am using two SAP middleware tools – SAP Cloud Integration, which is a 100% cloud service on BTP and SAP Cloud Integration for data services which is a hybrid solution ( with an on-premise component ) to exchange data bidirectionally. This blog series contains three sections,

- Reading forecast data from SAP IBP and writing to SAP Datasphere (This blog).

- Writing data from SAP Datasphere into a planning area in SAP IBP.

- Using SAP CI-DS for bi-directional integration between SAP IBP and SAP Datasphere

The last section describes how we could integrate bidirectionally using SAP Cloud Integration for data services and first two sections describe the data exchange using SAP Cloud Integration. To support large data volumes, we use SAP Remote Functions to extract data from SAP IBP into the middleware tools. SAP Datasphere also offer a JDBC interface which can be leveraged from Cloud Integration. The software components used for this integration are presented below in a high-level block diagram below:

Prerequisites while using SAP Cloud Integration

Let’s start with the following prerequisites in place:

- Have a forecast run on SAP IBP completed. Identify a keyfigure which stored the computed forecast. In my example I considered CONSDEMANDQTY as a sample keyfigure with its own set of root attributes.

- A new communication scenario – SAP_COM_0931 is now available in SAP IBP for interfacing SAP Cloud Integration with SAP IBP. Make sure you have a communication user, system and arrangement using this scenario.

- In Feb 2024, SAP IBP has released a set of helper iFlows in SAP Cloud Integration. These iFlows are for communicating with SAP IBP using web-socket RFC based interfaces – have these iFlows imported into your Cloud Integration instance.

- Use the Destination Service on the SAP BTP for the SAP IBP instance using the communication setup you have from Step 2. We will use this destination name from SAP Cloud Integration.

- Create a Space and a table in that space in SAP Datasphere.

Reading data from SAP Integrated Business Planning

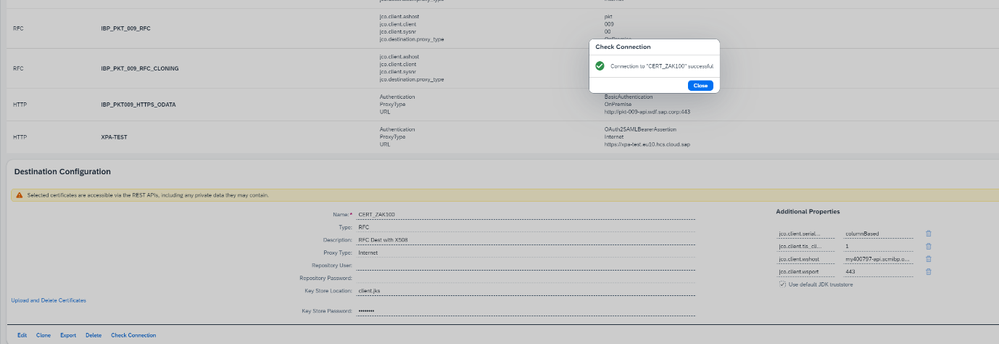

From SAP Cloud Integration, the efficient way of communicating with SAP IBP is using web socket based remote function calls. In step 2 of our pre-requisite, we created the credentials for communicating with SAP IBP. They are using the pre-delivered interfaces which are exposed under the communication scenario: SAP_COM_0931. We can use these credentials to create a named destination in the SAP Business Technology Platform. For this, open your sub account landing page on the SAP BTP. Navigate to your Destination menu from the left and create a new destination using the above credentials. The configuration might look like this screen shot:

Here, the destination name is called IBP_G5R_100. We will use this name in our iFlows to make these WS-RFC calls to SAP IBP from Cloud Integration. A pre-delivered set of helper iFlows in SAP Cloud Integration are used for this integration. These iFlows take care of making the WS-RFC calls the creating the right payloads as well as waiting for the response from the backend. We create iFlows which are specific to business processes on top of these helper iFlows. We consume the helper iFlows using process direct mechanism in SAP Cloud Integration.

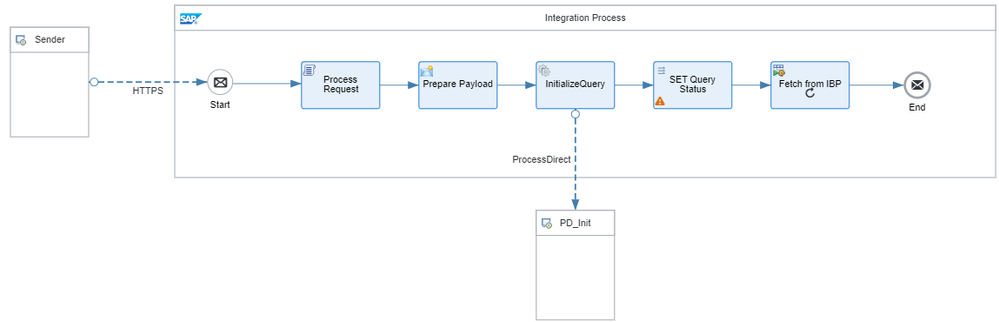

Initialize Query

Reading from SAP IBP is done asynchronously. A select query is first initialized. Input parameters for this select query can be done either via a global parameter configuration or this iFlow can be triggered from an external application using a HTTPs call. In my example, I exposed the iFlows as HTTPS endpoint and trigger them via a POST call. A Screen shot of this iFlow is given below.

I am using a content modifier adapter to prepare a payload that can be sent to the helper iFlows via a process direct. It can be a groovy or even an XSLT mapping that can prepare the payload for this call. Here is a sample payload which I am generating in that content modifier. The inputs for this payload are prepared from the HTTP request call which triggers this iFlow.

<IBPReads>

<IBPRead Key="${header.IBPQueryKey1}"

Destination="${header.IBPDestination}"

PackageSizeInRows="${header.IBPPackageSizeInRows}"

Select="${header.IBPQuerySelect}"

OrderBy="${header.IBPQueryOrderBy}"

Filter="${header.IBPQueryFilterString}"

TypeOfData="${header.IBPQueryTypeOfData}"

TimeAggregationLevel="2"/>

</IBPReads>

Fetch data

If the select query has a large data set, for example 5 million rows of data, the helper iFlows can be used to fetch pages of this data. You can define the page size using the XML attribute IBPPackageSizeInRows. The Offset attribute defines how many records you can skip. The following example payload can be sent to fetch data using the helper iFlows.

<IBPRead>

<xsl:attribute name="Key">

<xsl:value-of select="if (@Key) then else $IBPQueryKey1"/>

</xsl:attribute>

<xsl:attribute name="PackageSizeInRows">

<xsl:value-of select="if (@PackageSizeInRows) then @PackageSizeInRows else $IBPPackageSizeInRows"/>

</xsl:attribute>

<xsl:attribute name="ParallelThread">

<xsl:value-of select="if (@ParallelThread) then @ParallelThread else $IBPQueryParallelThread"/>

</xsl:attribute>

<xsl:attribute name="Offset">

<xsl:value-of select="number($IBPQueryOffset1)"/>

</xsl:attribute>

</IBPRead>

If there are more pages to read, the helper iFlows returns a Boolean message property called: - IBPhasMoreRecords. The fetch helper iFlow can be called multiple times in a loop. You can exit the loop when this message property IBPhasMoreRecords is false.

Once the data is fetched from the backend, it can be transformed to the database schema that you defined while creating a table in the space on SAP Datasphere. After transformation, this data is then written to SAP Datasphere using the JDBC adapter.

Writing data to SAP Datasphere

Before we write to SAP Datasphere, we need to setup a few things. We will be using the JDBC protocol and Open SQL. We need a space, table, and a database user. Let’s do this step by step:

Database user

Navigate to the Space management menu and create a new Space. Open the space and click on the Database Access menu option. Create a new database user. Below is the screen shot of the user I have created. It also creates an Open SQL Schema to which you can grant read and write access.

Once created, press on the “info” button on the far right corner of the user you just created and note down the following information:

Database User Name:

Open SQL Schema

Host Name and Port

Password

You can also request a new password to start with.

We need a table

Select the database username you just created and press the “Open Database Explorer” menu option to the left of the search input. This will open the SAP HANA Database Explorer in a new window. On the top left of the screen, just below the SAP logo – you will see the button to open an SQL Console. Click on that to open a SQL Console and enter the following SQL command to create a new table.

create TABLE IBPFORECAST (

PRODUCT VARCHAR(50),

CUSTOMER VARCHAR(50),

LOCATION VARCHAR(50),

CONSDEMAND DOUBLE,

KEYFIGUREDATE DATE,

UNITS VARCHAR(4),

COMMENTS VARCHAR(100)

);

You can adapt the statement to suite your needs. Once done, you can click on the green “Run” button to execute the SQL statement. This will create a new table which you can view under the Catalog –> Tables menu tree on the left. I created two tables. One was to read data from SAP Datasphere called IBPDEMAND and the other was to write data into Datasphere called IBPFORECAST. The idea was to read demand relevant data and write to SAP IBP, do the planning run in IBP and then write back forecast data into SAP Datasphere.

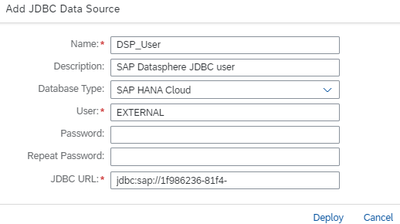

JDBC settings on SAP Cloud Integration

We need to use the SAP Datasphere - JDBC credentials that we created in the previous steps to create a connection between SAP Cloud Integration and SAP Datasphere. For this,

- Open the SAP Cloud Integration instance.

- In the menu on the left, open Monitor -> Integrations and API’s. Scroll to the Manage Security group and click on the JDBC Material pad.

- Add a new JDBC Data Source. Enter the name, description and select “SAP HANA Cloud” as the Database type. Enter the username and the password.

- For the JDBC URL, create the url in this format:- jdbc:sap://< database host name>:<Port>

- Save this setting using the “Deploy” button.

Now, we can use this JDBC data source name in our iFlows.

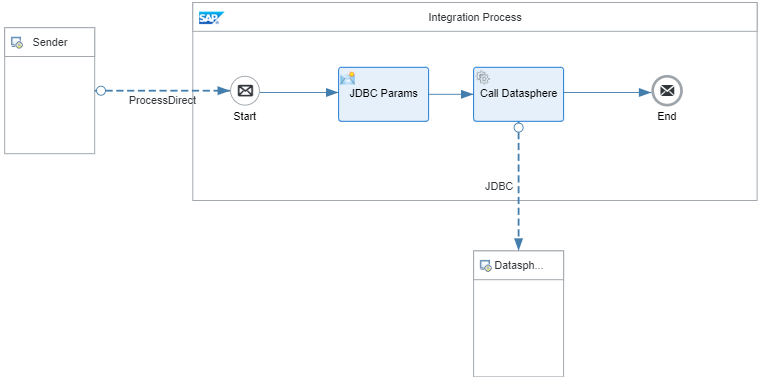

Using the JDBC Data Source in the iFlow

We use the JDBC protocol to write data to SAP Datasphere from Cloud Integration. Once you have the data that you want to write to Datasphere, we create the Open SQL Insert statements using an XSLT mapping. The section of the iFlow would look like this,

Fig. 7 Using the JDBC Data source to write in SAP Datasphere

This section is exposed as a separate iFlow which is called via a Process Direct. This iFlow is then called from the main iFlow after we fetch the data from IBP. Writing to SAP Datasphere is done in a loop as we read data in batchs. Each batch is written directly as it is read and transformed.

The SQL INSERT statement is prepared in an XSLT file. The following code snippet, generates the insert statement as documented in this help

<root>

<Insert_Statement>

<dbTableName action="INSERT">

<table><xsl:value-of select="$SAPDSPTarget"/></table>

<xsl:for-each select="/IBPReadKeyFigures/item">

<access>

<PRODUCT hasQuot="Yes"><xsl:value-of select="./PRDID"></xsl:value-of></PRODUCT>

<CUSTOMER hasQuot="Yes"><xsl:value-of select="./CUSTID"></xsl:value-of></CUSTOMER>

<LOCATION hasQuot="Yes"><xsl:value-of select="./LOCID"></xsl:value-of></LOCATION>

<CONSDEMAND hasQuot="No"><xsl:value-of select="./CONSENSUSDEMANDQTY"></xsl:value-of></CONSDEMAND>

<KEYFIGUREDATE hasQuot="Yes">2024-03-01</KEYFIGUREDATE>

<UNITS hasQuot="Yes"><xsl:value-of select="./UOMID"></xsl:value-of></UNITS>

<COMMENTS hasQuot="Yes">Inserted from XSLT</COMMENTS>

</access>

</xsl:for-each>

</dbTableName>

</Insert_Statement>

</root>

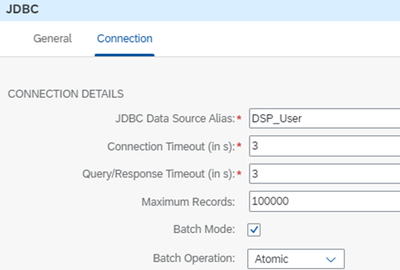

This XSLT generates an XML payload which is then passed as a body for the JDBC request. This XML payload is generated for each batch as we fetch data from SAP IBP. In the request-reply adapter, we select the protocol as JDBC and we use the JDBC Data Source name as the Data Source Alias under the JDBC -> Connection screen as shown below,

Fig. 8 JDBC Connection Details

This Once data is written to the table in the SAP Datasphere space, the JDBC request sends the following response in XMl format.

<?xml version="1.0" encoding="UTF-8" standalone="no"?>

<root>

<Insert_Statement>

<table>IBPFORECAST</table>

<insert_count>200000</insert_count>

</Insert_Statement>

</root>

The above XML response shows that, in one call, 200K rows of data were inserted into the SAP Datasphere table called IBPFORECAST.

Conclusion

In this blog, I shared a few details on how I,

- Use the SAP IBP interfaces and SAP Datasphere based JDBC data sources in SAP Cloud Integration.

- Reading data from SAP IBP using WS-RFC adapter – which is wrapped in the helper iFlows which are part of the 2402 release of SAP IBP.

- Writing data into SAP Datasphere using the JDBC adapter

In the next blog we take a closer look into how we can read data from a space in SAP Datasphere, transform this data and write it into SAP Integrated Business Planning using SAP Cloud Integration.

- SAP Managed Tags:

- SAP Datasphere,

- SAP Integration Suite,

- SAP Integrated Business Planning for Supply Chain

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

-

Business Trends

169 -

Business Trends

24 -

Catalog Enablement

1 -

Event Information

47 -

Event Information

4 -

Expert Insights

12 -

Expert Insights

39 -

intelligent asset management

1 -

Life at SAP

63 -

Product Updates

500 -

Product Updates

66 -

Release Announcement

1 -

SAP Digital Manufacturing for execution

1 -

Super Bowl

1 -

Supply Chain

1 -

Sustainability

1 -

Swifties

1 -

Technology Updates

187 -

Technology Updates

17

- SAP IBP Real-Time Integration: Empowering Businesses for Smarter, Faster Decisions in Supply Chain Management Blogs by SAP

- Announcement: New integration platform in SAP Business Network for Logistics in Supply Chain Management Blogs by SAP

- “Mind the Gap” – Improves ROI, Cost & Margin by Merging Planning Processes in Supply Chain Management Blogs by SAP

- Drive productivity, safely and sustainably, with SAP manufacturing solutions in Supply Chain Management Blogs by SAP

- RISE with SAP advanced asset and service management package in Supply Chain Management Blogs by SAP

| User | Count |

|---|---|

| 8 | |

| 7 | |

| 6 | |

| 4 | |

| 4 | |

| 4 | |

| 3 | |

| 3 | |

| 3 | |

| 3 |