- SAP Community

- Products and Technology

- Spend Management

- Spend Management Blogs by SAP

- Replicate SAP Ariba analytical data to Big Query u...

Spend Management Blogs by SAP

Stay current on SAP Ariba for direct and indirect spend, SAP Fieldglass for workforce management, and SAP Concur for travel and expense with blog posts by SAP.

Turn on suggestions

Auto-suggest helps you quickly narrow down your search results by suggesting possible matches as you type.

Showing results for

Developer Advocate

Options

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

10-18-2020

9:01 PM

In this blog post, I will cover how we can move analytical data available in the SAP Ariba APIs to Big Query. To achieve this, I will use the trial version of the Integration Suite available in SAP Cloud Platform. I will build an integration flow in SAP Cloud Platform Integration and configure an Open Connector instance to send data to Big Query.

Update 1: If you want to send the data to SAP HANA Cloud, check out this follow-up blog post - https://blogs.sap.com/2020/11/03/how-to-replicate-sap-ariba-analytical-data-to-sap-hana-cloud/

Update 2: If you are interested in knowing how to configure step by step a connection from SAP Cloud Integration to the SAP Ariba API, including sample code and sample integration flow, please check out this blog post: https://blogs.sap.com/2021/03/22/step-by-step-how-to-configure-sap-cloud-integration-to-communicate-...

To complete the steps explained in this blog post, there are some prerequisites that we will need to complete first:

Now that we have access to the different systems, I will proceed to explain how to get SAP Cloud Platform Integration talking with SAP Ariba and Big Query.

I will build an integration flow in SAP Cloud Platform Integration whose goal is to extract analytical data from SAP Ariba and send the data to BigQuery. To accomplish this we will do the following:

The integration flow end result is shown in Fig. 1.

⚡ Navigate to the Google Cloud Platform console and create an OAuth 2.0 client.

In Google Cloud Platform, select the project and go to

⚡ Access the Open Connectors UI, from within the Cloud Platform Integration Suite, and create an instance of the BigQuery connector. Once created, copy the Authorization header details displayed in the API docs.

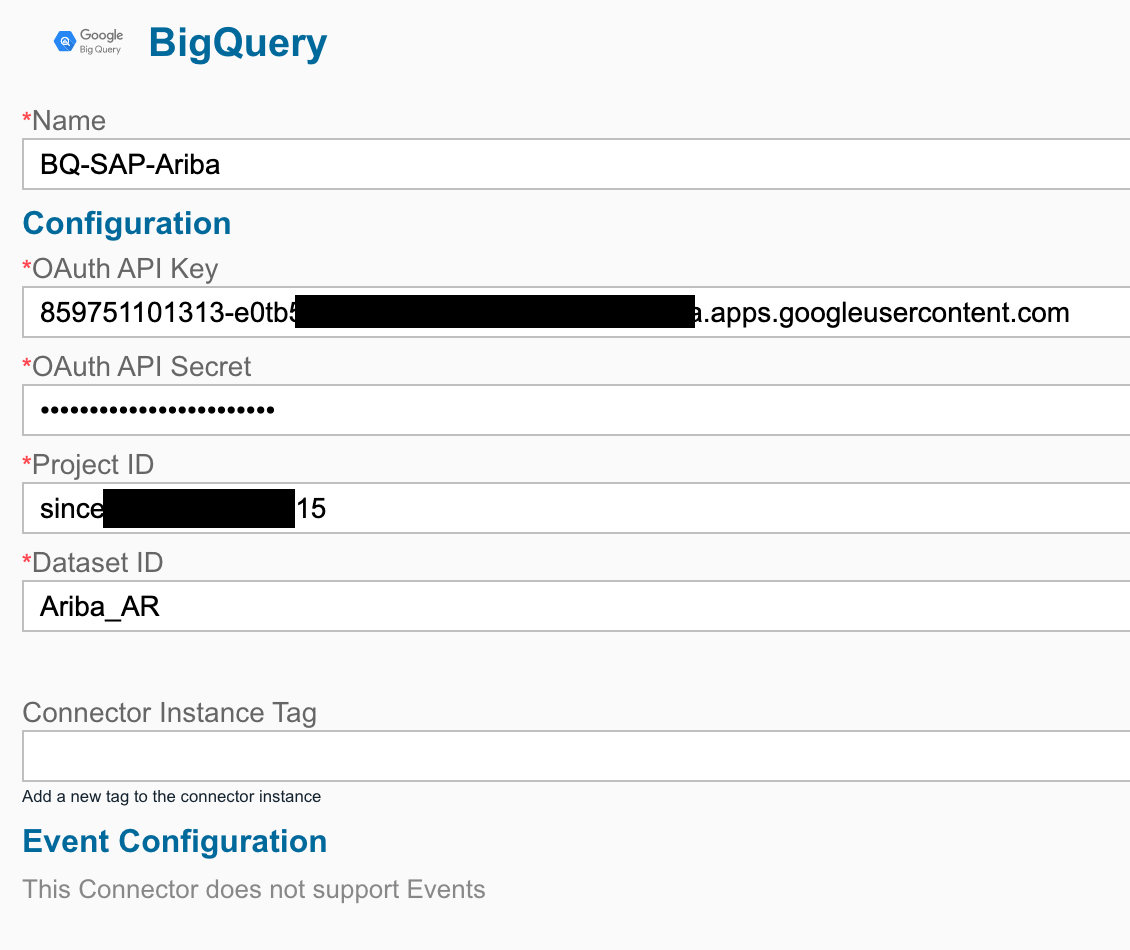

In Open Connectors, go to Connectors and search for BigQuery and select Authenticate. It will open the configuration screen to create a new instance. Enter the required information and complete the authorization flow to grant Open Connectors access to BigQuery.

Once the authentication is complete, visit the API docs of the connector instance just created (Instances > Your connector > API Docs). In the API docs, select any method and copy the value included as an Authorization header, e.g.

⚡ Go to your SAP Cloud Platform Integration instance and create/deploy 2 security materials (Monitor > Manage Security > Security Material). This will be used by the integration flow to communicate with SAP Ariba and BigQuery.

⚡ Create an integration flow in SAP Cloud Platform Integration following the guidelines below.

Given that it is possible to receive large amounts of data from the SAP Ariba Analytical Reporting APIs, there might be a need to paginate the response. This is taken in consideration in the integration flow, hence why there is a looping process in it.

I created the externalized parameters below to ensure that the integration flow is reusable.

The integration flow includes an integration process that can start on a schedule and a looping process call to handle the SAP Ariba response pagination. The loop will stop when the header pageToken value is STOP. This is handled by the script responsible of processing the SAP Ariba API response.

This local integration process retrieves the analytical data from SAP Ariba and sending it to BigQuery. Below, the details on what each block in the process is doing:

Now that all steps are completed, we deploy the integration flow and check the records created in BigQuery.

As we can see, we have replicated the SAP Ariba data to BigQuery. We can now use this dataset to create a report/dashboard in reporting tools that connect to BigQuery.

You've made it to the end of the post ? ?. Thank you. I've covered how we can retrieve data from the SAP Ariba APIs and implement pagination in SAP Cloud Platform Integration, by using the looping process call. Also, how we can leverage Open Connectors to communicate with external services, e.g. BigQuery. Finally, an integration flow that can handle the end-to-end process of extracting the data and sending it to BigQuery.

Update 1: If you want to send the data to SAP HANA Cloud, check out this follow-up blog post - https://blogs.sap.com/2020/11/03/how-to-replicate-sap-ariba-analytical-data-to-sap-hana-cloud/

Update 2: If you are interested in knowing how to configure step by step a connection from SAP Cloud Integration to the SAP Ariba API, including sample code and sample integration flow, please check out this blog post: https://blogs.sap.com/2021/03/22/step-by-step-how-to-configure-sap-cloud-integration-to-communicate-...

To complete the steps explained in this blog post, there are some prerequisites that we will need to complete first:

- Create application in SAP Ariba Developer Portal and request API access to the Analytical reporting APIs. Unfortunately there is no trial version of SAP Ariba but I guess that if you are reading this blog post, it is because your company uses SAP Ariba :-). I covered in a previous blog post (Using the SAP Ariba APIs to extract inactive suppliers) how to create an application and request API access in the SAP Ariba Developer Portal.

If you prefer watching videos, you can watch the first two videos available in the SAP Ariba for Developers YouTube playlist ? https://www.youtube.com/playlist?list=PL6RpkC85SLQDXSLHrSPtu8wztzDs8kYPX.

- An SAP Cloud Platform trial account. Make sure to enable to a subscription to the Integration Suite (Go to your trial subaccount > Subscriptions > Integration Suite). Once in the Integration Suite application, add the

Design, Develop and Operate Integration Scenarios (SAP Cloud Platform Integration) and Extend Non-SAP Connectivity (Open Connectors) capabilities.

To find out how to create a trial account: https://developers.sap.com/tutorials/hcp-create-trial-account.html

- Access to BigQuery. Google Cloud Platform offers a free trial, through which you can use Big Query. Go ahead and create a trial account if you don’t have access to Google Cloud Platform - https://cloud.google.com/free/.

Now that we have access to the different systems, I will proceed to explain how to get SAP Cloud Platform Integration talking with SAP Ariba and Big Query.

I will build an integration flow in SAP Cloud Platform Integration whose goal is to extract analytical data from SAP Ariba and send the data to BigQuery. To accomplish this we will do the following:

- Create an OAuth application in Google Cloud Platform -> BigQuery

- Set up a BigQuery instance in Open Connectors

- Deploy security material in SAP Cloud Platform Integration

- Create integration flow in SAP Cloud Platform Integration

The integration flow end result is shown in Fig. 1.

Fig. 1 - Integration flow

Step 1 - Create an OAuth 2.0 application in Google Cloud Platform

⚡ Navigate to the Google Cloud Platform console and create an OAuth 2.0 client.

In Google Cloud Platform, select the project and go to

API & Services > Credentials. Create a credential for OAuth Client ID and select Web application as the application type. Make sure to add https://auth.cloudelements.io/oauth as an Authorised redirect URI. Once the client is created, copy the Client ID and Client secret as we will need them to configure an instance of BigQuery in Open Connectors.

Fig. 2 - Google Cloud Platform OAuth credentials

Step 2 - Set up a BigQuery instance in Open Connectors

⚡ Access the Open Connectors UI, from within the Cloud Platform Integration Suite, and create an instance of the BigQuery connector. Once created, copy the Authorization header details displayed in the API docs.

Fig. 3 - Integration Suite UI

In Open Connectors, go to Connectors and search for BigQuery and select Authenticate. It will open the configuration screen to create a new instance. Enter the required information and complete the authorization flow to grant Open Connectors access to BigQuery.

Fig. 4 - BigQuery connector configuration

Once the authentication is complete, visit the API docs of the connector instance just created (Instances > Your connector > API Docs). In the API docs, select any method and copy the value included as an Authorization header, e.g.

User QNBF4V=, Organization a0f234e, Element d3jbWv5/xxx/yyyyyyy/zzzzxqrk=. We will use this value to configure the Open Connector credentials in the next step.

Fig. 5 - Open Connector API docs

Step 3 - Deploy security material in SAP Cloud Platform Integration

⚡ Go to your SAP Cloud Platform Integration instance and create/deploy 2 security materials (Monitor > Manage Security > Security Material). This will be used by the integration flow to communicate with SAP Ariba and BigQuery.

| Name | Type | Fields |

|---|---|---|

| Ariba_AnalyticalReporting | OAuth2 Client credentials | Enter the Token service URL, Client ID and Client Secret |

| OC_BigQuery | User credentials (Open Connectors) | Enter the User, Organization, and Element details from Open Connectors |

|  |

Fig. 6 - Security material

Fig. 6 - Security material

Step 4 - Create and set up integration flow in SAP Cloud Platform Integration

⚡ Create an integration flow in SAP Cloud Platform Integration following the guidelines below.

Given that it is possible to receive large amounts of data from the SAP Ariba Analytical Reporting APIs, there might be a need to paginate the response. This is taken in consideration in the integration flow, hence why there is a looping process in it.

To understand how pagination works in the SAP Ariba APIs, check out the explanation in Exercise 08 of the Cloud APIs virtual event - Data pagination with SAP Ariba APIs.

I created the externalized parameters below to ensure that the integration flow is reusable.

Fig. 7 - Externalized parameters

⚠️ When developing the integration flow I faced an issue when posting the payload to BigQuery (Open Connector). Given that I will be creating multiple records in a single request, I need to post the file as a . When posting the file, I was always getting the error below. I followed mattisebastian advice to replace the\nwith\r\n, but still was unable to successfully post the message using the Open Connectors adapter. Hence why I end up using the HTTP adapter and constructing/cleaning the payload (Set Content-Type and form-data elements content modifier) in the integration flow.

com.sap.it.rt.adapter.openconnectors.exceptions.OpenConnectorsException: Error occured in Http Request :

com.sap.it.rt.adapter.openconnectors.exceptions.OpenConnectorsException: Invalid Multipart Formdata Payload, cause:

com.sap.it.rt.adapter.openconnectors.exceptions.OpenConnectorsException: Invalid Multipart Formdata Payload

Integration Process

The integration flow includes an integration process that can start on a schedule and a looping process call to handle the SAP Ariba response pagination. The loop will stop when the header pageToken value is STOP. This is handled by the script responsible of processing the SAP Ariba API response.

Fig. 8 - Looping process conditions

Retrieve Analytical Reporting data from SAP Ariba (Local integration process)

This local integration process retrieves the analytical data from SAP Ariba and sending it to BigQuery. Below, the details on what each block in the process is doing:

- Set Ariba dateFilter parameter (JS script): Specify the date filter expected when calling the view template. The dateFilter included in the script below has been hardcoded for simplicity purposes. In a production scenario this can be dynamically set based on your reporting needs and how often the integration flow will run.

importClass(com.sap.gateway.ip.core.customdev.util.Message);

importClass(java.util.HashMap);

function processData(message) {

// Set the value for filters query parameter

message.setHeader("dateFilter", '{"createdDateFrom":"2019-07-01T00:00:00Z","createdDateTo":"2020-06-05T00:00:00Z"}');

return message;

}- Ariba API Key header: Creates the 'apiKey' header which is required when calling the SAP Ariba APIs. It sets the value specified for the

{{Ariba_APIKey}}external parameter. - GET analytical reporting data (HTTP to SAP Ariba):

- Process SAP Ariba response (JS script): This script checks for the

PageTokenvalue in the API response and handles its value. This will be used to indicate if the looping process should continue or finish. It also prepares the file that the Open Connector BigQuery instances expects. The contents of the file expects a JSON structure per line and every line will be the equivalent of a record in BigQuery.

importClass(com.sap.gateway.ip.core.customdev.util.Message);

importClass(java.util.HashMap);

function processData(message) {

var messageLog = messageLogFactory.getMessageLog(message)

//Parsing body to JSON

var body = JSON.parse(message.getBody(new java.lang.String().getClass()));

/* ===========

Handle PageToken

=============*/

// Retrieving PageToken from payload if one exists

if("PageToken" in body) {

messageLog.setStringProperty("PageToken", body['PageToken']);

message.setHeader("pageToken", body["PageToken"]);

} else {

messageLog.setStringProperty("PageToken", "NONE!");

message.setHeader("pageToken", "STOP");

}

/* ===========

Create payload

=============*/

var fileContents = "";

var i = 0;

var arr = body['Records'];

for(var x = 0; x < arr.length; x++) {

var record = arr[x];

messageLog.setStringProperty("record", record);

i += 1;

// Create JSON line document

fileContents += '{"Status": "' + record['Status'] + '","InternalId": "' + record['InternalId'] + '","Title": "' + record['Title'] + '"}\n';

}

messageLog.setStringProperty("TotalRecordsProcessed", i);

message.setBody(fileContents);

return message;

}- Set Content-Type and form-data elements: As mentioned before, here is where I set the Content-Type and payload.

- Message Header: Set Content-Type to "multipart/form-data; boundary=----WebKitFormBoundary7MA4YWxkTrZu0gW". The same boundary is specified in the message body.

- Message Body: Set type to Expression and the payload below as Body.

------WebKitFormBoundary7MA4YWxkTrZu0gW

Content-Disposition: form-data; name="file"; filename="payload.json"

Content-Type: application/json

${body}

------WebKitFormBoundary7MA4YWxkTrZu0gW--

- Set Authorization and cleaning payload (Groovy script): Given the problems faced when using the Open Connector adapter, I end up retrieving the Open Connector credentials from the SecureStoreService and setting the Authorization header. Also, end up cleaning the payload as suggested by mattisebastian.

import com.sap.gateway.ip.core.customdev.util.Message;

import com.sap.it.api.ITApiFactory;

import com.sap.it.api.securestore.SecureStoreService;

import com.sap.it.api.securestore.UserCredential;

import com.sap.it.api.securestore.exception.SecureStoreException;

def Message processData(Message message) {

def messageLog = messageLogFactory.getMessageLog(message);

/* ============

Set Authorization header

=============== */

def service = ITApiFactory.getApi(SecureStoreService.class, null);

if( service != null)

{

// Retrieve credential name from property

def credentialName = message.getProperties()["OC_Credential"];

//Get UserCredential containing user credential details

def credential = service.getUserCredential(credentialName);

message.setHeader("Authorization", new String(credential.getPassword()));

}

/* ============

Modify payload

=============== */

def bodyStr = message.getBody(java.lang.String) as String;

messageLog.setStringProperty("beforeBody", bodyStr);

// Replacing problematic characters

body = bodyStr.replaceAll("\n", "\r\n");

messageLog.setStringProperty("afterBody", body);

message.setBody(body);

return message;

}- POST table (HTTP to BigQuery): The address field value is set from the

{{OC_bulk_table_URL}}external parameter.

Fig. 9 - BigQuery HTTP adapter configuration

Now that all steps are completed, we deploy the integration flow and check the records created in BigQuery.

Fig. 10 - BigQuery table data

As we can see, we have replicated the SAP Ariba data to BigQuery. We can now use this dataset to create a report/dashboard in reporting tools that connect to BigQuery.

You've made it to the end of the post ? ?. Thank you. I've covered how we can retrieve data from the SAP Ariba APIs and implement pagination in SAP Cloud Platform Integration, by using the looping process call. Also, how we can leverage Open Connectors to communicate with external services, e.g. BigQuery. Finally, an integration flow that can handle the end-to-end process of extracting the data and sending it to BigQuery.

- SAP Managed Tags:

- SAP Integration Suite,

- SAP Ariba Extensibility,

- API,

- Open Connectors,

- SAP Ariba Strategic Sourcing Suite

Labels:

3 Comments

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

Labels in this area

-

Business Trends

113 -

Business Trends

12 -

Event Information

44 -

Event Information

3 -

Expert Insights

18 -

Expert Insights

25 -

Life at SAP

32 -

Product Updates

253 -

Product Updates

27 -

Technology Updates

82 -

Technology Updates

14

Related Content

- Discover SAP Ariba REST API Try Out feature – an essential yet overlooked tool! in Spend Management Blogs by SAP

- SAP Ariba Integration scenarios with SAP S/4HANA Public Cloud system in Spend Management Blogs by SAP

- Navigating the Data-Driven World of Procurement Analytics: The Roadmap to Achieving Best-In-Class St in Spend Management Blogs by SAP

- SAP Ariba REST APIs: Gain a comprehensive understanding of rate limits! in Spend Management Blogs by SAP

- Integration of CIM with S/4 Hana Cloud in Spend Management Q&A

Top kudoed authors

| User | Count |

|---|---|

| 2 | |

| 1 | |

| 1 | |

| 1 | |

| 1 | |

| 1 | |

| 1 | |

| 1 |