- Extracting SAP Ariba Reporting API Data using SAP ...


11-14-2022

9:40 PM

Earlier this year I showed you how to extract SAP Ariba Analytical Reporting API data using SAP Integration Suite (CPI). Here is the link to the first part:

https://blogs.sap.com/2022/06/01/extracting-sap-ariba-reporting-api-data-using-sap-integration-suite...

Since then, we have built an end-to-end process that fully automates the extraction of SAP Ariba Analytical Reporting data with CPI and sends it to SAP Data Warehouse Cloud (DWC), with dashboards built in SAP Analytics Cloud (SAC) on top. This uses several capabilities of the SAP Business Technology Platform (BTP): the flexibility of CPI for integration, and the data warehousing and analytics capabilities of DWC and SAC to store and visualize the data.

The purpose of this blog is to provide a high-level overview of the components involved.

Here is a video of what the end product looks like with Spend Analytics:

https://www.youtube.com/watch?v=_QoZ0orFY5c

Architecture

We have made the full process available end to end with SAP products, without the need for a third-party tool. We can leverage the prebuilt content and stories in SAC to create dashboards easily. So far, we have used the Spend Visibility facts and dimensions to send the data into SAC via CPI and DWC.

However, we have also successfully configured the iFlow to work with any of the out-of-the-box Analytical Reporting API fact tables. This opens up the option of feeding other SAP Ariba reporting APIs into SAC via DWC. You can also establish this connection with a HANA DB instead.

Enablement

You can enable this by following the steps mentioned in Part 1 of this blog. We will have a live SAP Discovery Center Mission published soon with a step-by-step implementation guide, along with a public Git repository where you can access the iFlows we built and modify them to pull the data tables you want from the Analytical Reporting API.

The licenses needed to make this work are CPI, DWC, and SAC, alongside SAP Ariba to get access to the reporting data. If you have a CPEA BTP license, you can use CPI and SAP DWC in free tier mode to test out the business process.

We chose DWC because it integrates nicely with SAC, and there is currently no native connection between SAP Ariba and SAC. The data from SAP Ariba needs a data store to flow into, and DWC was the choice because users can leverage the prebuilt business content that is available in SAC.

For more information on how to connect SAC with DWC, please refer to this blog:

https://blogs.sap.com/2020/05/19/how-to-connect-sap-analytics-cloud-and-sap-data-warehouse-cloud/

Before you can import any SAP or partner content package, the space into which the content will imported, needs to be created. SAP content is currently imported into space SAP_CONTENT.

Therefore, create a space with the technical name “SAP_CONTENT” once before you import any content package. Use the description “SAP Content”. In addition, assign the user that is to import the content to space SAP_CONTENT.

You need the Administrator role to create a space.

Take note of the database user name and password. You need this information when you connect the SAP Integration Suite integration flow with DWC. Verify that you can open the Database Explorer with this user.

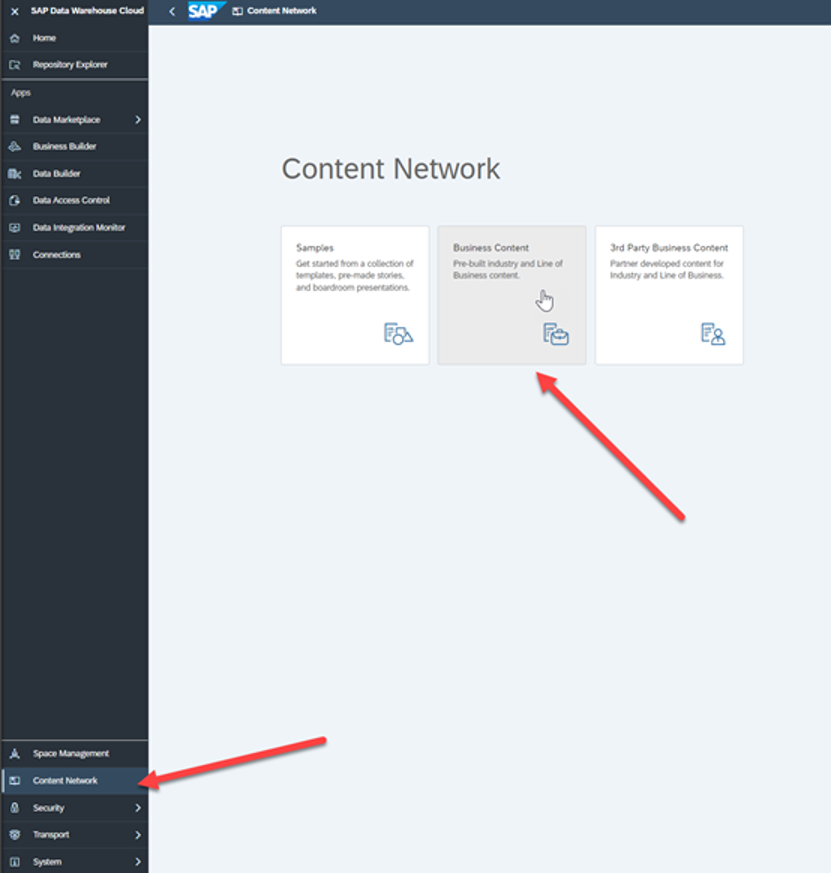

Navigate to the Content Network area of DWC and click on Business Content.

The available business content is displayed. Select the item “SAP Ariba: Spend Analysis”. The Overview screen for this package is opened.

If you download or deploy the Spend Analysis package for the first time, you don't need to change any of the default settings under ‘Import Options’. You will deploy all views manually. Because of this, check that ‘Deploy after Import’ is not selected, and click “Import”

DWC downloads the content in the background. When the download is finished, you can check the import summary screen to confirm the content was downloaded.

As a next step, the following tasks are to be performed:

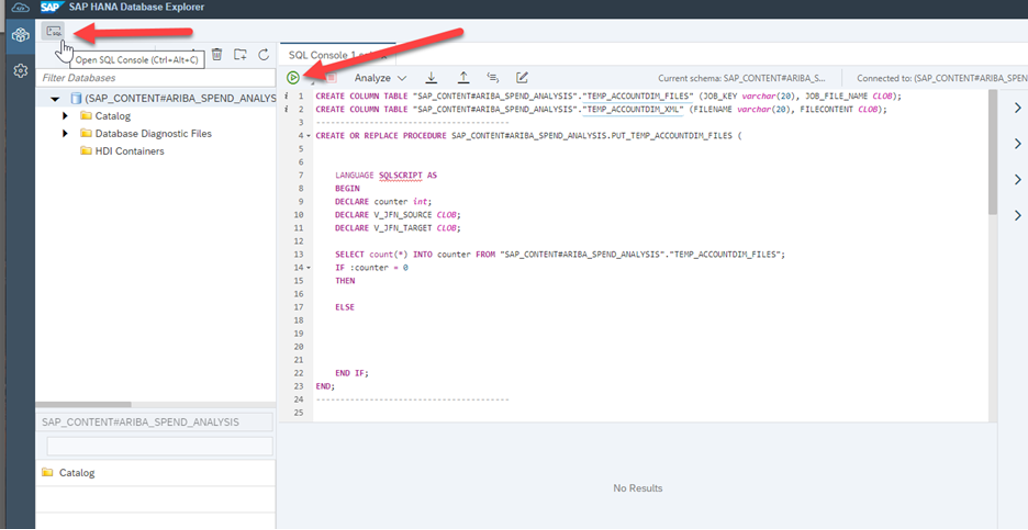

These tasks are performed using the SAP HANA DB Explorer in DWC.

Download or copy the following 10 SQL scripts:

The ISM_BASE_TABLE_CREATES script creates the 10 final destination tables in that SAP HANA system that is the target system for the SAP Ariba data. The script creates 8 dimension and 2 fact tables.

The other 10 scripts create the temporary tables and stored procedures for each dimension and fact table. These tables are are reused by the SAP Integration Suite integration flow during the data transfer.

Perform the following steps for each SQL script:

To create all base tables. repeat these steps for each downloaded SQL script file.

With the previous steps, you successfully created the SAP HANA Cloud tables (input layer). Now, you can deploy the DWC views ().

Navigate to the Repository Explorer and select the SAP & Partner Content space.

If you’ve downloaded other business content packages into this space, you can reduce the list of views to be deployed by selecting the ‘Not Deployed’ filter in the search pane.

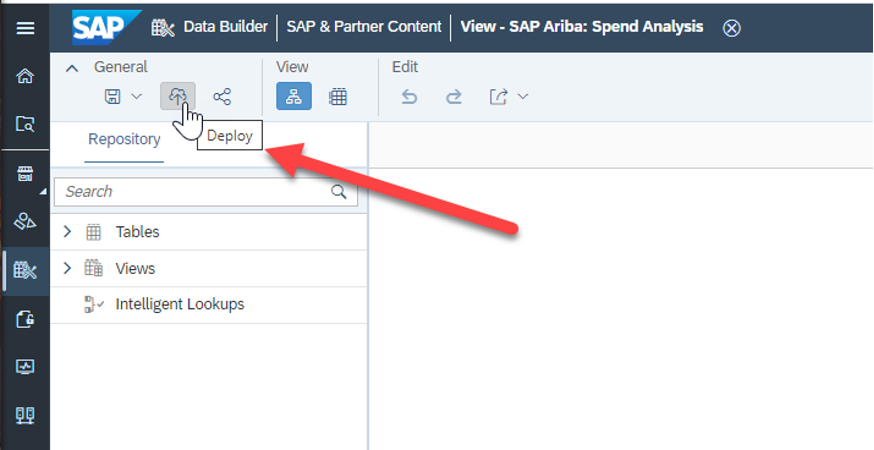

To deploy a view, click on the view name. The view name is displayed on the Data Builder screen for the view. On the Data Builder screen, click on the Deploy button for the view.

Caution: Avoid navigating back and forth from the list of views to the data builder when deploying these views (by right-clicking the view name in the list and selecting ‘open in new tab’). After you deployed the view, close that tab.

Deploy the input layer views first, followed by harmonization layer views, and, finally, the reporting layer views.

Deploy the following views:

Connecting SAP Integration Suite with DWC

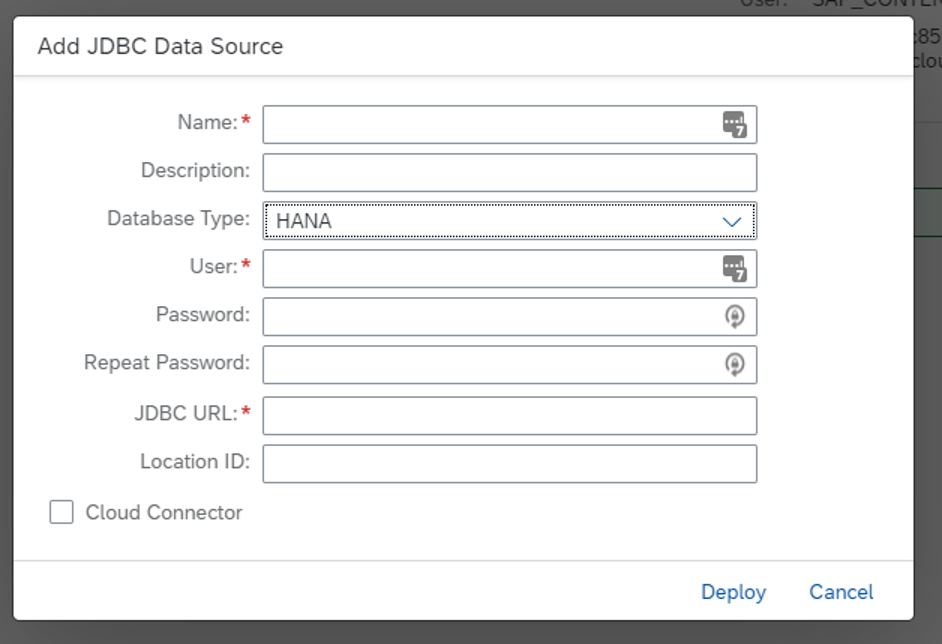

Create a JDBC connection to DWC. JDBC is the protocol over which the XML blobs are moved to DWC.

Log in to your SAP BTP cockpit and access CPI.

On the CPI homepage, select Design, Develop, and Operate Integration ScenariosAnalog to the previous step, click on the ‘Eye‘ icon (Monitor), then click on the JDBC Material tile in the Manage Security section.

Create a new JDBC connection by clicking the Add button.

Click Deploy.

Next Steps

I mentioned earlier as of now, we have an SAP Discovery Center Mission and the iFlows available on a public Git that will help you adopt this use case. We've increasingly seen customers who want to leverage the power of SAC with SAP Ariba, and we're more than happy to help guide your company to achieve this. If you're interested in deploying this, or learning more please reach out to me via email, and me and team and discuss with you further.


SAP Data Warehouse Cloud Space creation

SAP Data Warehouse Cloud Spaces

Before you can import any SAP or partner content package, you need to create the space into which the content will be imported. SAP content is currently imported into the space SAP_CONTENT.

Therefore, create a space with the technical name “SAP_CONTENT” once before you import any content package. Use the description “SAP Content”. In addition, assign the user that is to import the content to space SAP_CONTENT.

You need the Administrator role to create a space.

- For more information on the steps to create a space in DWC, go to

- Assign a user to the space in the ‘Member Assignment’ section. You need this user to test the final content package in SAP Analytics Cloud. For more instructions, go to Assign Members to Your Space

- Create a database user in the Database Users section. You need this user to access the SAP HANA Cloud layer and run the SQL Table Creation scripts (needed for the Inbound Layer objects for the content). For more instructions, go to Create a Database User

Take note of the database user name and password. You need this information when you connect the SAP Integration Suite integration flow with DWC. Verify that you can open the Database Explorer with this user.

SAP Data Warehouse Cloud content download

Navigate to the Content Network area of DWC and click on Business Content.

The available business content is displayed. Select the item “SAP Ariba: Spend Analysis”. The Overview screen for this package is opened.

If you are downloading or deploying the Spend Analysis package for the first time, you don't need to change any of the default settings under ‘Import Options’. Because you will deploy all views manually, check that ‘Deploy after Import’ is not selected, then click “Import”.

DWC downloads the content in the background. When the download is finished, you can check the import summary screen to confirm the content was downloaded.

SAP Data Warehouse Cloud Create Table Scripts

As a next step, the following tasks are to be performed:

- Building the SAP HANA Cloud base tables in the DWC system

- Building the stored procedures that handle parts of the data orchestration

These tasks are performed using the SAP HANA DB Explorer in DWC.

Download or copy the following 11 SQL scripts (one base table script plus 10 dimension and fact scripts):

- ISM_BASE_TABLE_CREATES

- ISM_Script_Account_Dim.sql

- ISM_Script_CompanySite_Dim.sql

- ISM_Script_Contract_Dim.sql

- ISM_Script_CostCenter_Dim.sql

- ISM_Script_InvoiceLineItem_Fact.sql

- ISM_Script_Part_Dim.sql

- ISM_Script_POLineItem_Fact.sql

- ISM_Script_SourceSystem_Dim.sql

- ISM_Script_Supplier_Dim.sql

- ISM_Script_UNSPCS_Dim.sql

The ISM_BASE_TABLE_CREATES script creates the 10 final destination tables in the SAP HANA system that is the target for the SAP Ariba data: 8 dimension tables and 2 fact tables.

The other 10 scripts create the temporary tables and stored procedures for each dimension and fact table. These tables are reused by the SAP Integration Suite integration flow during the data transfer.
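
Before pasting the scripts into the SQL console, it can help to confirm that every file from the list above was actually downloaded. The following is a minimal sketch in Python, not part of the delivered content: the function names and the idea of a local download folder are assumptions made for illustration, and the file names are copied from the list above.

```python
from pathlib import Path

# File names copied from the script list above. ISM_BASE_TABLE_CREATES is
# listed without an extension, so the checks below compare file stems.
EXPECTED_SCRIPTS = [
    "ISM_BASE_TABLE_CREATES",
    "ISM_Script_Account_Dim.sql",
    "ISM_Script_CompanySite_Dim.sql",
    "ISM_Script_Contract_Dim.sql",
    "ISM_Script_CostCenter_Dim.sql",
    "ISM_Script_InvoiceLineItem_Fact.sql",
    "ISM_Script_Part_Dim.sql",
    "ISM_Script_POLineItem_Fact.sql",
    "ISM_Script_SourceSystem_Dim.sql",
    "ISM_Script_Supplier_Dim.sql",
    "ISM_Script_UNSPCS_Dim.sql",
]


def missing_scripts(folder):
    """Return the expected script names that have no matching file in folder.

    `folder` is a hypothetical local download directory (a pathlib.Path).
    """
    present = {p.stem for p in folder.iterdir() if p.is_file()}
    return [name for name in EXPECTED_SCRIPTS if Path(name).stem not in present]


def count_by_kind(names):
    """Count dimension and fact scripts based on the _Dim / _Fact suffix."""
    stems = [Path(name).stem for name in names]
    dims = sum(1 for stem in stems if stem.endswith("_Dim"))
    facts = sum(1 for stem in stems if stem.endswith("_Fact"))
    return dims, facts
```

Calling `missing_scripts(Path("downloads"))` on a hypothetical `downloads` folder returns the names still to be fetched, and `count_by_kind(EXPECTED_SCRIPTS)` gives the split between dimension and fact scripts, which should line up with the 8 dimension and 2 fact tables described above.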

Perform the following steps for each SQL script:

- Navigate to the SAP_CONTENT space in your DWC system.

- Navigate to Space Management -> Database Users section. Select the previously created database user and launch “Open Database Explorer”. A new tab with the SAP HANA Database Explorer for SAP HANA Cockpit is opened.

- Open a new SQL console.

- Paste the SQL script from the downloaded file into the console.

- Click RUN (green circled arrow in the console).

To create all base tables and stored procedures, repeat these steps for each downloaded SQL script file.

SAP Data Warehouse Cloud Deploy Content

Deploying SAP Data Warehouse Cloud Business Content

With the previous steps, you have created the SAP HANA Cloud tables (input layer). Now you can deploy the DWC views.

Navigate to the Repository Explorer and select the SAP & Partner Content space.

If you’ve downloaded other business content packages into this space, you can reduce the list of views to be deployed by selecting the ‘Not Deployed’ filter in the search pane.

To deploy a view, click on the view name. The view name is displayed on the Data Builder screen for the view. On the Data Builder screen, click on the Deploy button for the view.

Caution: Avoid navigating back and forth between the list of views and the Data Builder when deploying these views. Instead, right-click the view name in the list and select ‘Open in New Tab’. After you have deployed the view, close that tab.

Deploy the input layer views first, followed by harmonization layer views, and, finally, the reporting layer views.

Deploy the following views:

Input Layer Views – technical names

- SAP_PROC_SA_IL_AccountDim

- SAP_PROC_SA_IL_SupplierDim

- SAP_PROC_SA_IL_PartDim

- SAP_PROC_SA_IL_CompanySiteDim

- SAP_PROC_SA_IL_UNSPSCDim

- SAP_PROC_SA_IL_ContractDim

- SAP_PROC_SA_IL_SourceSystemDim

- SAP_PROC_SA_IL_CostCenterDim

- SAP_PROC_SA_IL_POLineItemFact

- SAP_PROC_SA_IL_InvoiceLineItemFact

Harmonization Layer Views – technical names

- SAP_PROC_SA_HL_PPA_Variance

- SAP_PROC_SA_HL_PPV_Variance

- SAP_PROC_SA_HL_SOC_Variance

- SAP_PROC_SA_HL_SupplierDim

Reporting Layer Views – technical names

- SAP_PROC_SA_RL_PART_OPTIMIZATI

- SAP_PROC_SA_RL_SPEND_ANALYSIS

Entity Relationship diagram – technical name

- SAP_PROC_SA_ER_DEPLOY
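
The layer of each view can be read from its technical name (IL, HL, RL), so the deployment order described above can be turned into a simple checklist. This is only an illustrative sketch in Python: the helper names are made up, and placing the entity relationship diagram (ER) last is an assumption, since the text only states the order of the three view layers.

```python
# Layer codes taken from the technical names listed above.
# Putting ER (entity relationship diagram) last is an assumption.
LAYER_ORDER = {"IL": 0, "HL": 1, "RL": 2, "ER": 3}


def layer_of(view_name):
    """Extract the layer code, e.g. 'IL' from 'SAP_PROC_SA_IL_AccountDim'."""
    # Names follow the pattern SAP_PROC_SA_<layer>_<object>,
    # so the layer code is the fourth underscore-separated token.
    return view_name.split("_")[3]


def deployment_order(view_names):
    """Sort views so input layer comes first, then harmonization, then reporting.

    sorted() is stable, so views within the same layer keep their input order.
    """
    return sorted(view_names, key=lambda name: LAYER_ORDER[layer_of(name)])
```

For example, passing a mixed list such as `["SAP_PROC_SA_RL_SPEND_ANALYSIS", "SAP_PROC_SA_IL_SupplierDim", "SAP_PROC_SA_HL_SupplierDim"]` to `deployment_order` returns the input layer view first, the harmonization layer view second, and the reporting layer view last.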

Connecting SAP Integration Suite with DWC

Create a JDBC connection to DWC. JDBC is the protocol over which the XML blobs are moved to DWC.

Log in to your SAP BTP cockpit and access CPI.

On the CPI homepage, select Design, Develop, and Operate Integration Scenarios. Then click on the ‘Eye’ icon (Monitor), and click on the JDBC Material tile in the Manage Security section.

Create a new JDBC connection by clicking the Add button.

Name the JDBC connection ‘Data Warehouse Cloud_HANACLOUD’, and fill in the JDBC URL, User, and Password fields. You can find this information in DWC, in the Database User Details information box. To open this box, click the info icon to the right of the DB user in the DWC space.

Click Deploy.
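
If you need to assemble the JDBC URL yourself from the host and port shown in the Database User Details box, the sketch below shows one way to do it in Python. It assumes the usual SAP HANA JDBC URL shape (`jdbc:sap://<host>:<port>/?encrypt=true`) and port 443 as the default; the function name and the example host are hypothetical, so check the result against the values DWC displays for your database user.

```python
def hana_jdbc_url(host, port=443, encrypt=True):
    """Build a JDBC URL of the form jdbc:sap://<host>:<port>/?encrypt=true.

    The URL shape and the default port are assumptions; verify them against
    the Database User Details shown in your DWC space.
    """
    if not host:
        raise ValueError("host must not be empty")
    url = f"jdbc:sap://{host}:{port}/"
    if encrypt:
        # Request an encrypted (TLS) connection.
        url += "?encrypt=true"
    return url
```

With a placeholder host, `hana_jdbc_url("my-instance.example.com")` yields `jdbc:sap://my-instance.example.com:443/?encrypt=true`, which is the value you would paste into the JDBC URL field.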

Next Steps

As I mentioned earlier, an SAP Discovery Center Mission and the iFlows on a public Git repository will help you adopt this use case. We are seeing more and more customers who want to leverage the power of SAC with SAP Ariba, and we are more than happy to help guide your company to achieve this. If you're interested in deploying this or learning more, please reach out to me via email, and my team and I can discuss it with you further.

