- SAP Community

- Products and Technology

- Additional Blogs by SAP

- Mastering Concurrency: Unveiling the Magic of Go's...

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

What the article is about?

By now, Golang's reputation for the exceptional concurrency management using goroutines has likely reached your ears. These little powerhouses are not only efficient but also cost-effective, scalable to millions, all thanks to Go's distinctive design and scheduler.

How exactly does Go master the art of concurrency?

Let's explore the inner workings of the Go Scheduler to uncover the magic behind its capabilities.

This article extends its utility to every developer, irrespective of the technology stack they wield. The patterns, ideas, and creative insights from the Go Scheduler transcend language boundaries, enriching your day-to-day developer experience across all realms.

Go Scheduler

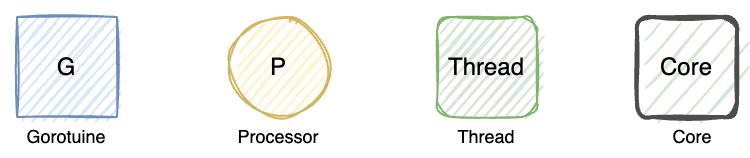

We are going to start with some crucial element which we should understand their existence and role.

Annotation

System Calls

System calls act as the vital communication channel between user-level programs and the heart of the operating system, the Kernel. Think of them as the carefully crafted interfaces that allow applications to request essential services and resources from the underlying operating system.

It's similar to how web services present a user-friendly REST API, enabling seamless interaction between users and the service.

Threads

Threads, a fundamental concept in computer science, serve as the backbone of multitasking and parallel execution within modern computing systems.

Imagine your computer's processor as a powerhouse with multiple cores, each functioning as an independent processing unit capable of executing tasks autonomously.

Threads, in essence, provide an abstraction layer above these cores. Through this abstraction, we can create/delete threads within our program, enabling us to execute specific code on each thread. These threads, once created, are then managed and scheduled by the operating system's Kernel to run on individual cores.

Threads are essential in multitasking because they prevent chaos. In a computer, many programs run at once, all wanting to work on different tasks. If programs directly used cores, they could monopolize resources (or system deadlock), causing problems. They're created using system calls, allowing programs to work together without causing system issues.

Goroutines

A goroutine in Go is a lightweight, concurrent unit of execution.

It's similar to a thread in concept but more efficient.

Goroutines allow programs to perform tasks concurrently, meaning they can run independently alongside other goroutines, enabling efficient use of resources.

Goroutines are not directly mapped to operating system threads, the Go runtime manages a pool of operating system threads to execute goroutines. These threads are responsible for running the goroutines, providing the necessary parallelism and concurrency for Go programs.

How the whole thing works as flow

The M:N Scheduler

In Go's M:N scheduler, M goroutines are efficiently mapped to N kernel threads.

So, users can create however many goroutines they want, but we have control over a finite set of threads, and we schedule their goroutines on a thread for a certain period of time.

The M:N Go Scheduler provides flexibility as millions of goroutines can be intelligently managed and run on a smaller set of kernel threads, optimizing system resources and allowing for high levels of concurrency. This flexibility and efficient utilization of threads are key features that contribute to Go's ability to handle massive numbers of concurrent tasks efficiently.

Let's mention the difference between concurrency and parallelism

Concurrency is when two or more tasks can start, run, and complete in overlapping time periods. It doesn't necessarily mean they'll ever both be running at the same instant. Check the picture below.

Example:

- Multitasking on a single-core machine. The Go Scheduler gives time on different goroutines at overlapping time on the thread.

One Core System

Parallelism is when tasks literally run at the same time, e.g., on a multicore processor.

Example:

- Parallelism and concurrency on multicore system

Two Core System

States of Goroutines

A goroutine can be in one of three states: Waiting, Runnable or Executing.

Running: This means the Goroutine has been placed on a Thread and is executing its instructions.

Runnable: A goroutine is runnable when it is ready to be executed and waiting for the kernel thread to execute it.

Waiting: This means the Goroutine has stopped and waits for event to happen - reasons:

- synchronization calls (atomic, mutex, channel operations, system calls)

- asynchronous calls (network calls)

- long-running (explained below)

OS Scheduler

Traditional operating systems use preemptive schedulers, meaning the system can interrupt tasks and switch to another task without the program's explicit consent. The kernel is making decisions - you can’t predict what the scheduler is going to do at any given time.

Go Scheduler

Up-to Go 1.10

Cooperative Preemption

Go has used cooperative preemption with safe-points only at function calls. This means that Go can only switch between concurrently-executing goroutines at specific points. (As switching G1 off and G2 on - diagram above)

From execution point of view you can give goroutine processor time (execution time) only on specific events (safe-points) which are function calls.

Real Life Example:

Imagine you have a list of tasks to do, like cooking, cleaning, and shopping. In the older versions of Go, these tasks (goroutines) could only switch when the person doing them took a break.

In our analogy, these breaks are like "break times" during work. When you're cooking, you might take a break after chopping vegetables.

Similarly, in Go, tasks (goroutines) could only switch when they were between tasks, like when one goroutine finished a task/function (like chopping vegetables) and was about to start another.

There were many problems with such approach as evolving of the Go Runtime introduced situation where "break" could not occur. What happens if you can not take a break from cleaning but the dish is on fire?

Example: If you run any tight loops that are not making function calls, you will cause latencies within the Go Scheduler and garbage collection.

You can read more about original Go paper here or here

What is now

Non-Cooperative Preemption

Go introduced non-cooperative preemption because of the problems mentioned above.

In non-cooperative preemption, the Go runtime can forcibly pause a running goroutine even if it doesn't explicitly yield control. This preemptive behavior ensures that no single goroutine can monopolize the CPU for an extended period.

Benefits of Non-Cooperative Preemption:

- If one goroutine is stuck in a loop or a long-running operation, the Go Scheduler can pause it, allowing other tasks to proceed

- By preventing any single goroutine from hogging the CPU, non-cooperative preemption ensures fair execution of tasks. Each goroutine gets a chance to run, leading to better utilization of system resources and a smoother user experience

- The Go Scheduler can efficiently manage the execution of goroutines without relying solely on their cooperation.

How does the scheduler work

Tracking of goroutines

There are two different run queues in the Go Scheduler:

- Global Run Queue (GRQ)

- Local Run Queue (LRQ)

When a goroutine begins, it joins a common waiting area called the Global Run Queue.

Later on, a system component (analysed below - processors) assigns it to a specific, smaller waiting area called the Local Run Queue.

Each processing thread primarily deals with its own local queue, choosing a goroutine from there to execute. This approach efficiently distributes tasks among threads, ensuring that each thread manages its own queue independently.

Because each local queue is handled by just one thread, there's no need for complex locking mechanisms like mutexes, simplifying the process and improving performance.

Both queues follows the FIFO (First in First Out) Principle.

The Problem

It's all good for now, but what will happen if Go makes a system call that might take a long time (like reading from a file)?

During this time, the thread handling that goroutine has no new tasks and is essentially inactive because it waits (blocked).

This can lead to a problem:

- If many threads are waiting for system calls (or other blocking operations) to finish, our program becomes unproductive as they can not take other goroutines to execute

To solve this issue, we need a way for threads to continue working on other tasks while waiting for system calls to complete.

To solve this problem, we add another layer of abstraction, called the "processor".

The "Processor"

"The Processor" entity is in charge of managing the interaction between the local run queues, the global run queue, and the kernel threads.

How it looks the Go Scheduler with processor

Processor States:

- Free Processor - it can acquire an idle thread or start a new thread to execute a goroutine.

- Running Processor - will run thread until it is blocked by goroutine, then will decide what to do

- Blocked Processor - means that the thread is blocked, and it could be detached (this is called handoff explained below)

The number of processors is the maximum number of GOMAXPROCS

What affects the Go Scheduler decisions

We have talked about the higher level concepts but how our application development affects the Go Scheduler and when it has to do a decision.

There are a couple of "events" that occur in your Go programs that allow the Go Scheduler to make scheduling decisions (put a goroutine in queue, give goroutine processor time, take processor time from goroutine, attach/detach thread, etc.)

- The use of the keyword

go - Garbage Collection

- Synchronization calls (atomic, mutex, channel operations, system calls)

- Asynchronous calls (network calls)

- Long-running (not exactly event but part of scheduling decisions)

This does not mean it will always happen on one of these events. It means the Go Scheduler gets the opportunity.

Handoff

We have seen how goroutines end up running on the kernel threads, but what happens when a thread blocks itself?

For instance, if a goroutine does a system call, it will block the kernel thread causing the other goroutines in the local run queue to be starved.

When this happens, the Go Runtime will detach/release the thread from "the processor", and it will be assigned to an existing idle thread or will create new thread.

Go Runtime keeps already created threads without work idle as it is most cost-efficient that creating/deleting such. The number of the threads depends on the load, goroutines/cores, so it's impossible to say how much it would keep as this is very generic question answer.

Switching tasks (or handoffs) can be costly, especially when creating new threads. For system calls, some are quick, and threads don't stay blocked for long. To optimize, Go's runtime handles this intelligently.

- Go Scheduler will do immediate handoff if it knows that the syscall will be blocking for a long time.

- In other cases, it will let the processor be in a block (in shorter operations for example). It will then set the status to reflect that it is in a syscall.

How Go Scheduler understand that syscall is ready and what it does with such processes

All I/O must be done through syscalls.

The syscalls in Go are always called through code that is controlled by the runtime.

This means that when you call a syscall, instead of just calling it directly (thus giving up control of the thread to the kernel), the runtime is notified of the syscall you want to make, and it does it on the goroutine's behalf.

This allows it to let the goroutine know later once the result is ready.

In other words Go Scheduler

- Know which are dislocated thread with goroutines

- When a goroutine makes a system call, it enters a state where it's waiting for the operating system to complete the requested operation (e.g., reading from a file, waiting for I/O).

Operating systems often use signals to indicate when system calls are completed. When the operating system signals that a system call is done, the Go runtime can respond accordingly.

When you return from handed-off syscall Go Scheduler will check:

- If the old processor (the one that was handed off) is available, the goroutine will be assigned to its LRQ

- If the older processor is NOT available, the goroutine will associate itself with any idle processor

- If there are no idle processors, the goroutine will be added to the global run queue.

Network Queue

Network poller is component like a traffic director, especially helpful when dealing with tasks like asynchronous system calls such as network I/O.

How it works:

Imagine you have a busy street (your computer) with various vehicles (goroutines) moving around. Now, sometimes, a vehicle (goroutine) needs to wait for something to happen, like a green signal at a junction (network request completion). In regular scenarios, this waiting could create a jam (blocking the system).

Here's where the network poller steps in:

- When a goroutine is waiting for a network request (like waiting at the junction), it's added to the network poller.

- By doing this, the goroutine doesn't block the main road (kernel thread). Instead, it patiently waits in the network poller queue, allowing other goroutines to keep moving freely.

If there is a runnable goroutine in the network poller, which finished it network I/O waiting the processor will add it to its local run queue.

Work-Stealing "The Order of Stealing"

The work stealing is a technique that is used to balance the load between the processors and respectively between kernel threads.

The order of work is as follows:

- Check once in a while Global Run Queue once to ensure fairness (higher priority - step 1)

- It only checks the run queue 1/61 of the time. This is done to avoid the overhead of checking the run queue all the time.

- Starvation - There is a possibility that goroutines keep getting filled up in the Global Run Queue and each Processor keeps working on its own Local Run Queue. This can cause the goroutines in the global run queue to essentially starve. That's why it periodically checks there with priority.

- Otherwise, two goroutines can completely occupy the local queue by constantly respawning each other.

- It is set to 61 so that it is not too low to result in excessive checking of the global run queue, nor too high to result in starvation of goroutines in the queue.

- Another reason is that 61 is a prime number and prime numbers are beneficial for hashing purposes.

- Polls from Local Run Queue

- Polls from the Global Run Queue (that is executed if nothing in the LRQ)

- Polls Network Queue

- Steals from another Processor

Steals from another "Processor"

If the global run queue, local run queue, and network poller are empty

The Processor 2 is idle. It will check LRQ, GRQ and NQ which are empty

Then it will then have to randomly pick a processor to steal the half of work from.

It took half of the work from processor 1

Reference to the order of work stealing execution - runtime/proc.go.

To prove that it is as describe above, check the code snippet taken from Go Scheduler below.

// Check the global runnable queue once in a while to ensure fairness.

// Otherwise two goroutines can completely occupy the local runqueue

// by constantly respawning each other.

if pp.schedtick%61 == 0 && sched.runqsize > 0 {

lock(&sched.lock)

gp := globrunqget(pp, 1)

unlock(&sched.lock)

if gp != nil {

return gp, false, false

}

}

// local runq

if gp, inheritTime := runqget(pp); gp != nil {

return gp, inheritTime, false

}

// global runq

if sched.runqsize != 0 {

lock(&sched.lock)

gp := globrunqget(pp, 0)

unlock(&sched.lock)

if gp != nil {

return gp, false, false

}

}

// Poll network.

// This netpoll is only an optimization before we resort to stealing.

// We can safely skip it if there are no waiters or a thread is blocked

// in netpoll already. If there is any kind of logical race with that

// blocked thread (e.g. it has already returned from netpoll, but does

// not set lastpoll yet), this thread will do blocking netpoll below

// anyway.

if netpollinited() && netpollWaiters.Load() > 0 && sched.lastpoll.Load() != 0 {

if list := netpoll(0); !list.empty() { // non-blocking

gp := list.pop()

injectglist(&list)

casgstatus(gp, _Gwaiting, _Grunnable)

if traceEnabled() {

traceGoUnpark(gp, 0)

}

return gp, false, false

}

}

// Spinning Ms: steal work from other Ps.

//

// Limit the number of spinning Ms to half the number of busy Ps.

// This is necessary to prevent excessive CPU consumption when

// GOMAXPROCS>>1 but the program parallelism is low.

if mp.spinning || 2*sched.nmspinning.Load() < gomaxprocs-sched.npidle.Load() {

if !mp.spinning {

mp.becomeSpinning()

}

gp, inheritTime, tnow, w, newWork := stealWork(now)

if gp != nil {

// Successfully stole.

return gp, inheritTime, false

}

if newWork {

// There may be new timer or GC work; restart to

// discover.

goto top

}

now = tnow

if w != 0 && (pollUntil == 0 || w < pollUntil) {

// Earlier timer to wait for.

pollUntil = w

}

}Preemption

What happens when there is long-running goroutine?

This could potentially make other goroutines to starve in the Local Run Queue.

Take for example cashiers (threads):

Both cashier has very loaded first clients. This will slow down the whole system (two pipelines, two threads)

That's why we should do something, we should put aside "long-running clients" so we can optimize the pipelines.

With that optimization we will process quickly the other ones which should not starve. Of course there are scenarios where the second client could be also "long-running" which should have the same fate or not.

Who identifies such long running goroutines?

The Go standard library dedicates a thread to watch after your application and help with bottlenecks your program could face called sysmon

Stands for system monitor and it is not linked to any Processor, Thread, Goroutine in our model.

It is not taken into account in the Go Scheduler too. It is always running but is smart enough to not consume resources when there is nothing to do. Its cycle time is dynamic and depends on the current activity of the running program.

Any goroutine running for more than 10ms is marked as preemptible (soft limit). Therefore, the Go Scheduler can decide if it should be return to the LRQ or continue running on the thread. If we are in situation as the example above both, first clients (goroutines) will be put in the local queue as there are other less time-consuming clients.

That's how the Go Scheduler tackles long running goroutines

Conclusion

Certainly, the Go scheduler is a critical component of Go's concurrency model. It efficiently manages goroutines, ensuring fair execution and optimal resource usage. By utilizing non-cooperative preemption, local and global, network run queues, work-stealing, the scheduler enables millions of concurrent tasks to run efficiently, making Go a powerhouse for concurrent programming.

- SAP Managed Tags:

- Research and Development

- Cloud Integration - Adapter Development - Multiple Workers in Technology Q&A

- SAP IQ Best Practices From The Field in Technology Blogs by SAP

- Concurrency patterns for Hyperledger Fabric Go chaincode in Technology Blogs by SAP

- SAP DS (BODS) : Dynamic Job execution (Concurrently) in SAP Data services in Technology Q&A