- SAP Community

- Products and Technology

- Technology

- Technology Blogs by SAP

- Checking HANA Cloud Vector Engine performances

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

I am composing this blog to check the performance of HANA Vector Store, with particular emphasis on the following aspects:

- The quantum of memory utilized to store embeddings as well as to conduct a search.

- The duration required to perform a search.

Summary

Here are the summary of environment, data and measured memory and time. Search time will be shorten when vector indexing is implemented. One of SAP HANA's advantageous features is its attribute search capability. Users can perform similarity and attribute search simultaneously, resulting in a more efficient and effective search process.

- SAP HANA Cloud:

- Memory: 60 GB

- CPU: 4 vCPUs

- Version: 4.00.000.00.1710841718 (fa/CE2024.2)

- Data: 1024 dimension * 300,000 records

- Memory Usage of table: 13GB

- Search

- Memory Usage: 8.1MB(Consumed memory was smaller than calculated based on help doc.)

- Time: 97ms

Environment

Python on Google Colaboratory

As of 2024/4, python version is 3.10.12 on Google Colaboratory.

| Package | Version |

| hana_ml | 2.20.24031902 |

Jupyter Implementation to store embeddings

1. pip install

First of all, install hana_ml using pip.

!pip install hana_ml

2. import packages

Next, import required packages.

from hana_ml import ConnectionContext

from hana_ml.dataframe import create_dataframe_from_pandas

import pandas as pd

import numpy as np

3. Check SAP HANA Cloud Connection

Now check if SAP HANA Cloud is available.

cc= ConnectionContext(

address='<* * *>',

port='443', # 443 is usual

user='<* * *>',

password='<* * *>',

encrypt=True

)

print(cc.hana_version())

print(cc.get_current_schema())

You can see HANA Cloud version and your current schema name.

4.00.000.00.1710841718 (fa/CE2024.2)

<Your schema name>

Just define table name, dimension and row as constants.

TAB = 'VECTOR_TEST'

DIMENSION = 1_024

ROW = 300_000

4. Create random embeddings

Create random embeddings using numpy and pandas. It takes about 5 minutes.

df = pd.DataFrame({'ID': np.arange(ROW)})

df['VECTOR_STR'] = df.apply(lambda row: str(np.random.random(DIMENSION).astype('float32').tolist()), axis=1)

df['VECTOR'] = np.nan

Here are the dataframe info.

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 300000 entries,

0 to 299999 Data columns (total 3 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 ID 300000 non-null int64

1 VECTOR_STR 300000 non-null object

2 VECTOR 0 non-null float64

dtypes: float64(1), int64(1), object(1)

memory usage: 6.9+ MB5. Define table and upload data

Proceed to define a table and upload a dataframe into this newly defined table. Ordinarily, I would use the "create_table" function, however, as of April 2024, the data type REAL_VECTOR is unavailable. It takes about an hour.

def create_table(cc, sql, df):

if cc.has_table(TAB):

cc.drop_table(TAB)

cc.execute_sql(sql)

dfh = create_dataframe_from_pandas(

connection_context=cc,

pandas_df=df,

table_name=TAB,

append=True

)

cc.execute_sql(f'''UPDATE {TAB} SET VECTOR = TO_REAL_VECTOR(VECTOR_STR);''')

print(dfh.get_table_structure())

display(dfh.head(3).collect())

return dfh

#sql = f'''CREATE LOCAL TEMPORARY COLUMN TABLE {TAB}(

sql = f'''CREATE TABLE {TAB}(

ID INT,

VECTOR_STR NCLOB,

VECTOR REAL_VECTOR(1024));''' # create_table hana_ml 2.20.24031902 doesn't deal with REAL_VECTOR

dfh = create_table(cc, sql, df)

6. Similarity Search

Create vector search function and test the search function. You can use L2DISTANCE as well. It takes 857 ms, which includes network time between google colab and HANA. Later I search again from HANA SQL Console with printed SQL.

def run_vector_search(k=10):

query_vector = str(np.random.random(DIMENSION).astype('float32').tolist())

sql = f'''SELECT TOP {k} "ID",

"COSINE_SIMILARITY"("VECTOR", TO_REAL_VECTOR('{query_vector}')) as "COSINE_SIMILARITY"

FROM "{TAB}"

ORDER BY "COSINE_SIMILARITY"("VECTOR", TO_REAL_VECTOR('{query_vector}')) DESC'''

hdf = cc.sql(sql)

print(sql)

df_context = hdf.head(k).collect()

return df_context

df_context = run_vector_search()

print(df_context)

Here are the result of the search.

ID COSINE_SIMILARITY

0 157139 0.780948

1 227217 0.776527

2 231114 0.776151

3 87678 0.774138

4 116329 0.774049

5 38166 0.773831

6 220076 0.773783

7 253965 0.773703

8 134814 0.773081

9 256103 0.772673

7. Close HANA connection

Close HANA connection and the local temporary table is dropped automatically.

cc.close()

Performances

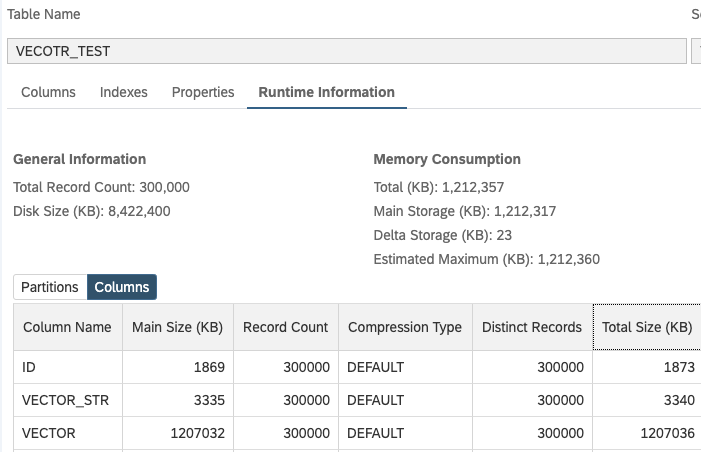

Memory usage of the table

Memory usage and time when searching

From SQL console, I executed the query printed on jupyter step 6. Consumed memory was smaller than calculated based on help doc.

Client elapsed time: 97.00 ms

Statement prepare time: 0.9140 ms elapsed time, 0.2370 ms CPU time

Statement execute time: 91.57 ms elapsed time, 297.9 ms CPU time

Peak memory consumed: 8.131 MB

Using Attribute search simultaneously

I have added the condition "id < 1000". In practice, conditions can be based on customer name, date, or any other relevant criteria. Then performance has improved.

- SAP Managed Tags:

- SAP HANA Cloud, SAP HANA database

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

-

ABAP CDS Views - CDC (Change Data Capture)

2 -

AI

1 -

Analyze Workload Data

1 -

BTP

1 -

Business and IT Integration

2 -

Business application stu

1 -

Business Technology Platform

1 -

Business Trends

1,658 -

Business Trends

103 -

CAP

1 -

cf

1 -

Cloud Foundry

1 -

Confluent

1 -

Customer COE Basics and Fundamentals

1 -

Customer COE Latest and Greatest

3 -

Customer Data Browser app

1 -

Data Analysis Tool

1 -

data migration

1 -

data transfer

1 -

Datasphere

2 -

Event Information

1,400 -

Event Information

69 -

Expert

1 -

Expert Insights

177 -

Expert Insights

322 -

General

1 -

Google cloud

1 -

Google Next'24

1 -

GraphQL

1 -

Kafka

1 -

Life at SAP

780 -

Life at SAP

13 -

Migrate your Data App

1 -

MTA

1 -

Network Performance Analysis

1 -

NodeJS

1 -

PDF

1 -

POC

1 -

Product Updates

4,576 -

Product Updates

367 -

Replication Flow

1 -

REST API

1 -

RisewithSAP

1 -

SAP BTP

1 -

SAP BTP Cloud Foundry

1 -

SAP Cloud ALM

1 -

SAP Cloud Application Programming Model

1 -

SAP Datasphere

2 -

SAP S4HANA Cloud

1 -

SAP S4HANA Migration Cockpit

1 -

Technology Updates

6,873 -

Technology Updates

454 -

Workload Fluctuations

1

- Understanding the Data Review steps in Onboarding and the impact on Employee Central in Technology Blogs by SAP

- Deliver Real-World Results with SAP Business AI: Q4 2023 & Q1 2024 Release Highlights in Technology Blogs by SAP

- RHEL AND SAP UPGRADE WITH COMPATIBILITY CHECKS #ATR in Technology Blogs by Members

- Authority check - better performance time (after select query or in select where condition) in Technology Q&A

| User | Count |

|---|---|

| 24 | |

| 9 | |

| 8 | |

| 7 | |

| 7 | |

| 6 | |

| 6 | |

| 6 | |

| 6 | |

| 6 |