Odatajs doesn't find any data in db table SCP Web IDE

on 07-10-2020 8:08 AM

Hello experts,

I am currently working on getting an OData service up and running in order to display data from a HANA table.

The challenge I am facing is that the OData service throws an error every time I run odatajs as an application.

The odatajs.xsodata file contains the following simple code:

service

{

"DummyData" as "scenarios";

}

Also, my workspace file structure looks like the following:

Does anyone have an idea how I can fix this error?

Would be much appreciated.

- SAP Managed Tags:

- Node.js,

- OData,

- SAP HANA,

- SAP Web IDE,

- SAP Workflow Management, workflow capability

Accepted Solutions (1)

Hi Maissa,

Your db design-time artefacts all have a "pending deployment" label. Build and deploy those first to create the DB objects.

You need to click the show hidden files button (eye icon) in the toolbar of your project (workspace) explorer. The config file should be in your db > src folder.

I found it and added the line of code that you gave me, but deployment failed with code 1, and it seems another adjustment has to be made in the .hdiconfig file.

The .hdiconfig file contains the following lines of code:

{

"minimum_feature_version": "1000",

"file_suffixes": {

"hdbapplicationtime": {

"plugin_name": "com.sap.hana.di.applicationtime"

},

"hdbcalculationview": {

"plugin_name": "com.sap.hana.di.calculationview"

},

"hdbconstraint": {

"plugin_name": "com.sap.hana.di.constraint"

},

"txt": {

"plugin_name": "com.sap.hana.di.copyonly"

},

"hdbdropcreatetable": {

"plugin_name": "com.sap.hana.di.dropcreatetable"

},

"hdbflowgraph": {

"plugin_name": "com.sap.hana.di.flowgraph"

},

"hdbfunction": {

"plugin_name": "com.sap.hana.di.function"

},

"hdbgraphworkspace": {

"plugin_name": "com.sap.hana.di.graphworkspace"

},

"hdbindex": {

"plugin_name": "com.sap.hana.di.index"

},

"hdblibrary": {

"plugin_name": "com.sap.hana.di.library"

},

"hdblogicalschema": {

"plugin_name": "com.sap.hana.di.logicalschema"

},

"hdbprocedure": {

"plugin_name": "com.sap.hana.di.procedure"

},

"hdbprojectionview": {

"plugin_name": "com.sap.hana.di.projectionview"

},

"hdbprojectionviewconfig": {

"plugin_name": "com.sap.hana.di.projectionview.config"

},

"hdbreptask": {

"plugin_name": "com.sap.hana.di.reptask"

},

"hdbresultcache": {

"plugin_name": "com.sap.hana.di.resultcache"

},

"hdbrole": {

"plugin_name": "com.sap.hana.di.role"

},

"hdbroleconfig": {

"plugin_name": "com.sap.hana.di.role.config"

},

"hdbsearchruleset": {

"plugin_name": "com.sap.hana.di.searchruleset"

},

"hdbsequence": {

"plugin_name": "com.sap.hana.di.sequence"

},

"hdbanalyticprivilege": {

"plugin_name": "com.sap.hana.di.analyticprivilege"

},

"hdbview": {

"plugin_name": "com.sap.hana.di.view"

},

"hdbstatistics": {

"plugin_name": "com.sap.hana.di.statistics"

},

"hdbstructuredprivilege": {

"plugin_name": "com.sap.hana.di.structuredprivilege"

},

"hdbsynonym": {

"plugin_name": "com.sap.hana.di.synonym"

},

"hdbsynonymconfig": {

"plugin_name": "com.sap.hana.di.synonym.config"

},

"hdbsystemversioning": {

"plugin_name": "com.sap.hana.di.systemversioning"

},

"hdbtable": {

"plugin_name": "com.sap.hana.di.table"

},

"hdbmigrationtable": {

"plugin_name": "com.sap.hana.di.table.migration"

},

"hdbtabletype": {

"plugin_name": "com.sap.hana.di.tabletype"

},

"hdbtabledata": {

"plugin_name": "com.sap.hana.di.tabledata"

},

"csv": {

"plugin_name": "com.sap.hana.di.tabledata.source"

},

"properties": {

"plugin_name": "com.sap.hana.di.tabledata.properties"

},

"tags": {

"plugin_name": "com.sap.hana.di.tabledata.properties"

},

"hdbtrigger": {

"plugin_name": "com.sap.hana.di.trigger"

},

"hdbvirtualfunction": {

"plugin_name": "com.sap.hana.di.virtualfunction"

},

"hdbvirtualfunctionconfig": {

"plugin_name": "com.sap.hana.di.virtualfunction.config"

},

"hdbvirtualpackagehadoop": {

"plugin_name": "com.sap.hana.di.virtualpackage.hadoop"

},

"hdbvirtualpackagesparksql": {

"plugin_name": "com.sap.hana.di.virtualpackage.sparksql"

},

"hdbvirtualprocedure": {

"plugin_name": "com.sap.hana.di.virtualprocedure"

},

"hdbvirtualprocedureconfig": {

"plugin_name": "com.sap.hana.di.virtualprocedure.config"

},

"hdbvirtualtable": {

"plugin_name": "com.sap.hana.di.virtualtable"

},

"hdbvirtualtableconfig": {

"plugin_name": "com.sap.hana.di.virtualtable.config"

},

"hdbcds": {

"plugin_name": "com.sap.hana.di.cds"

}

}

}

I'm not familiar with this config:

"minimum_feature_version": "1000",

How did you generate your HANA DB module? Via an SAP Web IDE template?

This is my .hdiconfig, generated by SAP Web IDE 6 months ago:

{

"plugin_version": "2.0.30.0",

"file_suffixes": {

"csv": {

"plugin_name": "com.sap.hana.di.tabledata.source"

},

"hdbafllangprocedure": {

"plugin_name": "com.sap.hana.di.afllangprocedure"

},

"hdbanalyticprivilege": {

"plugin_name": "com.sap.hana.di.analyticprivilege"

},

"hdbcalculationview": {

"plugin_name": "com.sap.hana.di.calculationview"

},

"hdbcds": {

"plugin_name": "com.sap.hana.di.cds"

},

"hdbcollection": {

"plugin_name": "com.sap.hana.di.collection"

},

"hdbconstraint": {

"plugin_name": "com.sap.hana.di.constraint"

},

"hdbdropcreatetable": {

"plugin_name": "com.sap.hana.di.dropcreatetable"

},

"hdbflowgraph": {

"plugin_name": "com.sap.hana.di.flowgraph"

},

"hdbfulltextindex": {

"plugin_name": "com.sap.hana.di.fulltextindex"

},

"hdbfunction": {

"plugin_name": "com.sap.hana.di.function"

},

"hdbgraphworkspace": {

"plugin_name": "com.sap.hana.di.graphworkspace"

},

"hdbhadoopmrjob": {

"plugin_name": "com.sap.hana.di.virtualfunctionpackage.hadoop"

},

"hdbindex": {

"plugin_name": "com.sap.hana.di.index"

},

"hdblibrary": {

"plugin_name": "com.sap.hana.di.library"

},

"hdbmigrationtable": {

"plugin_name": "com.sap.hana.di.table.migration"

},

"hdbprocedure": {

"plugin_name": "com.sap.hana.di.procedure"

},

"hdbprojectionview": {

"plugin_name": "com.sap.hana.di.projectionview"

},

"hdbprojectionviewconfig": {

"plugin_name": "com.sap.hana.di.projectionview.config"

},

"hdbreptask": {

"plugin_name": "com.sap.hana.di.reptask"

},

"hdbresultcache": {

"plugin_name": "com.sap.hana.di.resultcache"

},

"hdbrole": {

"plugin_name": "com.sap.hana.di.role"

},

"hdbroleconfig": {

"plugin_name": "com.sap.hana.di.role.config"

},

"hdbsearchruleset": {

"plugin_name": "com.sap.hana.di.searchruleset"

},

"hdbsequence": {

"plugin_name": "com.sap.hana.di.sequence"

},

"hdbstatistics": {

"plugin_name": "com.sap.hana.di.statistics"

},

"hdbstructuredprivilege": {

"plugin_name": "com.sap.hana.di.structuredprivilege"

},

"hdbsynonym": {

"plugin_name": "com.sap.hana.di.synonym"

},

"hdbsynonymconfig": {

"plugin_name": "com.sap.hana.di.synonym.config"

},

"hdbsystemversioning": {

"plugin_name": "com.sap.hana.di.systemversioning"

},

"hdbtable": {

"plugin_name": "com.sap.hana.di.table"

},

"hdbtabledata": {

"plugin_name": "com.sap.hana.di.tabledata"

},

"hdbtabletype": {

"plugin_name": "com.sap.hana.di.tabletype"

},

"hdbtextconfig": {

"plugin_name": "com.sap.hana.di.textconfig"

},

"hdbtextdict": {

"plugin_name": "com.sap.hana.di.textdictionary"

},

"hdbtextinclude": {

"plugin_name": "com.sap.hana.di.textrule.include"

},

"hdbtextlexicon": {

"plugin_name": "com.sap.hana.di.textrule.lexicon"

},

"hdbtextminingconfig": {

"plugin_name": "com.sap.hana.di.textminingconfig"

},

"hdbtextrule": {

"plugin_name": "com.sap.hana.di.textrule"

},

"hdbtrigger": {

"plugin_name": "com.sap.hana.di.trigger"

},

"hdbview": {

"plugin_name": "com.sap.hana.di.view"

},

"hdbvirtualfunction": {

"plugin_name": "com.sap.hana.di.virtualfunction"

},

"hdbvirtualfunctionconfig": {

"plugin_name": "com.sap.hana.di.virtualfunction.config"

},

"hdbvirtualpackagehadoop": {

"plugin_name": "com.sap.hana.di.virtualpackage.hadoop"

},

"hdbvirtualpackagesparksql": {

"plugin_name": "com.sap.hana.di.virtualpackage.sparksql"

},

"hdbvirtualprocedure": {

"plugin_name": "com.sap.hana.di.virtualprocedure"

},

"hdbvirtualprocedureconfig": {

"plugin_name": "com.sap.hana.di.virtualprocedure.config"

},

"hdbvirtualtable": {

"plugin_name": "com.sap.hana.di.virtualtable"

},

"hdbvirtualtableconfig": {

"plugin_name": "com.sap.hana.di.virtualtable.config"

},

"jar": {

"plugin_name": "com.sap.hana.di.virtualfunctionpackage.hadoop"

},

"properties": {

"plugin_name": "com.sap.hana.di.tabledata.properties"

},

"tags": {

"plugin_name": "com.sap.hana.di.tabledata.properties"

},

"txt": {

"plugin_name": "com.sap.hana.di.copyonly"

}

}

}

Try to check if that helps you.
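To see at a glance how the two .hdiconfig files in this thread differ, a small script can compare them. The following is a minimal Python sketch, not part of any SAP tooling. The function name `diff_hdiconfig` and the abbreviated sample documents are made up for illustration, and the only assumption is that each file is JSON with a `file_suffixes` object, as in the files quoted above.

```python
import json


def diff_hdiconfig(a, b):
    """Summarize the differences between two parsed .hdiconfig documents."""
    suffixes_a = a.get("file_suffixes", {})
    suffixes_b = b.get("file_suffixes", {})
    shared = set(suffixes_a) & set(suffixes_b)
    return {
        "top_level_only_in_a": sorted(set(a) - set(b)),
        "top_level_only_in_b": sorted(set(b) - set(a)),
        "suffixes_only_in_a": sorted(set(suffixes_a) - set(suffixes_b)),
        "suffixes_only_in_b": sorted(set(suffixes_b) - set(suffixes_a)),
        "plugin_name_differs": sorted(
            s for s in shared
            if suffixes_a[s].get("plugin_name") != suffixes_b[s].get("plugin_name")
        ),
    }


# Abbreviated stand-ins for the two files quoted above (most suffixes omitted).
first = json.loads("""
{
  "minimum_feature_version": "1000",
  "file_suffixes": {
    "hdbcds": {"plugin_name": "com.sap.hana.di.cds"},
    "hdbtable": {"plugin_name": "com.sap.hana.di.table"}
  }
}
""")
second = json.loads("""
{
  "plugin_version": "2.0.30.0",
  "file_suffixes": {
    "hdbcds": {"plugin_name": "com.sap.hana.di.cds"},
    "hdbcollection": {"plugin_name": "com.sap.hana.di.collection"},
    "hdbtable": {"plugin_name": "com.sap.hana.di.table"}
  }
}
""")

report = diff_hdiconfig(first, second)
for key, values in report.items():
    print(key, values)
```

With the real files, each document would be loaded with `json.load` from disk instead of the inline strings.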

I can't tell what the issue is based on the logs. Can you share your xs-security config and mta.yaml?

Sure, I appreciate it.

xs-security.json:

{

"xsappname": "myproject",

"scopes": [ { "name": "$XSAPPNAME.view", "description": "View data" },

{ "name": "$XSAPPNAME.create", "description": "Create data"}

],

"tenant-mode": "dedicated",

"description": "Security profile of called application",

"role-templates": [

{

"name": "ClimatEx",

"description": "Role for viewing data",

"scope-references": [

"$XSAPPNAME.create","$XSAPPNAME.view"

]

}

]

}

.hdiconfig:

{

"plugin_version": "2.0.30.0",

"file_suffixes": {

"csv": {

"plugin_name": "com.sap.hana.di.tabledata.source"

},

"hdbafllangprocedure": {

"plugin_name": "com.sap.hana.di.afllangprocedure"

},

"hdbanalyticprivilege": {

"plugin_name": "com.sap.hana.di.analyticprivilege"

},

"hdbcalculationview": {

"plugin_name": "com.sap.hana.di.calculationview"

},

"hdbcds": {

"plugin_name": "com.sap.hana.di.cds"

},

"hdbcollection": {

"plugin_name": "com.sap.hana.di.collection"

},

"hdbconstraint": {

"plugin_name": "com.sap.hana.di.constraint"

},

"hdbdropcreatetable": {

"plugin_name": "com.sap.hana.di.dropcreatetable"

},

"hdbflowgraph": {

"plugin_name": "com.sap.hana.di.flowgraph"

},

"hdbfulltextindex": {

"plugin_name": "com.sap.hana.di.fulltextindex"

},

"hdbfunction": {

"plugin_name": "com.sap.hana.di.function"

},

"hdbgraphworkspace": {

"plugin_name": "com.sap.hana.di.graphworkspace"

},

"hdbhadoopmrjob": {

"plugin_name": "com.sap.hana.di.virtualfunctionpackage.hadoop"

},

"hdbindex": {

"plugin_name": "com.sap.hana.di.index"

},

"hdblibrary": {

"plugin_name": "com.sap.hana.di.library"

},

"hdbmigrationtable": {

"plugin_name": "com.sap.hana.di.table.migration"

},

"hdbprocedure": {

"plugin_name": "com.sap.hana.di.procedure"

},

"hdbprojectionview": {

"plugin_name": "com.sap.hana.di.projectionview"

},

"hdbprojectionviewconfig": {

"plugin_name": "com.sap.hana.di.projectionview.config"

},

"hdbreptask": {

"plugin_name": "com.sap.hana.di.reptask"

},

"hdbresultcache": {

"plugin_name": "com.sap.hana.di.resultcache"

},

"hdbrole": {

"plugin_name": "com.sap.hana.di.role"

},

"hdbroleconfig": {

"plugin_name": "com.sap.hana.di.role.config"

},

"hdbsearchruleset": {

"plugin_name": "com.sap.hana.di.searchruleset"

},

"hdbsequence": {

"plugin_name": "com.sap.hana.di.sequence"

},

"hdbstatistics": {

"plugin_name": "com.sap.hana.di.statistics"

},

"hdbstructuredprivilege": {

"plugin_name": "com.sap.hana.di.structuredprivilege"

},

"hdbsynonym": {

"plugin_name": "com.sap.hana.di.synonym"

},

"hdbsynonymconfig": {

"plugin_name": "com.sap.hana.di.synonym.config"

},

"hdbsystemversioning": {

"plugin_name": "com.sap.hana.di.systemversioning"

},

"hdbtable": {

"plugin_name": "com.sap.hana.di.table"

},

"hdbtabledata": {

"plugin_name": "com.sap.hana.di.tabledata"

},

"hdbtabletype": {

"plugin_name": "com.sap.hana.di.tabletype"

},

"hdbtextconfig": {

"plugin_name": "com.sap.hana.di.textconfig"

},

"hdbtextdict": {

"plugin_name": "com.sap.hana.di.textdictionary"

},

"hdbtextinclude": {

"plugin_name": "com.sap.hana.di.textrule.include"

},

"hdbtextlexicon": {

"plugin_name": "com.sap.hana.di.textrule.lexicon"

},

"hdbtextminingconfig": {

"plugin_name": "com.sap.hana.di.textminingconfig"

},

"hdbtextrule": {

"plugin_name": "com.sap.hana.di.textrule"

},

"hdbtrigger": {

"plugin_name": "com.sap.hana.di.trigger"

},

"hdbview": {

"plugin_name": "com.sap.hana.di.view"

},

"hdbvirtualfunction": {

"plugin_name": "com.sap.hana.di.virtualfunction"

},

"hdbvirtualfunctionconfig": {

"plugin_name": "com.sap.hana.di.virtualfunction.config"

},

"hdbvirtualpackagehadoop": {

"plugin_name": "com.sap.hana.di.virtualpackage.hadoop"

},

"hdbvirtualpackagesparksql": {

"plugin_name": "com.sap.hana.di.virtualpackage.sparksql"

},

"hdbvirtualprocedure": {

"plugin_name": "com.sap.hana.di.virtualprocedure"

},

"hdbvirtualprocedureconfig": {

"plugin_name": "com.sap.hana.di.virtualprocedure.config"

},

"hdbvirtualtable": {

"plugin_name": "com.sap.hana.di.virtualtable"

},

"hdbvirtualtableconfig": {

"plugin_name": "com.sap.hana.di.virtualtable.config"

},

"jar": {

"plugin_name": "com.sap.hana.di.virtualfunctionpackage.hadoop"

},

"properties": {

"plugin_name": "com.sap.hana.di.tabledata.properties"

},

"tags": {

"plugin_name": "com.sap.hana.di.tabledata.properties"

},

"txt": {

"plugin_name": "com.sap.hana.di.copyonly"

}

}

}

mta.yaml:

ID: myproject
_schema-version: '2.1'
version: 0.0.1
modules:
  - name: myhdbmod
    type: hdb
    path: myhdbmod
    requires:
      - name: hdi_myhdbmod
  - name: odatajs
    type: nodejs
    path: odatajs
    requires:
      - name: odatajs
      - name: hdi_myhdbmod
      - name: uaa_myproject
      - name: myhdbmod
    provides:
      - name: odatajs_api
        properties:
          url: '${default-url}'
  - name: MyClimatExUI
    type: html5
    path: MyClimatExUI
    parameters:
      disk-quota: 512M
      memory: 256M
    build-parameters:
      builder: grunt
    requires:
      - name: uaa_myproject
      - name: dest_myproject
resources:
  - name: hdi_myhdbmod
    properties:
      hdi-container-name: '${service-name}'
    type: com.sap.xs.hdi-container
  - name: uaa_myproject
    parameters:
      path: ./xs-security.json
      service-plan: application
      service: xsuaa
    type: org.cloudfoundry.managed-service
  - name: dest_myproject
    parameters:
      service-plan: lite
      service: destination
    type: org.cloudfoundry.managed-service

DummyData.hdbtabledata:

{

"format_version": 1,

"imports":

[ {

"target_table" : "myproject.myhdbmod::cdsArtifact.DummyData",

"source_data" : { "data_type" : "CSV", "file_name" : "myproject.myhdbmod::DummyData.csv", "has_header" : false },

"import_settings" : { "import_columns" : ["key ID","ISIN","COMPANY_NAME","SECTOR_LV1", "SECTOR_LV2", "WEIGHT_FLAG", "WEIGHTING",

"NAICS_NUM", "NAICS_NAME", "SCENARIO", "ADAPTIVE_CAP", "REGION", "TECHNOLOGY", "YEAR", "EBITDA", "EBIT",

"DEPRECIATION", "SALES", "VOLUME" ] }

}

]

}

cdsArtifact.hdbcds:

context cdsArtifact {

/*@@layout{"layoutInfo":{"x":-238,"y":-141.5}}*/

entity DummyData {

key ID : String(3) not null;

ISIN : String(20);

COMPANY_NAME : String(255);

SECTOR_LV1 : String(255);

SECTOR_LV2 : String(255);

WEIGHT_FLAG : String(255);

WEIGHTING : Decimal(23,19);

NAICS_NUM : String(255);

NAICS_NAME : String(255);

SCENARIO : String(255);

ADAPTIVE_CAP : String(255);

REGION : String(255);

TECHNOLOGY : String(255);

YEAR : String(255);

EBITDA : Decimal(23,19);

EBIT : Decimal(23,19);

DEPRECIATION : Decimal(23,19);

SALES : Decimal(23,19);

VOLUME : Decimal(23,19);

};

};

It looks like the issue is due to the namespace. You don't appear to be using a namespace in your CDS model, but you do have one in your hdbtabledata config file. Either drop the namespace everywhere or declare it in your CDS model.
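This kind of mismatch can be checked mechanically. The following Python sketch is only an illustration: the helper names are hypothetical, it assumes the namespace would be declared with a `namespace ...;` statement at the top of the .hdbcds file, and it ignores any other place a namespace might be configured in the module.

```python
import re


def declared_namespace(cds_source):
    """Return the namespace declared in an .hdbcds source, or None if absent."""
    match = re.search(r"^\s*namespace\s+([\w.]+)\s*;", cds_source, re.MULTILINE)
    return match.group(1) if match else None


def target_namespace(target_table):
    """Return the part of a target_table name before '::', or None if absent."""
    prefix, separator, _ = target_table.partition("::")
    return prefix if separator else None


def namespaces_agree(cds_source, target_table):
    """True when the CDS file and target_table use the same namespace (or none)."""
    return declared_namespace(cds_source) == target_namespace(target_table)


# Shortened version of the cdsArtifact.hdbcds posted in this thread.
cds = """
context cdsArtifact {
    entity DummyData {
        key ID : String(3) not null;
        ISIN : String(20);
    };
};
"""

# Namespaced target, no namespace in the CDS file: the two disagree.
print(namespaces_agree(cds, "myproject.myhdbmod::cdsArtifact.DummyData"))
# No namespace on either side: consistent.
print(namespaces_agree(cds, "cdsArtifact.DummyData"))
# Namespace declared in the CDS file and used in the target: consistent.
print(namespaces_agree("namespace myproject.myhdbmod;\n" + cds,
                       "myproject.myhdbmod::cdsArtifact.DummyData"))
```

The three calls correspond to the situation in the thread and to the two fixes suggested above.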

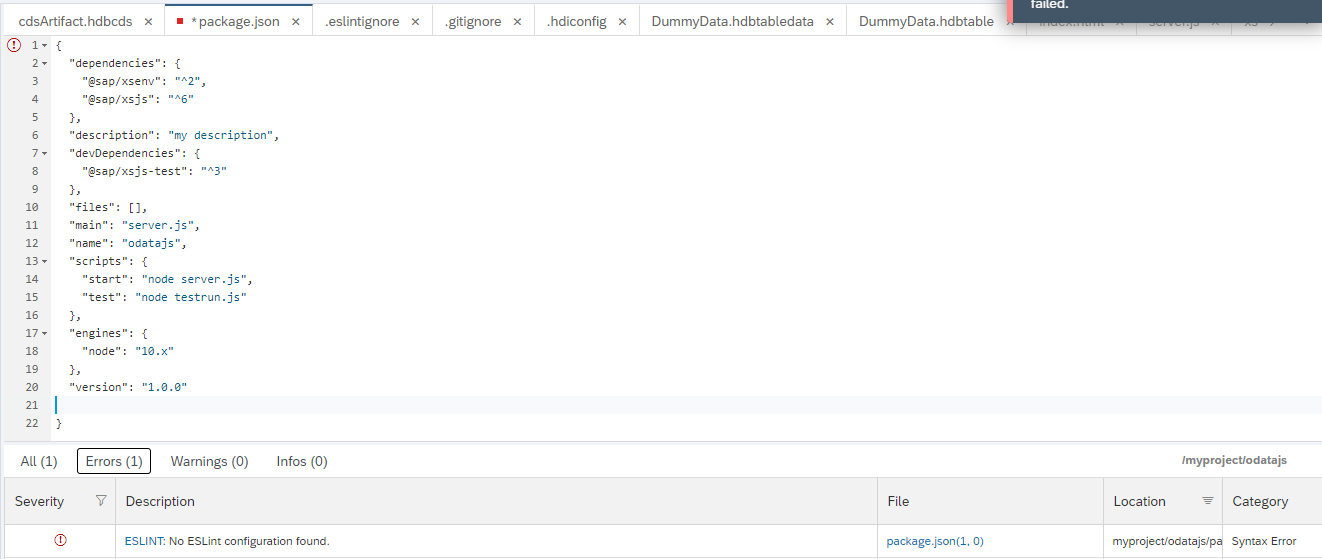

I deleted the namespace file and removed it from .hdbtabledata but then the following errors appeared:

and my DummyData.hdbtabledata looks like this now:

{

"format_version": 1,

"imports":

[ {

"target_table" : "cdsArtifact.DummyData",

"source_data" : {

"data_type" : "CSV",

"file_name" : "DummyData.csv",

"has_header" : true,

"type_config" : {

"delimiter" : ","

}

},

"import_settings" : {

"import_columns" : [

"key ID","ISIN","COMPANY_NAME","SECTOR_LV1", "SECTOR_LV2", "WEIGHT_FLAG", "WEIGHTING",

"NAICS_NUM", "NAICS_NAME", "SCENARIO", "ADAPTIVE_CAP", "REGION", "TECHNOLOGY", "YEAR", "EBITDA", "EBIT",

"DEPRECIATION", "SALES", "VOLUME" ],

"include_filter" : []

}

}

]

}
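One further thing that may be worth checking in the file above is whether every entry in `import_columns` matches a column of the target entity. The first entry, "key ID", includes the CDS keyword `key`, whereas the entity's element is named `ID`. Whether this is related to the errors is an assumption, since the error output is not included in the thread. The Python sketch below uses hypothetical helper names and a simple regular expression rather than a real CDS parser.

```python
import re


def entity_columns(cds_source, entity):
    """Extract element names from a flat CDS entity (no nested braces)."""
    body = re.search(r"entity\s+" + re.escape(entity) + r"\s*\{(.*?)\}",
                     cds_source, re.DOTALL)
    if body is None:
        raise ValueError("entity not found: " + entity)
    # An element looks like "[key] NAME : Type;", so capture the word before ':'.
    return re.findall(r"(?:\bkey\s+)?(\w+)\s*:", body.group(1))


def unknown_import_columns(import_columns, cds_source, entity):
    """Return the import_columns entries that are not element names of the entity."""
    known = set(entity_columns(cds_source, entity))
    return [column for column in import_columns if column not in known]


# Shortened version of the entity and the import_columns posted in this thread.
cds = """
context cdsArtifact {
    entity DummyData {
        key ID : String(3) not null;
        ISIN : String(20);
        COMPANY_NAME : String(255);
    };
};
"""
columns = ["key ID", "ISIN", "COMPANY_NAME"]

print(unknown_import_columns(columns, cds, "DummyData"))
```

Any name the script reports is an entry that the regular expression could not find among the entity's elements, which makes it a candidate for a closer look.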

I'm now highly suspicious that there's an issue with Web IDE right now (and that it's not because of your code). Your best bet is to try a different IDE. Do you have a setup using Visual Studio Code? Another option is SAP Business Application Studio (FYI: SAP Web IDE is being replaced by this new tool).

To me, it looks like you are in a dead-end situation. I would use another IDE to build and deploy if I were you.

You can follow this tutorial to get started with SAP BAS:

https://developers.sap.com/tutorials/appstudio-onboarding.html

But note that if you continue with the succeeding tutorials, they tend to teach the newer programming model, which you are not using yet. So I suggest you stop following the tutorials once you have set up your own SAP BAS.

The next step is to import your current project into SAP BAS. You can do this if you have saved your project in a central Git repository: push your current project from SAP Web IDE to your central Git repository (I like to use GitHub), then switch to SAP BAS and clone the repository there.

Lastly, I have a blog post that provides details on how to deploy an MTA project using the terminal. I used VS Code, but you can do the same in SAP BAS. Follow the steps starting from step 4 of "Deploy the application to SCP Cloud Foundry":

https://blogs.sap.com/2020/05/27/cap-consume-external-service-part-2/

If this does help you, kindly mark this answer as "Accepted" 🙂

Answers (0)
