- SAP Community

- Products and Technology

- Technology

- Technology Q&A

- Why does select statement taking too long when pri...

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Why does select statement taking too long when primary key is present?

- Subscribe to RSS Feed

- Mark Question as New

- Mark Question as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

on 02-06-2020 3:33 PM

Hi everyone!

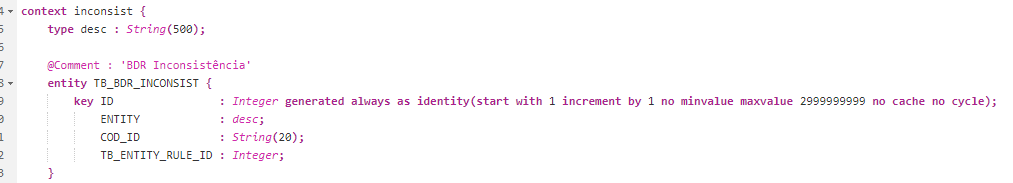

I can not figure out why my sql statement is taking too long when the primary key field is present.

This slow is reflecting in others artifacts such as my calculation views. Perhaps there is misunderstanding on my part about how to use/modeling this artificat. I do appreciate if you could to explain.

- SAP Managed Tags:

- SAP HANA,

- SAP Web IDE

Accepted Solutions (1)

Accepted Solutions (1)

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

——- Updated answer (after actually testing my ideas)

TL;DR

In this case the difference in runtime comes from the unloading and reloading of the very large data structure that makes up the primary key column.

Because the primary key column has only unique values, it cannot be well compressed and ends up being very large compared to the other columns. HANA’s memory management tries to avoid out-of-memory situations by freeing up memory early and removes the big primary key column data from memory right after using it.

The next time a query needs the data it has to be reloaded into memory - that’s what’s taking a long time (few seconds in this case). Changing the “unloading” behaviour to keep the data in memory makes the query with primary key as fast as the one without.

A more verbose version of this can be found here: https://lbreddemann.org/one-in-a-million/

——-

Ok, with just the query text and the effect you observed there is not much to go on and analyze. I would recommend using EXPLAIN PLAN and PLANVIZ as usual to get some more insights.

However, I have a suspicion here:

Could it be that the three other columns (not the ID column) contain quite a lot of repeating data?

If so, then it is not all that surprising to see the difference in runtime.

See, when HANA returns records it tries to work with the internal representation of actual values (value IDs) as long as possible. That is because in many cases, these value IDs are much smaller and allow processing with SIMD instructions easily.

Only when the actual value of a record field is absolutely required, e.g. when it should be handed to the client, it is filled in. That process is called "materialization" and HANA tries to employ a "late materialization" approach.

So far, so good.

Now, you run your query with columns that each have say 100.000 different values.

That's 300.000 different values HANA has to keep in reach to materialize all result records. Too easy, that's quick.

Next, you run the same query but include the ID column, which by definition has a different value for every single entry. Here HANA has to keep 300.000 + 88Mio (or whatever yor table count was) values ready to materialize the records.

We can assume that the lookup of actual values via the value ID is done by a dictionary lookup (i.e. via HASH function -> O (1)), but looking up 88Mio records is still two orders of magnitude more effort than 300K.

On top of that is, of course, the additional memory that is required to keep the 88 Mio uncompressible values of the primary key.

To be clear here, the important bit is that the primary key contains only unique values. This effect would show with any field that only contains unique values if the table is large.

At this point, you might say: "But I only take 1000 records".

That's true, but the definition of TOP and LIMIT requires that these options are applied to the final result set which obviously has to be completely computed before.

So, what can you do here?

Reduce the number of records that are processed. Put in a filter condition that makes sense for your use case.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

lbreddemann, that is a good argument you bring up here. So assuming that the finding of the TOP 1000 records takes the same time for both approaches, but that the difference of 4ms to 6921ms could be more likely explained in the fact, that it retrieves and returns the values for columns with more identical values faster than values for columns with truely unique values (since HANA has column based storage with compression of column values).

avidotto, you might want to give that assumption a shot and analyse more thoroughly how many distinct values there are in your other selected columns versus the unique key, and also try to find a column in your table that has the 'most' distinct values, besides the unique key. The difference in performance is too high for me that I would want to believe in that assumption, but after all you have 'close to' 100 million records, that is in general a lot for a database to handle.

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Yes, that's pretty much what I wrote, except for that HANA uses a column store.

The materialization of records is done per column.

A quick reproduction on an HXE 2 SP04 with 95Mio records and only 3.500 and 12.000 different values in the non-key columns confirmed my suspicion.

The majority of time is spent during the materialization.

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Hi Lars, thank you so much.

I have a bunch of similar values in that dataset, which really do explains the performance diff. I got the point around the resultset materialization, very meaningful.

Thank you all for your time helping me with this understanding.

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Sorry, but I have to amend this answer again.

Thinking through this it became obvious, that the dictionary lookup per se cannot be the deciding factor for the increased runtime.

As written in my first correction (not sure where my head was when I first wrote the answer), the per-column value dictionary is searched through by a binary search. Binary search has a worst-case runtime of O(log n). That means, that even two orders of magnitude more values would only require 2 more steps.

So, no, this cannot possibly explain the ca. 1750x runtime increase!

After some more test'n'try I found that the primary key column (AAA) data structures are huge compared with the other columns.

AAA weighed in at 1,031 GB with 725 MB just for the dictionary. The other columns combined came up to 330 MB for everything.

So, what you may think - and so did I. My HANA Express VM runs on 30 GB RAM, that's plenty for this table.

Nevertheless, I found that column AAA got unloaded after every single time I accessed it.

I tried to avoid this by setting UNLOAD PRIORITY for the table to 0, but nothing changed.

Checking the global.ini parameter [memoryobjects] - unload_upper_bound showed that it was set to 800M. So, whenever the memory usage would reach or surpass 800M, HANA would start unloading objects and that included the AAA/primary key column.

After bumping this parameter up sufficiently (effectively disabling this limit on my machine) the table stayed in memory.

And now, the runtime of the query is much closer to the one without the primary key column:

Statement 'select top 1000 aaa , aai , abi , _validfrom , _validto from io_test'

successfully executed in 6 ms 745 µs(server processing time: 4 ms 632 µs)TL;DR

Yes, the runtime was different because of the many unique values, but only because the size of the column was so large HANA unloaded it right after using it.

When the column is in memory, both queries perform nearly identical.

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Kudos to you lbreddemann, this Story and your Solution is definitely worth another blog entry of yours 😉

Love it.

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Thanks a lot @Michael Piesche!

For the interested, the blog post can be found at https://lbreddemann.org/one-in-a-million/.

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Great analysis Michael P and Lars B. I enjoyed reading the detailed explanation which I only came across by accident from LinkedIn. Your database blog about Inside Track Melbourne 2020 caught my eye. Then I decided to read some of your other blogs and right there in Feb 2020 was this detailed explanation. I am still learning about HANA and did not even think about the loading/unloading possibility.

Thanks again to both of you!

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Answers (2)

Answers (2)

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Have you compared this behaviour against other large tables? After all, you have almost 100 Million entries! Did you try out the limit command at the end instead of the top after the select?

Did you check the records that are retrieved by each of the two calls? Because the only thing I could think of, is that the TOP 1000 with the key, gives you the very first 1000 entries with the lowest ids, whereas the TOP 1000 without the key, gives you maybe 'random' 1000 entries that are easier to fetch than those with primary key. I couldnt find any info though about this assumption with TOP n and no key selected.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Two thoughts, one is whether the table is indexed. Possibly it should be. How many fields are in the table and is it organized by rows or columns (should be columnar for best use of HANA'S capabilities). Second is try adding an order by ID clause when running the query.

As mentioned above, there are almost 100 million records which is a bit excessive. In light of that, what is your database admin (DBA) doing for archiving records. Despite it being HANA, there are still optimization tasks which should be performed to keep the database running efficiently.

Cheers, Mike

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Hi Mike, I really do appreciate your thougths.

For sure there is a variety of tasks to be performed in order to optimize HANA and we'll keep that in mind.

The question is why removing the primary key from the requested resultset the query is performed quite fast. What should I do to get a better performance querying such that statement.

Edit.: I tested with order by as well.

- Mark as New

- Bookmark

- Subscribe

- Subscribe to RSS Feed

- Report Inappropriate Content

Hi Alex,

I suspect that including the primary key causes all the records to be analyzed before the selection takes place (which is not really very efficient). Possibly it could be improved if the table were indexed, but I am not that familiar with indices and columnar tables (if such is the case with this table and with HANA). Whereas without the primary key, it only grabs the first 1000 records it receives. I would think that the internal workings might need to be reviewed with someone who knows the guts of how HANA works before a correct answer could be provided. If you could provide the table script, it might provide some more insight into how it is behaving.

Regards, Mike

- Data Flows - The Python Script Operator and why you should avoid it in Technology Blogs by Members

- Workload Analysis for HANA Platform Series - 3. Identify the Memory Consumption in Technology Blogs by SAP

- How to properly merge N:N Dimensions from 2 queries from different Universes? in Technology Q&A

- Exploring Integration Options in SAP Datasphere with the focus on using SAP extractors in Technology Blogs by SAP

- What you need to know about Finance in SAP S/4HANA Cloud, Public Edition in Technology Blogs by SAP

| User | Count |

|---|---|

| 93 | |

| 10 | |

| 10 | |

| 9 | |

| 9 | |

| 7 | |

| 6 | |

| 5 | |

| 5 | |

| 4 |

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.